Gong Chen

TKG-Thinker: Towards Dynamic Reasoning over Temporal Knowledge Graphs via Agentic Reinforcement Learning

Feb 05, 2026

Abstract:Temporal knowledge graph question answering (TKGQA) aims to answer time-sensitive questions by leveraging temporal knowledge bases. While Large Language Models (LLMs) demonstrate significant potential in TKGQA, current prompting strategies constrain their efficacy in two primary ways. First, they are prone to reasoning hallucinations under complex temporal constraints. Second, static prompting limits model autonomy and generalization, as it lacks optimization through dynamic interaction with temporal knowledge graph (TKG) environments. To address these limitations, we propose TKG-Thinker, a novel agent equipped with autonomous planning and adaptive retrieval capabilities for reasoning over TKGs. Specifically, TKG-Thinker performs in-depth temporal reasoning through dynamic multi-turn interactions with TKGs via a dual-training strategy. We first apply Supervised Fine-Tuning (SFT) with chain-of-thought data to instill core planning capabilities, followed by a Reinforcement Learning (RL) stage that leverages multi-dimensional rewards to refine reasoning policies under intricate temporal constraints. Experimental results on benchmark datasets with three open-source LLMs show that TKG-Thinker achieves state-of-the-art performance and exhibits strong generalization across complex TKGQA settings.
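To make the task setting concrete, here is a minimal sketch of time-constrained retrieval over a temporal knowledge graph stored as (subject, relation, object, timestamp) facts. It is not TKG-Thinker's agent or training procedure. The `Fact` class, the `retrieve` and `first_after` helpers, and the toy data are all hypothetical names invented for illustration.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Fact:
    """One temporal fact: (subject, relation, object, timestamp)."""
    subject: str
    relation: str
    obj: str
    when: date


# Hypothetical toy TKG; entities and dates are invented for illustration.
TKG = [
    Fact("Alice", "visited", "Paris", date(2019, 5, 1)),
    Fact("Alice", "visited", "Rome", date(2020, 3, 9)),
    Fact("Alice", "visited", "Oslo", date(2021, 7, 4)),
    Fact("Bob", "visited", "Rome", date(2020, 1, 2)),
]


def retrieve(subject, relation, before=None, after=None):
    """Return matching facts strictly inside the time window, oldest first."""
    hits = [
        f for f in TKG
        if f.subject == subject and f.relation == relation
        and (before is None or f.when < before)
        and (after is None or f.when > after)
    ]
    return sorted(hits, key=lambda f: f.when)


def first_after(subject, relation, anchor_obj):
    """Two-step query: locate the anchor fact's time, then take the next fact."""
    anchors = [f for f in retrieve(subject, relation) if f.obj == anchor_obj]
    if not anchors:
        return None
    later = retrieve(subject, relation, after=anchors[0].when)
    return later[0].obj if later else None


# "Where did Alice visit first after Rome?"
answer = first_after("Alice", "visited", "Rome")
print(answer)
```

The two-step lookup in `first_after` mirrors, in a very reduced form, why such questions call for multi-turn retrieval: the time constraint is only known after the anchor fact has been fetched.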

CoRA: A Collaborative Robust Architecture with Hybrid Fusion for Efficient Perception

Dec 15, 2025

Abstract:Collaborative perception has garnered significant attention as a crucial technology to overcome the perceptual limitations of single-agent systems. Many state-of-the-art (SOTA) methods have achieved communication efficiency and high performance via intermediate fusion. However, they share a critical vulnerability: their performance degrades under adverse communication conditions due to the misalignment induced by data transmission, which severely hampers their practical deployment. To bridge this gap, we re-examine different fusion paradigms and discover that the strengths of intermediate and late fusion are not a trade-off, but a complementary pairing. Based on this key insight, we propose CoRA, a novel collaborative robust architecture with a hybrid approach to decouple performance from robustness with low communication. It is composed of two components: a feature-level fusion branch and an object-level correction branch. Its first branch selects critical features and fuses them efficiently to ensure both performance and scalability. The second branch leverages semantic relevance to correct spatial displacements, guaranteeing resilience against pose errors. Experiments demonstrate the superiority of CoRA. Under extreme scenarios, CoRA improves upon its baseline performance by approximately 19% in AP@0.7 with more than 5x less communication volume, which makes it a promising solution for robust collaborative perception.

CompLex: Music Theory Lexicon Constructed by Autonomous Agents for Automatic Music Generation

Aug 27, 2025

Abstract:Generative artificial intelligence in music has made significant strides, yet it still falls short of the substantial achievements seen in natural language processing, primarily due to the limited availability of music data. Knowledge-informed approaches have been shown to enhance the performance of music generation models, even when only a few pieces of musical knowledge are integrated. This paper seeks to leverage comprehensive music theory in AI-driven music generation tasks, such as algorithmic composition and style transfer, which traditionally require significant manual effort with existing techniques. We introduce a novel automatic music lexicon construction model that generates a lexicon, named CompLex, comprising 37,432 items derived from just 9 manually input category keywords and 5 sentence prompt templates. A new multi-agent algorithm is proposed to automatically detect and mitigate hallucinations. CompLex demonstrates impressive performance improvements across three state-of-the-art text-to-music generation models, encompassing both symbolic and audio-based methods. Furthermore, we evaluate CompLex in terms of completeness, accuracy, non-redundancy, and executability, confirming that it possesses the key characteristics of an effective lexicon.

RollingQ: Reviving the Cooperation Dynamics in Multimodal Transformer

Jun 13, 2025

Abstract:Multimodal learning faces challenges in effectively fusing information from diverse modalities, especially when modality quality varies across samples. Dynamic fusion strategies, such as the attention mechanism in Transformers, aim to address this challenge by adaptively emphasizing modalities based on the characteristics of the input data. However, through a number of carefully designed experiments, we surprisingly observed that the dynamic adaptability of widely used self-attention models diminishes. The model tends to prefer one modality regardless of data characteristics. This bias triggers a self-reinforcing cycle that progressively overemphasizes the favored modality, widening the distribution gap in attention keys across modalities and deactivating the attention mechanism's dynamic properties. To revive adaptability, we propose a simple yet effective method, Rolling Query (RollingQ), which balances attention allocation by rotating the query to break the self-reinforcing cycle and mitigate the key distribution gap. Extensive experiments on various multimodal scenarios validate the effectiveness of RollingQ and show that restoring cooperation dynamics is pivotal for enhancing the broader capabilities of widely deployed multimodal Transformers. The source code is available at https://github.com/GeWu-Lab/RollingQ_ICML2025.
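The intuition that rotating a query can rebalance attention across modalities can be shown with a toy 2D example. This is only an illustration under strong assumptions, not the RollingQ algorithm. The two fixed unit-norm keys, the `scale` factor, and the choice to rotate onto the bisector are all hypothetical; the abstract does not specify how the rotation is chosen.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query, keys, scale=4.0):
    """Softmax over scaled dot products between one query and each key."""
    scores = [scale * sum(q * k for q, k in zip(query, key)) for key in keys]
    return softmax(scores)


def rotate(vec, angle):
    """Rotate a 2D vector counter-clockwise by `angle` radians."""
    x, y = vec
    c, s = math.cos(angle), math.sin(angle)
    return (c * x - s * y, s * x + c * y)


# Two unit-norm keys standing in for two modalities, 90 degrees apart.
key_a = (1.0, 0.0)
key_b = (0.0, 1.0)

# A query aligned with modality A puts almost all attention on A.
biased_query = (1.0, 0.0)
biased = attention_weights(biased_query, [key_a, key_b])

# Rotating the query onto the bisector of the two keys equalizes the scores.
balanced_query = rotate(biased_query, math.pi / 4)
balanced = attention_weights(balanced_query, [key_a, key_b])

print(biased, balanced)
```

In this toy setup the biased query gives modality A the large majority of the attention mass, while the rotated query splits it evenly, because its dot product with both keys is the same.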

LENVIZ: A High-Resolution Low-Exposure Night Vision Benchmark Dataset

Mar 25, 2025

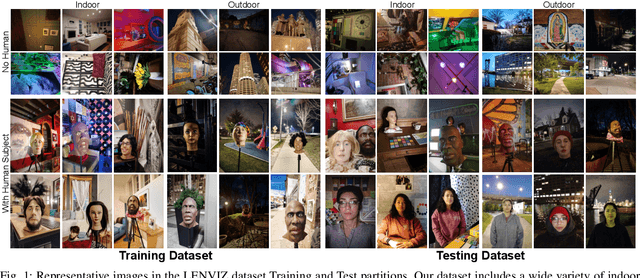

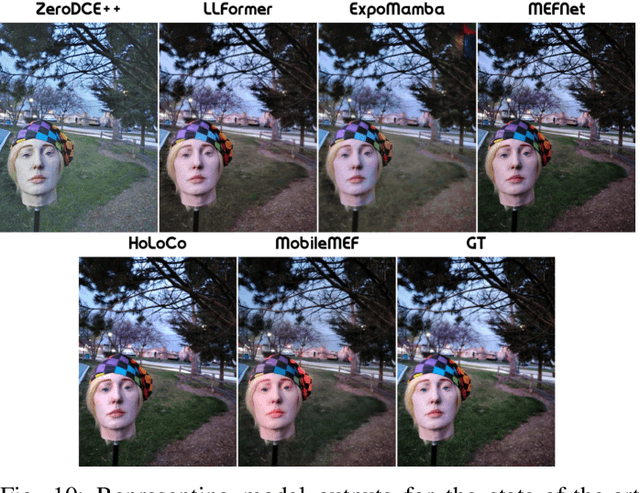

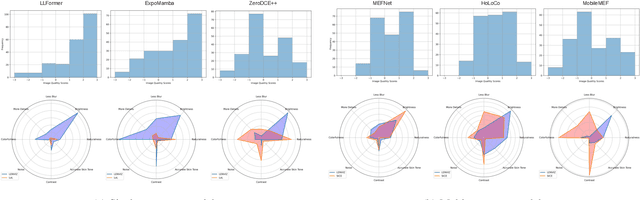

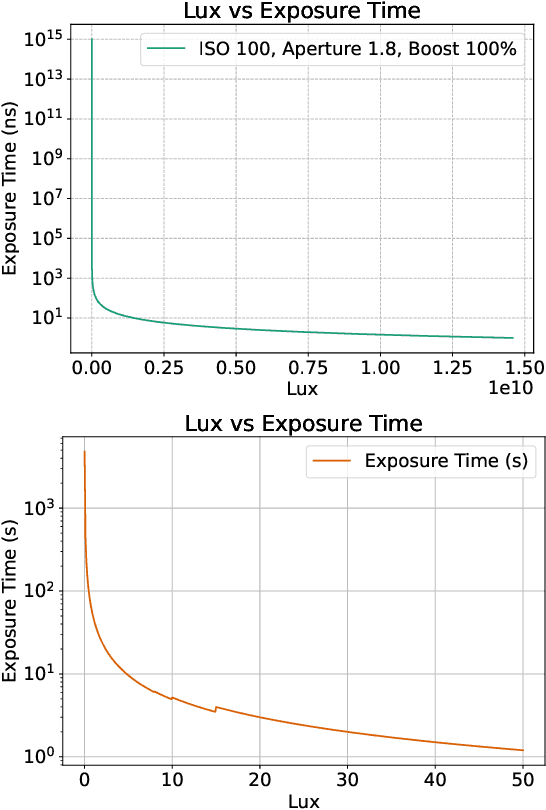

Abstract:Low-light image enhancement is crucial for a myriad of applications, from night vision and surveillance to autonomous driving. However, due to the inherent limitations that come hand in hand with capturing images in low-illumination environments, the task of enhancing such scenes still presents a formidable challenge. To advance research in this field, we introduce our Low Exposure Night Vision (LENVIZ) Dataset, a comprehensive multi-exposure benchmark dataset for low-light image enhancement comprising over 230K frames showcasing 24K real-world indoor and outdoor scenes, with and without humans. Captured using 3 different camera sensors, LENVIZ offers a wide range of lighting conditions, noise levels, and scene complexities, making it the largest publicly available benchmark in the field with up to 4K resolution. LENVIZ includes high-quality human-generated ground truth, for which each multi-exposure low-light scene has been meticulously curated and edited by expert photographers to ensure optimal image quality. Furthermore, we conduct a comprehensive analysis of current state-of-the-art low-light image enhancement techniques on our dataset and highlight potential areas of improvement.

Divide and Conquer: Heterogeneous Noise Integration for Diffusion-based Adversarial Purification

Mar 03, 2025

Abstract:Existing diffusion-based purification methods aim to disrupt adversarial perturbations by introducing a certain amount of noise through a forward diffusion process, followed by a reverse process to recover clean examples. However, this approach is fundamentally flawed: the uniform operation of the forward process across all pixels compromises normal pixels while attempting to combat adversarial perturbations, resulting in the target model producing incorrect predictions. Simply relying on low-intensity noise is insufficient for effective defense. To address this critical issue, we implement a heterogeneous purification strategy grounded in the interpretability of neural networks. Our method decisively applies higher-intensity noise to specific pixels that the target model focuses on, while the remaining pixels are subjected to only low-intensity noise. This requirement motivates us to redesign the sampling process of the diffusion model, allowing for the effective removal of varying noise levels. Furthermore, so that it can be evaluated against strong adaptive attacks, our method sharply reduces time cost and memory usage through single-step resampling. The empirical evidence from extensive experiments across three datasets demonstrates that our method outperforms most current adversarial training and purification techniques by a substantial margin.
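The idea of applying different noise intensities to different pixels can be sketched with the standard DDPM forward step, x_t = sqrt(abar) * x_0 + sqrt(1 - abar) * eps, where abar is chosen per pixel. This is a minimal sketch, not the paper's method: the function name, the two `abar` values, and the hand-written boolean `focus_mask` are assumptions, and the redesigned reverse sampling process is not shown.

```python
import math
import random


def heterogeneous_noise(image, focus_mask, abar_focus=0.5, abar_rest=0.95, seed=0):
    """Apply a DDPM-style forward step with a per-pixel noise level.

    image: 2D list of floats. focus_mask: 2D list of bools, same shape.
    Pixels where focus_mask is True use the smaller alpha-bar (more noise).
    Both alpha-bar values are hypothetical and chosen only for illustration.
    """
    rng = random.Random(seed)
    noised = []
    for row, mask_row in zip(image, focus_mask):
        out_row = []
        for x0, focused in zip(row, mask_row):
            abar = abar_focus if focused else abar_rest
            eps = rng.gauss(0.0, 1.0)  # standard Gaussian noise sample
            out_row.append(math.sqrt(abar) * x0 + math.sqrt(1.0 - abar) * eps)
        noised.append(out_row)
    return noised


# Toy 2x2 "image"; the mask marks the pixels the target model is assumed to focus on.
image = [[0.0, 0.0], [0.0, 0.0]]
mask = [[True, False], [False, True]]
noised = heterogeneous_noise(image, mask)
print(noised)
```

In practice the mask would come from an interpretability method applied to the target model; here it is simply written by hand.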

From References to Insights: Collaborative Knowledge Minigraph Agents for Automating Scholarly Literature Review

Nov 09, 2024

Abstract:Literature reviews play a crucial role in scientific research for understanding the current state of research, identifying gaps, and guiding future studies on specific topics. However, the process of conducting a comprehensive literature review is still time-consuming. This paper proposes a novel framework, collaborative knowledge minigraph agents (CKMAs), to automate scholarly literature reviews. A novel prompt-based algorithm, the knowledge minigraph construction agent (KMCA), is designed to identify relationships between information pieces from academic literature and automatically construct knowledge minigraphs. By leveraging the capabilities of large language models on constructed knowledge minigraphs, the multiple path summarization agent (MPSA) efficiently organizes information pieces and relationships from different viewpoints to generate literature review paragraphs. We evaluate CKMAs on three benchmark datasets. Experimental results demonstrate that the proposed techniques generate informative, complete, consistent, and insightful summaries for different research problems, promoting the use of LLMs in more professional fields.

Robot Guided Evacuation with Viewpoint Constraints

Sep 28, 2024

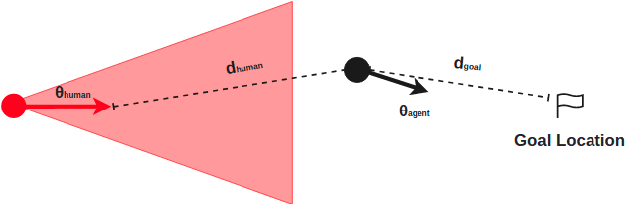

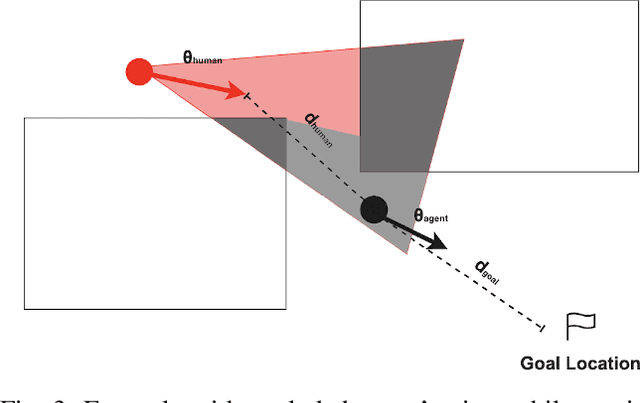

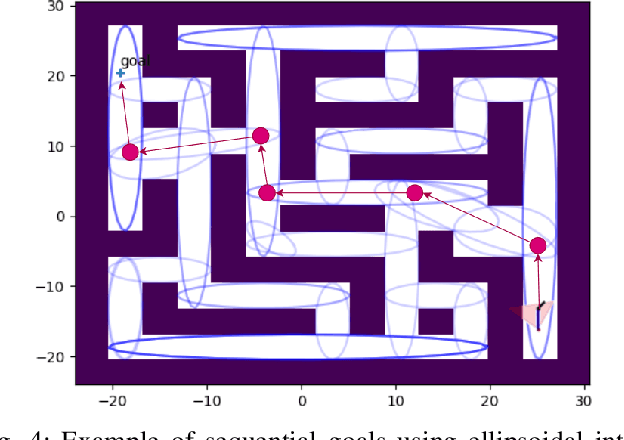

Abstract:We present a viewpoint-based non-linear Model Predictive Control (MPC) for evacuation guiding robots. Specifically, the proposed MPC algorithm enables evacuation guiding robots to track and guide cooperative human targets in emergency scenarios. Our algorithm accounts for the environment layout as well as the distance between the robot and the human target and the distance to the goal location. A key challenge for an evacuation guiding robot is the trade-off between its planned motion for leading the target toward a goal position and staying in the target's viewpoint while maintaining line-of-sight for guiding. We illustrate the effectiveness of our proposed evacuation guiding algorithm in both simulated and real-world environments with an Unmanned Aerial Vehicle (UAV) guiding a human. Our results suggest that using contextual information from the environment for motion planning increases the visibility of the guiding UAV to the human while achieving faster total evacuation time.

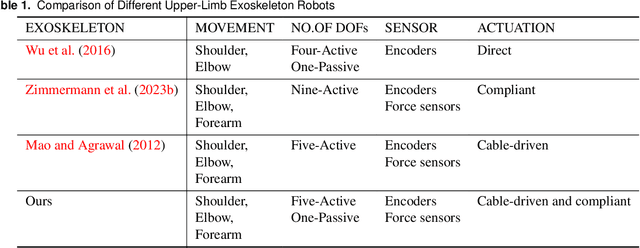

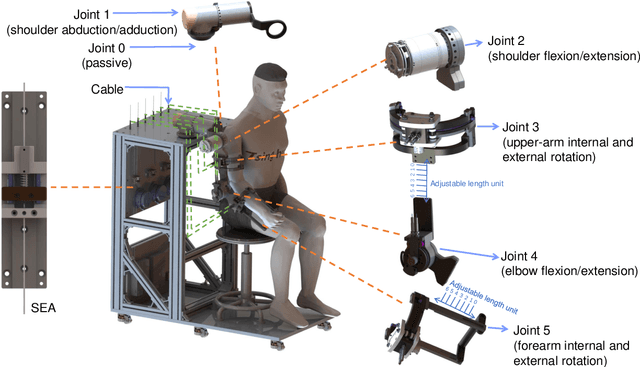

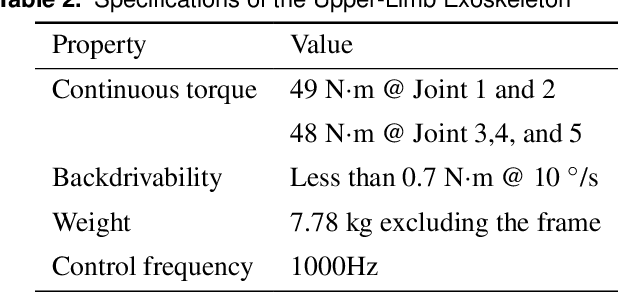

Upper-Limb Rehabilitation with a Dual-Mode Individualized Exoskeleton Robot: A Generative-Model-Based Solution

Sep 05, 2024

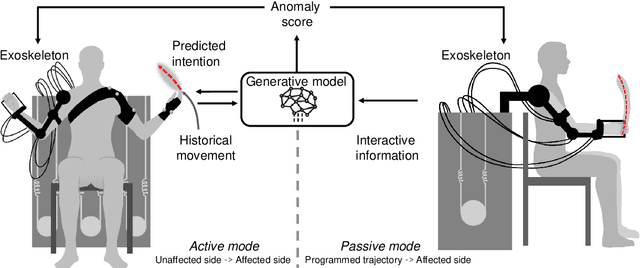

Abstract:Several upper-limb exoskeleton robots have been developed for stroke rehabilitation, but their rather low level of individualized assistance typically limits their effectiveness and practicability. Individualized assistance involves an upper-limb exoskeleton robot continuously assessing feedback from a stroke patient and then meticulously adjusting interaction forces to suit specific conditions and online changes. This paper describes the development of a new upper-limb exoskeleton robot with a novel online generative capability that allows it to provide individualized assistance to support the rehabilitation training of stroke patients. Specifically, the upper-limb exoskeleton robot exploits generative models to customize a fine, well-fitted trajectory for each patient, as medical conditions, responses, and comfort feedback during training generally differ between patients. This generative capability is integrated into the two working modes of the upper-limb exoskeleton robot: an active mirroring mode for patients who retain motor abilities on one side of the body and a passive following mode for patients who lack motor ability on both sides of the body. The performance of the upper-limb exoskeleton robot was illustrated in experiments involving healthy subjects and stroke patients.

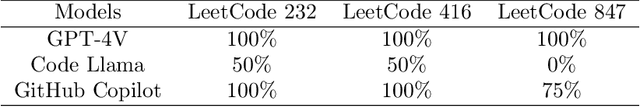

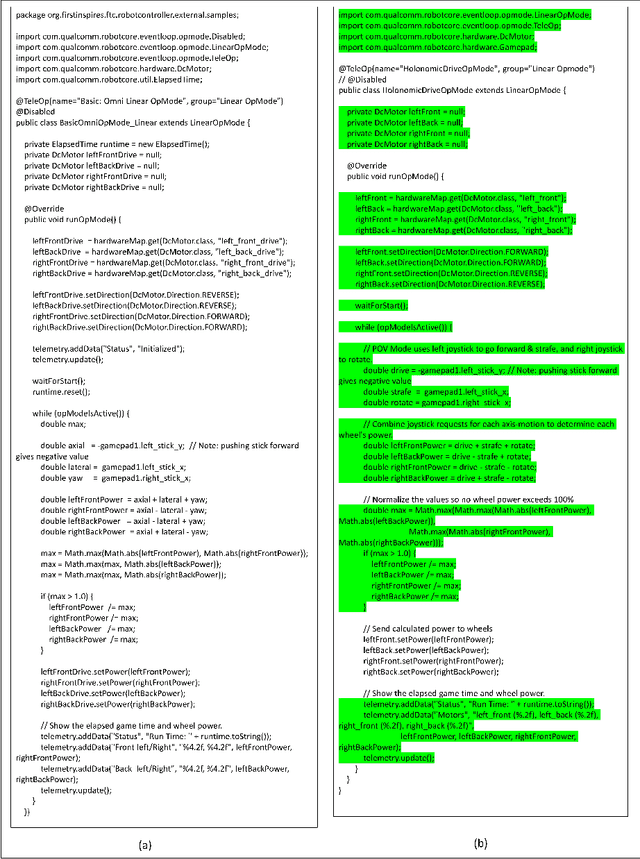

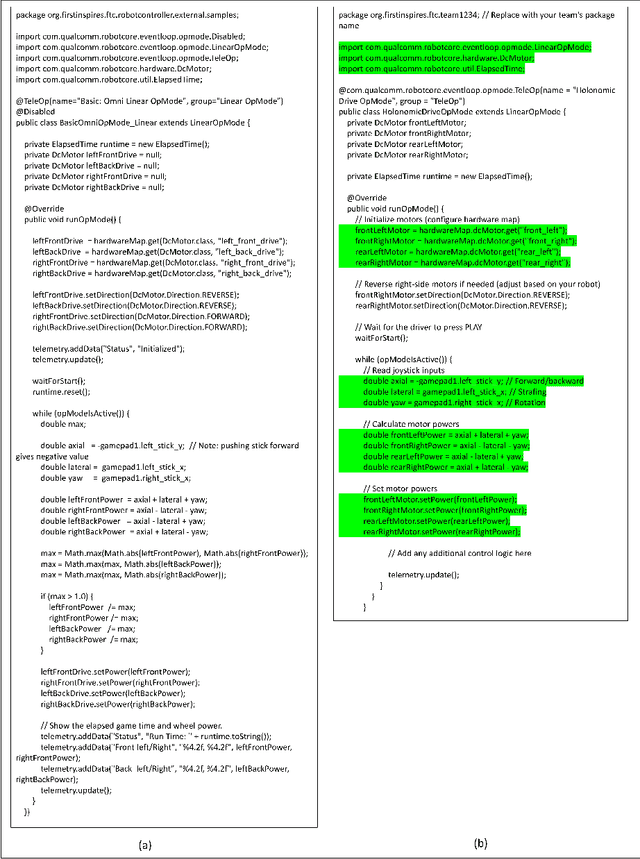

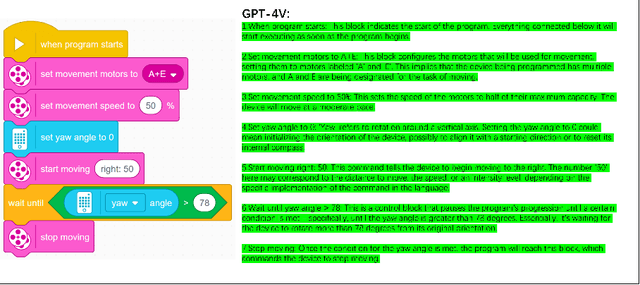

LLMs for Coding and Robotics Education

Feb 09, 2024

Abstract:Large language models and multimodal large language models have revolutionized artificial intelligence recently. An increasing number of regions are now embracing these advanced technologies. Within this context, robot coding education is garnering increasing attention. To teach young children how to code and compete in robot challenges, large language models are being utilized for robot code explanation, generation, and modification. In this paper, we highlight an important trend in robot coding education. We test several mainstream large language models on both traditional coding tasks and the more challenging task of robot code generation, which includes block diagrams. Our results show that GPT-4V outperforms other models in all of our tests but struggles with generating block diagram images.
