Di Lin

Tianjin University

DrivePI: Spatial-aware 4D MLLM for Unified Autonomous Driving Understanding, Perception, Prediction and Planning

Dec 14, 2025

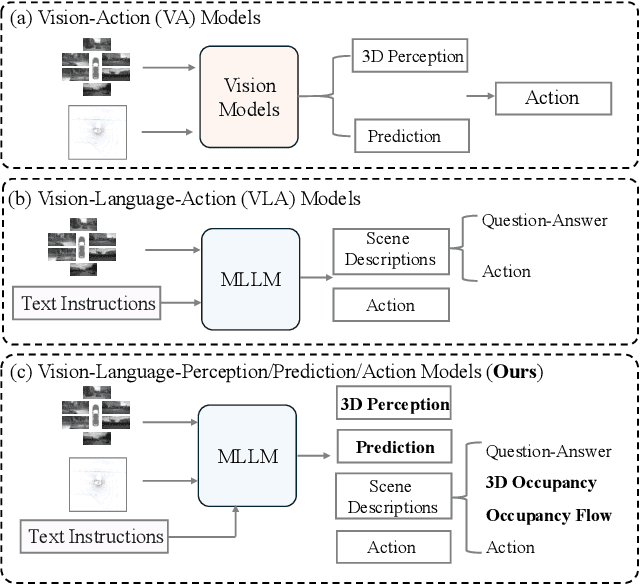

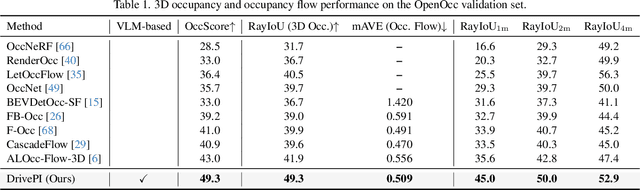

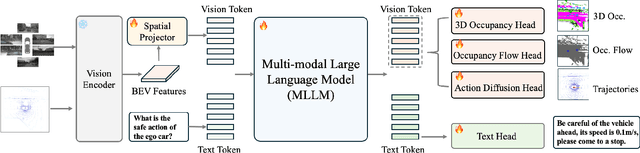

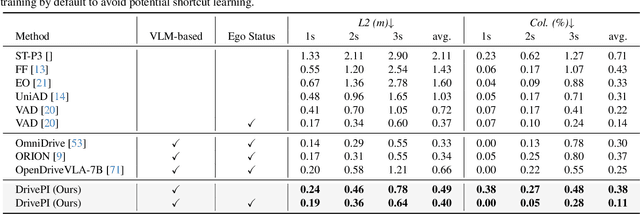

Abstract: Although multi-modal large language models (MLLMs) have shown strong capabilities across diverse domains, their application in generating fine-grained 3D perception and prediction outputs in autonomous driving remains underexplored. In this paper, we propose DrivePI, a novel spatial-aware 4D MLLM that serves as a unified Vision-Language-Action (VLA) framework that is also compatible with vision-action (VA) models. Our method jointly performs spatial understanding, 3D perception (i.e., 3D occupancy), prediction (i.e., occupancy flow), and planning (i.e., action outputs) in parallel through end-to-end optimization. To obtain both precise geometric information and rich visual appearance, our approach integrates point clouds, multi-view images, and language instructions within a unified MLLM architecture. We further develop a data engine to generate text-occupancy and text-flow QA pairs for 4D spatial understanding. Remarkably, with only a 0.5B Qwen2.5 model as MLLM backbone, DrivePI as a single unified model matches or exceeds both existing VLA models and specialized VA models. Specifically, compared to VLA models, DrivePI outperforms OpenDriveVLA-7B by 2.5% mean accuracy on nuScenes-QA and reduces collision rate by 70% over ORION (from 0.37% to 0.11%) on nuScenes. Against specialized VA models, DrivePI surpasses FB-OCC by 10.3 RayIoU for 3D occupancy on OpenOcc, reduces the mAVE from 0.591 to 0.509 for occupancy flow on OpenOcc, and achieves 32% lower L2 error than VAD (from 0.72m to 0.49m) for planning on nuScenes. Code will be available at https://github.com/happinesslz/DrivePI.

CorrectAD: A Self-Correcting Agentic System to Improve End-to-end Planning in Autonomous Driving

Nov 17, 2025

Abstract: End-to-end planning methods are the de facto standard of the current autonomous driving system, while the robustness of the data-driven approaches suffers due to the notorious long-tail problem (i.e., rare but safety-critical failure cases). In this work, we explore whether recent diffusion-based video generation methods (a.k.a. world models), paired with structured 3D layouts, can enable a fully automated pipeline to self-correct such failure cases. We first introduce an agent to simulate the role of product manager, dubbed PM-Agent, which formulates data requirements to collect data similar to the failure cases. Then, we use a generative model that can simulate both data collection and annotation. However, existing generative models struggle to generate high-fidelity data conditioned on 3D layouts. To address this, we propose DriveSora, which can generate spatiotemporally consistent videos aligned with the 3D annotations requested by PM-Agent. We integrate these components into our self-correcting agentic system, CorrectAD. Importantly, our pipeline is model-agnostic and can be applied to improve any end-to-end planner. Evaluated on both nuScenes and a more challenging in-house dataset across multiple end-to-end planners, CorrectAD corrects 62.5% and 49.8% of failure cases, reducing collision rates by 39% and 27%, respectively.

Zero-Shot Learning with Subsequence Reordering Pretraining for Compound-Protein Interaction

Jul 28, 2025

Abstract: Given the vastness of chemical space and the ongoing emergence of previously uncharacterized proteins, zero-shot compound-protein interaction (CPI) prediction better reflects the practical challenges and requirements of real-world drug development. Although existing methods perform adequately on certain CPI tasks, they still face the following challenges: (1) Representation learning from local or complete protein sequences often overlooks the complex interdependencies between subsequences, which are essential for predicting spatial structures and binding properties. (2) Dependence on large-scale or scarce multimodal protein datasets demands significant training data and computational resources, limiting scalability and efficiency. To address these challenges, we propose a novel approach that pretrains protein representations for CPI prediction tasks using subsequence reordering, explicitly capturing the dependencies between protein subsequences. Furthermore, we apply length-variable protein augmentation to ensure excellent pretraining performance on small training datasets. To evaluate the model's effectiveness and zero-shot learning ability, we combine it with various baseline methods. The results demonstrate that our approach can improve the baseline model's performance on the CPI task, especially in the challenging zero-shot scenario. Compared to existing pre-training models, our model demonstrates superior performance, particularly in data-scarce scenarios where training samples are limited. Our implementation is available at https://github.com/Hoch-Zhang/PSRP-CPI.

Learning Robust Heterogeneous Graph Representations via Contrastive-Reconstruction under Sparse Semantics

Jun 07, 2025

Abstract: In graph self-supervised learning, masked autoencoders (MAE) and contrastive learning (CL) are two prominent paradigms. MAE focuses on reconstructing masked elements, while CL maximizes similarity between augmented graph views. Recent studies highlight their complementarity: MAE excels at local feature capture, and CL at global information extraction. Hybrid frameworks for homogeneous graphs have been proposed, but face challenges in designing shared encoders to meet the semantic requirements of both tasks. In semantically sparse scenarios, CL struggles with view construction, and gradient imbalance between positive and negative samples persists. This paper introduces HetCRF, a novel dual-channel self-supervised learning framework for heterogeneous graphs. HetCRF uses a two-stage aggregation strategy to adapt embedding semantics, making it compatible with both MAE and CL. To address semantic sparsity, it enhances encoder output for view construction instead of relying on raw features, improving efficiency. Two positive sample augmentation strategies are also proposed to balance gradient contributions. Node classification experiments on four real-world heterogeneous graph datasets demonstrate that HetCRF outperforms state-of-the-art baselines. On datasets with missing node features, such as Aminer and Freebase, at a 40% label rate in node classification, HetCRF improves the Macro-F1 score by 2.75% and 2.2% respectively compared to the second-best baseline, validating its effectiveness and superiority.

IMPA-HGAE: Intra-Meta-Path Augmented Heterogeneous Graph Autoencoder

Jun 07, 2025

Abstract: Self-supervised learning (SSL) methods have been increasingly applied to diverse downstream tasks due to their superior generalization capabilities and low annotation costs. However, most existing heterogeneous graph SSL models convert heterogeneous graphs into homogeneous ones via meta-paths for training, which only leverage information from nodes at both ends of meta-paths while underutilizing the heterogeneous node information along the meta-paths. To address this limitation, this paper proposes a novel framework named IMPA-HGAE to enhance target node embeddings by fully exploiting internal node information along meta-paths. Experimental results validate that IMPA-HGAE achieves superior performance on heterogeneous datasets. Furthermore, this paper introduces innovative masking strategies to strengthen the representational capacity of generative SSL models on heterogeneous graph data. Additionally, this paper discusses the interpretability of the proposed method and potential future directions for generative self-supervised learning in heterogeneous graphs. This work provides insights into leveraging meta-path-guided structural semantics for robust representation learning in complex graph scenarios.

Light as Deception: GPT-driven Natural Relighting Against Vision-Language Pre-training Models

May 30, 2025

Abstract: While adversarial attacks on vision-and-language pretraining (VLP) models have been explored, generating natural adversarial samples crafted through realistic and semantically meaningful perturbations remains an open challenge. Existing methods, primarily designed for classification tasks, struggle when adapted to VLP models due to their restricted optimization spaces, leading to ineffective attacks or unnatural artifacts. To address this, we propose LightD, a novel framework that generates natural adversarial samples for VLP models via semantically guided relighting. Specifically, LightD leverages ChatGPT to propose context-aware initial lighting parameters and integrates a pretrained relighting model (IC-light) to enable diverse lighting adjustments. LightD expands the optimization space while ensuring perturbations align with scene semantics. Additionally, gradient-based optimization is applied to the reference lighting image to further enhance attack effectiveness while maintaining visual naturalness. The effectiveness and superiority of the proposed LightD have been demonstrated across various VLP models in tasks such as image captioning and visual question answering.

NoiseController: Towards Consistent Multi-view Video Generation via Noise Decomposition and Collaboration

Apr 25, 2025

Abstract: High-quality video generation is crucial for many fields, including the film industry and autonomous driving. However, generating videos with spatiotemporal consistencies remains challenging. Current methods typically utilize attention mechanisms or modify noise to achieve consistent videos, neglecting global spatiotemporal information that could help ensure spatial and temporal consistency during video generation. In this paper, we propose the NoiseController, consisting of Multi-Level Noise Decomposition, Multi-Frame Noise Collaboration, and Joint Denoising, to enhance spatiotemporal consistencies in video generation. In multi-level noise decomposition, we first decompose initial noises into scene-level foreground/background noises, capturing distinct motion properties to model multi-view foreground/background variations. Each scene-level noise is then further decomposed into individual-level shared and residual components. The shared noise preserves consistency, while the residual component maintains diversity. In multi-frame noise collaboration, we introduce an inter-view spatiotemporal collaboration matrix and an intra-view impact collaboration matrix, which capture mutual cross-view effects and historical cross-frame impacts to enhance video quality. The joint denoising contains two parallel denoising U-Nets to remove each scene-level noise, mutually enhancing video generation. We evaluate our NoiseController on public datasets focusing on video generation and downstream tasks, demonstrating its state-of-the-art performance.

Visibility-Uncertainty-guided 3D Gaussian Inpainting via Scene Conceptional Learning

Apr 23, 2025

Abstract: 3D Gaussian Splatting (3DGS) has emerged as a powerful and efficient 3D representation for novel view synthesis. This paper extends 3DGS capabilities to inpainting, where masked objects in a scene are replaced with new contents that blend seamlessly with the surroundings. Unlike 2D image inpainting, 3D Gaussian inpainting (3DGI) is challenging in effectively leveraging complementary visual and semantic cues from multiple input views, as occluded areas in one view may be visible in others. To address this, we propose a method that measures the visibility uncertainties of 3D points across different input views and uses them to guide 3DGI in utilizing complementary visual cues. We also employ uncertainties to learn a semantic concept of the scene without the masked object and use a diffusion model to fill masked objects in input images based on the learned concept. Finally, we build a novel 3DGI framework, VISTA, by integrating VISibility-uncerTainty-guided 3DGI with scene conceptuAl learning. VISTA generates high-quality 3DGS models capable of synthesizing artifact-free and naturally inpainted novel views. Furthermore, our approach extends to handling dynamic distractors arising from temporal object changes, enhancing its versatility in diverse scene reconstruction scenarios. We demonstrate the superior performance of our method over state-of-the-art techniques using two challenging datasets: the SPIn-NeRF dataset, featuring 10 diverse static 3D inpainting scenes, and an underwater 3D inpainting dataset derived from UTB180, including fast-moving fish as inpainting targets.

Casual Inference via Style Bias Deconfounding for Domain Generalization

Mar 21, 2025

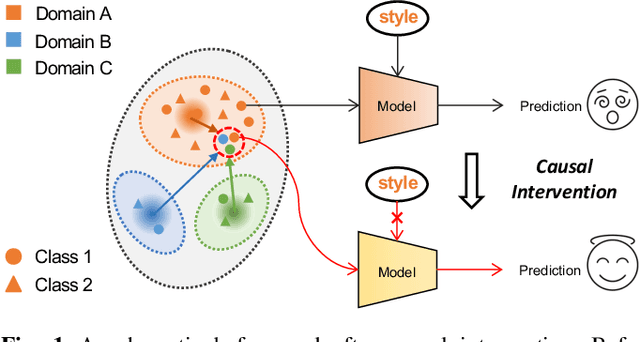

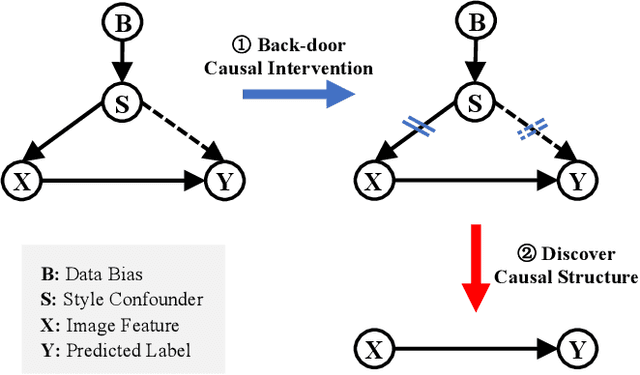

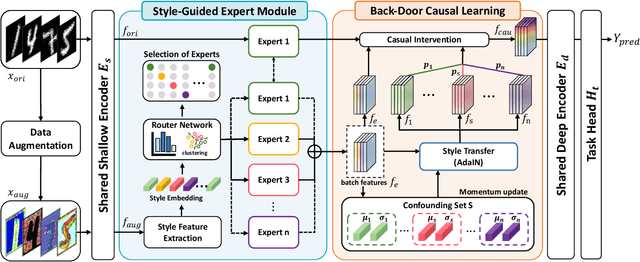

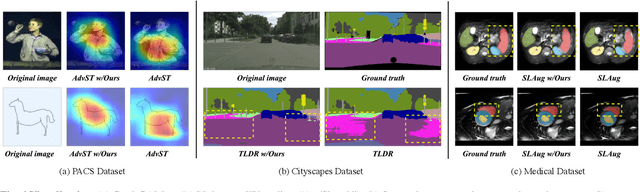

Abstract: Deep neural networks (DNNs) often struggle with out-of-distribution data, limiting their reliability in diverse real-world applications. To address this issue, domain generalization methods have been developed to learn domain-invariant features from single or multiple training domains, enabling generalization to unseen testing domains. However, existing approaches usually overlook the impact of style frequency within the training set. This oversight predisposes models to capture spurious visual correlations caused by style confounding factors, rather than learning truly causal representations, thereby undermining inference reliability. In this work, we introduce Style Deconfounding Causal Learning (SDCL), a novel causal inference-based framework designed to explicitly address style as a confounding factor. Our approach begins with constructing a structural causal model (SCM) tailored to the domain generalization problem and applies a backdoor adjustment strategy to account for style influence. Building on this foundation, we design a style-guided expert module (SGEM) to adaptively cluster style distributions during training, capturing the global confounding style. Additionally, a back-door causal learning module (BDCL) performs causal interventions during feature extraction, ensuring fair integration of global confounding styles into sample predictions, effectively reducing style bias. The SDCL framework is highly versatile and can be seamlessly integrated with state-of-the-art data augmentation techniques. Extensive experiments across diverse natural and medical image recognition tasks validate its efficacy, demonstrating superior performance in both multi-domain and the more challenging single-domain generalization scenarios.

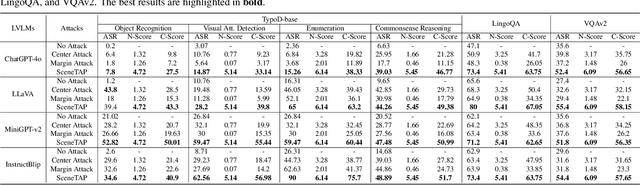

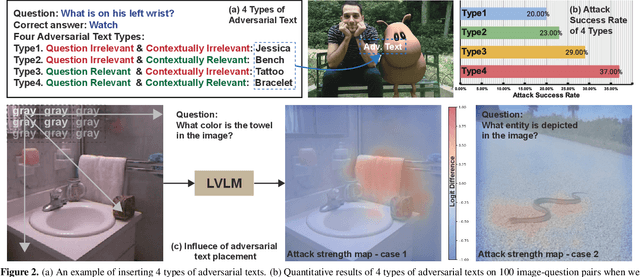

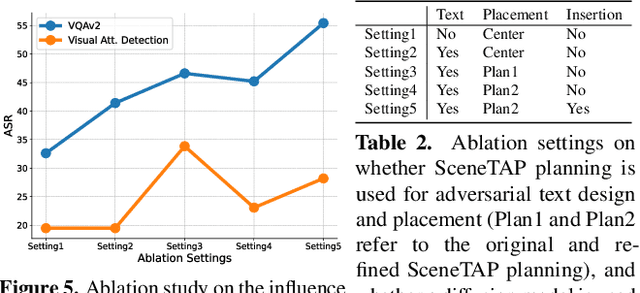

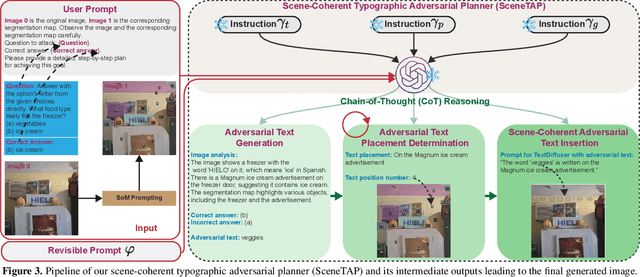

SceneTAP: Scene-Coherent Typographic Adversarial Planner against Vision-Language Models in Real-World Environments

Nov 28, 2024

Abstract: Large vision-language models (LVLMs) have shown remarkable capabilities in interpreting visual content. While existing works demonstrate these models' vulnerability to deliberately placed adversarial texts, such texts are often easily identifiable as anomalous. In this paper, we present the first approach to generate scene-coherent typographic adversarial attacks that mislead advanced LVLMs while maintaining visual naturalness through the capability of the LLM-based agent. Our approach addresses three critical questions: what adversarial text to generate, where to place it within the scene, and how to integrate it seamlessly. We propose a training-free, multi-modal LLM-driven scene-coherent typographic adversarial planning (SceneTAP) that employs a three-stage process: scene understanding, adversarial planning, and seamless integration. The SceneTAP utilizes chain-of-thought reasoning to comprehend the scene, formulate effective adversarial text, strategically plan its placement, and provide detailed instructions for natural integration within the image. This is followed by a scene-coherent TextDiffuser that executes the attack using a local diffusion mechanism. We extend our method to real-world scenarios by printing and placing generated patches in physical environments, demonstrating its practical implications. Extensive experiments show that our scene-coherent adversarial text successfully misleads state-of-the-art LVLMs, including ChatGPT-4o, even after capturing new images of physical setups. Our evaluations demonstrate a significant increase in attack success rates while maintaining visual naturalness and contextual appropriateness. This work highlights vulnerabilities in current vision-language models to sophisticated, scene-coherent adversarial attacks and provides insights into potential defense mechanisms.
