Ziying Song

DGFusion: Dual-guided Fusion for Robust Multi-Modal 3D Object Detection

Nov 13, 2025

Abstract:As a critical task in autonomous driving perception systems, 3D object detection is used to identify and track key objects, such as vehicles and pedestrians. However, detecting distant, small, or occluded objects (hard instances) remains a challenge, which directly compromises the safety of autonomous driving systems. We observe that existing multi-modal 3D object detection methods often follow a single-guided paradigm, failing to account for the differences in information density of hard instances between modalities. In this work, we propose DGFusion, based on the Dual-guided paradigm, which fully inherits the advantages of the Point-guide-Image paradigm and integrates the Image-guide-Point paradigm to address the limitations of the single paradigms. The core of DGFusion, the Difficulty-aware Instance Pair Matcher (DIPM), performs instance-level feature matching based on difficulty to generate easy and hard instance pairs, while the Dual-guided Modules exploit the advantages of both pair types to enable effective multi-modal feature fusion. Experimental results demonstrate that our DGFusion outperforms the baseline methods, with respective improvements of +1.0% mAP, +0.8% NDS, and +1.3% average recall on nuScenes. Extensive experiments demonstrate consistent robustness gains for hard instance detection across ego-distance, size, visibility, and small-scale training scenarios.

Beyond Imitation: Constraint-Aware Trajectory Generation with Flow Matching For End-to-End Autonomous Driving

Oct 30, 2025

Abstract:Planning is a critical component of end-to-end autonomous driving. However, prevailing imitation learning methods often suffer from mode collapse, failing to produce diverse trajectory hypotheses. Meanwhile, existing generative approaches struggle to incorporate crucial safety and physical constraints directly into the generative process, necessitating an additional optimization stage to refine their outputs. To address these limitations, we propose CATG, a novel planning framework that leverages Constrained Flow Matching. Concretely, CATG explicitly models the flow matching process, which inherently mitigates mode collapse and allows for flexible guidance from various conditioning signals. Our primary contribution is the novel imposition of explicit constraints directly within the flow matching process, ensuring that the generated trajectories adhere to vital safety and kinematic rules. Secondly, CATG parameterizes driving aggressiveness as a control signal during generation, enabling precise manipulation of trajectory style. Notably, on the NavSim v2 challenge, CATG achieved 2nd place with an EPDMS score of 51.31 and was honored with the Innovation Award.

Progressive Bird's Eye View Perception for Safety-Critical Autonomous Driving: A Comprehensive Survey

Aug 11, 2025

Abstract:Bird's-Eye-View (BEV) perception has become a foundational paradigm in autonomous driving, enabling unified spatial representations that support robust multi-sensor fusion and multi-agent collaboration. As autonomous vehicles transition from controlled environments to real-world deployment, ensuring the safety and reliability of BEV perception in complex scenarios - such as occlusions, adverse weather, and dynamic traffic - remains a critical challenge. This survey provides the first comprehensive review of BEV perception from a safety-critical perspective, systematically analyzing state-of-the-art frameworks and implementation strategies across three progressive stages: single-modality vehicle-side, multimodal vehicle-side, and multi-agent collaborative perception. Furthermore, we examine public datasets encompassing vehicle-side, roadside, and collaborative settings, evaluating their relevance to safety and robustness. We also identify key open-world challenges - including open-set recognition, large-scale unlabeled data, sensor degradation, and inter-agent communication latency - and outline future research directions, such as integration with end-to-end autonomous driving systems, embodied intelligence, and large language models.

Stable at Any Speed: Speed-Driven Multi-Object Tracking with Learnable Kalman Filtering

Aug 01, 2025

Abstract:Multi-object tracking (MOT) enables autonomous vehicles to continuously perceive dynamic objects, supplying essential temporal cues for prediction, behavior understanding, and safe planning. However, conventional tracking-by-detection methods typically rely on static coordinate transformations based on ego-vehicle poses, disregarding ego-vehicle speed-induced variations in observation noise and reference frame changes, which degrades tracking stability and accuracy in dynamic, high-speed scenarios. In this paper, we investigate the critical role of ego-vehicle speed in MOT and propose a Speed-Guided Learnable Kalman Filter (SG-LKF) that dynamically adapts uncertainty modeling to ego-vehicle speed, significantly improving stability and accuracy in highly dynamic scenarios. Central to SG-LKF is MotionScaleNet (MSNet), a decoupled token-mixing and channel-mixing MLP that adaptively predicts key parameters of SG-LKF. To enhance inter-frame association and trajectory continuity, we introduce a self-supervised trajectory consistency loss jointly optimized with semantic and positional constraints. Extensive experiments show that SG-LKF ranks first among all vision-based methods on KITTI 2D MOT with 79.59% HOTA, delivers strong results on KITTI 3D MOT with 82.03% HOTA, and outperforms SimpleTrack by 2.2% AMOTA on nuScenes 3D MOT.
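
To make the idea of speed-dependent uncertainty concrete, the following is a minimal sketch of a constant-velocity Kalman filter step whose measurement noise grows with ego-vehicle speed. It is not the SG-LKF implementation: the abstract describes a learned network (MSNet) predicting the filter parameters, whereas this sketch substitutes a hand-written linear scaling. The function name `kalman_step` and the parameters `base_r`, `speed_gain`, and `q` are assumptions chosen only for illustration.

```python
import numpy as np

def kalman_step(x, P, z, ego_speed, dt=0.1, base_r=0.5, speed_gain=0.05, q=0.01):
    """One predict/update step for a 1D constant-velocity state [position, velocity].

    The measurement noise R is scaled linearly with ego speed. This scaling rule
    is an illustrative assumption, standing in for a learned predictor.
    """
    F = np.array([[1.0, dt], [0.0, 1.0]])   # state transition
    H = np.array([[1.0, 0.0]])              # only position is observed
    Q = q * np.eye(2)                       # process noise
    R = np.array([[base_r * (1.0 + speed_gain * ego_speed)]])

    # Predict
    x_pred = F @ x
    P_pred = F @ P @ F.T + Q

    # Update
    S = H @ P_pred @ H.T + R                # innovation covariance
    K = P_pred @ H.T @ np.linalg.inv(S)     # Kalman gain
    x_new = x_pred + K @ (z - H @ x_pred)
    P_new = (np.eye(2) - K @ H) @ P_pred
    return x_new, P_new, K

x0 = np.array([0.0, 1.0])
P0 = np.eye(2)
z = np.array([0.2])
_, _, K_slow = kalman_step(x0, P0, z, ego_speed=0.0)
_, _, K_fast = kalman_step(x0, P0, z, ego_speed=30.0)
```

With identical inputs, the higher ego speed yields a smaller position gain, so the filter trusts the measurement less when the assumed observation noise is larger.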

FocalAD: Local Motion Planning for End-to-End Autonomous Driving

Jun 13, 2025

Abstract:In end-to-end autonomous driving, motion prediction plays a pivotal role in ego-vehicle planning. However, existing methods often rely on globally aggregated motion features, ignoring the fact that planning decisions are primarily influenced by a small number of locally interacting agents. Failing to attend to these critical local interactions can obscure potential risks and undermine planning reliability. In this work, we propose FocalAD, a novel end-to-end autonomous driving framework that focuses on critical local neighbors and refines planning by enhancing local motion representations. Specifically, FocalAD comprises two core modules: the Ego-Local-Agents Interactor (ELAI) and the Focal-Local-Agents Loss (FLA Loss). ELAI conducts a graph-based ego-centric interaction representation that captures motion dynamics with local neighbors to enhance both ego planning and agent motion queries. FLA Loss increases the weights of decision-critical neighboring agents, guiding the model to prioritize those more relevant to planning. Extensive experiments show that FocalAD outperforms existing state-of-the-art methods on the open-loop nuScenes dataset and the closed-loop Bench2Drive benchmark. Notably, on the robustness-focused Adv-nuScenes dataset, FocalAD achieves even greater improvements, reducing the average collision rate by 41.9% compared to DiffusionDrive and by 15.6% compared to SparseDrive.

S2R-Bench: A Sim-to-Real Evaluation Benchmark for Autonomous Driving

May 24, 2025

Abstract:Safety is a long-standing and ultimate pursuit in the development of autonomous driving systems, with a significant portion of safety challenges arising from perception. How to effectively evaluate the safety and reliability of perception algorithms is becoming an emerging issue. Despite its critical importance, existing perception methods remain limited in robustness, primarily because they rely on entirely simulated benchmarks, which fail to align predicted results with actual outcomes, particularly under extreme weather conditions and sensor anomalies that are prevalent in real-world scenarios. To fill this gap, in this study, we propose a Sim-to-Real Evaluation Benchmark for Autonomous Driving (S2R-Bench). We collect diverse sensor anomaly data under various road conditions to evaluate the robustness of autonomous driving perception methods in a comprehensive and realistic manner. This is the first corruption robustness benchmark based on real-world scenarios, encompassing various road conditions, weather conditions, lighting intensities, and time periods. By comparing real-world data with simulated data, we demonstrate the reliability and practical significance of the collected data for real-world applications. We hope that this dataset will advance future research and contribute to the development of more robust perception models for autonomous driving. This dataset is released at https://github.com/adept-thu/S2R-Bench.

Two Tasks, One Goal: Uniting Motion and Planning for Excellent End To End Autonomous Driving Performance

Apr 17, 2025

Abstract:End-to-end autonomous driving has made impressive progress in recent years. Former end-to-end autonomous driving approaches often decouple planning and motion tasks, treating them as separate modules. This separation overlooks the potential benefits that planning can gain from learning out-of-distribution data encountered in motion tasks. However, unifying these tasks poses significant challenges, such as constructing shared contextual representations and handling the unobservability of other vehicles' states. To address these challenges, we propose TTOG, a novel two-stage trajectory generation framework. In the first stage, a diverse set of trajectory candidates is generated, while the second stage focuses on refining these candidates through vehicle state information. To mitigate the issue of unavailable surrounding vehicle states, TTOG employs a self-vehicle data-trained state estimator, subsequently extended to other vehicles. Furthermore, we introduce ECSA (equivariant context-sharing scene adapter) to enhance the generalization of scene representations across different agents. Experimental results demonstrate that TTOG achieves state-of-the-art performance across both planning and motion tasks. Notably, on the challenging open-loop nuScenes dataset, TTOG reduces the L2 distance by 36.06%. Furthermore, on the closed-loop Bench2Drive dataset, our approach achieves a 22% improvement in the driving score (DS), significantly outperforming existing baselines.

Collaborative Perception Datasets for Autonomous Driving: A Review

Apr 17, 2025

Abstract:Collaborative perception has attracted growing interest from academia and industry due to its potential to enhance perception accuracy, safety, and robustness in autonomous driving through multi-agent information fusion. With the advancement of Vehicle-to-Everything (V2X) communication, numerous collaborative perception datasets have emerged, varying in cooperation paradigms, sensor configurations, data sources, and application scenarios. However, the absence of systematic summarization and comparative analysis hinders effective resource utilization and standardization of model evaluation. As the first comprehensive review focused on collaborative perception datasets, this work reviews and compares existing resources from a multi-dimensional perspective. We categorize datasets based on cooperation paradigms, examine their data sources and scenarios, and analyze sensor modalities and supported tasks. A detailed comparative analysis is conducted across multiple dimensions. We also outline key challenges and future directions, including dataset scalability, diversity, domain adaptation, standardization, privacy, and the integration of large language models. To support ongoing research, we provide a continuously updated online repository of collaborative perception datasets and related literature: https://github.com/frankwnb/Collaborative-Perception-Datasets-for-Autonomous-Driving.

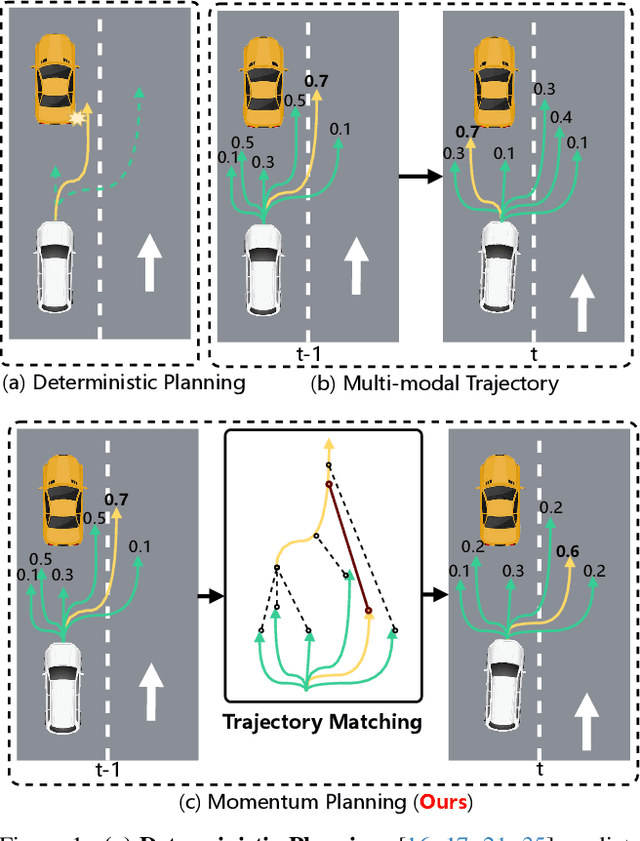

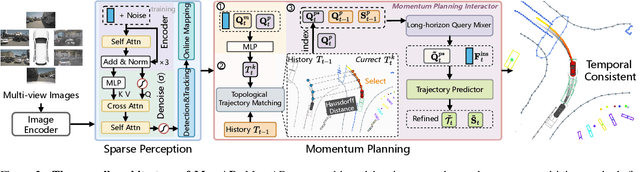

Don't Shake the Wheel: Momentum-Aware Planning in End-to-End Autonomous Driving

Mar 05, 2025

Abstract:End-to-end autonomous driving frameworks enable seamless integration of perception and planning but often rely on one-shot trajectory prediction, which may lead to unstable control and vulnerability to occlusions in single-frame perception. To address this, we propose the Momentum-Aware Driving (MomAD) framework, which introduces trajectory momentum and perception momentum to stabilize and refine trajectory predictions. MomAD comprises two core components: (1) Topological Trajectory Matching (TTM) employs Hausdorff Distance to select the optimal planning query that aligns with prior paths to ensure coherence; (2) Momentum Planning Interactor (MPI) cross-attends the selected planning query with historical queries to expand static and dynamic perception files. This enriched query, in turn, helps regenerate long-horizon trajectories and reduce collision risks. To mitigate noise arising from dynamic environments and detection errors, we introduce robust instance denoising during training, enabling the planning model to focus on critical signals and improve its robustness. We also propose a novel Trajectory Prediction Consistency (TPC) metric to quantitatively assess planning stability. Experiments on the nuScenes dataset demonstrate that MomAD achieves superior long-term consistency (>=3s) compared to SOTA methods. Moreover, evaluations on the curated Turning-nuScenes show that MomAD reduces the collision rate by 26% and improves TPC by 0.97m (33.45%) over a 6s prediction horizon, while closed-loop evaluation on Bench2Drive demonstrates an improvement of up to 16.3% in success rate.
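
As a rough illustration of Hausdorff-distance-based trajectory selection, the sketch below picks, from a set of candidate trajectories, the one closest to a prior path. It operates on plain 2D waypoint arrays rather than on planning queries, and the function names `hausdorff_distance` and `select_trajectory` are hypothetical; it shows the general matching idea only, not the TTM module itself.

```python
import numpy as np

def hausdorff_distance(a, b):
    """Symmetric Hausdorff distance between two point sets of shape (N, 2) and (M, 2)."""
    # Pairwise Euclidean distances, shape (N, M)
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
    # Largest nearest-neighbor distance, taken in both directions
    return max(d.min(axis=1).max(), d.min(axis=0).max())

def select_trajectory(candidates, prior_path):
    """Return the index and distance of the candidate closest to the prior path."""
    distances = [hausdorff_distance(c, prior_path) for c in candidates]
    best = int(np.argmin(distances))
    return best, distances[best]

# Prior path along the x-axis and two candidates with different lateral offsets
prior = np.array([[0.0, 0.0], [1.0, 0.0], [2.0, 0.0]])
candidates = [
    prior + np.array([0.0, 1.0]),   # shifted 1 m laterally
    prior + np.array([0.0, 0.1]),   # shifted 0.1 m laterally
]
best_index, best_distance = select_trajectory(candidates, prior)
```

Here the second candidate is selected because its largest deviation from the prior path is the smallest. The Hausdorff distance penalizes the worst-case deviation along the whole path, which is why it suits a coherence check better than an average point-wise error.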

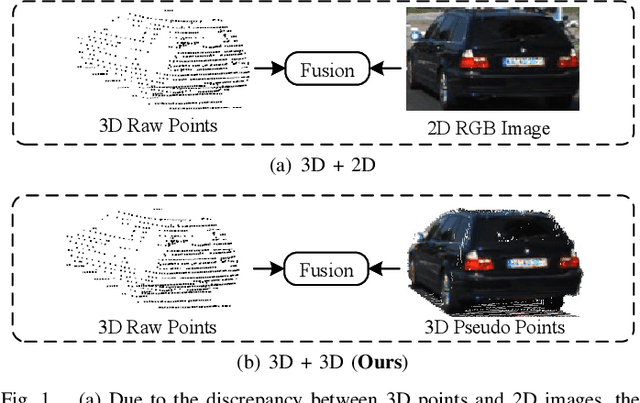

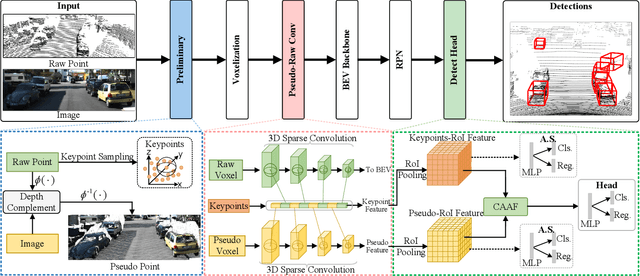

FGU3R: Fine-Grained Fusion via Unified 3D Representation for Multimodal 3D Object Detection

Jan 08, 2025

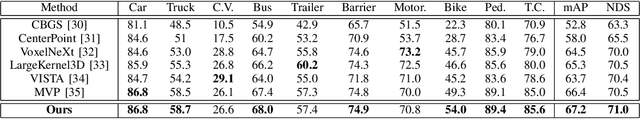

Abstract:Multimodal 3D object detection has garnered considerable interest in autonomous driving. However, multimodal detectors suffer from dimension mismatches that derive from coarsely fusing 3D points with 2D pixels, which leads to sub-optimal fusion performance. In this paper, we propose a multimodal framework, FGU3R, to tackle this issue via unified 3D representation and fine-grained fusion. It consists of two important components. First, we propose an efficient feature extractor for raw and pseudo points, termed Pseudo-Raw Convolution (PRConv), which modulates multimodal features synchronously and aggregates the features from different types of points on key points based on multimodal interaction. Second, a Cross-Attention Adaptive Fusion (CAAF) is designed to fuse homogeneous 3D RoI (Region of Interest) features adaptively via a cross-attention variant in a fine-grained manner. Together, they enable fine-grained fusion on a unified 3D representation. Experiments conducted on KITTI and nuScenes show the effectiveness of our proposed method.
