Yadan Luo

MirrorLA: Reflecting Feature Map for Vision Linear Attention

Feb 04, 2026

Abstract: Linear attention significantly reduces the computational complexity of Transformers from quadratic to linear, yet it consistently lags behind softmax-based attention in performance. We identify the root cause of this degradation as the non-negativity constraint imposed on kernel feature maps: standard projections like ReLU act as "passive truncation" operators, indiscriminately discarding semantic information residing in the negative domain. We propose MirrorLA, a geometric framework that substitutes passive truncation with active reorientation. By leveraging learnable Householder reflections, MirrorLA rotates the feature geometry into the non-negative orthant to maximize information retention. Our approach restores representational density through a cohesive, multi-scale design: it first optimizes local discriminability via block-wise isometries, stabilizes long-context dynamics using variance-aware modulation to diversify activations, and finally, integrates dispersed subspaces via cross-head reflections to induce global covariance mixing. MirrorLA achieves state-of-the-art performance across standard benchmarks, demonstrating that strictly linear efficiency can be achieved without compromising representational fidelity.
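The contrast between truncation and reflection described in this abstract can be illustrated with a minimal sketch. It uses the textbook Householder reflection `Hx = x - 2v(v·x)/(v·v)`; the vector `v` is a hand-picked stand-in for a learned parameter, and nothing here reproduces MirrorLA's block-wise or cross-head design, which the abstract does not specify.

```python
import math

def householder_reflect(x, v):
    """Reflect x across the hyperplane orthogonal to v: x - 2 v (v.x)/(v.v)."""
    vv = sum(vi * vi for vi in v)
    if vv == 0.0:
        raise ValueError("reflection vector must be non-zero")
    scale = 2.0 * sum(vi * xi for vi, xi in zip(v, x)) / vv
    return [xi - scale * vi for xi, vi in zip(x, v)]

def relu(x):
    """Element-wise ReLU: negative entries are truncated to zero."""
    return [max(0.0, xi) for xi in x]

def norm(x):
    return math.sqrt(sum(xi * xi for xi in x))

x = [1.0, -1.0]
v = [0.0, 1.0]  # assumption: stands in for a learned reflection vector

truncated = relu(x)                          # negative coordinate is discarded
reflected = relu(householder_reflect(x, v))  # reflect first, then apply ReLU

print(norm(x), norm(truncated), norm(reflected))
```

In this toy case the reflection moves `x` into the non-negative orthant before ReLU is applied, so the feature keeps its full norm, whereas plain ReLU drops the negative coordinate.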

STILL: Selecting Tokens for Intra-Layer Hybrid Attention to Linearize LLMs

Feb 02, 2026

Abstract: Linearizing pretrained large language models (LLMs) primarily relies on intra-layer hybrid attention mechanisms to alleviate the quadratic complexity of standard softmax attention. Existing methods perform token routing based on sliding-window partitions, which results in position-based selection and fails to capture token-specific global importance. Meanwhile, linear attention further suffers from distribution shift caused by learnable feature maps that distort pretrained feature magnitudes. Motivated by these limitations, we propose STILL, an intra-layer hybrid linearization framework for efficiently linearizing LLMs. STILL introduces a Self-Saliency Score with strong local-global consistency, enabling accurate token selection using sliding-window computation, and retains salient tokens for sparse softmax attention while summarizing the remaining context via linear attention. To preserve pretrained representations, we design a Norm-Preserved Feature Map (NP-Map) that decouples feature direction from magnitude and reinjects pretrained norms. We further adopt a unified training-inference architecture with chunk-wise parallelization and delayed selection to improve hardware efficiency. Experiments show that STILL matches or surpasses the original pretrained model on commonsense and general reasoning tasks, and achieves up to an 86.2% relative improvement over prior linearized attention methods on long-context benchmarks.
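One way to read "decouples feature direction from magnitude and reinjects pretrained norms" is sketched below. This is an assumption about the general idea, not the paper's NP-Map: the direction comes from a feature map, and the magnitude is copied from the original vector. The names `norm_preserved_map` and `toy_feature_fn` are hypothetical.

```python
import math

def l2_norm(x):
    return math.sqrt(sum(xi * xi for xi in x))

def norm_preserved_map(x, feature_fn, eps=1e-12):
    """Hypothetical sketch: direction from feature_fn(x), magnitude from x."""
    f = feature_fn(x)
    f_norm = l2_norm(f)
    if f_norm < eps:
        # No usable direction; return zeros rather than divide by ~0.
        return [0.0] * len(f)
    target = l2_norm(x)  # the original ("pretrained") norm to reinject
    return [fi * target / f_norm for fi in f]

def toy_feature_fn(x):
    """Assumed stand-in for a learnable non-negative map that distorts scale."""
    return [3.0 * max(0.0, xi) + 0.1 for xi in x]

x = [3.0, -4.0]
phi = norm_preserved_map(x, toy_feature_fn)
print(l2_norm(x), l2_norm(toy_feature_fn(x)), l2_norm(phi))
```

The raw feature map changes the vector's norm, while the rescaled output has the same norm as the input and stays non-negative.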

The RoboSense Challenge: Sense Anything, Navigate Anywhere, Adapt Across Platforms

Jan 08, 2026

Abstract: Autonomous systems are increasingly deployed in open and dynamic environments -- from city streets to aerial and indoor spaces -- where perception models must remain reliable under sensor noise, environmental variation, and platform shifts. However, even state-of-the-art methods often degrade under unseen conditions, highlighting the need for robust and generalizable robot sensing. The RoboSense 2025 Challenge is designed to advance robustness and adaptability in robot perception across diverse sensing scenarios. It unifies five complementary research tracks spanning language-grounded decision making, socially compliant navigation, sensor configuration generalization, cross-view and cross-modal correspondence, and cross-platform 3D perception. Together, these tasks form a comprehensive benchmark for evaluating real-world sensing reliability under domain shifts, sensor failures, and platform discrepancies. RoboSense 2025 provides standardized datasets, baseline models, and unified evaluation protocols, enabling large-scale and reproducible comparison of robust perception methods. The challenge attracted 143 teams from 85 institutions across 16 countries, reflecting broad community engagement. By consolidating insights from 23 winning solutions, this report highlights emerging methodological trends, shared design principles, and open challenges across all tracks, marking a step toward building robots that can sense reliably, act robustly, and adapt across platforms in real-world environments.

Distributed Zero-Shot Learning for Visual Recognition

Nov 11, 2025

Abstract: In this paper, we propose a Distributed Zero-Shot Learning (DistZSL) framework that can fully exploit decentralized data to learn an effective model for unseen classes. Considering the data heterogeneity issues across distributed nodes, we introduce two key components to ensure the effective learning of DistZSL: a cross-node attribute regularizer and a global attribute-to-visual consensus. Our proposed cross-node attribute regularizer enforces the distances between attribute features to be similar across different nodes. In this manner, the overall attribute feature space remains stable during learning, which facilitates the establishment of visual-to-attribute (V2A) relationships. Then, we introduce the global attribute-to-visual consensus to mitigate biased V2A mappings learned from individual nodes. Specifically, we enforce the bilateral mapping between the attribute and visual feature distributions to be consistent across different nodes. Thus, the learned consistent V2A mapping can significantly enhance zero-shot learning across different nodes. Extensive experiments demonstrate that DistZSL achieves superior performance to the state-of-the-art in learning from distributed data.

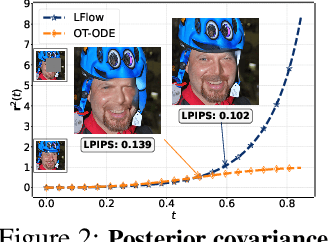

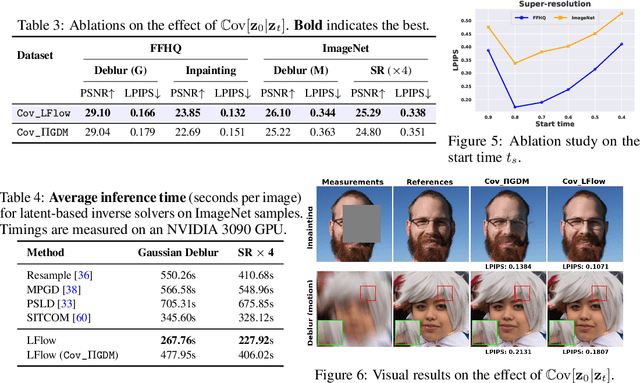

Latent Refinement via Flow Matching for Training-free Linear Inverse Problem Solving

Nov 08, 2025

Abstract: Recent advances in inverse problem solving have increasingly adopted flow priors over diffusion models due to their ability to construct straight probability paths from noise to data, thereby enhancing efficiency in both training and inference. However, current flow-based inverse solvers face two primary limitations: (i) they operate directly in pixel space, which demands heavy computational resources for training and restricts scalability to high-resolution images, and (ii) they employ guidance strategies with prior-agnostic posterior covariances, which can weaken alignment with the generative trajectory and degrade posterior coverage. In this paper, we propose LFlow (Latent Refinement via Flows), a training-free framework for solving linear inverse problems via pretrained latent flow priors. LFlow leverages the efficiency of flow matching to perform ODE sampling in latent space along an optimal path. This latent formulation further allows us to introduce a theoretically grounded posterior covariance, derived from the optimal vector field, enabling effective flow guidance. Experimental results demonstrate that our proposed method outperforms state-of-the-art latent diffusion solvers in reconstruction quality across most tasks. The code will be publicly available at https://github.com/hosseinaskari-cs/LFlow.

Are Synthetic Videos Useful? A Benchmark for Retrieval-Centric Evaluation of Synthetic Videos

Jul 03, 2025

Abstract: Text-to-video (T2V) synthesis has advanced rapidly, yet current evaluation metrics primarily capture visual quality and temporal consistency, offering limited insight into how synthetic videos perform in downstream tasks such as text-to-video retrieval (TVR). In this work, we introduce SynTVA, a new dataset and benchmark designed to evaluate the utility of synthetic videos for building retrieval models. Based on 800 diverse user queries derived from the MSRVTT training split, we generate synthetic videos using state-of-the-art T2V models and annotate each video-text pair along four key semantic alignment dimensions: Object & Scene, Action, Attribute, and Prompt Fidelity. Our evaluation framework correlates general video quality assessment (VQA) metrics with these alignment scores, and examines their predictive power for downstream TVR performance. To explore pathways of scaling up, we further develop an Auto-Evaluator to estimate alignment quality from existing metrics. Beyond benchmarking, our results show that SynTVA is a valuable asset for dataset augmentation, enabling the selection of high-utility synthetic samples that measurably improve TVR outcomes. Project page and dataset can be found at https://jasoncodemaker.github.io/SynTVA/.

WisWheat: A Three-Tiered Vision-Language Dataset for Wheat Management

Jun 06, 2025

Abstract: Wheat management strategies play a critical role in determining yield. Traditional management decisions often rely on labour-intensive expert inspections, which are expensive, subjective and difficult to scale. Recently, Vision-Language Models (VLMs) have emerged as a promising solution to enable scalable, data-driven management support. However, due to a lack of domain-specific knowledge, directly applying VLMs to wheat management tasks results in poor quantification and reasoning capabilities, ultimately producing vague or even misleading management recommendations. In response, we propose WisWheat, a wheat-specific dataset with a three-layered design to enhance VLM performance on wheat management tasks: (1) a foundational pretraining dataset of 47,871 image-caption pairs for coarsely adapting VLMs to wheat morphology; (2) a quantitative dataset comprising 7,263 VQA-style image-question-answer triplets for quantitative trait measuring tasks; and (3) an Instruction Fine-tuning dataset with 4,888 samples targeting biotic and abiotic stress diagnosis and management plan for different phenological stages. Extensive experimental results demonstrate that fine-tuning open-source VLMs (e.g., Qwen2.5 7B) on our dataset leads to significant performance improvements. Specifically, the Qwen2.5 VL 7B fine-tuned on our wheat instruction dataset achieves accuracy scores of 79.2% and 84.6% on wheat stress and growth stage conversation tasks respectively, surpassing even general-purpose commercial models such as GPT-4o by a margin of 11.9% and 34.6%.

CodeMerge: Codebook-Guided Model Merging for Robust Test-Time Adaptation in Autonomous Driving

May 22, 2025

Abstract: Maintaining robust 3D perception under dynamic and unpredictable test-time conditions remains a critical challenge for autonomous driving systems. Existing test-time adaptation (TTA) methods often fail in high-variance tasks like 3D object detection due to unstable optimization and sharp minima. While recent model merging strategies based on linear mode connectivity (LMC) offer improved stability by interpolating between fine-tuned checkpoints, they are computationally expensive, requiring repeated checkpoint access and multiple forward passes. In this paper, we introduce CodeMerge, a lightweight and scalable model merging framework that bypasses these limitations by operating in a compact latent space. Instead of loading full models, CodeMerge represents each checkpoint with a low-dimensional fingerprint derived from the source model's penultimate features and constructs a key-value codebook. We compute merging coefficients using ridge leverage scores on these fingerprints, enabling efficient model composition without compromising adaptation quality. Our method achieves strong performance across challenging benchmarks, improving end-to-end 3D detection by 14.9% NDS on nuScenes-C and LiDAR-based detection by over 7.6% mAP on nuScenes-to-KITTI, while benefiting downstream tasks such as online mapping, motion prediction and planning even without training. Code and pretrained models are released in the supplementary material.
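The ridge leverage scores mentioned in this abstract have a standard definition: for fingerprints `f_i` stacked as rows of `F` and a ridge parameter `λ > 0`, the score is `f_i^T (F^T F + λI)^{-1} f_i`. The sketch below computes that quantity in plain Python. The toy fingerprints, the value of `lam`, and the final normalisation into weights are illustrative assumptions; the abstract does not say how CodeMerge turns scores into merging coefficients.

```python
def solve(a, b):
    """Solve a @ y = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    y = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        tail = sum(m[r][c] * y[c] for c in range(r + 1, n))
        y[r] = (m[r][n] - tail) / m[r][r]
    return y

def ridge_leverage_scores(fingerprints, lam):
    """Return f_i^T (F^T F + lam * I)^{-1} f_i for each row f_i of F."""
    d = len(fingerprints[0])
    gram = [[sum(f[i] * f[j] for f in fingerprints) + (lam if i == j else 0.0)
             for j in range(d)] for i in range(d)]
    scores = []
    for f in fingerprints:
        y = solve(gram, f)
        scores.append(sum(fi * yi for fi, yi in zip(f, y)))
    return scores

# Assumed toy fingerprints: two identical checkpoints and one distinct one.
fingerprints = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
scores = ridge_leverage_scores(fingerprints, lam=1.0)

# Assumption: normalise scores into weights; the paper's rule may differ.
weights = [s / sum(scores) for s in scores]
print(scores, weights)
```

In this example the two redundant fingerprints each receive a lower score than the distinct one, which matches the usual reading of leverage scores as a measure of how unique a row is.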

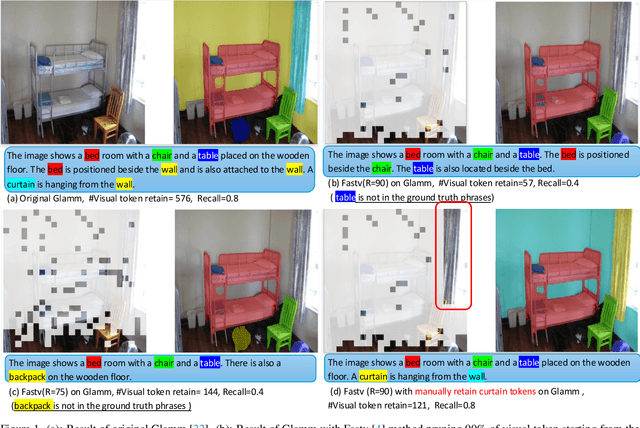

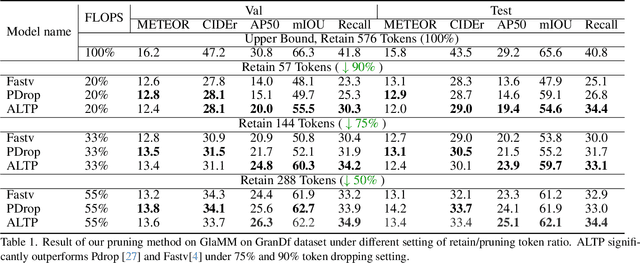

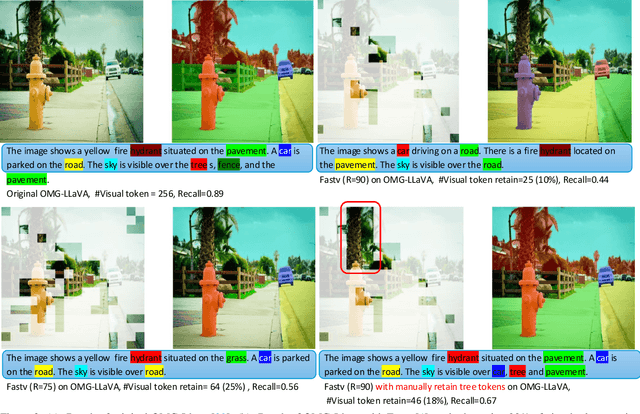

Local Information Matters: Inference Acceleration For Grounded Conversation Generation Models Through Adaptive Local-Aware Token Pruning

Apr 01, 2025

Abstract: Grounded Conversation Generation (GCG) is an emerging vision-language task that requires models to generate natural language responses seamlessly intertwined with corresponding object segmentation masks. Recent models, such as GLaMM and OMG-LLaVA, achieve pixel-level grounding but incur significant computational costs due to processing a large number of visual tokens. Existing token pruning methods, like FastV and PyramidDrop, fail to preserve the local visual features critical for accurate grounding, leading to substantial performance drops in GCG tasks. To address this, we propose Adaptive Local-Aware Token Pruning (ALTP), a simple yet effective framework that accelerates GCG models by prioritizing local object information. ALTP introduces two key components: (1) Detail Density Capture (DDC), which uses superpixel segmentation to retain tokens in object-centric regions, preserving fine-grained details, and (2) Dynamic Density Formation (DDF), which dynamically allocates tokens based on information density, ensuring higher retention in semantically rich areas. Extensive experiments on the GranDf dataset demonstrate that ALTP significantly outperforms existing token pruning methods, such as FastV and PyramidDrop, on both GLaMM and OMG-LLaVA models. Notably, when applied to GLaMM, ALTP achieves a 90% reduction in visual tokens with a 4.9% improvement in AP50 and a 5.0% improvement in Recall compared to PyramidDrop. Similarly, on OMG-LLaVA, ALTP improves AP by 2.1% and mIOU by 3.0% at a 90% token reduction compared with PyramidDrop.

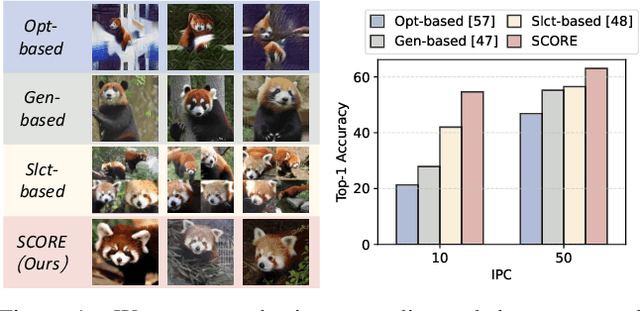

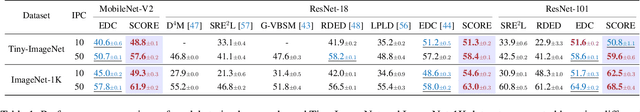

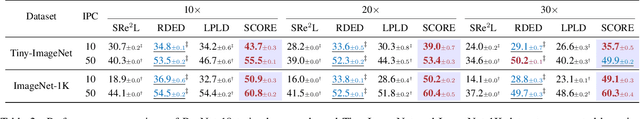

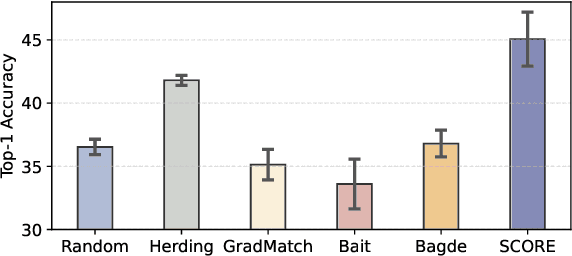

SCORE: Soft Label Compression-Centric Dataset Condensation via Coding Rate Optimization

Mar 18, 2025

Abstract: Dataset Condensation (DC) aims to obtain a condensed dataset that allows models trained on the condensed dataset to achieve performance comparable to those trained on the full dataset. Recent DC approaches increasingly focus on encoding knowledge into realistic images with soft labeling, for their scalability to ImageNet-scale datasets and strong capability of cross-domain generalization. However, this strong performance comes at a substantial storage cost which could significantly exceed the storage cost of the original dataset. We argue that the three key properties to alleviate this performance-storage dilemma are informativeness, discriminativeness, and compressibility of the condensed data. Towards this end, this paper proposes a Soft label compression-centric dataset condensation framework using COding RatE (SCORE). SCORE formulates dataset condensation as a min-max optimization problem, which aims to balance the three key properties from an information-theoretic perspective. In particular, we theoretically demonstrate that our coding rate-inspired objective function is submodular, and its optimization naturally enforces low-rank structure in the soft label set corresponding to each condensed sample. Extensive experiments on large-scale datasets, including ImageNet-1K and Tiny-ImageNet, demonstrate that SCORE outperforms existing methods in most cases. Even with 30× compression of soft labels, performance decreases by only 5.5% and 2.7% for ImageNet-1K with IPC 10 and 50, respectively. Code will be released upon paper acceptance.
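For orientation, the coding rate that the framework's name alludes to is commonly defined in the rate-distortion literature as shown below. Here $Z \in \mathbb{R}^{d \times n}$ is assumed to stack $n$ vectors of dimension $d$ as columns, and $\epsilon$ is the allowed distortion. The abstract does not give SCORE's exact objective, so this is the generic definition only, not the paper's formulation.

```latex
R(Z, \epsilon) = \frac{1}{2} \log \det \left( I + \frac{d}{n \epsilon^{2}} Z Z^{\top} \right)
```

For a fixed total energy, this quantity is smaller when the columns of $Z$ concentrate in a low-dimensional subspace, which is the usual intuition for why coding-rate objectives are associated with low-rank structure.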
