Zi Huang

StomataSeg: Semi-Supervised Instance Segmentation for Sorghum Stomatal Components

Jan 31, 2026

Abstract: Sorghum is a globally important cereal grown widely in water-limited and stress-prone regions. Its strong drought tolerance makes it a priority crop for climate-resilient agriculture. Improving water-use efficiency in sorghum requires precise characterisation of stomatal traits, as stomatal control of gas exchange, transpiration and photosynthesis has a major influence on crop performance. Automated analysis of sorghum stomata is difficult because the stomata are small (often less than 40 $\mu$m in length in grasses such as sorghum) and vary in shape across genotypes and leaf surfaces. Automated segmentation contributes to high-throughput stomatal phenotyping, yet current methods still face challenges related to nested small structures and annotation bottlenecks. In this paper, we propose a semi-supervised instance segmentation framework tailored for analysis of sorghum stomatal components. We collect and annotate a sorghum leaf imagery dataset containing 11,060 human-annotated patches, covering the three stomatal components (pore, guard cell and complex area) across multiple genotypes and leaf surfaces. To improve the detection of tiny structures, we split high-resolution microscopy images into overlapping small patches. We then apply a pseudo-labelling strategy to unannotated images, producing an additional 56,428 pseudo-labelled patches. Benchmarking across semantic and instance segmentation models shows substantial performance gains: for semantic models the top mIoU increases from 65.93% to 70.35%, whereas for instance models the top AP rises from 28.30% to 46.10%. These results demonstrate that combining patch-based preprocessing with semi-supervised learning significantly improves the segmentation of fine stomatal structures. The proposed framework supports scalable extraction of stomatal traits and facilitates broader adoption of AI-driven phenotyping in crop science.
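The patch-splitting step mentioned in the abstract can be illustrated with a minimal sketch. The abstract does not state the patch size or overlap, so the `patch` and `stride` values below are assumptions, and the function names `patch_origins` and `tile` are hypothetical. The sketch only computes patch bounding boxes; it is not the authors' preprocessing code.

```python
def patch_origins(length, patch, stride):
    """Start offsets of patches of size `patch` that together cover [0, length)."""
    if patch <= 0 or stride <= 0:
        raise ValueError("patch and stride must be positive")
    if length <= patch:
        return [0]  # the image is smaller than one patch along this axis
    starts = list(range(0, length - patch + 1, stride))
    if starts[-1] + patch < length:
        # Add a final patch flush with the border so no pixels are dropped.
        starts.append(length - patch)
    return starts


def tile(width, height, patch=512, stride=384):
    """Return (left, top, right, bottom) boxes; stride < patch gives overlap."""
    return [
        (x, y, min(x + patch, width), min(y + patch, height))
        for y in patch_origins(height, patch, stride)
        for x in patch_origins(width, patch, stride)
    ]


boxes = tile(1000, 512)
print(boxes)
```

With a stride smaller than the patch size, a small structure cut by one patch border is likely to appear whole in a neighbouring patch, which is the usual motivation for overlapping tiles.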

Generative Recall, Dense Reranking: Learning Multi-View Semantic IDs for Efficient Text-to-Video Retrieval

Jan 29, 2026

Abstract: Text-to-Video Retrieval (TVR) is essential in video platforms. Dense retrieval with dual-modality encoders leads in accuracy, but its computation and storage scale poorly with corpus size. Thus, real-time large-scale applications adopt two-stage retrieval, where a fast recall model gathers a small candidate pool, which is reranked by an advanced dense retriever. Because the candidate pool is greatly reduced, the reranking model can use any off-the-shelf dense retriever without hurting efficiency, meaning the recall model bounds two-stage TVR performance. Recent generative retrieval (GR) replaces dense video embeddings with discrete semantic IDs and retrieves by decoding text queries into ID tokens. GR offers near-constant inference and storage complexity, and its semantic IDs capture high-level video features via quantization, making it ideal for quickly eliminating irrelevant candidates during recall. However, as a recall model in two-stage TVR, GR suffers from (i) semantic ambiguity, where each video satisfies diverse queries but is forced into one semantic ID; and (ii) cross-modal misalignment, as semantic IDs are solely derived from visual features without text supervision. We propose Generative Recall and Dense Reranking (GRDR), designing a novel GR method to uplift recalled candidate quality. GRDR assigns multiple semantic IDs to each video using a query-guided multi-view tokenizer exposing diverse semantic access paths, and jointly trains the tokenizer and generative retriever via a shared codebook to cast semantic IDs as the semantic bridge between texts and videos. At inference, trie-constrained decoding generates a compact candidate set reranked by a dense model for fine-grained matching. Experiments on TVR benchmarks show GRDR matches strong dense retrievers in accuracy while reducing index storage by an order of magnitude and accelerating full-corpus retrieval by up to 300$\times$.
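As a rough illustration of trie-constrained decoding in general, the sketch below stores valid semantic IDs in a prefix tree and only lets the decoder pick tokens that continue some valid ID. The greedy search, the toy IDs, and the `score_fn` callback are all assumptions made for illustration; the abstract does not describe GRDR's decoder or search strategy in this detail.

```python
def build_trie(id_sequences):
    """Nested-dict prefix tree over token sequences (one sequence per semantic ID)."""
    root = {}
    for seq in id_sequences:
        node = root
        for tok in seq:
            node = node.setdefault(tok, {})
    return root


def constrained_greedy_decode(trie, score_fn, length):
    """Greedily pick the best-scoring token among those the trie allows."""
    prefix = []
    node = trie
    for _ in range(length):
        allowed = sorted(node)  # tokens that extend the prefix to a valid ID
        if not allowed:
            break
        best = max(allowed, key=lambda tok: score_fn(prefix, tok))
        prefix.append(best)
        node = node[best]
    return tuple(prefix)


# Toy semantic IDs (hypothetical), each a sequence of three codebook tokens.
semantic_ids = [(3, 1, 4), (3, 1, 5), (2, 7, 1)]
trie = build_trie(semantic_ids)

# Stand-in for a model's next-token score: here it simply prefers larger tokens.
decoded = constrained_greedy_decode(trie, lambda prefix, tok: tok, length=3)
print(decoded)
```

Because every step is restricted to children of the current trie node, the decoded sequence always corresponds to an ID that exists in the index, whatever the scorer prefers.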

Integrating Vision-Centric Text Understanding for Conversational Recommender Systems

Jan 20, 2026

Abstract: Conversational Recommender Systems (CRSs) have attracted growing attention for their ability to deliver personalized recommendations through natural language interactions. To more accurately infer user preferences from multi-turn conversations, recent works increasingly expand conversational context (e.g., by incorporating diverse entity information or retrieving related dialogues). While such context enrichment can assist preference modeling, it also introduces longer and more heterogeneous inputs, leading to practical issues such as input length constraints, text style inconsistency, and irrelevant textual noise, thereby raising the demand for stronger language understanding ability. In this paper, we propose STARCRS, a Screen-Text-AwaRe Conversational Recommender System that integrates two complementary text understanding modes: (1) a screen-reading pathway that encodes auxiliary textual information as visual tokens, mimicking skim reading on a screen, and (2) an LLM-based textual pathway that focuses on a limited set of critical content for fine-grained reasoning. We design a knowledge-anchored fusion framework that combines contrastive alignment, cross-attention interaction, and adaptive gating to integrate the two modes for improved preference modeling and response generation. Extensive experiments on two widely used benchmarks demonstrate that STARCRS consistently improves both recommendation accuracy and generated response quality.

Hierarchical Refinement of Universal Multimodal Attacks on Vision-Language Models

Jan 15, 2026

Abstract: Existing adversarial attacks for VLP models are mostly sample-specific, resulting in substantial computational overhead when scaled to large datasets or new scenarios. To overcome this limitation, we propose Hierarchical Refinement Attack (HRA), a multimodal universal attack framework for VLP models. HRA refines universal adversarial perturbations (UAPs) at both the sample level and the optimization level. For the image modality, we disentangle adversarial examples into clean images and perturbations, allowing each component to be handled independently for more effective disruption of cross-modal alignment. We further introduce a ScMix augmentation strategy that diversifies visual contexts and strengthens both global and local utility of UAPs, thereby reducing reliance on spurious features. In addition, we refine the optimization path by leveraging a temporal hierarchy of historical and estimated future gradients to avoid local minima and stabilize universal perturbation learning. For the text modality, HRA identifies globally influential words by combining intra-sentence and inter-sentence importance measures, and subsequently utilizes these words as universal text perturbations. Extensive experiments across various downstream tasks, VLP models, and datasets demonstrate the superiority of the proposed universal multimodal attacks.

Distributed Zero-Shot Learning for Visual Recognition

Nov 11, 2025

Abstract: In this paper, we propose a Distributed Zero-Shot Learning (DistZSL) framework that can fully exploit decentralized data to learn an effective model for unseen classes. Considering the data heterogeneity issues across distributed nodes, we introduce two key components to ensure the effective learning of DistZSL: a cross-node attribute regularizer and a global attribute-to-visual consensus. Our proposed cross-node attribute regularizer enforces the distances between attribute features to be similar across different nodes. In this manner, the overall attribute feature space remains stable during learning, which facilitates the establishment of visual-to-attribute (V2A) relationships. Then, we introduce the global attribute-to-visual consensus to mitigate biased V2A mappings learned from individual nodes. Specifically, we enforce the bilateral mapping between the attribute and visual feature distributions to be consistent across different nodes. Thus, the learned consistent V2A mapping can significantly enhance zero-shot learning across different nodes. Extensive experiments demonstrate that DistZSL achieves superior performance to the state-of-the-art in learning from distributed data.

ReaKase-8B: Legal Case Retrieval via Knowledge and Reasoning Representations with LLMs

Oct 30, 2025

Abstract: Legal case retrieval (LCR) is a cornerstone of real-world legal decision making, as it enables practitioners to identify precedents for a given query case. Existing approaches mainly rely on traditional lexical models and pretrained language models to encode the texts of legal cases. Yet there is rich information in the relations among different legal entities as well as in the crucial reasoning process that uncovers how legal facts and legal issues can lead to judicial decisions. Such a relational reasoning process reflects the distinctive characteristics of each case that can distinguish one from another, mirroring the real-world judicial process. Naturally, incorporating such information into the precise case embedding could further enhance the accuracy of case retrieval. In this paper, a novel ReaKase-8B framework is proposed to leverage extracted legal facts, legal issues, legal relation triplets and legal reasoning for effective legal case retrieval. ReaKase-8B designs an in-context legal case representation learning paradigm with a fine-tuned large language model. Extensive experiments on two benchmark datasets from COLIEE 2022 and COLIEE 2023 demonstrate that our knowledge and reasoning augmented embeddings substantially improve retrieval performance over baseline models, highlighting the potential of integrating legal reasoning into legal case retrieval systems. The code has been released at https://github.com/yanran-tang/ReaKase-8B.

Does Homophily Help in Robust Test-time Node Classification?

Oct 25, 2025

Abstract: Homophily, the tendency of nodes from the same class to connect, is a fundamental property of real-world graphs, underpinning structural and semantic patterns in domains such as citation networks and social networks. Existing methods exploit homophily through designing homophily-aware GNN architectures or graph structure learning strategies, yet they primarily focus on GNN learning with training graphs. However, in real-world scenarios, test graphs often suffer from data quality issues and distribution shifts, such as domain shifts across users from different regions in social networks and temporal evolution shifts in citation network graphs collected over varying time periods. These factors significantly compromise the pre-trained model's robustness, resulting in degraded test-time performance. With empirical observations and theoretical analysis, we reveal that transforming the test graph structure by increasing homophily in homophilic graphs or decreasing it in heterophilic graphs can significantly improve the robustness and performance of pre-trained GNNs on node classification, without requiring model training or updates. Motivated by these insights, a novel test-time graph structural transformation method grounded in homophily, named GrapHoST, is proposed. Specifically, a homophily predictor is developed to discriminate test edges, facilitating adaptive test-time graph structural transformation by the confidence of predicted homophily scores. Extensive experiments on nine benchmark datasets under a range of test-time data quality issues demonstrate that GrapHoST consistently achieves state-of-the-art performance, with improvements of up to 10.92%. Our code has been released at https://github.com/YanJiangJerry/GrapHoST.
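To make the notion of homophily concrete, the sketch below computes the commonly used edge homophily ratio (the fraction of edges whose endpoints share a label) and shows how dropping low-confidence edges can raise it. The toy graph, the per-edge `scores`, and the fixed `threshold` are assumptions for illustration only; this is not the GrapHoST procedure, whose predictor and transformation rule are not specified in the abstract.

```python
def edge_homophily(edges, labels):
    """Fraction of edges that connect two nodes with the same label."""
    if not edges:
        raise ValueError("edge list is empty")
    same = sum(1 for u, v in edges if labels[u] == labels[v])
    return same / len(edges)


def keep_confident_edges(edges, scores, threshold=0.5):
    """Keep edges whose (hypothetical) predicted homophily score passes the threshold."""
    return [edge for edge, score in zip(edges, scores) if score >= threshold]


labels = {0: "a", 1: "a", 2: "b", 3: "b"}
edges = [(0, 1), (1, 2), (2, 3), (0, 3)]
scores = [0.9, 0.2, 0.8, 0.4]  # made-up predictor outputs, one per edge

before = edge_homophily(edges, labels)
filtered = keep_confident_edges(edges, scores)
after = edge_homophily(filtered, labels)
print(before, after)
```

At test time the true labels are unknown, which is why a learned predictor of per-edge homophily is needed; the labels here are used only to measure the effect on the toy graph.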

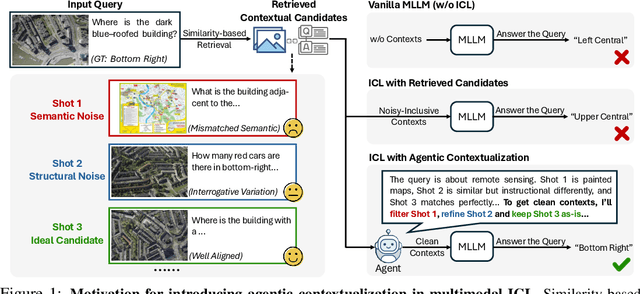

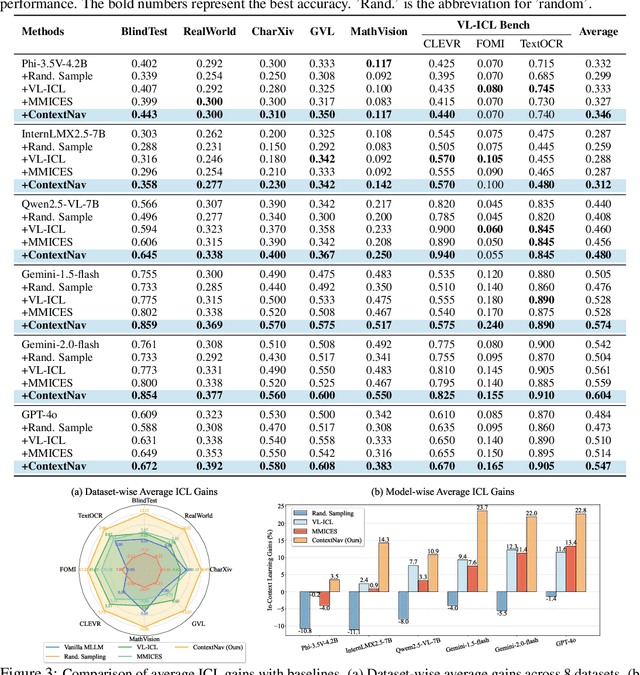

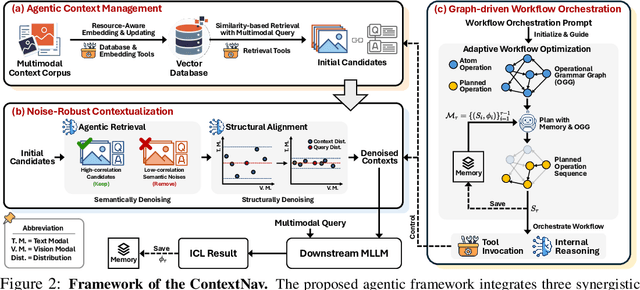

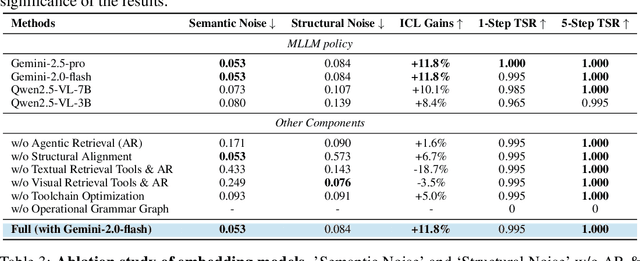

ContextNav: Towards Agentic Multimodal In-Context Learning

Oct 06, 2025

Abstract: Recent advances demonstrate that multimodal large language models (MLLMs) exhibit strong multimodal in-context learning (ICL) capabilities, enabling them to adapt to novel vision-language tasks from a few contextual examples. However, existing ICL approaches face challenges in reconciling scalability with robustness across diverse tasks and noisy contextual examples: manually selecting examples produces clean contexts but is labor-intensive and task-specific, while similarity-based retrieval improves scalability but could introduce irrelevant or structurally inconsistent samples that degrade ICL performance. To address these limitations, we propose ContextNav, the first agentic framework that integrates the scalability of automated retrieval with the quality and adaptiveness of human-like curation, enabling noise-robust and dynamically optimized contextualization for multimodal ICL. ContextNav unifies context management and noise-robust contextualization within a closed-loop workflow driven by graph-based orchestration. Specifically, it builds a resource-aware multimodal embedding pipeline, maintains a retrievable vector database, and applies agentic retrieval and structural alignment to construct noise-resilient contexts. An Operational Grammar Graph (OGG) further supports adaptive workflow planning and optimization, enabling the agent to refine its operational strategies based on downstream ICL feedback. Experimental results demonstrate that ContextNav achieves state-of-the-art performance across various datasets, underscoring the promise of agentic workflows for advancing scalable and robust contextualization in multimodal ICL.

ALSA: Anchors in Logit Space for Out-of-Distribution Accuracy Estimation

Aug 27, 2025

Abstract: Estimating model accuracy on unseen, unlabeled datasets is crucial for real-world machine learning applications, especially under distribution shifts that can degrade performance. Existing methods often rely on predicted class probabilities (softmax scores) or data similarity metrics. While softmax-based approaches benefit from representing predictions on the standard simplex, compressing logits into probabilities leads to information loss. Meanwhile, similarity-based methods can be computationally expensive and domain-specific, limiting their broader applicability. In this paper, we introduce ALSA (Anchors in Logit Space for Accuracy estimation), a novel framework that preserves richer information by operating directly in the logit space. Building on theoretical insights and empirical observations, we demonstrate that the aggregation and distribution of logits exhibit a strong correlation with the predictive performance of the model. To exploit this property, ALSA employs an anchor-based modeling strategy: multiple learnable anchors are initialized in logit space, each assigned an influence function that captures subtle variations in the logits. This allows ALSA to provide robust and accurate performance estimates across a wide range of distribution shifts. Extensive experiments on vision, language, and graph benchmarks demonstrate ALSA's superiority over both softmax- and similarity-based baselines. Notably, ALSA's robustness under significant distribution shifts highlights its potential as a practical tool for reliable model evaluation.
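One well-known source of the information loss mentioned above is that softmax is invariant to adding a constant to every logit, so logit vectors with very different magnitudes can map to the same probabilities. The short sketch below demonstrates only this property with made-up logits; it does not reproduce ALSA's anchors or influence functions.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


small = [2.0, 1.0, 0.0]
large = [12.0, 11.0, 10.0]  # same vector shifted by +10

p_small = softmax(small)
p_large = softmax(large)

# The two logit vectors differ, yet their probability vectors coincide.
print(p_small)
print(p_large)
```

Any statistic computed from the probabilities alone therefore cannot distinguish these two inputs, whereas a method that works in logit space can still see the difference in overall magnitude.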

Text Meets Topology: Rethinking Out-of-distribution Detection in Text-Rich Networks

Aug 25, 2025

Abstract: Out-of-distribution (OOD) detection remains challenging in text-rich networks, where textual features intertwine with topological structures. Existing methods primarily address label shifts or rudimentary domain-based splits, overlooking the intricate textual-structural diversity. For example, in social networks, where users represent nodes with textual features (name, bio) while edges indicate friendship status, OOD may stem from the distinct language patterns between bot and normal users. To address this gap, we introduce the TextTopoOOD framework for evaluating detection across diverse OOD scenarios: (1) attribute-level shifts via text augmentations and embedding perturbations; (2) structural shifts through edge rewiring and semantic connections; (3) thematically-guided label shifts; and (4) domain-based divisions. Furthermore, we propose TNT-OOD to model the complex interplay between Text aNd Topology using: (1) a novel cross-attention module to fuse local structure into node-level text representations, and (2) a HyperNetwork to generate node-specific transformation parameters. This aligns topological and semantic features of ID nodes, enhancing ID/OOD distinction across structural and textual shifts. Experiments on 11 datasets across four OOD scenarios demonstrate the nuanced challenge of TextTopoOOD for evaluating OOD detection in text-rich networks.
