Xiaoyu Wang

Liaoning Cancer Hospital and Institute, Shenyang, China

ERNIE 5.0 Technical Report

Feb 04, 2026

Abstract:In this report, we introduce ERNIE 5.0, a natively autoregressive foundation model designed for unified multimodal understanding and generation across text, image, video, and audio. All modalities are trained from scratch under a unified next-group-of-tokens prediction objective, based on an ultra-sparse mixture-of-experts (MoE) architecture with modality-agnostic expert routing. To address practical challenges in large-scale deployment under diverse resource constraints, ERNIE 5.0 adopts a novel elastic training paradigm. Within a single pre-training run, the model learns a family of sub-models with varying depths, expert capacities, and routing sparsity, enabling flexible trade-offs among performance, model size, and inference latency in memory- or time-constrained scenarios. Moreover, we systematically address the challenges of scaling reinforcement learning to unified foundation models, thereby guaranteeing efficient and stable post-training under ultra-sparse MoE architectures and diverse multimodal settings. Extensive experiments demonstrate that ERNIE 5.0 achieves strong and balanced performance across multiple modalities. To the best of our knowledge, among publicly disclosed models, ERNIE 5.0 represents the first production-scale realization of a trillion-parameter unified autoregressive model that supports both multimodal understanding and generation. To facilitate further research, we present detailed visualizations of modality-agnostic expert routing in the unified model, alongside comprehensive empirical analysis of elastic training, aiming to offer profound insights to the community.

Stabilizing Decentralized Federated Fine-Tuning via Topology-Aware Alternating LoRA

Jan 31, 2026

Abstract:Decentralized federated learning (DFL), a serverless variant of federated learning, poses unique challenges for parameter-efficient fine-tuning due to the factorized structure of low-rank adaptation (LoRA). Unlike aggregation of linear parameters, decentralized aggregation of LoRA updates introduces topology-dependent cross terms that can destabilize training under dynamic communication graphs. We propose TAD-LoRA, a Topology-Aware Decentralized Low-Rank Adaptation framework that coordinates the updates and mixing of LoRA factors to control inter-client misalignment. We theoretically prove the convergence of TAD-LoRA under non-convex objectives, explicitly characterizing the trade-off between topology-induced cross-term error and block-coordinate representation bias governed by the switching interval of alternating training. Experiments under various communication conditions validate our analysis, showing that TAD-LoRA achieves robust performance across different communication scenarios, remaining competitive in strongly connected topologies and delivering clear gains under moderately and weakly connected topologies, with particularly strong results on the MNLI dataset.
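The cross terms mentioned in this abstract can be illustrated with a small numerical sketch. It is not the TAD-LoRA method: it assumes the simplest case of uniform averaging over a handful of clients (rather than mixing over a communication graph), and the sizes and random factors `B`, `A` are hypothetical. It only shows that averaging the two LoRA factors separately differs from averaging the low-rank updates themselves, and that the gap is the average product of the factor deviations.

```python
import numpy as np

rng = np.random.default_rng(0)
n_clients, d_out, d_in, rank = 4, 6, 5, 2  # hypothetical sizes

# Hypothetical per-client LoRA factors: client i's update is B[i] @ A[i].
B = rng.normal(size=(n_clients, d_out, rank))
A = rng.normal(size=(n_clients, rank, d_in))

# Average of the full low-rank updates.
mean_of_products = np.mean(B @ A, axis=0)

# Average each factor separately, then multiply.
B_bar, A_bar = B.mean(axis=0), A.mean(axis=0)
product_of_means = B_bar @ A_bar

# The gap equals the average cross term between factor deviations.
cross_term = np.mean((B - B_bar) @ (A - A_bar), axis=0)
gap = mean_of_products - product_of_means

print("gap norm:", np.linalg.norm(gap))
print("gap equals cross term:", np.allclose(gap, cross_term))
```

If all clients held identical factors the cross term would vanish, which is one way to read the abstract's emphasis on controlling inter-client misalignment.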

PID-Guided Partial Alignment for Multimodal Decentralized Federated Learning

Jan 15, 2026

Abstract:Multimodal decentralized federated learning (DFL) is challenging because agents differ in available modalities and model architectures, yet must collaborate over peer-to-peer (P2P) networks without a central coordinator. Standard multimodal pipelines learn a single shared embedding across all modalities. In DFL, such a monolithic representation induces gradient misalignment between uni- and multimodal agents; as a result, it suppresses heterogeneous sharing and cross-modal interaction. We present PARSE, a multimodal DFL framework that operationalizes partial information decomposition (PID) in a server-free setting. Each agent performs feature fission to factorize its latent representation into redundant, unique, and synergistic slices. P2P knowledge sharing among heterogeneous agents is enabled by slice-level partial alignment: only semantically shareable branches are exchanged among agents that possess the corresponding modality. By removing the need for central coordination and gradient surgery, PARSE resolves uni-/multimodal gradient conflicts, thereby overcoming the multimodal DFL dilemma while remaining compatible with standard DFL constraints. Across benchmarks and agent mixes, PARSE yields consistent gains over task-, modality-, and hybrid-sharing DFL baselines. Ablations on fusion operators and split ratios, together with qualitative visualizations, further demonstrate the efficiency and robustness of the proposed design.

CuMA: Aligning LLMs with Sparse Cultural Values via Demographic-Aware Mixture of Adapters

Jan 08, 2026

Abstract:As Large Language Models (LLMs) serve a global audience, alignment must transition from enforcing universal consensus to respecting cultural pluralism. We demonstrate that dense models, when forced to fit conflicting value distributions, suffer from Mean Collapse, converging to a generic average that fails to represent diverse groups. We attribute this to Cultural Sparsity, where gradient interference prevents dense parameters from spanning distinct cultural modes. To resolve this, we propose CuMA (Cultural Mixture of Adapters), a framework that frames alignment as a conditional capacity separation problem. By incorporating demographic-aware routing, CuMA internalizes a Latent Cultural Topology to explicitly disentangle conflicting gradients into specialized expert subspaces. Extensive evaluations on WorldValuesBench, Community Alignment, and PRISM demonstrate that CuMA achieves state-of-the-art performance, significantly outperforming both dense baselines and semantic-only MoEs. Crucially, our analysis confirms that CuMA effectively mitigates mean collapse, preserving cultural diversity. Our code is available at https://github.com/Throll/CuMA.

Sampling non-log-concave densities via Hessian-free high-resolution dynamics

Jan 06, 2026

Abstract:We study the problem of sampling from a target distribution $π(q)\propto e^{-U(q)}$ on $\mathbb{R}^d$, where $U$ can be non-convex, via the Hessian-free high-resolution (HFHR) dynamics, a second-order Langevin-type process that has $e^{-U(q)-\frac12|p|^2}$ as its unique invariant distribution and reduces to kinetic Langevin dynamics (KLD) as the resolution parameter $α\to0$. The existing theory for HFHR dynamics in the literature is restricted to strongly-convex $U$, although numerical experiments are promising for non-convex settings as well. We focus on studying the convergence of HFHR dynamics when $U$ can be non-convex, which bridges a gap between theory and practice. Under a standard assumption of dissipativity and smoothness on $U$, we adopt the reflection/synchronous coupling method. This yields a Lyapunov-weighted Wasserstein distance in which the HFHR semigroup is exponentially contractive for all sufficiently small $α>0$ whenever KLD is. We further show that, under an additional assumption that asymptotically $\nabla U$ has linear growth at infinity, the contraction rate for HFHR dynamics is strictly better than that of KLD, with an explicit gain. As a case study, we verify the assumptions and the resulting acceleration for three examples: a multi-well potential, Bayesian linear regression with $L^p$ regularizer, and Bayesian binary classification. We conduct numerical experiments based on these examples, as well as an additional example of Bayesian logistic regression with real data processed by neural networks, which illustrate the efficiency of the algorithms based on HFHR dynamics and verify the acceleration and superior performance compared to KLD.
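The relationship between HFHR dynamics and KLD described above can be sketched with a plain Euler-Maruyama simulation. Everything beyond the abstract is an assumption here: the SDE form in the docstring is the one commonly given for HFHR in the literature (the abstract does not write it out), and the friction `gamma`, step size `h`, and one-dimensional double-well potential are illustrative choices, not the paper's experimental setup or discretization.

```python
import numpy as np

def grad_U(q):
    # Gradient of the double-well potential U(q) = (q^2 - 1)^2 / 4,
    # a non-convex example chosen only for illustration.
    return q * (q**2 - 1.0)

def simulate_hfhr(alpha, gamma=1.0, h=0.01, n_steps=5000, n_chains=256, seed=0):
    """Euler-Maruyama discretization of the assumed HFHR form
        dq = (p - alpha * grad_U(q)) dt + sqrt(2 * alpha) dB1
        dp = (-gamma * p - grad_U(q)) dt + sqrt(2 * gamma) dB2
    Setting alpha = 0 recovers kinetic Langevin dynamics (KLD)."""
    rng = np.random.default_rng(seed)
    q = np.full(n_chains, 1.0)  # all chains start in the right-hand well
    p = np.zeros(n_chains)
    for _ in range(n_steps):
        g = grad_U(q)
        xi_q = rng.normal(size=n_chains)
        xi_p = rng.normal(size=n_chains)
        q_next = q + h * (p - alpha * g) + np.sqrt(2.0 * alpha * h) * xi_q
        p = p + h * (-gamma * p - g) + np.sqrt(2.0 * gamma * h) * xi_p
        q = q_next
    return q, p

q_kld, p_kld = simulate_hfhr(alpha=0.0)    # KLD baseline
q_hfhr, p_hfhr = simulate_hfhr(alpha=0.5)  # HFHR with a positive resolution parameter
print("KLD  mean of q:", q_kld.mean())
print("HFHR mean of q:", q_hfhr.mean())
```

Because the target is symmetric, the sample mean of `q` drifting toward zero gives a rough feel for how quickly chains leave their starting well; it is not a substitute for the Wasserstein contraction rates analyzed in the paper.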

ManipShield: A Unified Framework for Image Manipulation Detection, Localization and Explanation

Nov 18, 2025

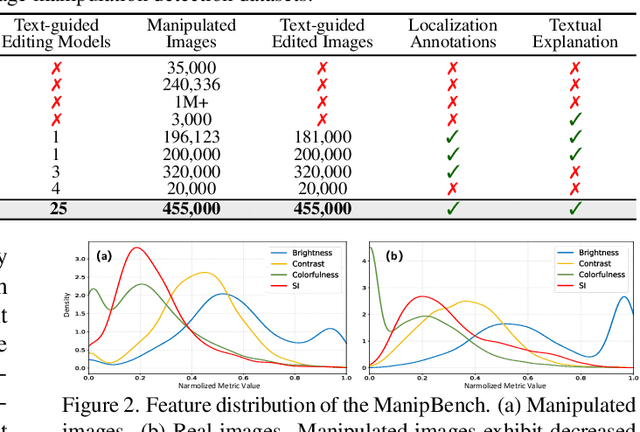

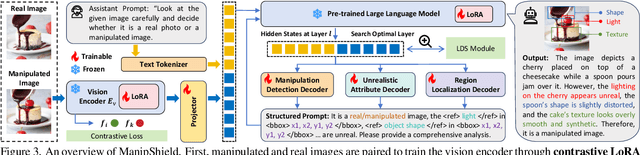

Abstract:With the rapid advancement of generative models, powerful image editing methods now enable diverse and highly realistic image manipulations that far surpass traditional deepfake techniques, posing new challenges for manipulation detection. Existing image manipulation detection and localization (IMDL) benchmarks suffer from limited content diversity, narrow generative-model coverage, and insufficient interpretability, which hinders the generalization and explanation capabilities of current manipulation detection methods. To address these limitations, we introduce ManipBench, a large-scale benchmark for image manipulation detection and localization focusing on AI-edited images. ManipBench contains over 450K manipulated images produced by 25 state-of-the-art image editing models across 12 manipulation categories, among which 100K images are further annotated with bounding boxes, judgment cues, and textual explanations to support interpretable detection. Building upon ManipBench, we propose ManipShield, an all-in-one model based on a Multimodal Large Language Model (MLLM) that leverages contrastive LoRA fine-tuning and task-specific decoders to achieve unified image manipulation detection, localization, and explanation. Extensive experiments on ManipBench and several public datasets demonstrate that ManipShield achieves state-of-the-art performance and exhibits strong generality to unseen manipulation models. Both ManipBench and ManipShield will be released upon publication.

DMSORT: An efficient parallel maritime multi-object tracking architecture for unmanned vessel platforms

Nov 16, 2025

Abstract:Accurate perception of the marine environment through robust multi-object tracking (MOT) is essential for ensuring safe vessel navigation and effective maritime surveillance. However, the complicated maritime environment often causes camera motion and subsequent visual degradation, posing significant challenges to MOT. To address this challenge, we propose an efficient Dual-branch Maritime SORT (DMSORT) method for maritime MOT. The core of the framework is a parallel tracker with affine compensation, which incorporates an object detection and re-identification (ReID) branch, along with a dedicated branch for dynamic camera motion estimation. Specifically, a Reversible Columnar Detection Network (RCDN) is integrated into the detection module to leverage multi-level visual features for robust object detection. Furthermore, a lightweight Transformer-based appearance extractor (Li-TAE) is designed to capture global contextual information and generate robust appearance features. Another branch decouples platform-induced and target-intrinsic motion by constructing a projective transformation, applying platform-motion compensation within the Kalman filter, and thereby stabilizing true object trajectories. Finally, a clustering-optimized feature fusion module effectively combines motion and appearance cues to ensure identity consistency under noise, occlusion, and drift. Extensive evaluations on the Singapore Maritime Dataset demonstrate that DMSORT achieves state-of-the-art performance. Notably, DMSORT attains the fastest runtime among existing ReID-based MOT frameworks while maintaining high identity consistency and robustness to jitter and occlusion. Code is available at: https://github.com/BiscuitsLzy/DMSORT-An-efficient-parallel-maritime-multi-object-tracking-architecture-.

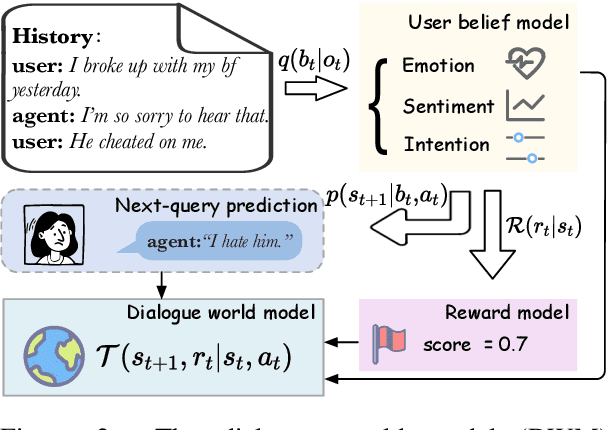

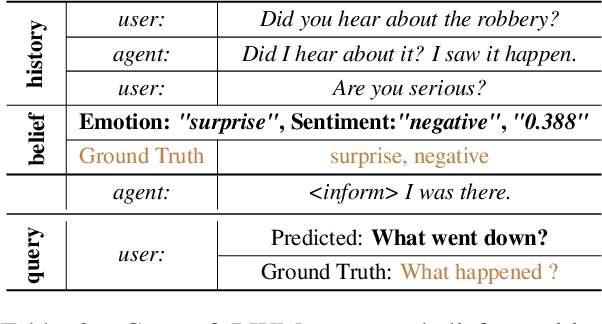

Dream to Chat: Model-based Reinforcement Learning on Dialogues with User Belief Modeling

Aug 23, 2025

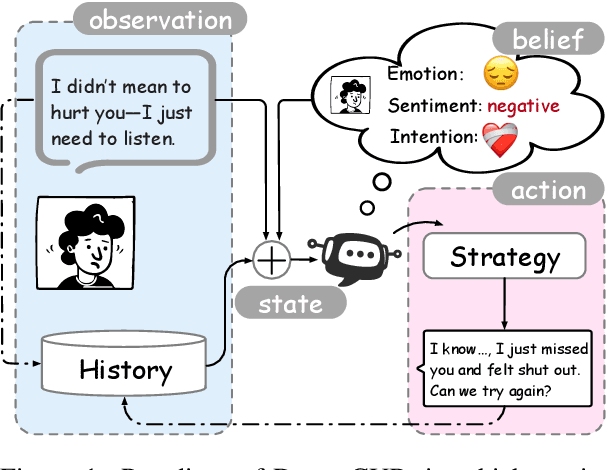

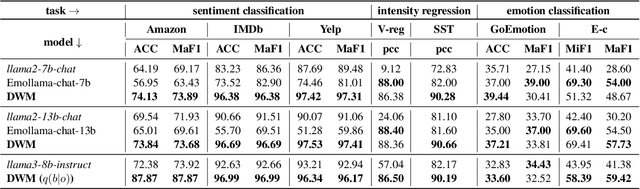

Abstract:World models have been widely utilized in robotics, gaming, and autonomous driving. However, their applications to natural language tasks are relatively limited. In this paper, we construct a dialogue world model that predicts the user's emotion, sentiment, and intention, as well as future utterances. By defining a POMDP, we argue that emotion, sentiment, and intention can be modeled as the user belief and solved by maximizing the information bottleneck. Building on this user belief modeling, we apply the model-based reinforcement learning framework to the dialogue system and propose a framework called DreamCUB. Experiments show that the pretrained dialogue world model achieves state-of-the-art performance on emotion classification and sentiment identification, while dialogue quality is also enhanced by joint training of the policy, critic, and dialogue world model. Further analysis shows that this approach maintains a reasonable exploration-exploitation balance and also transfers well to out-of-domain scenarios such as empathetic dialogues.

CliCARE: Grounding Large Language Models in Clinical Guidelines for Decision Support over Longitudinal Cancer Electronic Health Records

Jul 30, 2025

Abstract:Large Language Models (LLMs) hold significant promise for improving clinical decision support and reducing physician burnout by synthesizing complex, longitudinal cancer Electronic Health Records (EHRs). However, their implementation in this critical field faces three primary challenges: the inability to effectively process the extensive length and multilingual nature of patient records for accurate temporal analysis; a heightened risk of clinical hallucination, as conventional grounding techniques such as Retrieval-Augmented Generation (RAG) do not adequately incorporate process-oriented clinical guidelines; and unreliable evaluation metrics that hinder the validation of AI systems in oncology. To address these issues, we propose CliCARE, a framework for Grounding Large Language Models in Clinical Guidelines for Decision Support over Longitudinal Cancer Electronic Health Records. The framework operates by transforming unstructured, longitudinal EHRs into patient-specific Temporal Knowledge Graphs (TKGs) to capture long-range dependencies, and then grounding the decision support process by aligning these real-world patient trajectories with a normative guideline knowledge graph. This approach provides oncologists with evidence-grounded decision support by generating a high-fidelity clinical summary and an actionable recommendation. We validated our framework using large-scale, longitudinal data from a private Chinese cancer dataset and the public English MIMIC-IV dataset. In these diverse settings, CliCARE significantly outperforms strong baselines, including leading long-context LLMs and Knowledge Graph-enhanced RAG methods. The clinical validity of our results is supported by a robust evaluation protocol, which demonstrates a high correlation with assessments made by expert oncologists.

A Deep Learning Pipeline Using Synthetic Data to Improve Interpretation of Paper ECG Images

Jul 29, 2025

Abstract:Cardiovascular diseases (CVDs) are the leading global cause of death, and early detection is essential to improve patient outcomes. Electrocardiograms (ECGs), especially 12-lead ECGs, play a key role in the identification of CVDs. These are routinely interpreted by human experts, a process that is time-consuming and requires expert knowledge. Historical research in this area has focused on automatic ECG interpretation from digital signals, with recent deep learning approaches achieving strong results. In practice, however, most clinical ECG data are stored or shared in image form. To bridge this gap, we propose a deep learning framework designed specifically to classify paper-like ECG images into five main diagnostic categories. Our method was the winning entry to the 2024 British Heart Foundation Open Data Science Challenge. It addresses two main challenges of paper ECG classification: visual noise (e.g., shadows or creases) and the need to detect fine-grained waveform patterns. We propose a pre-processing pipeline that reduces visual noise and a two-stage fine-tuning strategy: the model is first fine-tuned on synthetic and external ECG image datasets to learn domain-specific features, and then further fine-tuned on the target dataset to enhance disease-specific recognition. We adopt the ConvNeXt architecture as the backbone of our model. Our method achieved AUROC scores of 0.9688 on the public validation set and 0.9677 on the private test set of the British Heart Foundation Open Data Science Challenge, highlighting its potential as a practical tool for automated ECG interpretation in clinical workflows.
