Scott Sanner

Department of Mechanical and Industrial Engineering, University of Toronto

Improving Set Function Approximation with Quasi-Arithmetic Neural Networks

Feb 04, 2026

Abstract:Sets represent a fundamental abstraction across many types of data. To handle the unordered nature of set-structured data, models such as DeepSets and PointNet rely on fixed, non-learnable pooling operations (e.g., sum or max) -- a design choice that can hinder the transferability of learned embeddings and limit model expressivity. More recently, learnable aggregation functions have been proposed as more expressive alternatives. In this work, we advance this line of research by introducing the Neuralized Kolmogorov Mean (NKM) -- a novel, trainable framework for learning a generalized measure of central tendency through an invertible neural function. We further propose quasi-arithmetic neural networks (QUANNs), which incorporate the NKM as a learnable aggregation function. We provide a theoretical analysis showing that QUANNs are universal approximators for a broad class of common set-function decompositions and, thanks to their invertible neural components, learn more structured latent representations. Empirically, QUANNs outperform state-of-the-art baselines across diverse benchmarks, while learning embeddings that transfer effectively even to tasks that do not involve sets.
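
For readers unfamiliar with the term, a quasi-arithmetic (Kolmogorov) mean applies an invertible function to each element, averages the results, and maps the average back through the inverse. The sketch below illustrates only that classical definition with fixed, hand-picked functions; it is not the NKM itself, which per the abstract learns the invertible function with a neural network. The function name `quasi_arithmetic_mean` and the example values are illustrative assumptions.

```python
import math
from typing import Callable, Sequence


def quasi_arithmetic_mean(
    xs: Sequence[float],
    f: Callable[[float], float],
    f_inv: Callable[[float], float],
) -> float:
    """Classical quasi-arithmetic mean: f_inv(average of f(x) over xs).

    f must be invertible on the range of xs, with f_inv as its inverse.
    (Hypothetical helper for illustration; not taken from the paper.)
    """
    if not xs:
        raise ValueError("xs must be non-empty")
    return f_inv(sum(f(x) for x in xs) / len(xs))


values = [1.0, 4.0, 16.0]

# Identity generator recovers the ordinary arithmetic mean.
arithmetic = quasi_arithmetic_mean(values, lambda x: x, lambda y: y)

# log/exp generator recovers the geometric mean.
geometric = quasi_arithmetic_mean(values, math.log, math.exp)

# Reciprocal generator recovers the harmonic mean.
harmonic = quasi_arithmetic_mean(values, lambda x: 1.0 / x, lambda y: 1.0 / y)
```

Because the inner average does not depend on element order, the result is permutation-invariant, which is the property a set aggregator needs.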

Near-optimal Linear Predictive Clustering in Non-separable Spaces via Mixed Integer Programming and Quadratic Pseudo-Boolean Reductions

Nov 17, 2025

Abstract:Linear Predictive Clustering (LPC) partitions samples based on shared linear relationships between feature and target variables, with numerous applications including marketing, medicine, and education. Greedy optimization methods, commonly used for LPC, alternate between clustering and linear regression but lack global optimality. While effective for separable clusters, they struggle in non-separable settings where clusters overlap in feature space. In an alternative constrained optimization paradigm, Bertsimas and Shioda (2007) formulated LPC as a Mixed-Integer Program (MIP), ensuring global optimality regardless of separability but suffering from poor scalability. This work builds on the constrained optimization paradigm to introduce two novel approaches that improve the efficiency of global optimization for LPC. By leveraging key theoretical properties of separability, we derive near-optimal approximations with provable error bounds, significantly reducing the MIP formulation's complexity and improving scalability. Additionally, we can further approximate LPC as a Quadratic Pseudo-Boolean Optimization (QPBO) problem, achieving substantial computational improvements in some settings. Comparative analyses on synthetic and real-world datasets demonstrate that our methods consistently achieve near-optimal solutions with substantially lower regression errors than greedy optimization while exhibiting superior scalability over existing MIP formulations.
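
The greedy baseline mentioned in the abstract (alternating between clustering and linear regression) can be sketched roughly as follows. This is a minimal illustration of that alternation for a single feature, written under our own assumptions; it is neither the MIP nor the QPBO method the paper proposes, and the names `fit_line`, `sq_residual`, and `greedy_lpc` are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (feature x, target y)
Line = Tuple[float, float]   # (slope, intercept)


def fit_line(points: Sequence[Point]) -> Line:
    """Ordinary least-squares line through a non-empty list of points."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    if sxx == 0.0:
        return (0.0, mean_y)  # all x equal: fall back to a flat line
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    return (slope, mean_y - slope * mean_x)


def sq_residual(line: Line, point: Point) -> float:
    """Squared prediction error of one point under one line."""
    slope, intercept = line
    x, y = point
    return (y - (slope * x + intercept)) ** 2


def greedy_lpc(points: Sequence[Point], labels: Sequence[int], k: int,
               max_iters: int = 100) -> Tuple[List[int], List[Line], float]:
    """Alternate regression and reassignment until the labels stop changing."""
    labels = list(labels)
    lines: List[Line] = [(0.0, 0.0)] * k
    for _ in range(max_iters):
        # Regression step: refit each cluster's line (empty clusters keep theirs).
        for c in range(k):
            members = [p for p, l in zip(points, labels) if l == c]
            if members:
                lines[c] = fit_line(members)
        # Clustering step: move each point to its best-fitting line.
        new_labels = [min(range(k), key=lambda c: sq_residual(lines[c], p))
                      for p in points]
        if new_labels == labels:
            break
        labels = new_labels
    sse = sum(sq_residual(lines[l], p) for p, l in zip(points, labels))
    return labels, lines, sse


# Two linear relationships whose x-ranges overlap (non-separable in feature space).
data = [(float(x), float(x)) for x in range(5)] + \
       [(float(x), 10.0 - x) for x in range(5)]
alternating_init = [i % 2 for i in range(len(data))]
labels, lines, sse = greedy_lpc(data, alternating_init, k=2)
```

The outcome depends on the initial labels, which is the local-optimality weakness the abstract attributes to greedy methods.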

Reflect-then-Plan: Offline Model-Based Planning through a Doubly Bayesian Lens

Jun 06, 2025

Abstract:Offline reinforcement learning (RL) is crucial when online exploration is costly or unsafe but often struggles with high epistemic uncertainty due to limited data. Existing methods rely on fixed conservative policies, restricting adaptivity and generalization. To address this, we propose Reflect-then-Plan (RefPlan), a novel doubly Bayesian offline model-based (MB) planning approach. RefPlan unifies uncertainty modeling and MB planning by recasting planning as Bayesian posterior estimation. At deployment, it updates a belief over environment dynamics using real-time observations, incorporating uncertainty into MB planning via marginalization. Empirical results on standard benchmarks show that RefPlan significantly improves the performance of conservative offline RL policies. In particular, RefPlan maintains robust performance under high epistemic uncertainty and limited data, while demonstrating resilience to changing environment dynamics, improving the flexibility, generalizability, and robustness of offline-learned policies.

Batched Self-Consistency Improves LLM Relevance Assessment and Ranking

May 18, 2025

Abstract:Given some information need, Large Language Models (LLMs) are increasingly used for candidate text relevance assessment, typically using a one-by-one pointwise (PW) strategy where each LLM call evaluates one candidate at a time. Meanwhile, it has been shown that LLM performance can be improved through self-consistency: prompting the LLM to do the same task multiple times (possibly in perturbed ways) and then aggregating the responses. To take advantage of self-consistency, we hypothesize that batched PW strategies, where multiple passages are judged in one LLM call, are better suited than one-by-one PW methods since a larger input context can induce more diverse LLM sampling across self-consistency calls. We first propose several candidate batching strategies to create prompt diversity across self-consistency calls through subset reselection and permutation. We then test our batched PW methods on relevance assessment and ranking tasks against one-by-one PW and listwise LLM ranking baselines with and without self-consistency, using three passage retrieval datasets and GPT-4o, Claude Sonnet 3, and Amazon Nova Pro. We find that batched PW methods outperform all baselines, and show that batching can greatly amplify the positive effects of self-consistency. For instance, on our legal search dataset, GPT-4o one-by-one PW ranking NDCG@10 improves only from 44.9% to 46.8% without self-consistency vs. with 15 self-consistency calls, while batched PW ranking improves from 43.8% to 51.3%, respectively.
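
The general shape of batched pointwise scoring with self-consistency can be sketched as below: on each self-consistency call the candidates are reshuffled and regrouped into batches, each batch is scored in one judge call, and per-candidate scores are averaged across calls. This is a rough illustration under our own assumptions, not the paper's exact batching strategies; `judge_batch` is a hypothetical stand-in for an LLM call, and `toy_judge` is a keyword rule used only so the example runs offline.

```python
import random
from collections import defaultdict
from typing import Callable, Dict, List, Sequence, Tuple


def make_batches(candidates: Sequence[str], batch_size: int,
                 rng: random.Random) -> List[List[str]]:
    """Permute the candidates, then cut them into consecutive batches."""
    shuffled = list(candidates)
    rng.shuffle(shuffled)
    return [shuffled[i:i + batch_size]
            for i in range(0, len(shuffled), batch_size)]


def batched_self_consistency(
    candidates: Sequence[str],
    judge_batch: Callable[[List[str]], List[float]],
    batch_size: int,
    num_calls: int,
    seed: int = 0,
) -> Tuple[Dict[str, float], List[str]]:
    """Average per-candidate scores over num_calls reshuffled batchings.

    Assumes candidates are unique strings; each one is judged exactly
    once per self-consistency call.
    """
    rng = random.Random(seed)
    totals: Dict[str, float] = defaultdict(float)
    for _ in range(num_calls):
        for batch in make_batches(candidates, batch_size, rng):
            for cand, score in zip(batch, judge_batch(batch)):
                totals[cand] += score
    mean_scores = {c: totals[c] / num_calls for c in candidates}
    # sorted() is stable, so tied candidates keep their input order.
    ranking = sorted(candidates, key=lambda c: mean_scores[c], reverse=True)
    return mean_scores, ranking


def toy_judge(batch: List[str]) -> List[float]:
    """Hypothetical placeholder for an LLM relevance call (keyword match)."""
    return [1.0 if "contract" in passage else 0.0 for passage in batch]


passages = [
    "contract law basics",
    "pasta recipe",
    "contract termination clause",
    "weather report",
]
mean_scores, ranking = batched_self_consistency(
    passages, toy_judge, batch_size=2, num_calls=5)
```

With a real LLM judge, the score for a passage can vary with its batch neighbours and position, which is where averaging across reshuffled calls would matter; the deterministic toy judge here cannot show that effect.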

CoLoTa: A Dataset for Entity-based Commonsense Reasoning over Long-Tail Knowledge

Apr 20, 2025

Abstract:The rise of Large Language Models (LLMs) has redefined the AI landscape, particularly due to their ability to encode factual and commonsense knowledge, and their outstanding performance in tasks requiring reasoning. Despite these advances, hallucinations and reasoning errors remain a significant barrier to their deployment in high-stakes settings. In this work, we observe that even the most prominent LLMs, such as OpenAI-o1, suffer from high rates of reasoning errors and hallucinations on tasks requiring commonsense reasoning over obscure, long-tail entities. To investigate this limitation, we present a new dataset for Commonsense reasoning over Long-Tail entities (CoLoTa), that consists of 3,300 queries from question answering and claim verification tasks and covers a diverse range of commonsense reasoning skills. We remark that CoLoTa can also serve as a Knowledge Graph Question Answering (KGQA) dataset since the support of knowledge required to answer its queries is present in the Wikidata knowledge graph. However, as opposed to existing KGQA benchmarks that merely focus on factoid questions, our CoLoTa queries also require commonsense reasoning. Our experiments with strong LLM-based KGQA methodologies indicate their severe inability to answer queries involving commonsense reasoning. Hence, we propose CoLoTa as a novel benchmark for assessing both (i) LLM commonsense reasoning capabilities and their robustness to hallucinations on long-tail entities and (ii) the commonsense reasoning capabilities of KGQA methods.

Q-STRUM Debate: Query-Driven Contrastive Summarization for Recommendation Comparison

Feb 18, 2025

Abstract:Query-driven recommendation with unknown items poses a challenge for users to understand why certain items are appropriate for their needs. Query-driven Contrastive Summarization (QCS) is a methodology designed to address this issue by leveraging language-based item descriptions to clarify contrasts between them. However, existing state-of-the-art contrastive summarization methods such as STRUM-LLM fall short of this goal. To overcome these limitations, we introduce Q-STRUM Debate, a novel extension of STRUM-LLM that employs debate-style prompting to generate focused and contrastive summarizations of item aspects relevant to a query. Leveraging modern large language models (LLMs) as powerful tools for generating debates, Q-STRUM Debate provides enhanced contrastive summaries. Experiments across three datasets demonstrate that Q-STRUM Debate yields significant performance improvements over existing methods on key contrastive summarization criteria, thus introducing a novel and performant debate prompting methodology for QCS.

Self-Supervised Transformers as Iterative Solution Improvers for Constraint Satisfaction

Feb 18, 2025

Abstract:We present a Transformer-based framework for Constraint Satisfaction Problems (CSPs). CSPs find use in many applications and thus accelerating their solution with machine learning is of wide interest. Most existing approaches rely on supervised learning from feasible solutions or reinforcement learning, paradigms that require either feasible solutions to these NP-Complete CSPs or large training budgets and a complex expert-designed reward signal. To address these challenges, we propose ConsFormer, a self-supervised framework that leverages a Transformer as a solution refiner. ConsFormer constructs a solution to a CSP iteratively in a process that mimics local search. Instead of using feasible solutions as labeled data, we devise differentiable approximations to the discrete constraints of a CSP to guide model training. Our model is trained to improve random assignments for a single step but is deployed iteratively at test time, circumventing the bottlenecks of supervised and reinforcement learning. Our method can tackle out-of-distribution CSPs simply through additional iterations.

Multi-hop Upstream Preemptive Traffic Signal Control with Deep Reinforcement Learning

Nov 10, 2024

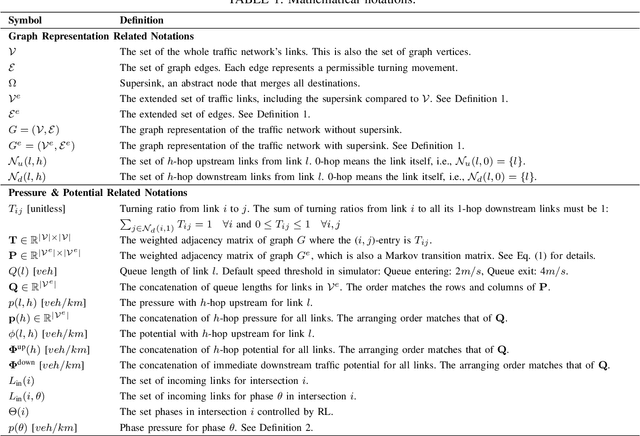

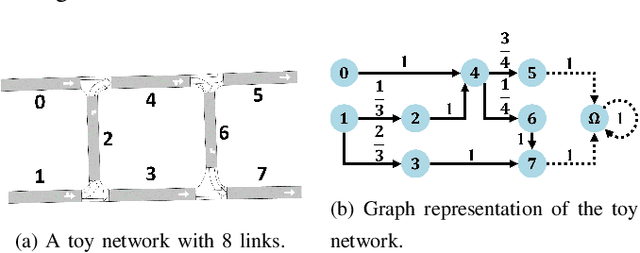

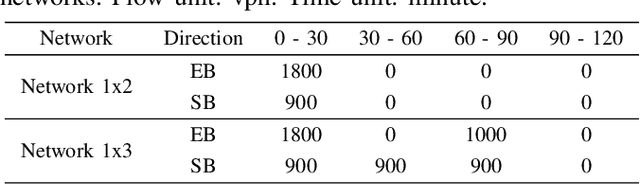

Abstract:Traffic signal control is crucial for managing congestion in urban networks. Existing myopic pressure-based control methods focus only on immediate upstream links, leading to suboptimal green time allocation and increased network delays. Effective signal control, however, inherently requires a broader spatial scope, as traffic conditions further upstream can significantly impact traffic at the current location. This paper introduces a novel concept based on Markov chain theory, namely multi-hop upstream pressure, that generalizes the conventional pressure to account for traffic conditions beyond the immediate upstream links. This farsighted and compact metric informs the deep reinforcement learning agent to preemptively clear the present queues, guiding the agent to optimize signal timings with a broader spatial awareness. Simulations on synthetic and realistic (Toronto) scenarios demonstrate that controllers utilizing multi-hop upstream pressure significantly reduce overall network delay by prioritizing traffic movements based on a broader understanding of upstream congestion.

Elaborative Subtopic Query Reformulation for Broad and Indirect Queries in Travel Destination Recommendation

Oct 02, 2024

Abstract:In Query-driven Travel Recommender Systems (RSs), it is crucial to understand the user intent behind challenging natural language (NL) destination queries such as the broadly worded "youth-friendly activities" or the indirect description "a high school graduation trip". Such queries are challenging due to the wide scope and subtlety of potential user intents that confound the ability of retrieval methods to infer relevant destinations from available textual descriptions such as WikiVoyage. While query reformulation (QR) has proven effective in enhancing retrieval by addressing user intent, existing QR methods tend to focus only on expanding the range of potentially matching query subtopics (breadth) or elaborating on the potential meaning of a query (depth), but not both. In this paper, we introduce Elaborative Subtopic Query Reformulation (EQR), a large language model-based QR method that combines both breadth and depth by generating potential query subtopics with information-rich elaborations. We also release TravelDest, a novel dataset for query-driven travel destination RSs. Experiments on TravelDest show that EQR achieves significant improvements in recall and precision over existing state-of-the-art QR methods.

Multi-modal Generative Models in Recommendation System

Sep 17, 2024

Abstract:Many recommendation systems limit user inputs to text strings or behavior signals such as clicks and purchases, and system outputs to a list of products sorted by relevance. With the advent of generative AI, users have come to expect richer levels of interactions. In visual search, for example, a user may provide a picture of their desired product along with a natural language modification of the content of the picture (e.g., a dress like the one shown in the picture but in red color). Moreover, users may want to better understand the recommendations they receive by visualizing how the product fits their use case, e.g., with a representation of how a garment might look on them, or how a furniture item might look in their room. Such advanced levels of interaction require recommendation systems that are able to discover both shared and complementary information about the product across modalities, and visualize the product in a realistic and informative way. However, existing systems often treat multiple modalities independently: text search is usually done by comparing the user query to product titles and descriptions, while visual search is typically done by comparing an image provided by the customer to product images. We argue that future recommendation systems will benefit from a multi-modal understanding of the products that leverages the rich information retailers have about both customers and products to come up with the best recommendations. In this chapter we review recommendation systems that use multiple data modalities simultaneously.
