Yuman Gao

USS-Nav: Unified Spatio-Semantic Scene Graph for Lightweight UAV Zero-Shot Object Navigation

Feb 03, 2026

Abstract:Zero-Shot Object Navigation in unknown environments poses significant challenges for Unmanned Aerial Vehicles (UAVs) due to the conflict between high-level semantic reasoning requirements and limited onboard computational resources. To address this, we present USS-Nav, a lightweight framework that incrementally constructs a Unified Spatio-Semantic scene graph and enables efficient Large Language Model (LLM)-augmented Zero-Shot Object Navigation in unknown environments. Specifically, we introduce an incremental Spatial Connectivity Graph generation method utilizing polyhedral expansion to capture global geometric topology, which is dynamically partitioned into semantic regions via graph clustering. Concurrently, open-vocabulary object semantics are instantiated and anchored to this topology to form a hierarchical environmental representation. Leveraging this hierarchical structure, we present a coarse-to-fine exploration strategy: an LLM grounded in the scene graph's semantics determines global target regions, while a local planner optimizes frontier coverage based on information gain. Experimental results demonstrate that our framework outperforms state-of-the-art methods in terms of computational efficiency and real-time update frequency (15 Hz) on a resource-constrained platform. Furthermore, ablation studies confirm the effectiveness of our framework, showing substantial improvements in Success weighted by Path Length (SPL). The source code will be made publicly available to foster further research.
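For readers unfamiliar with the SPL metric named in the abstract, the following is a minimal sketch of how Success weighted by Path Length is commonly computed in the embodied-navigation literature: each successful episode contributes the ratio of the shortest-path length to the larger of the shortest-path length and the path actually travelled, and the result is averaged over all episodes. The function name `spl` and its argument names are illustrative assumptions, not taken from the USS-Nav code, and the abstract does not state which evaluation protocol the authors use.

```python
from typing import Sequence


def spl(
    successes: Sequence[bool],
    shortest_lengths: Sequence[float],
    path_lengths: Sequence[float],
) -> float:
    """Success weighted by Path Length over a batch of episodes.

    Hypothetical helper for illustration only.
    successes[i]        -- whether episode i reached the target
    shortest_lengths[i] -- shortest-path length from start to target (> 0)
    path_lengths[i]     -- length of the path the agent actually travelled
    """
    n = len(successes)
    if n == 0 or n != len(shortest_lengths) or n != len(path_lengths):
        raise ValueError("inputs must be non-empty and of equal length")

    total = 0.0
    for success, shortest, travelled in zip(successes, shortest_lengths, path_lengths):
        if shortest <= 0:
            raise ValueError("shortest-path lengths must be positive")
        if success:
            # A successful episode scores 1.0 only if the agent took the
            # shortest path; longer paths are penalised proportionally.
            total += shortest / max(travelled, shortest)
        # Failed episodes contribute 0 regardless of path length.
    return total / n


if __name__ == "__main__":
    # Three episodes: optimal success, success with a 2x detour, failure.
    print(spl([True, True, False], [10.0, 10.0, 10.0], [10.0, 20.0, 5.0]))
```

Under this definition, a method can only raise its SPL by succeeding more often or by reaching targets along shorter paths, which is why the metric is often reported alongside raw success rate.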

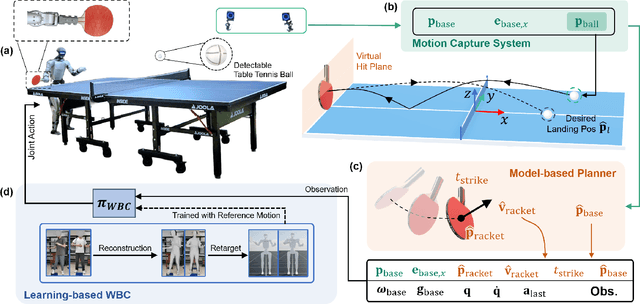

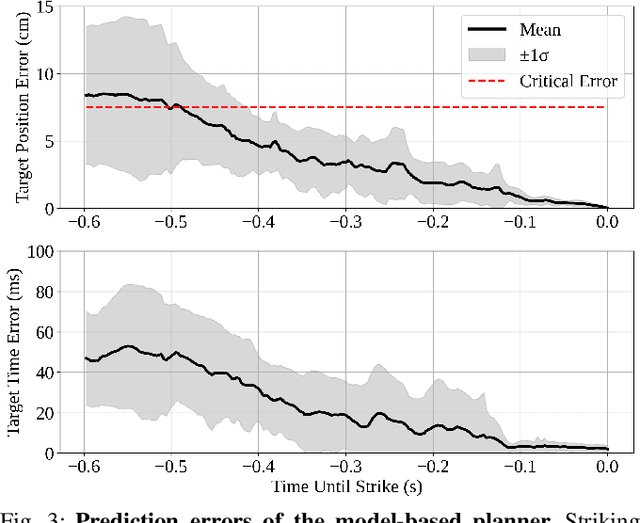

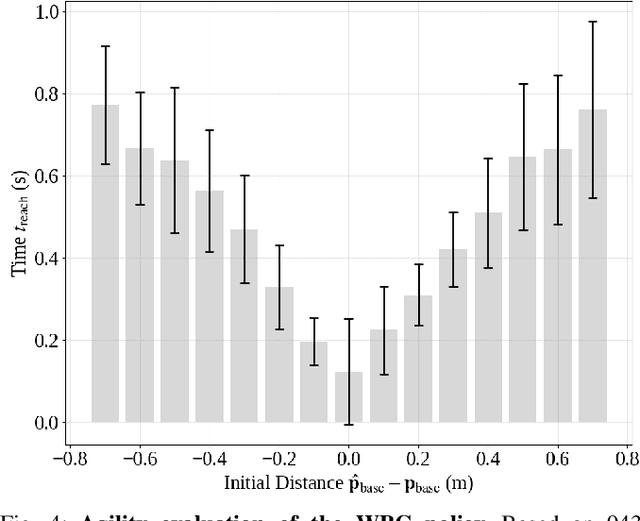

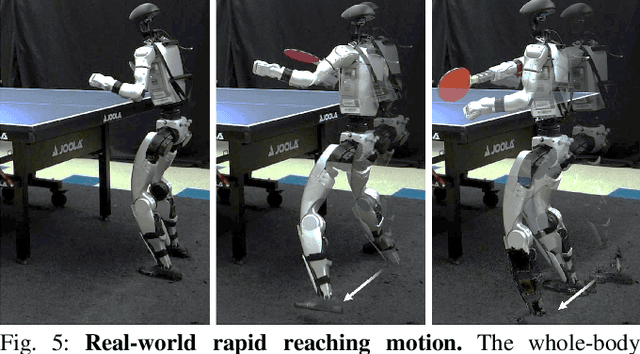

HITTER: A HumanoId Table TEnnis Robot via Hierarchical Planning and Learning

Aug 28, 2025

Abstract:Humanoid robots have recently achieved impressive progress in locomotion and whole-body control, yet they remain constrained in tasks that demand rapid interaction with dynamic environments through manipulation. Table tennis exemplifies such a challenge: with ball speeds exceeding 5 m/s, players must perceive, predict, and act within sub-second reaction times, requiring both agility and precision. To address this, we present a hierarchical framework for humanoid table tennis that integrates a model-based planner for ball trajectory prediction and racket target planning with a reinforcement learning-based whole-body controller. The planner determines striking position, velocity and timing, while the controller generates coordinated arm and leg motions that mimic human strikes and maintain stability and agility across consecutive rallies. Moreover, to encourage natural movements, human motion references are incorporated during training. We validate our system on a general-purpose humanoid robot, achieving up to 106 consecutive shots with a human opponent and sustained exchanges against another humanoid. These results demonstrate real-world humanoid table tennis with sub-second reactive control, marking a step toward agile and interactive humanoid behaviors.

Toward Real-World Cooperative and Competitive Soccer with Quadrupedal Robot Teams

May 20, 2025

Abstract:Achieving coordinated teamwork among legged robots requires both fine-grained locomotion control and long-horizon strategic decision-making. Robot soccer offers a compelling testbed for this challenge, combining dynamic, competitive, and multi-agent interactions. In this work, we present a hierarchical multi-agent reinforcement learning (MARL) framework that enables fully autonomous and decentralized quadruped robot soccer. First, a set of highly dynamic low-level skills is trained for legged locomotion and ball manipulation, such as walking, dribbling, and kicking. On top of these, a high-level strategic planning policy is trained with Multi-Agent Proximal Policy Optimization (MAPPO) via Fictitious Self-Play (FSP). This learning framework allows agents to adapt to diverse opponent strategies and gives rise to sophisticated team behaviors, including coordinated passing, interception, and dynamic role allocation. With an extensive ablation study, the proposed learning method shows significant advantages in the cooperative and competitive multi-agent soccer game. We deploy the learned policies to real quadruped robots relying solely on onboard proprioception and decentralized localization, with the resulting system supporting autonomous robot-robot and robot-human soccer matches on indoor and outdoor soccer courts.

LangWBC: Language-directed Humanoid Whole-Body Control via End-to-end Learning

Apr 30, 2025

Abstract:General-purpose humanoid robots are expected to interact intuitively with humans, enabling seamless integration into daily life. Natural language provides the most accessible medium for this purpose. However, translating language into humanoid whole-body motion remains a significant challenge, primarily due to the gap between linguistic understanding and physical actions. In this work, we present an end-to-end, language-directed policy for real-world humanoid whole-body control. Our approach combines reinforcement learning with policy distillation, allowing a single neural network to interpret language commands and execute corresponding physical actions directly. To enhance motion diversity and compositionality, we incorporate a Conditional Variational Autoencoder (CVAE) structure. The resulting policy achieves agile and versatile whole-body behaviors conditioned on language inputs, with smooth transitions between various motions, enabling adaptation to linguistic variations and the emergence of novel motions. We validate the efficacy and generalizability of our method through extensive simulations and real-world experiments, demonstrating robust whole-body control. Please see our website at LangWBC.github.io for more information.

Multi-Robot System for Cooperative Exploration in Unknown Environments: A Survey

Mar 10, 2025

Abstract:With the advancement of multi-robot technology, cooperative exploration tasks have garnered increasing attention. This paper presents a comprehensive review of multi-robot cooperative exploration systems. First, we review the evolution of robotic exploration and introduce a modular research framework tailored for multi-robot cooperative exploration. Based on this framework, we systematically categorize and summarize key system components. As a foundational module for multi-robot exploration, the localization and mapping module is primarily introduced by focusing on global and relative pose estimation, as well as multi-robot map merging techniques. The cooperative motion module is further divided into learning-based approaches and multi-stage planning, with the latter encompassing target generation, task allocation, and motion planning strategies. Given the communication constraints of real-world environments, we also analyze the communication module, emphasizing how robots exchange information within local communication ranges and under limited transmission capabilities. Finally, we discuss the challenges and future research directions for multi-robot cooperative exploration in light of real-world trends. This review aims to serve as a valuable reference for researchers and practitioners in the field.

Microsaccade-inspired Event Camera for Robotics

May 28, 2024

Abstract:Neuromorphic vision sensors or event cameras have made the visual perception of extremely low reaction time possible, opening new avenues for high-dynamic robotics applications. These event cameras' output is dependent on both motion and texture. However, the event camera fails to capture object edges that are parallel to the camera motion. This is a problem intrinsic to the sensor and therefore challenging to solve algorithmically. Human vision deals with perceptual fading using the active mechanism of small involuntary eye movements, the most prominent ones called microsaccades. By moving the eyes constantly and slightly during fixation, microsaccades can substantially maintain texture stability and persistence. Inspired by microsaccades, we designed an event-based perception system capable of simultaneously maintaining low reaction time and stable texture. In this design, a rotating wedge prism was mounted in front of the aperture of an event camera to redirect light and trigger events. The geometrical optics of the rotating wedge prism allows for algorithmic compensation of the additional rotational motion, resulting in a stable texture appearance and high informational output independent of external motion. The hardware device and software solution are integrated into a system, which we call Artificial MIcrosaccade-enhanced EVent camera (AMI-EV). Benchmark comparisons validate the superior data quality of AMI-EV recordings in scenarios where both standard cameras and event cameras fail to deliver. Various real-world experiments demonstrate the potential of the system to facilitate robotics perception both for low-level and high-level vision tasks.

GS-Planner: A Gaussian-Splatting-based Planning Framework for Active High-Fidelity Reconstruction

May 16, 2024

Abstract:Active reconstruction techniques enable robots to autonomously collect scene data for full coverage, relieving users of the tedious and time-consuming data-capturing process. However, because they rely on unsuitable scene representations, existing methods produce unrealistic reconstruction results or cannot evaluate quality online. Thanks to recent advances in explicit radiance field technology, online active high-fidelity reconstruction has become achievable. In this paper, we propose GS-Planner, a planning framework for active high-fidelity reconstruction using 3D Gaussian Splatting (3DGS). By extending 3DGS to recognize unobserved regions, we evaluate the reconstruction quality and completeness of the 3DGS map online to guide the robot. We then design a sampling-based active reconstruction strategy to explore the unobserved areas and improve the geometric and textural quality of the reconstruction. To establish a complete robot active reconstruction system, we choose a quadrotor as the robotic platform for its high agility, and we devise a safety constraint with 3DGS to generate executable trajectories for quadrotor navigation in the 3DGS map. To validate the effectiveness of our method, we conduct extensive experiments and ablation studies in highly realistic simulation scenes.

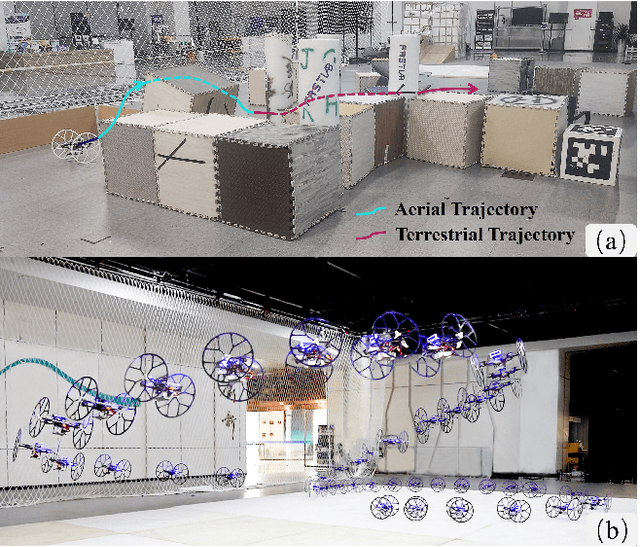

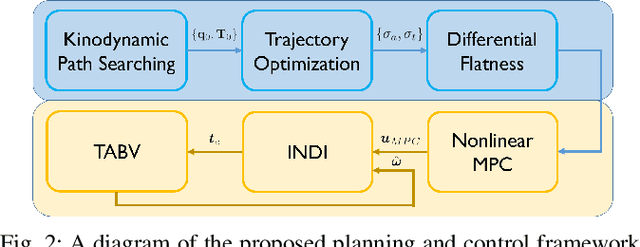

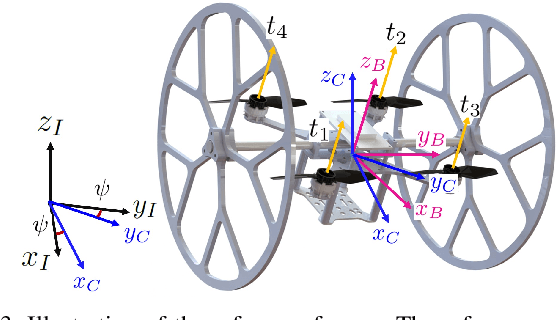

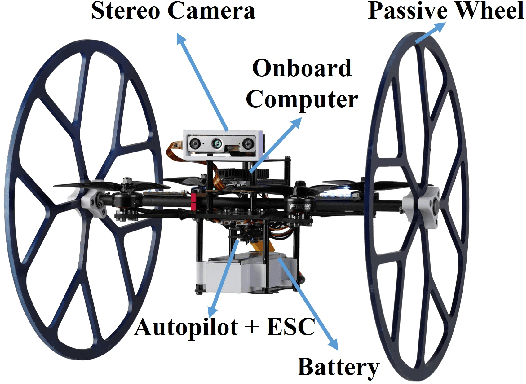

Model-Based Planning and Control for Terrestrial-Aerial Bimodal Vehicles with Passive Wheels

Mar 01, 2024

Abstract:Terrestrial-aerial bimodal vehicles have gained widespread attention due to their cross-domain maneuverability. Nevertheless, their bimodal dynamics significantly increase the complexity of motion planning and control, thus hindering robust and efficient autonomous navigation in unknown environments. To resolve this issue, we develop a model-based planning and control framework for terrestrial-aerial bimodal vehicles. This work begins by deriving a unified dynamic model and the corresponding differential flatness. Leveraging differential flatness, an optimization-based trajectory planner is proposed, which takes into account both solution quality and computational efficiency. Moreover, we design a tracking controller using nonlinear model predictive control based on the proposed unified dynamic model to achieve accurate trajectory tracking and smooth mode transition. We validate our framework through extensive benchmark comparisons and experiments, demonstrating its effectiveness in terms of planning quality and control performance.

Adaptive Tracking and Perching for Quadrotor in Dynamic Scenarios

Dec 19, 2023

Abstract:Perching on moving platforms is a promising solution to enhance the endurance and operational range of quadrotors, which could benefit the efficiency of a variety of air-ground cooperative tasks. To ensure robust perching, tracking with a steady relative state and reliable perception is a prerequisite. This paper presents an adaptive dynamic tracking and perching scheme for autonomous quadrotors to achieve tight integration with moving platforms. For reliable perception of dynamic targets, we introduce elastic visibility-aware planning to actively avoid occlusion and target loss. Additionally, we propose a flexible terminal adjustment method that adapts to changes in flight duration and the coupled terminal states, ensuring full-state synchronization with the time-varying perching surface at various angles. A relaxation strategy is developed by optimizing the tangential relative speed to address the dynamics and safety violations brought by hard boundary conditions. Moreover, we take SE(3) motion planning into account to ensure no collision between the quadrotor and the platform until the contact moment. Furthermore, we propose an efficient spatiotemporal trajectory optimization framework considering full state dynamics for tracking and perching. The proposed method is extensively tested through benchmark comparisons and ablation studies. To facilitate the application of academic research to industry and to validate the efficiency of our scheme under strictly limited computational resources, we deploy our system on a commercial drone (DJI-MAVIC3) with a full-size sport-utility vehicle (SUV). We conduct extensive real-world experiments, where the drone successfully tracks and perches at 30 km/h (8.3 m/s) on the top of the SUV, and at 3.5 m/s with a 60° incline into the trunk of the SUV.

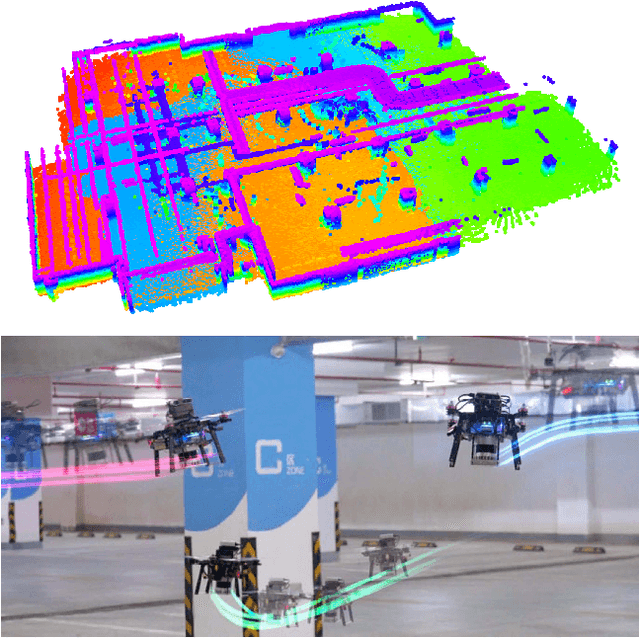

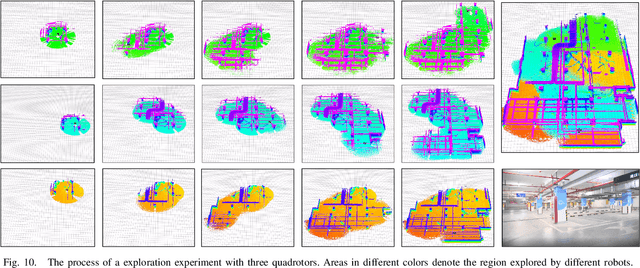

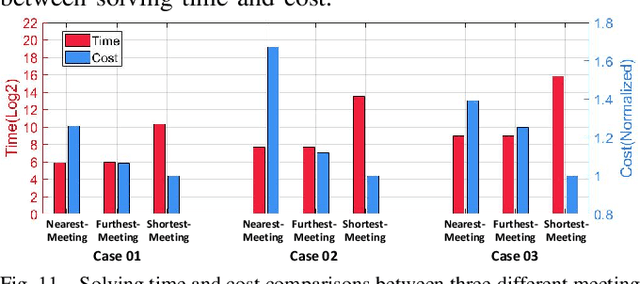

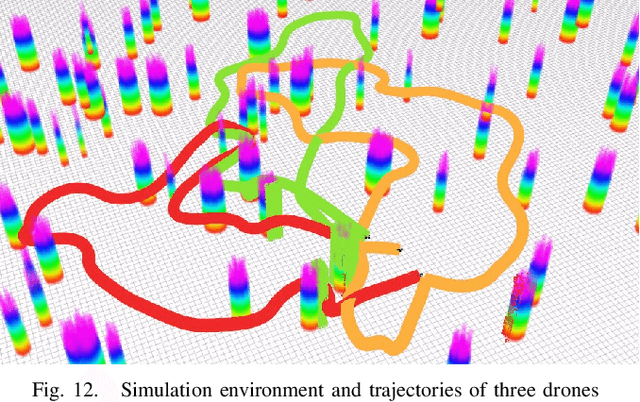

Meeting-Merging-Mission: A Multi-robot Coordinate Framework for Large-Scale Communication-Limited Exploration

Sep 16, 2021

Abstract:This letter presents a complete framework, Meeting-Merging-Mission, for multi-robot exploration under communication restrictions. Considering that communication is limited in both bandwidth and range in the real world, we propose a lightweight environment presentation method and an efficient cooperative exploration strategy. For lower bandwidth, each robot utilizes specific polytopes to maintain free space and super frontier information (SFI) as the source for exploration decision-making. To reduce repeated exploration, we develop a mission-based protocol that drives robots to share collected information in stable rendezvous. We also design a complete path planning scheme for both centralized and decentralized cases. To validate that our framework is practical and generic, we present an extensive benchmark and deploy our system into multi-UGV and multi-UAV platforms.
