Tiankai Yang

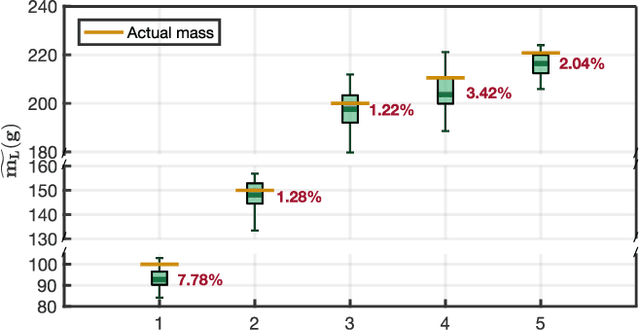

Ground-Effect-Aware Modeling and Control for Multicopters

Jun 24, 2025

Abstract:The ground effect on multicopters introduces several challenges, such as control errors caused by additional lift, oscillations that may occur during near-ground flight due to external torques, and the influence of ground airflow on models such as the rotor drag and the mixing matrix. This article collects and analyzes the dynamics data of near-ground multicopter flight through various methods, including force measurement platforms and real-world flights. For the first time, we summarize the mathematical model of the external torque of multicopters under ground effect. The influence of ground airflow on rotor drag and the mixing matrix is also verified through adequate experimentation and analysis. Through simplification and derivation, the differential flatness of the multicopter's dynamic model under ground effect is confirmed. To mitigate the influence of these disturbance models on control, we propose a control method that combines dynamic inverse and disturbance models, ensuring consistent control effectiveness at both high and low altitudes. In this method, the additional thrust and variations in rotor drag under ground effect are both considered and compensated through feedforward models. The leveling torque of ground effect can be equivalently represented as variations in the center of gravity and the moment of inertia. In this way, the leveling torque does not explicitly appear in the dynamic model. The final experimental results show that the method proposed in this paper reduces the control error (RMSE) by 45.3%. Please check the supplementary material at: https://github.com/ZJU-FAST-Lab/Ground-effect-controller.
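To make the idea of feedforward thrust compensation concrete, here is a minimal sketch. It does not reproduce the paper's model, which the abstract does not spell out. Instead it assumes the classical single-rotor Cheeseman-Bennett approximation, in which the ratio of in-ground-effect to out-of-ground-effect thrust is 1 / (1 - (R / (4z))^2) for rotor radius R and height z. The function names and the example numbers are hypothetical.

```python
def ground_effect_thrust_ratio(height_m: float, rotor_radius_m: float) -> float:
    """Cheeseman-Bennett ratio: thrust in ground effect / thrust out of ground effect.

    Assumption: classical single-rotor model, used only as a stand-in for the
    paper's own (unspecified here) ground-effect thrust model.
    """
    if height_m <= rotor_radius_m / 4.0:
        # The formula is singular at height = radius / 4 and meaningless below it.
        raise ValueError("height must exceed rotor_radius / 4 for this model")
    return 1.0 / (1.0 - (rotor_radius_m / (4.0 * height_m)) ** 2)


def feedforward_thrust_command(desired_thrust_n: float,
                               height_m: float,
                               rotor_radius_m: float) -> float:
    """Scale the commanded thrust down so the extra lift near the ground cancels out."""
    return desired_thrust_n / ground_effect_thrust_ratio(height_m, rotor_radius_m)


# Hypothetical numbers: a 0.1 m rotor hovering one rotor radius above the ground.
ratio = ground_effect_thrust_ratio(height_m=0.1, rotor_radius_m=0.1)
command = feedforward_thrust_command(10.0, height_m=0.1, rotor_radius_m=0.1)
```

Far from the ground the ratio tends to 1, so the same controller output is used at high altitude; near the ground the command is reduced by the predicted extra lift.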

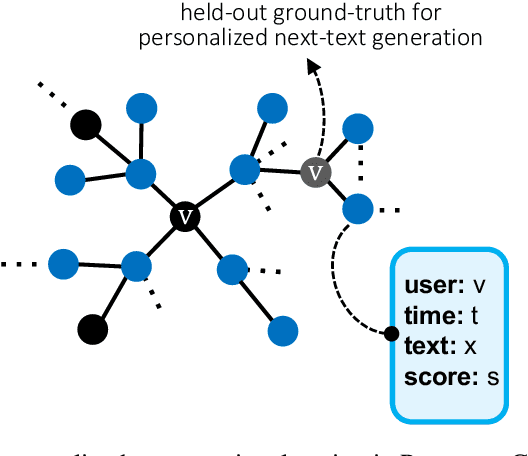

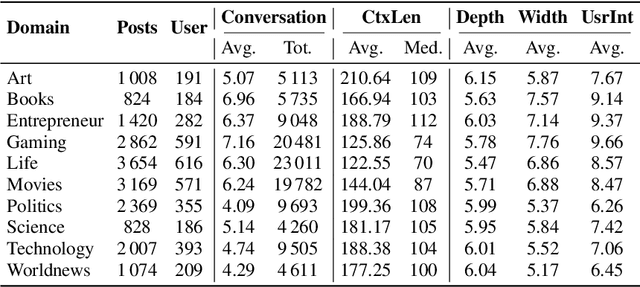

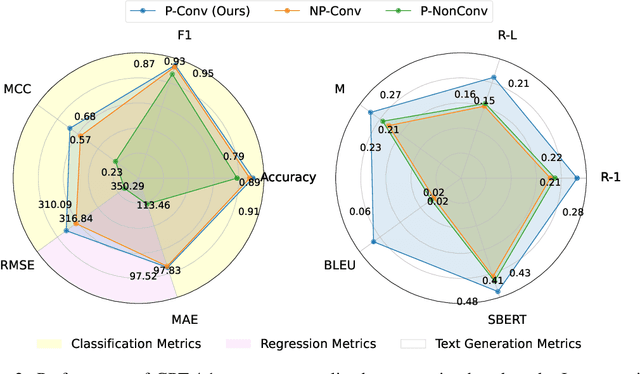

A Personalized Conversational Benchmark: Towards Simulating Personalized Conversations

May 20, 2025

Abstract:We present PersonaConvBench, a large-scale benchmark for evaluating personalized reasoning and generation in multi-turn conversations with large language models (LLMs). Unlike existing work that focuses on either personalization or conversational structure in isolation, PersonaConvBench integrates both, offering three core tasks: sentence classification, impact regression, and user-centric text generation across ten diverse Reddit-based domains. This design enables systematic analysis of how personalized conversational context shapes LLM outputs in realistic multi-user scenarios. We benchmark several commercial and open-source LLMs under a unified prompting setup and observe that incorporating personalized history yields substantial performance improvements, including a 198 percent relative gain over the best non-conversational baseline in sentiment classification. By releasing PersonaConvBench with evaluations and code, we aim to support research on LLMs that adapt to individual styles, track long-term context, and produce contextually rich, engaging responses.

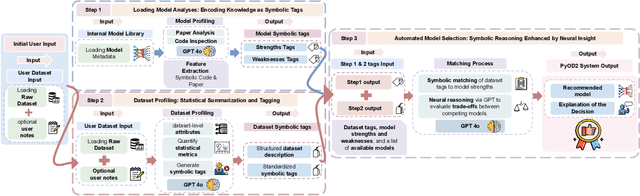

AD-AGENT: A Multi-agent Framework for End-to-end Anomaly Detection

May 19, 2025

Abstract:Anomaly detection (AD) is essential in areas such as fraud detection, network monitoring, and scientific research. However, the diversity of data modalities and the increasing number of specialized AD libraries pose challenges for non-expert users who lack in-depth library-specific knowledge and advanced programming skills. To tackle this, we present AD-AGENT, an LLM-driven multi-agent framework that turns natural-language instructions into fully executable AD pipelines. AD-AGENT coordinates specialized agents for intent parsing, data preparation, library and model selection, documentation mining, and iterative code generation and debugging. Using a shared short-term workspace and a long-term cache, the agents integrate popular AD libraries like PyOD, PyGOD, and TSLib into a unified workflow. Experiments demonstrate that AD-AGENT produces reliable scripts and recommends competitive models across libraries. The system is open-sourced to support further research and practical applications in AD.

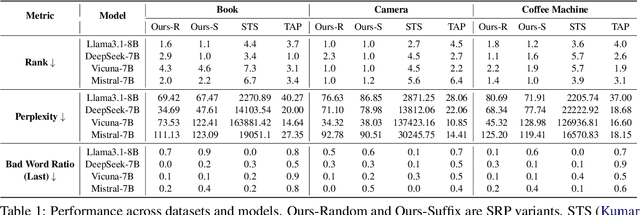

StealthRank: LLM Ranking Manipulation via Stealthy Prompt Optimization

Apr 08, 2025

Abstract:The integration of large language models (LLMs) into information retrieval systems introduces new attack surfaces, particularly for adversarial ranking manipulations. We present StealthRank, a novel adversarial ranking attack that manipulates LLM-driven product recommendation systems while maintaining textual fluency and stealth. Unlike existing methods that often introduce detectable anomalies, StealthRank employs an energy-based optimization framework combined with Langevin dynamics to generate StealthRank Prompts (SRPs): adversarial text sequences embedded within product descriptions that subtly yet effectively influence LLM ranking mechanisms. We evaluate StealthRank across multiple LLMs, demonstrating its ability to covertly boost the ranking of target products while avoiding explicit manipulation traces that can be easily detected. Our results show that StealthRank consistently outperforms state-of-the-art adversarial ranking baselines in both effectiveness and stealth, highlighting critical vulnerabilities in LLM-driven recommendation systems.
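For readers unfamiliar with Langevin dynamics, the sketch below shows the generic update rule on a toy problem: a gradient step on an energy function plus Gaussian noise scaled by the step size and temperature. It is not the StealthRank attack. The quadratic energy, the 2-D state, and all parameter values are assumptions chosen for illustration, whereas the paper applies the idea to text sequences.

```python
import math
import random

# Assumption: a toy quadratic energy with its minimum at TARGET.
TARGET = (1.0, 2.0)


def energy(x):
    """E(x) = 0.5 * ||x - TARGET||^2."""
    return 0.5 * sum((xi - ti) ** 2 for xi, ti in zip(x, TARGET))


def grad_energy(x):
    """Gradient of the quadratic energy: x - TARGET."""
    return [xi - ti for xi, ti in zip(x, TARGET)]


def langevin_sample(x0, step=0.1, temperature=0.01, n_steps=200, seed=0):
    """Unadjusted Langevin update: x <- x - step * grad E(x) + sqrt(2 * step * T) * noise."""
    rng = random.Random(seed)
    noise_scale = math.sqrt(2.0 * step * temperature)
    x = list(x0)
    for _ in range(n_steps):
        g = grad_energy(x)
        x = [xi - step * gi + noise_scale * rng.gauss(0.0, 1.0)
             for xi, gi in zip(x, g)]
    return x


start = [5.0, -5.0]
final = langevin_sample(start)
print(energy(start), energy(final))
```

The noise term keeps the iterate exploring low-energy regions instead of collapsing to a single point, which is the property energy-based text optimizers rely on to find fluent, low-energy candidates.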

Efficient Model Selection for Time Series Forecasting via LLMs

Apr 02, 2025

Abstract:Model selection is a critical step in time series forecasting, traditionally requiring extensive performance evaluations across various datasets. Meta-learning approaches aim to automate this process, but they typically depend on pre-constructed performance matrices, which are costly to build. In this work, we propose to leverage Large Language Models (LLMs) as a lightweight alternative for model selection. Our method eliminates the need for explicit performance matrices by utilizing the inherent knowledge and reasoning capabilities of LLMs. Through extensive experiments with LLaMA, GPT and Gemini, we demonstrate that our approach outperforms traditional meta-learning techniques and heuristic baselines, while significantly reducing computational overhead. These findings underscore the potential of LLMs in efficient model selection for time series forecasting.

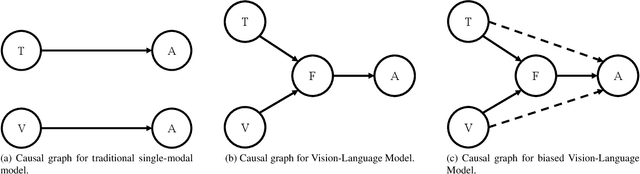

Treble Counterfactual VLMs: A Causal Approach to Hallucination

Mar 08, 2025

Abstract:Vision-Language Models (VLMs) have advanced multi-modal tasks like image captioning, visual question answering, and reasoning. However, they often generate hallucinated outputs inconsistent with the visual context or prompt, limiting reliability in critical applications like autonomous driving and medical imaging. Existing studies link hallucination to statistical biases, language priors, and biased feature learning but lack a structured causal understanding. In this work, we introduce a causal perspective to analyze and mitigate hallucination in VLMs. We hypothesize that hallucination arises from unintended direct influences of either the vision or text modality, bypassing proper multi-modal fusion. To address this, we construct a causal graph for VLMs and employ counterfactual analysis to estimate the Natural Direct Effect (NDE) of vision, text, and their cross-modal interaction on the output. We systematically identify and mitigate these unintended direct effects to ensure that responses are primarily driven by genuine multi-modal fusion. Our approach consists of three steps: (1) designing structural causal graphs to distinguish correct fusion pathways from spurious modality shortcuts, (2) estimating modality-specific and cross-modal NDE using perturbed image representations, hallucinated text embeddings, and degraded visual inputs, and (3) implementing a test-time intervention module to dynamically adjust the model's dependence on each modality. Experimental results demonstrate that our method significantly reduces hallucination while preserving task performance, providing a robust and interpretable framework for improving VLM reliability. To enhance accessibility and reproducibility, our code is publicly available at https://github.com/TREE985/Treble-Counterfactual-VLMs.
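The Natural Direct Effect mentioned above can be illustrated on a toy structural causal model. The sketch assumes a linear model with one input `x`, one mediator `m` (standing in for the fused representation), and an outcome `y`; the coefficients and function names are hypothetical and unrelated to the paper's actual causal graph. The NDE switches the input from a reference value to a new value while holding the mediator at the level it would take under the reference.

```python
def mediator(x, a=2.0):
    """Toy mediator: m = a * x (a stand-in for a fused representation)."""
    return a * x


def outcome(x, m, c=3.0, b=0.5):
    """Toy outcome: y = c * x (direct path) + b * m (mediated path)."""
    return c * x + b * m


def natural_direct_effect(x, x_ref):
    """NDE = Y(x, M(x_ref)) - Y(x_ref, M(x_ref)): the mediator is frozen at its reference value."""
    m_ref = mediator(x_ref)
    return outcome(x, m_ref) - outcome(x_ref, m_ref)


def total_effect(x, x_ref):
    """TE = Y(x, M(x)) - Y(x_ref, M(x_ref)): both paths respond to the change."""
    return outcome(x, mediator(x)) - outcome(x_ref, mediator(x_ref))


nde = natural_direct_effect(1.0, 0.0)
te = total_effect(1.0, 0.0)
```

In this linear toy the NDE equals the direct coefficient times the input change, and the gap between total effect and NDE is the part carried by the mediator; a large NDE relative to the total effect would correspond to a modality shortcut that bypasses fusion.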

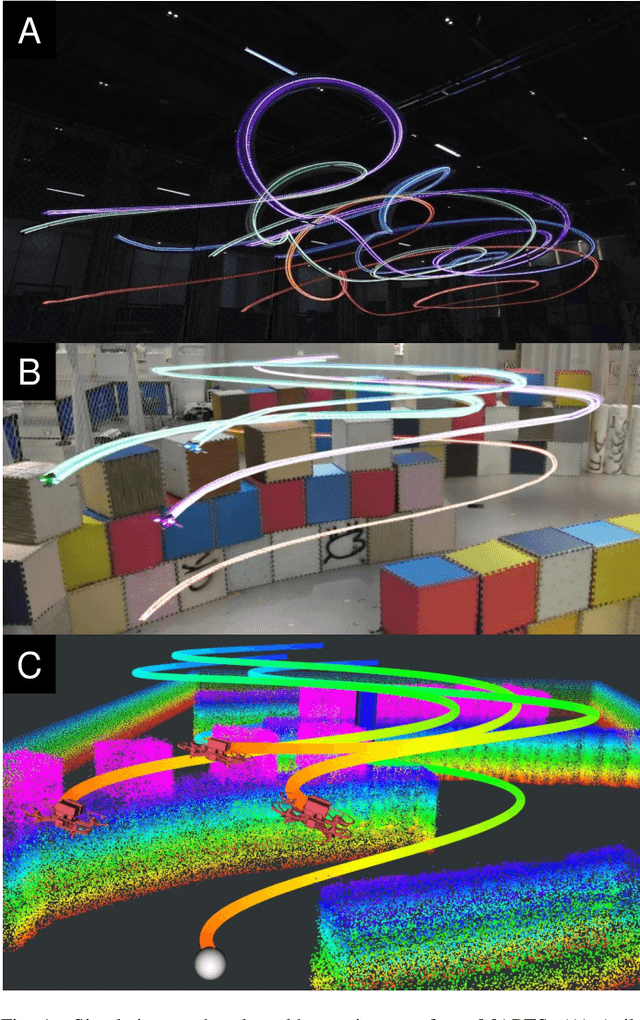

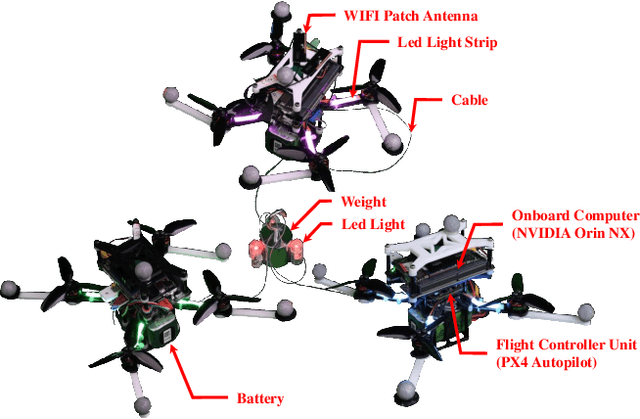

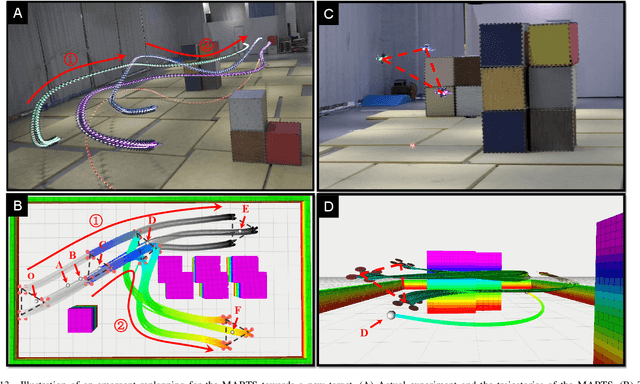

Safe and Agile Transportation of Cable-Suspended Payload via Multiple Aerial Robots

Jan 25, 2025

Abstract:Transporting a heavy payload using multiple aerial robots (MARs) is an efficient way to extend the load capacity of a single aerial robot. However, existing schemes for the multiple aerial robots transportation system (MARTS) still lack the capability to generate a collision-free and dynamically feasible trajectory in real time and further track an agile trajectory, especially when there are no sensors available to measure the states of payload and cable. Therefore, they are limited to low-agility transportation in simple environments. To bridge the gap, we propose complete planning and control schemes for the MARTS, achieving safe and agile aerial transportation (SAAT) of a cable-suspended payload in complex environments. Flatness maps for the aerial robot considering the complete kinematical constraint and the dynamical coupling between each aerial robot and payload are derived. To improve the responsiveness for the generation of the safe, dynamically feasible, and agile trajectory in complex environments, a real-time spatio-temporal trajectory planning scheme is proposed for the MARTS. Besides, we break away from the reliance on the state measurement for both the payload and cable, as well as the closed-loop control for the payload, and propose a fully distributed control scheme to track the agile trajectory that is robust against imprecise payload mass and non-point mass payload. The proposed schemes are extensively validated through benchmark comparisons, ablation studies, and simulations. Finally, extensive real-world experiments are conducted on a MARTS integrated by three aerial robots with onboard computers and sensors. The results validate the efficiency and robustness of our proposed schemes for SAAT in complex environments.

AD-LLM: Benchmarking Large Language Models for Anomaly Detection

Dec 15, 2024

Abstract:Anomaly detection (AD) is an important machine learning task with many real-world uses, including fraud detection, medical diagnosis, and industrial monitoring. Within natural language processing (NLP), AD helps detect issues like spam, misinformation, and unusual user activity. Although large language models (LLMs) have had a strong impact on tasks such as text generation and summarization, their potential in AD has not been studied enough. This paper introduces AD-LLM, the first benchmark that evaluates how LLMs can help with NLP anomaly detection. We examine three key tasks: (i) zero-shot detection, using LLMs' pre-trained knowledge to perform AD without task-specific training; (ii) data augmentation, generating synthetic data and category descriptions to improve AD models; and (iii) model selection, using LLMs to suggest unsupervised AD models. Through experiments with different datasets, we find that LLMs can work well in zero-shot AD, that carefully designed augmentation methods are useful, and that explaining model selection for specific datasets remains challenging. Based on these results, we outline six future research directions on LLMs for AD.

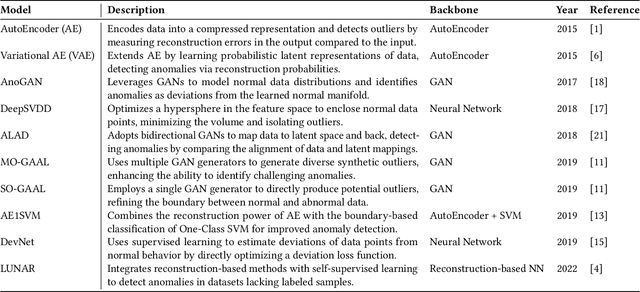

PyOD 2: A Python Library for Outlier Detection with LLM-powered Model Selection

Dec 11, 2024

Abstract:Outlier detection (OD), also known as anomaly detection, is a critical machine learning (ML) task with applications in fraud detection, network intrusion detection, clickstream analysis, recommendation systems, and social network moderation. Among open-source libraries for outlier detection, the Python Outlier Detection (PyOD) library is the most widely adopted, with over 8,500 GitHub stars, 25 million downloads, and diverse industry usage. However, PyOD currently faces three limitations: (1) insufficient coverage of modern deep learning algorithms, (2) fragmented implementations across PyTorch and TensorFlow, and (3) no automated model selection, making it hard for non-experts. To address these issues, we present PyOD Version 2 (PyOD 2), which integrates 12 state-of-the-art deep learning models into a unified PyTorch framework and introduces a large language model (LLM)-based pipeline for automated OD model selection. These improvements simplify OD workflows, provide access to 45 algorithms, and deliver robust performance on various datasets. In this paper, we demonstrate how PyOD 2 streamlines the deployment and automation of OD models and sets a new standard in both research and industry. PyOD 2 is accessible at [https://github.com/yzhao062/pyod](https://github.com/yzhao062/pyod). This study aligns with the Web Mining and Content Analysis track, addressing topics such as the robustness of Web mining methods and the quality of algorithmically-generated Web data.

NLP-ADBench: NLP Anomaly Detection Benchmark

Dec 06, 2024

Abstract:Anomaly detection (AD) is a critical machine learning task with diverse applications in web systems, including fraud detection, content moderation, and user behavior analysis. Despite its significance, AD in natural language processing (NLP) remains underexplored, limiting advancements in detecting anomalies in text data such as harmful content, phishing attempts, or spam reviews. In this paper, we introduce NLP-ADBench, the most comprehensive benchmark for NLP anomaly detection (NLP-AD), comprising eight curated datasets and evaluations of nineteen state-of-the-art algorithms. These include three end-to-end methods and sixteen two-step algorithms that apply traditional anomaly detection techniques to language embeddings generated by bert-base-uncased and OpenAI's text-embedding-3-large models. Our results reveal critical insights and future directions for NLP-AD. Notably, no single model excels across all datasets, highlighting the need for automated model selection. Moreover, two-step methods leveraging transformer-based embeddings consistently outperform specialized end-to-end approaches, with OpenAI embeddings demonstrating superior performance over BERT embeddings. By releasing NLP-ADBench at https://github.com/USC-FORTIS/NLP-ADBench, we provide a standardized framework for evaluating NLP-AD methods, fostering the development of innovative approaches. This work fills a crucial gap in the field and establishes a foundation for advancing NLP anomaly detection, particularly in the context of improving the safety and reliability of web-based systems.
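The two-step recipe described above (embed the text, then run a traditional detector on the embeddings) can be sketched as follows. This is an illustration only, not the benchmark's code: the embeddings are hand-written 2-D stand-ins for real bert-base-uncased or OpenAI vectors, and the detector is a simple k-nearest-neighbor distance score chosen for brevity.

```python
import math


def knn_outlier_scores(embeddings, k=2):
    """Score each point by the distance to its k-th nearest neighbor (larger = more anomalous)."""
    scores = []
    for i, point in enumerate(embeddings):
        dists = sorted(math.dist(point, other)
                       for j, other in enumerate(embeddings) if j != i)
        scores.append(dists[k - 1])
    return scores


# Assumption: toy 2-D "embeddings"; step one of the pipeline would normally
# produce high-dimensional vectors from a language model.
embeddings = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (0.1, 0.1), (5.0, 5.0)]

scores = knn_outlier_scores(embeddings, k=2)
most_anomalous = max(range(len(scores)), key=scores.__getitem__)
print(most_anomalous, scores)
```

Because the detector only sees vectors, swapping the embedding model or the scoring rule changes one step without touching the other, which is what makes the two-step family easy to benchmark across many combinations.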
