Haojia Li

FlyCo: Foundation Model-Empowered Drones for Autonomous 3D Structure Scanning in Open-World Environments

Jan 12, 2026

Abstract:Autonomous 3D scanning of open-world target structures via drones remains challenging despite broad applications. Existing paradigms rely on restrictive assumptions or effortful human priors, limiting practicality, efficiency, and adaptability. Recent foundation models (FMs) offer great potential to bridge this gap. This paper investigates a critical research problem: What system architecture can effectively integrate FM knowledge for this task? We answer it with FlyCo, a principled FM-empowered perception-prediction-planning loop enabling fully autonomous, prompt-driven 3D target scanning in diverse unknown open-world environments. FlyCo directly translates low-effort human prompts (text, visual annotations) into precise adaptive scanning flights via three coordinated stages: (1) perception fuses streaming sensor data with vision-language FMs for robust target grounding and tracking; (2) prediction distills FM knowledge and combines multi-modal cues to infer the partially observed target's complete geometry; (3) planning leverages predictive foresight to generate efficient and safe paths with comprehensive target coverage. Building on this, we further design key components to boost open-world target grounding efficiency and robustness, enhance prediction quality in terms of shape accuracy, zero-shot generalization, and temporal stability, and balance long-horizon flight efficiency with real-time computability and online collision avoidance. Extensive challenging real-world and simulation experiments show FlyCo delivers precise scene understanding, high efficiency, and real-time safety, outperforming existing paradigms with lower human effort and verifying the proposed architecture's practicality. Comprehensive ablations validate each component's contribution. FlyCo also serves as a flexible, extensible blueprint, readily leveraging future FM and robotics advances. Code will be released.

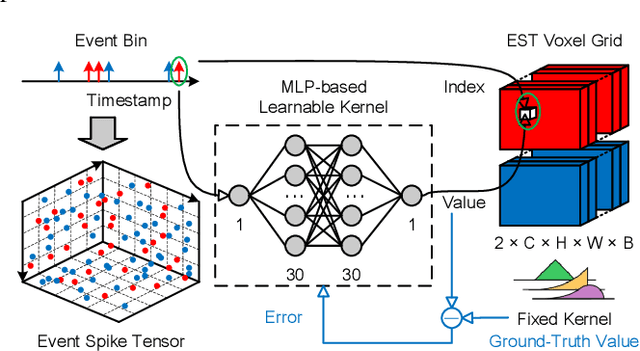

Microsaccade-inspired Event Camera for Robotics

May 28, 2024

Abstract:Neuromorphic vision sensors or event cameras have made the visual perception of extremely low reaction time possible, opening new avenues for high-dynamic robotics applications. These event cameras' output is dependent on both motion and texture. However, the event camera fails to capture object edges that are parallel to the camera motion. This is a problem intrinsic to the sensor and therefore challenging to solve algorithmically. Human vision deals with perceptual fading using the active mechanism of small involuntary eye movements, the most prominent ones called microsaccades. By moving the eyes constantly and slightly during fixation, microsaccades can substantially maintain texture stability and persistence. Inspired by microsaccades, we designed an event-based perception system capable of simultaneously maintaining low reaction time and stable texture. In this design, a rotating wedge prism was mounted in front of the aperture of an event camera to redirect light and trigger events. The geometrical optics of the rotating wedge prism allows for algorithmic compensation of the additional rotational motion, resulting in a stable texture appearance and high informational output independent of external motion. The hardware device and software solution are integrated into a system, which we call Artificial MIcrosaccade-enhanced EVent camera (AMI-EV). Benchmark comparisons validate the superior data quality of AMI-EV recordings in scenarios where both standard cameras and event cameras fail to deliver. Various real-world experiments demonstrate the potential of the system to facilitate robotics perception both for low-level and high-level vision tasks.
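
As a rough illustration of the compensation idea in this abstract, the sketch below assumes a strongly simplified model that is not taken from the paper: the rotating wedge prism shifts the whole image along a circle of constant radius `radius_px` (in pixels) at constant angular velocity `omega` (rad/s). The function names, the event tuple layout `(x, y, t, polarity)`, and all parameters are hypothetical; a real system would need a calibrated optical model.

```python
import math


def prism_offset(t, radius_px, omega, phase=0.0):
    """Image shift at time t under the ASSUMED circular-shift model."""
    theta = omega * t + phase
    return radius_px * math.cos(theta), radius_px * math.sin(theta)


def compensate_events(events, radius_px, omega, phase=0.0):
    """Subtract the assumed prism-induced shift from each (x, y, t, polarity) event."""
    compensated = []
    for x, y, t, polarity in events:
        dx, dy = prism_offset(t, radius_px, omega, phase)
        compensated.append((x - dx, y - dy, t, polarity))
    return compensated


def simulate_static_point(cx, cy, radius_px, omega, times):
    """Hypothetical test data: a static scene point seen through the rotating prism."""
    events = []
    for t in times:
        dx, dy = prism_offset(t, radius_px, omega)
        events.append((cx + dx, cy + dy, t, 1))
    return events


if __name__ == "__main__":
    times = [i * 0.001 for i in range(10)]
    raw = simulate_static_point(100.0, 50.0, radius_px=5.0, omega=2 * math.pi * 30, times=times)
    for x, y, t, _ in compensate_events(raw, radius_px=5.0, omega=2 * math.pi * 30):
        print(f"t={t:.3f}s -> ({x:.2f}, {y:.2f})")
```

Under this assumed model, a static point traces a circle in the raw event stream, and subtracting the known shift maps every event back to one location, which is the sense in which texture appears stable.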

AutoTrans: A Complete Planning and Control Framework for Autonomous UAV Payload Transportation

Oct 23, 2023

Abstract:The robotics community is increasingly interested in autonomous aerial transportation. Unmanned aerial vehicles with suspended payloads have advantages over other systems, including mechanical simplicity and agility, but pose great challenges in planning and control. To realize fully autonomous aerial transportation, this paper presents a systematic solution to address these difficulties. First, we present a real-time planning method that generates smooth trajectories considering the time-varying shape and non-linear dynamics of the system, ensuring whole-body safety and dynamic feasibility. Additionally, an adaptive NMPC with a hierarchical disturbance compensation strategy is designed to overcome unknown external perturbations and inaccurate model parameters. Extensive experiments show that our method is capable of generating high-quality trajectories online, even in highly constrained environments, and tracking aggressive flight trajectories accurately, even under significant uncertainty. We plan to release our code to benefit the community.

FC-Planner: A Skeleton-guided Planning Framework for Fast Aerial Coverage of Complex 3D Scenes

Sep 25, 2023

Abstract:3D coverage path planning for UAVs is a crucial problem in diverse practical applications. However, existing methods have shown unsatisfactory system simplicity, computation efficiency, and path quality in large and complex scenes. To address these challenges, we propose FC-Planner, a skeleton-guided planning framework that can achieve fast aerial coverage of complex 3D scenes without pre-processing. We decompose the scene into several simple subspaces by a skeleton-based space decomposition (SSD). Additionally, the skeleton guides us to effortlessly determine free space. We utilize the skeleton to efficiently generate a minimal set of specialized and informative viewpoints for complete coverage. Based on SSD, a hierarchical planner effectively divides the large planning problem into independent sub-problems, enabling parallel planning for each subspace. The carefully designed global and local planning strategies are then incorporated to guarantee both high quality and efficiency in path generation. We conduct extensive benchmark and real-world tests, where FC-Planner computes over 10 times faster than state-of-the-art methods, with shorter paths and more complete coverage. The source code will be made available at https://github.com/HKUST-Aerial-Robotics/FC-Planner.

PredRecon: A Prediction-boosted Planning Framework for Fast and High-quality Autonomous Aerial Reconstruction

Feb 09, 2023

Abstract:Autonomous UAV path planning for 3D reconstruction has been actively studied in various applications for high-quality 3D models. However, most existing works have adopted explore-then-exploit, prior-based or exploration-based strategies, demonstrating inefficiency with repeated flight and low autonomy. In this paper, we propose PredRecon, a prediction-boosted planning framework that can autonomously generate paths for high 3D reconstruction quality. We draw inspiration from the fact that humans can roughly infer the complete structure of a construction from partial observation. Hence, we devise a surface prediction module (SPM) to predict the coarse complete surfaces of the target from the current partial reconstruction. Then, online volumetric mapping produces the uncovered surfaces that await observation by the UAV. Lastly, a hierarchical planner plans motions for 3D reconstruction, which sequentially finds efficient global coverage paths, plans local paths for maximizing the performance of Multi-View Stereo (MVS), and generates smooth trajectories for image-pose pair acquisition. We conduct benchmarks in a realistic simulator, which validate the performance of PredRecon compared with classical and state-of-the-art methods. The open-source code is released at https://github.com/HKUST-Aerial-Robotics/PredRecon.

FAST-Dynamic-Vision: Detection and Tracking Dynamic Objects with Event and Depth Sensing

Mar 11, 2021

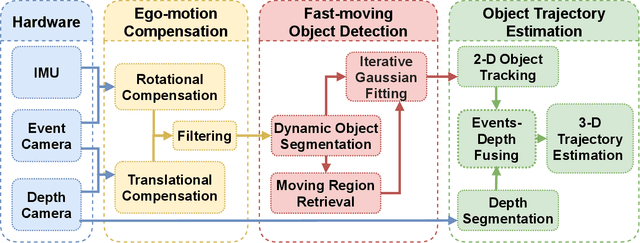

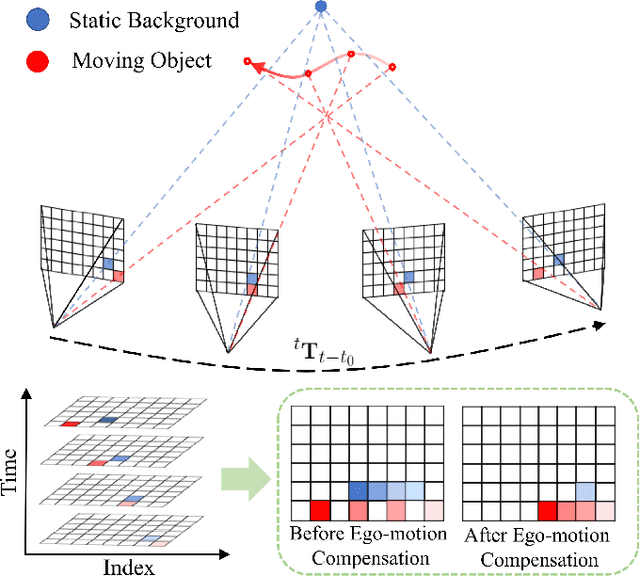

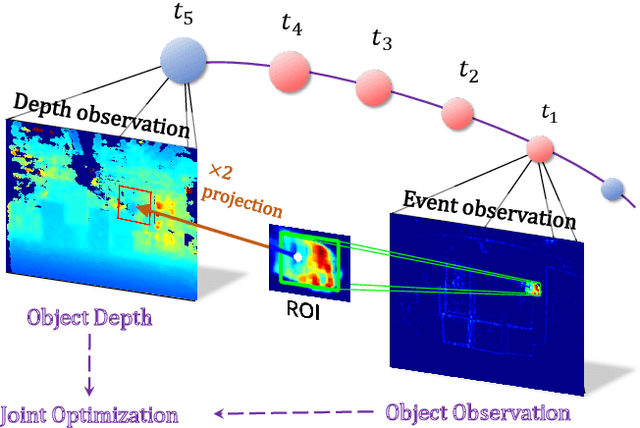

Abstract:The development of aerial autonomy has enabled aerial robots to fly agilely in complex environments. However, dodging fast-moving objects in flight remains a challenge, limiting the further application of unmanned aerial vehicles (UAVs). The bottleneck in solving this problem is the accurate perception of rapid dynamic objects. Recently, event cameras have shown great potential in solving this problem. This paper presents a complete perception system including ego-motion compensation, object detection, and trajectory prediction for fast-moving dynamic objects with low latency and high precision. Firstly, we propose an accurate ego-motion compensation algorithm by considering both rotational and translational motion for more robust object detection. Then, for dynamic object detection, an event camera-based efficient regression algorithm is designed. Finally, we propose an optimization-based approach that asynchronously fuses event and depth cameras for trajectory prediction. Extensive real-world experiments and benchmarks are performed to validate our framework. Moreover, our code will be released to benefit related research.

Event-VPR: End-to-End Weakly Supervised Network Architecture for Event-based Visual Place Recognition

Nov 06, 2020

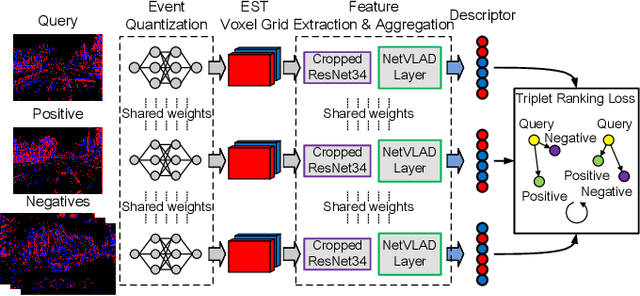

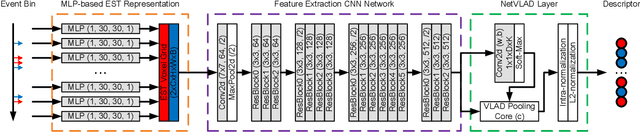

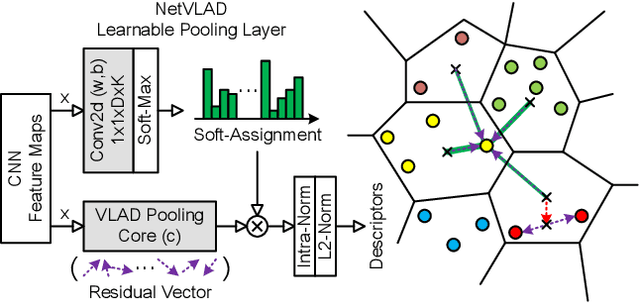

Abstract:Traditional visual place recognition (VPR) methods generally use frame-based cameras, which are prone to failure under dramatic illumination changes or fast motion. In this paper, we propose an end-to-end visual place recognition network for event cameras, which can achieve good place recognition performance in challenging environments. The key idea of the proposed algorithm is first to characterize the event streams with the EST voxel grid, then to extract features using a convolution network, and finally to aggregate features using an improved VLAD network to realize end-to-end visual place recognition using event streams. To verify the effectiveness of the proposed algorithm, we compare the proposed method with classical VPR methods on the event-based driving datasets (MVSEC, DDD17) and the synthetic datasets (Oxford RobotCar). Experimental results show that the proposed method can achieve much better performance in challenging scenarios. To our knowledge, this is the first end-to-end event-based VPR method. The accompanying source code is available at https://github.com/kongdelei/Event-VPR.
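
To make the first stage of this pipeline more concrete, the sketch below shows one common way to turn an event stream into a voxel grid. It does not reproduce the EST representation named in the abstract; it swaps in a simpler fixed bilinear kernel along the time axis. The function name, the `(x, y, t, polarity)` event layout, and the nested-list grid are assumptions made for illustration only.

```python
def events_to_voxel_grid(events, num_bins, height, width):
    """Accumulate (x, y, t, polarity) events into a num_bins x height x width grid.

    Each event's polarity is split between its two nearest temporal bins
    (a fixed bilinear kernel, used here as a stand-in for a learned one).
    """
    grid = [[[0.0] * width for _ in range(height)] for _ in range(num_bins)]
    if not events:
        return grid

    t_start = min(e[2] for e in events)
    t_end = max(e[2] for e in events)
    span = (t_end - t_start) or 1.0  # avoid division by zero for a single timestamp

    for x, y, t, polarity in events:
        # Map the timestamp to a continuous bin coordinate in [0, num_bins - 1].
        t_norm = (t - t_start) / span * (num_bins - 1)
        lower = int(t_norm)
        frac = t_norm - lower
        grid[lower][y][x] += polarity * (1.0 - frac)
        if lower + 1 < num_bins:
            grid[lower + 1][y][x] += polarity * frac
    return grid


if __name__ == "__main__":
    sample = [(0, 0, 0.0, 1), (1, 1, 0.25, 1), (0, 1, 0.5, 1), (1, 0, 1.0, -1)]
    for b, plane in enumerate(events_to_voxel_grid(sample, num_bins=3, height=2, width=2)):
        print(b, plane)
```

A grid of this kind can be fed to a convolutional feature extractor as a multi-channel image, with the temporal bins acting as channels.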
