Qianli Dong

R-VoxelMap: Accurate Voxel Mapping with Recursive Plane Fitting for Online LiDAR Odometry

Jan 18, 2026

Abstract:This paper proposes R-VoxelMap, a novel voxel mapping method that constructs accurate voxel maps using a geometry-driven recursive plane fitting strategy to enhance the localization accuracy of online LiDAR odometry. VoxelMap and its variants typically fit and check planes using all points in a voxel, which may lead to plane parameter deviation caused by outliers, over-segmentation of large planes, and incorrect merging across different physical planes. To address these issues, R-VoxelMap utilizes a geometry-driven recursive construction strategy based on an outlier detect-and-reuse pipeline. Specifically, for each voxel, accurate planes are first fitted while separating outliers using random sample consensus (RANSAC). The remaining outliers are then propagated to deeper octree levels for recursive processing, ensuring a detailed representation of the environment. In addition, a point distribution-based validity check algorithm is devised to prevent erroneous plane merging. Extensive experiments on diverse open-source LiDAR(-inertial) simultaneous localization and mapping (SLAM) datasets validate that our method achieves higher accuracy than other state-of-the-art approaches, with comparable efficiency and memory usage. Code will be available on GitHub.
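The detect-and-reuse idea in the abstract above (fit a plane with RANSAC, then hand the outliers to deeper octree levels) can be illustrated with a minimal sketch. This is not the authors' implementation: the function names `ransac_plane` and `build_voxel`, the distance threshold, the iteration count, the minimum point count, and the dictionary-based node layout are all assumptions chosen for illustration, and the point distribution-based validity check is omitted.

```python
import random
from typing import List, Optional, Tuple

Point = Tuple[float, float, float]
Plane = Tuple[Tuple[float, float, float], float]  # (unit normal, offset d)


def plane_from_points(a: Point, b: Point, c: Point) -> Optional[Plane]:
    """Plane through three points, or None if they are (nearly) collinear."""
    u = (b[0] - a[0], b[1] - a[1], b[2] - a[2])
    v = (c[0] - a[0], c[1] - a[1], c[2] - a[2])
    n = (u[1] * v[2] - u[2] * v[1], u[2] * v[0] - u[0] * v[2], u[0] * v[1] - u[1] * v[0])
    norm = (n[0] ** 2 + n[1] ** 2 + n[2] ** 2) ** 0.5
    if norm < 1e-9:
        return None
    n = (n[0] / norm, n[1] / norm, n[2] / norm)
    return n, -(n[0] * a[0] + n[1] * a[1] + n[2] * a[2])


def ransac_plane(points: List[Point], rng: random.Random,
                 threshold: float = 0.05, iterations: int = 100):
    """Return (plane, inliers, outliers) for the best plane found (hypothetical parameters)."""
    if len(points) < 3:
        return None, [], list(points)
    best_plane, best_mask = None, [False] * len(points)
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue
        n, d = plane
        mask = [abs(n[0] * p[0] + n[1] * p[1] + n[2] * p[2] + d) <= threshold for p in points]
        if sum(mask) > sum(best_mask):
            best_plane, best_mask = plane, mask
    inliers = [p for p, m in zip(points, best_mask) if m]
    outliers = [p for p, m in zip(points, best_mask) if not m]
    return best_plane, inliers, outliers


def build_voxel(points: List[Point], center: Point, half: float, rng: random.Random,
                depth: int = 0, max_depth: int = 3, min_points: int = 10) -> dict:
    """Fit a plane in this voxel, then push the outliers into child octants."""
    node = {"plane": None, "inliers": [], "children": []}
    if len(points) < min_points:
        return node
    plane, inliers, outliers = ransac_plane(points, rng)
    if plane is not None and len(inliers) >= min_points:
        node["plane"], node["inliers"] = plane, inliers
    else:
        outliers = points  # no acceptable plane here: reuse every point one level deeper
    if depth < max_depth and outliers:
        buckets = {}
        for p in outliers:
            key = (p[0] >= center[0], p[1] >= center[1], p[2] >= center[2])
            buckets.setdefault(key, []).append(p)
        for key, pts in buckets.items():
            child_center = tuple(c + (half / 2 if k else -half / 2) for c, k in zip(center, key))
            node["children"].append(
                build_voxel(pts, child_center, half / 2, rng, depth + 1, max_depth, min_points))
    return node
```

In this sketch a voxel containing a large ground plane and a small wall patch keeps the ground plane at the root, and the wall points are fitted one octree level down instead of distorting the root plane.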

Dora: QoE-Aware Hybrid Parallelism for Distributed Edge AI

Dec 09, 2025

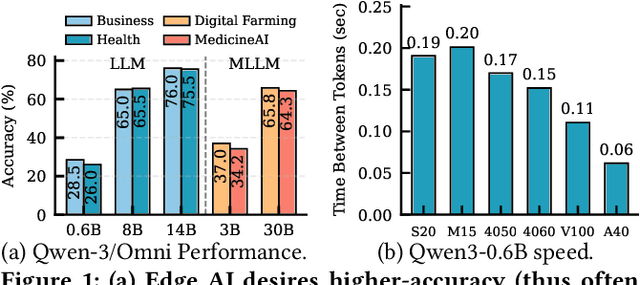

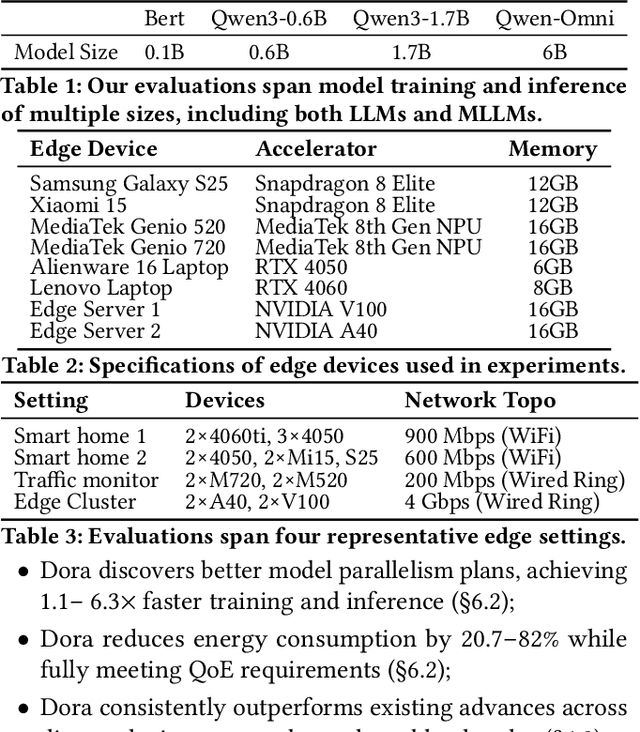

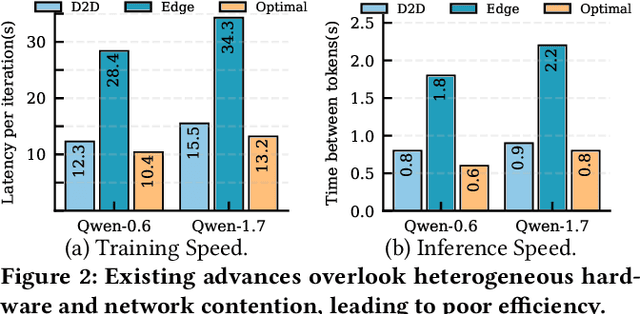

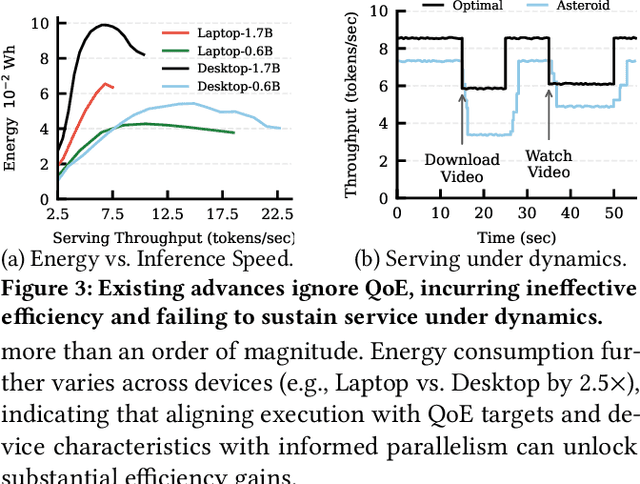

Abstract:With the proliferation of edge AI applications, satisfying user quality of experience (QoE) requirements, such as model inference latency, has become a first-class objective, as these models operate in resource-constrained settings and directly interact with users. Yet, modern AI models routinely exceed the resource capacity of individual devices, necessitating distributed execution across heterogeneous devices over variable and contention-prone networks. Existing planners for hybrid (e.g., data and pipeline) parallelism largely optimize for throughput or device utilization, overlooking QoE, leading to severe resource inefficiency (e.g., unnecessary energy drain) or QoE violations under runtime dynamics. We present Dora, a framework for QoE-aware hybrid parallelism in distributed edge AI training and inference. Dora jointly optimizes heterogeneous computation, contention-prone networks, and multi-dimensional QoE objectives via three key mechanisms: (i) a heterogeneity-aware model partitioner that determines and assigns model partitions across devices, forming a compact set of QoE-compliant plans; (ii) a contention-aware network scheduler that further refines these candidate plans by maximizing compute-communication overlap; and (iii) a runtime adapter that adaptively composes multiple plans to maximize global efficiency while respecting overall QoEs. Across representative edge deployments, including smart homes, traffic analytics, and small edge clusters, Dora achieves 1.1 to 6.3 times faster execution and, alternatively, reduces energy consumption by 21 to 82 percent, all while maintaining QoE under runtime dynamics.

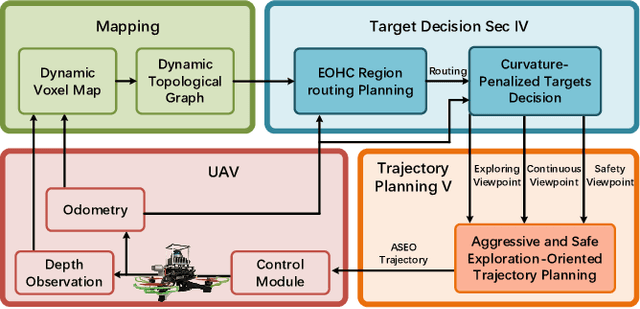

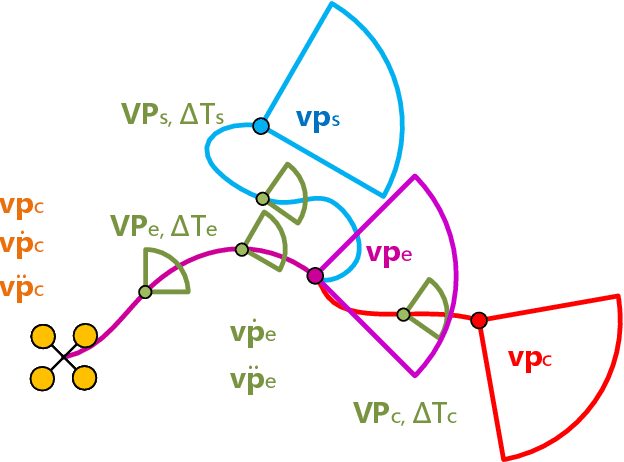

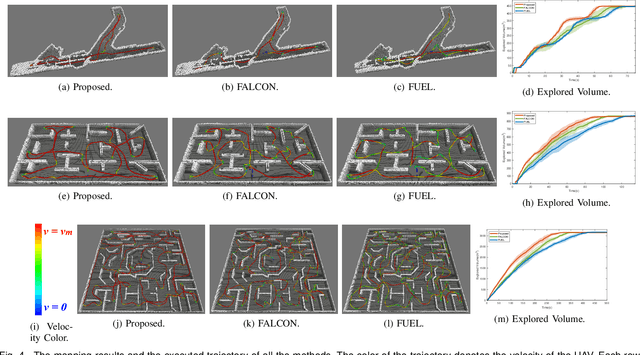

EDEN: Efficient Dual-Layer Exploration Planning for Fast UAV Autonomous Exploration in Large 3-D Environments

Jun 05, 2025

Abstract:Efficient autonomous exploration in large-scale environments remains challenging due to the high planning computational cost and low-speed maneuvers. In this paper, we propose a fast and computationally efficient dual-layer exploration planning method. The insight of our dual-layer method is efficiently finding an acceptable long-term region routing and greedily exploring the target in the region of the first routing area with high speed. Specifically, the proposed method finds the long-term area routing through an approximate algorithm to ensure real-time planning in large-scale environments. Then, the viewpoint in the first routing region with the lowest curvature-penalized cost, which can effectively reduce decelerations caused by sharp turn motions, will be chosen as the next exploration target. To further speed up the exploration, we adopt an aggressive and safe exploration-oriented trajectory to enhance exploration continuity. The proposed method is compared to state-of-the-art methods in challenging simulation environments. The results show that the proposed method outperforms other methods in terms of exploration efficiency, computational cost, and trajectory speed. We also conduct real-world experiments to validate the effectiveness of the proposed method. The code will be open-sourced.
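The abstract above does not give the form of the curvature-penalized cost, so the following is only a hypothetical illustration of the idea: score each candidate viewpoint by its distance plus a penalty on the turn it demands relative to the current velocity, then pick the cheapest. The function names, the 2-D simplification, the additive cost, and `turn_weight` are all assumptions, not the paper's formulation.

```python
import math
from typing import Sequence, Tuple

Vec2 = Tuple[float, float]


def curvature_penalized_cost(position: Vec2, velocity: Vec2, viewpoint: Vec2,
                             turn_weight: float = 2.0) -> float:
    """Hypothetical cost: straight-line distance plus a weighted turning angle (radians)."""
    dx, dy = viewpoint[0] - position[0], viewpoint[1] - position[1]
    distance = math.hypot(dx, dy)
    speed = math.hypot(velocity[0], velocity[1])
    if distance == 0.0 or speed == 0.0:
        return distance  # no heading to compare against, so no turn penalty
    cos_turn = (dx * velocity[0] + dy * velocity[1]) / (distance * speed)
    turn = math.acos(max(-1.0, min(1.0, cos_turn)))  # clamp against rounding error
    return distance + turn_weight * turn


def select_viewpoint(position: Vec2, velocity: Vec2, viewpoints: Sequence[Vec2],
                     turn_weight: float = 2.0) -> Vec2:
    """Pick the candidate viewpoint with the lowest penalized cost."""
    return min(viewpoints,
               key=lambda v: curvature_penalized_cost(position, velocity, v, turn_weight))
```

With a vehicle at the origin flying along +x, a viewpoint 3 m ahead beats one 1 m directly behind under this cost, because reaching the nearer one would require a full reversal.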

FSMP: A Frontier-Sampling-Mixed Planner for Fast Autonomous Exploration of Complex and Large 3-D Environments

Feb 28, 2025

Abstract:In this paper, we propose a systematic framework for fast exploration of complex and large 3-D environments using micro aerial vehicles (MAVs). The key insight is the organic integration of the frontier-based and sampling-based strategies that can achieve rapid global exploration of the environment. Specifically, a field-of-view-based (FOV) frontier detector with the guarantee of completeness and soundness is devised for identifying 3-D map frontiers. Different from random sampling-based methods, the deterministic sampling technique is employed to build and maintain an incremental road map based on the recorded sensor FOVs and newly detected frontiers. With the resulting road map, we propose a two-stage path planner. First, it quickly computes the global optimal exploration path on the road map using the lazy evaluation strategy. Then, the best exploration path is smoothed for further improving the exploration efficiency. We validate the proposed method both in simulation and real-world experiments. The comparative results demonstrate the promising performance of our planner in terms of exploration efficiency, computational time, and explored volume.

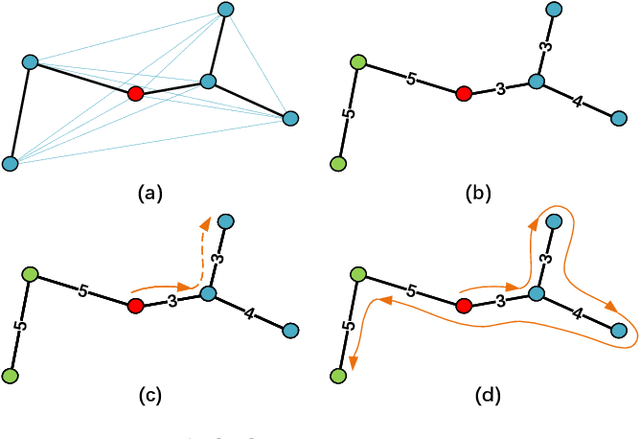

Fast and Communication-Efficient Multi-UAV Exploration Via Voronoi Partition on Dynamic Topological Graph

Aug 11, 2024

Abstract:Efficient data transmission and reasonable task allocation are important to improve multi-robot exploration efficiency. However, most communication data types typically contain redundant information and thus require massive communication volume. Moreover, exploration-oriented task allocation is far from trivial and becomes even more challenging for resource-limited unmanned aerial vehicles (UAVs). In this paper, we propose a fast and communication-efficient multi-UAV exploration method for exploring large environments. We first design a multi-robot dynamic topological graph (MR-DTG) consisting of nodes representing the explored and exploring regions and edges connecting nodes. Supported by MR-DTG, our method achieves efficient communication by only transferring the necessary information required by exploration planning. To further improve the exploration efficiency, a hierarchical multi-UAV exploration method is devised using MR-DTG. Specifically, the graph Voronoi partition is used to allocate MR-DTG's nodes to the closest UAVs, considering the actual motion cost, thus achieving reasonable task allocation. To our knowledge, this is the first work to address multi-UAV exploration using graph Voronoi partition. The proposed method is compared with a state-of-the-art method in simulations. The results show that the proposed method is able to reduce the exploration time and communication volume by up to 38.3% and 95.5%, respectively. Finally, the effectiveness of our method is validated in a real-world experiment with 6 UAVs. We will release the source code to benefit the community.
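A graph Voronoi partition of the kind named in the abstract above can be computed with a multi-source Dijkstra search: every UAV starts expanding from its own node, and each graph node is claimed by whichever UAV reaches it at the lowest path cost. The sketch below is a generic version of that technique, not the paper's code; the adjacency-list format, the function name `graph_voronoi_partition`, and the use of edge weights as a stand-in for "actual motion cost" are assumptions.

```python
import heapq
from typing import Dict, List, Tuple

# Adjacency list: node -> [(neighbor, travel cost), ...]; costs must be non-negative.
Graph = Dict[str, List[Tuple[str, float]]]


def graph_voronoi_partition(graph: Graph, uav_nodes: Dict[str, str]):
    """Assign every reachable node to the UAV with the smallest path cost.

    uav_nodes maps a UAV name to the graph node it currently occupies.
    Returns (owner, dist): the owning UAV and the path cost for each node.
    Ties are broken by UAV name because of the heap's tuple ordering.
    """
    owner: Dict[str, str] = {}
    dist: Dict[str, float] = {}
    heap = [(0.0, uav, node) for uav, node in uav_nodes.items()]
    heapq.heapify(heap)
    while heap:
        d, uav, node = heapq.heappop(heap)
        if node in dist:
            continue  # already claimed at an equal or lower cost
        dist[node], owner[node] = d, uav
        for neighbor, cost in graph.get(node, []):
            if neighbor not in dist:
                heapq.heappush(heap, (d + cost, uav, neighbor))
    return owner, dist
```

For a chain A-B-C-D-E with a costly C-D edge and UAVs at A and E, node C falls to the UAV at A even though it sits in the middle of the chain, which is the sense in which the partition follows path cost instead of hop count.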

FAST-Dynamic-Vision: Detection and Tracking Dynamic Objects with Event and Depth Sensing

Mar 11, 2021

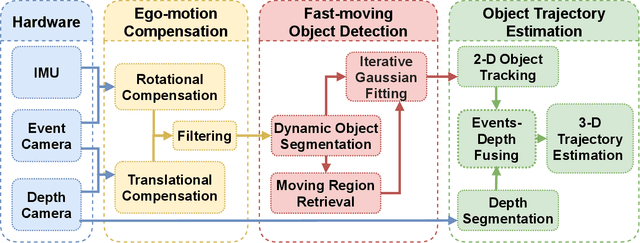

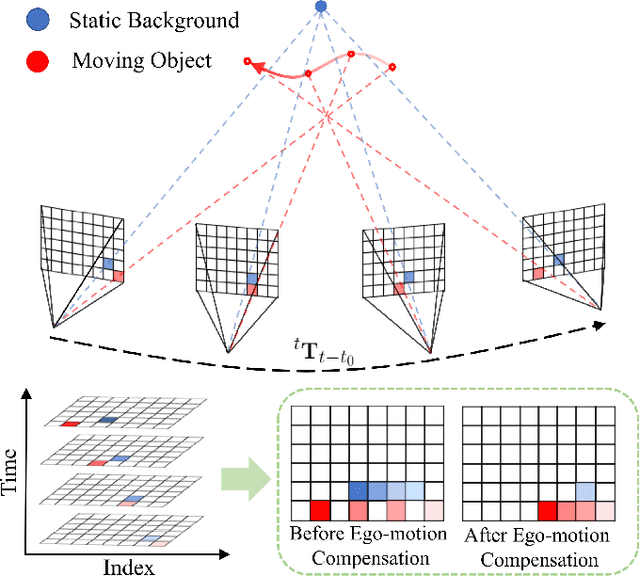

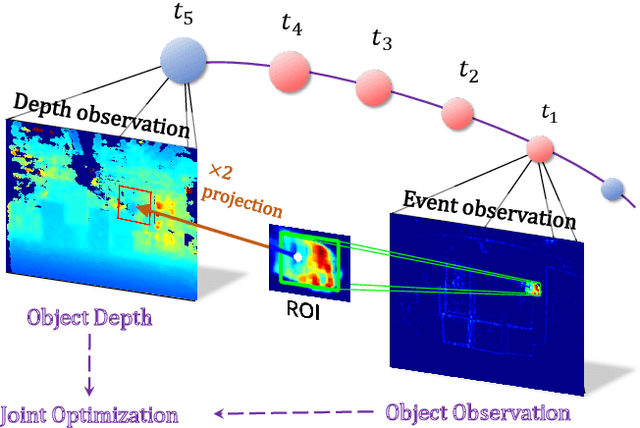

Abstract:The development of aerial autonomy has enabled aerial robots to fly agilely in complex environments. However, dodging fast-moving objects in flight remains a challenge, limiting the further application of unmanned aerial vehicles (UAVs). The bottleneck in solving this problem is the accurate perception of rapid dynamic objects. Recently, event cameras have shown great potential in solving this problem. This paper presents a complete perception system including ego-motion compensation, object detection, and trajectory prediction for fast-moving dynamic objects with low latency and high precision. First, we propose an accurate ego-motion compensation algorithm that considers both rotational and translational motion for more robust object detection. Then, for dynamic object detection, an efficient event camera-based regression algorithm is designed. Finally, we propose an optimization-based approach that asynchronously fuses event and depth cameras for trajectory prediction. Extensive real-world experiments and benchmarks are performed to validate our framework. Moreover, our code will be released to benefit related research.
