Kuanxu Hou

Spike-EVPR: Deep Spiking Residual Network with Cross-Representation Aggregation for Event-Based Visual Place Recognition

Feb 16, 2024

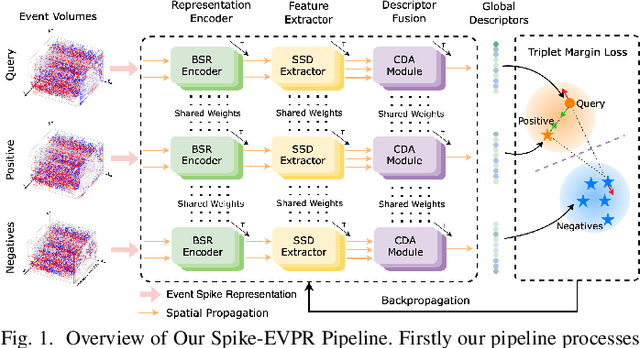

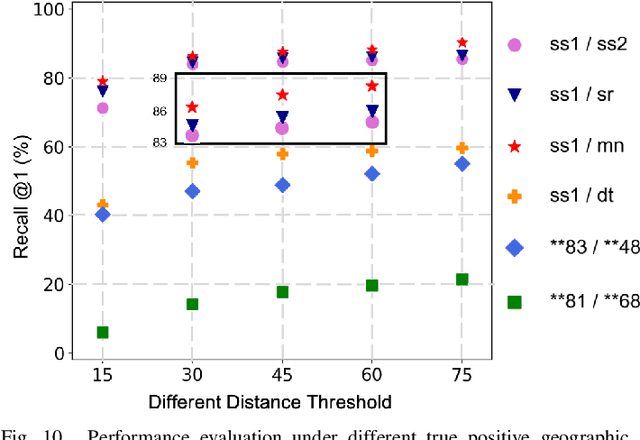

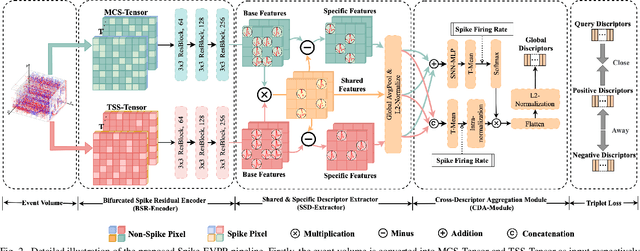

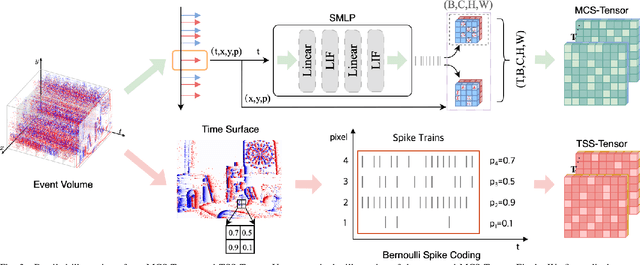

Abstract: Event cameras have been successfully applied to visual place recognition (VPR) tasks by using deep artificial neural networks (ANNs) in recent years. However, previously proposed deep ANN architectures are often unable to harness the abundant temporal information present in event streams. In contrast, deep spiking networks exhibit more intricate spatiotemporal dynamics and are inherently well-suited to processing sparse asynchronous event streams. Unfortunately, directly feeding temporally dense event volumes into a spiking network introduces excessive time steps, resulting in prohibitively high training costs for large-scale VPR tasks. To address these issues, we propose a novel deep spiking network architecture called Spike-EVPR for event-based VPR tasks. First, we introduce two novel event representations tailored for SNNs to fully exploit the spatiotemporal information in the event streams and to reduce GPU memory usage during training as much as possible. Then, to exploit the full potential of these two representations, we construct a Bifurcated Spike Residual Encoder (BSR-Encoder) with powerful representational capabilities to better extract high-level features from the two event representations. Next, we introduce a Shared & Specific Descriptor Extractor (SSD-Extractor). This module is designed to extract features shared between the two representations and features specific to each. Finally, we propose a Cross-Descriptor Aggregation Module (CDA-Module) that fuses the above three features to generate a refined, robust global descriptor of the scene. Our experimental results indicate the superior performance of our Spike-EVPR compared to several existing EVPR pipelines on the Brisbane-Event-VPR and DDD20 datasets, with the average Recall@1 increased by 7.61% on Brisbane and 13.20% on DDD20.
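The Recall@1 figures quoted above refer to a standard retrieval metric: the fraction of queries whose nearest database descriptor is a true match. A minimal sketch of how such a metric is commonly computed is shown below; the function name `recall_at_k`, the use of Euclidean distance, and the `positives` format are illustrative assumptions, not the paper's evaluation code.

```python
import numpy as np

def recall_at_k(query_desc, db_desc, positives, k=1):
    """Fraction of queries whose top-k retrieved database entries contain a true match.

    query_desc: (Q, D) array of query descriptors (hypothetical input format).
    db_desc:    (N, D) array of database descriptors.
    positives:  list of Q lists; positives[i] holds the database indices that
                count as correct matches for query i (assumed ground truth).
    """
    # Squared Euclidean distance between every query and every database entry.
    d2 = ((query_desc[:, None, :] - db_desc[None, :, :]) ** 2).sum(axis=2)
    # Indices of the k closest database entries for each query.
    topk = np.argsort(d2, axis=1)[:, :k]
    hits = [
        len(set(topk[i].tolist()) & set(positives[i])) > 0
        for i in range(len(query_desc))
    ]
    return float(np.mean(hits))
```

With `k=1` this reduces to checking whether the single nearest neighbour is a correct place match.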

EV-MGRFlowNet: Motion-Guided Recurrent Network for Unsupervised Event-based Optical Flow with Hybrid Motion-Compensation Loss

May 13, 2023

Abstract: Event cameras offer promising properties, such as high temporal resolution and high dynamic range. These benefits have been exploited in many machine vision tasks, especially optical flow estimation. Currently, most existing event-based works use deep learning to estimate optical flow. However, their networks have not fully exploited prior hidden states and motion flows. Additionally, their supervision strategy has not fully leveraged the geometric constraints of event data to unlock the potential of networks. In this paper, we propose EV-MGRFlowNet, an unsupervised event-based optical flow estimation pipeline with motion-guided recurrent networks using a hybrid motion-compensation loss. First, we propose a feature-enhanced recurrent encoder network (FERE-Net) which fully utilizes prior hidden states to obtain multi-level motion features. Then, we propose a flow-guided decoder network (FGD-Net) to integrate prior motion flows. Finally, we design a hybrid motion-compensation loss (HMC-Loss) to strengthen geometric constraints for more accurate alignment of events. Experimental results show that our method outperforms the current state-of-the-art (SOTA) method on the MVSEC dataset, with an average reduction of approximately 22.71% in average endpoint error (AEE). To our knowledge, our method ranks first among unsupervised learning-based methods.
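The average endpoint error (AEE) mentioned above is the mean Euclidean distance between predicted and ground-truth flow vectors. The sketch below illustrates the metric under stated assumptions: the function name, the `(H, W, 2)` array layout, and the optional validity mask (event-based evaluations often restrict the average to pixels with events) are hypothetical choices, not the authors' evaluation script.

```python
import numpy as np

def average_endpoint_error(flow_pred, flow_gt, mask=None):
    """Mean Euclidean distance between predicted and ground-truth flow vectors.

    flow_pred, flow_gt: (H, W, 2) arrays of per-pixel (u, v) flow (assumed layout).
    mask: optional (H, W) boolean array selecting the pixels to evaluate.
    """
    # Per-pixel endpoint error: length of the difference vector.
    epe = np.linalg.norm(flow_pred - flow_gt, axis=-1)
    if mask is not None:
        epe = epe[mask]
    return float(epe.mean())
```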

FE-Fusion-VPR: Attention-based Multi-Scale Network Architecture for Visual Place Recognition by Fusing Frames and Events

Nov 23, 2022

Abstract: Traditional visual place recognition (VPR), which usually relies on standard cameras, is prone to failure under glare or high-speed motion. By contrast, event cameras have the advantages of low latency, high temporal resolution, and high dynamic range, which can deal with the above issues. Nevertheless, event cameras are prone to failure in weakly textured or motionless scenes, while standard cameras can still provide appearance information in this case. Thus, exploiting the complementarity of standard cameras and event cameras can effectively improve the performance of VPR algorithms. In this paper, we propose FE-Fusion-VPR, an attention-based multi-scale network architecture for VPR by fusing frames and events. First, the intensity frame and event volume are fed into the two-stream feature extraction network for shallow feature fusion. Next, the three-scale features are obtained through the multi-scale fusion network and aggregated into three sub-descriptors using the VLAD layer. Finally, the weight of each sub-descriptor is learned through the descriptor re-weighting network to obtain the final refined descriptor. Experimental results show that on the Brisbane-Event-VPR and DDD20 datasets, the Recall@1 of our FE-Fusion-VPR is 29.26% and 33.59% higher than Event-VPR and Ensemble-EventVPR, and is 7.00% and 14.15% higher than MultiRes-NetVLAD and NetVLAD. To our knowledge, this is the first end-to-end network that goes beyond the existing event-based and frame-based SOTA methods to fuse frames and events directly for VPR.
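The VLAD aggregation step mentioned above turns a set of local features into one fixed-length descriptor by summing each feature's residual to its nearest cluster centre. The following is a minimal sketch of classical hard-assignment VLAD, not the learnable VLAD layer used in the paper; the function name `vlad` and the normalization steps (per-cluster, then global L2) are assumptions chosen for illustration.

```python
import numpy as np

def vlad(features, centers):
    """Classical hard-assignment VLAD descriptor.

    features: (N, D) local features; centers: (K, D) cluster centres.
    Returns a (K * D,) L2-normalized descriptor.
    """
    # Assign each feature to its nearest centre.
    d2 = ((features[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    assign = d2.argmin(axis=1)
    K, D = centers.shape
    v = np.zeros((K, D))
    for k in range(K):
        members = features[assign == k]
        if len(members):
            # Sum of residuals between the members and their centre.
            v[k] = (members - centers[k]).sum(axis=0)
    # Per-cluster (intra) normalization, then global L2 normalization.
    v = v / np.maximum(np.linalg.norm(v, axis=1, keepdims=True), 1e-12)
    v = v.ravel()
    return v / max(np.linalg.norm(v), 1e-12)
```

In a multi-scale setting like the one described, such an aggregation would be applied once per scale, and the resulting sub-descriptors weighted and combined.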

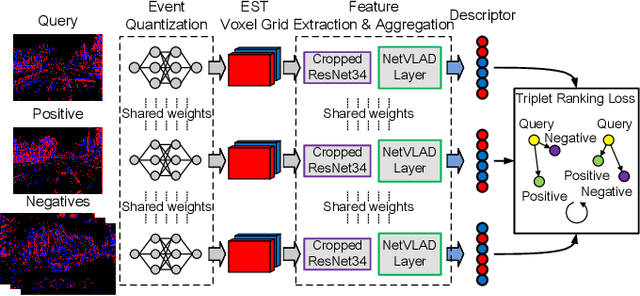

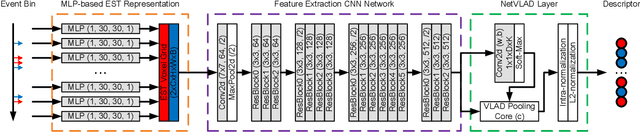

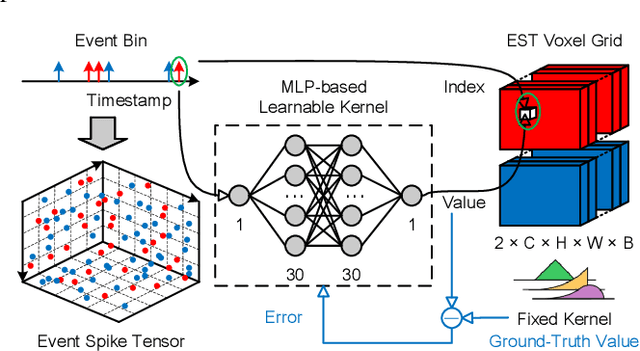

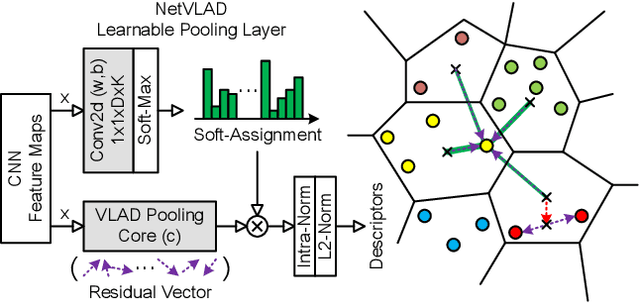

Event-VPR: End-to-End Weakly Supervised Network Architecture for Event-based Visual Place Recognition

Nov 06, 2020

Abstract: Traditional visual place recognition (VPR) methods generally use frame-based cameras, which are prone to failure under dramatic illumination changes or fast motion. In this paper, we propose an end-to-end visual place recognition network for event cameras, which can achieve good place recognition performance in challenging environments. The key idea of the proposed algorithm is to first characterize the event streams with the EST voxel grid, then extract features using a convolution network, and finally aggregate features using an improved VLAD network to realize end-to-end visual place recognition using event streams. To verify the effectiveness of the proposed algorithm, we compare the proposed method with classical VPR methods on the event-based driving datasets (MVSEC, DDD17) and the synthetic datasets (Oxford RobotCar). Experimental results show that the proposed method can achieve much better performance in challenging scenarios. To our knowledge, this is the first end-to-end event-based VPR method. The accompanying source code is available at https://github.com/kongdelei/Event-VPR.
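The first step described above, converting an event stream into a voxel grid, can be illustrated with a simplified sketch. This is a generic voxel grid with linear interpolation along the time axis, not the EST representation itself (which uses a learned kernel); the function name, the argument layout, and the mapping of polarity values greater than zero to +1 and all others to -1 are assumptions.

```python
import numpy as np

def events_to_voxel_grid(x, y, t, p, num_bins, height, width):
    """Accumulate events into a (num_bins, height, width) grid.

    x, y: integer pixel coordinates; t: timestamps; p: polarities.
    Each event's signed polarity is split between its two nearest time bins.
    """
    grid = np.zeros((num_bins, height, width), dtype=np.float64)
    t = np.asarray(t, dtype=np.float64)
    if t.size == 0:
        return grid
    x = np.asarray(x, dtype=int)
    y = np.asarray(y, dtype=int)
    # Normalize timestamps to the range [0, num_bins - 1].
    span = max(t.max() - t.min(), 1e-9)
    tn = (t - t.min()) / span * (num_bins - 1)
    lo = np.floor(tn).astype(int)
    hi = np.minimum(lo + 1, num_bins - 1)
    w_hi = tn - lo
    w_lo = 1.0 - w_hi
    # Assumed polarity convention: p > 0 is +1, otherwise -1.
    pol = np.where(np.asarray(p) > 0, 1.0, -1.0)
    # np.add.at accumulates correctly when several events hit the same cell.
    np.add.at(grid, (lo, y, x), pol * w_lo)
    np.add.at(grid, (hi, y, x), pol * w_hi)
    return grid
```

The resulting tensor can be passed to a convolutional feature extractor like any multi-channel image.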
