Yanjun Cao

Polytechnique Montreal

TriphiBot: A Triphibious Robot Combining FOC-based Propulsion with Eccentric Design

Feb 01, 2026

Abstract: Triphibious robots capable of multi-domain motion and cross-domain transitions are promising for handling complex tasks across diverse environments. However, existing designs primarily focus on dual-mode platforms, and some suffer from high mechanical complexity or low propulsion efficiency, which limits their application. In this paper, we propose a novel triphibious robot capable of aerial, terrestrial, and aquatic motion through a minimalist design that combines a quadcopter structure with two passive wheels, without extra actuators. To address the inefficiency of ground-support motion (moving on land or the seabed) in quadcopter-based designs, we introduce an eccentric Center of Gravity (CoG) design that inherently aligns thrust with motion, enhancing efficiency without specialized mechanical transformation designs. Furthermore, to address the drastic differences in motion control caused by different fluids (air and water), we develop a unified propulsion system based on Field-Oriented Control (FOC). This method resolves torque matching issues and enables precise, rapid bidirectional thrust across different mediums. Grounded in the perspective of living condition and ground support, we analyse the robot's dynamics and propose a Hybrid Nonlinear Model Predictive Control (HNMPC)-PID control system to ensure stable multi-domain motion and seamless transitions. Experimental results validate the robot's multi-domain motion and cross-mode transition capability, along with the efficiency and adaptability of the proposed propulsion system.

CREPES-X: Hierarchical Bearing-Distance-Inertial Direct Cooperative Relative Pose Estimation System

Dec 31, 2025

Abstract: Relative localization is critical for cooperation in autonomous multi-robot systems. Existing approaches either rely on shared environmental features or inertial assumptions, or suffer from non-line-of-sight degradation and outliers in complex environments. Robust and efficient fusion of inter-robot measurements such as bearings, distances, and inertial data for tens of robots remains challenging. We present CREPES-X (Cooperative RElative Pose Estimation System with multiple eXtended features), a hierarchical relative localization framework that enhances speed, accuracy, and robustness under challenging conditions, without requiring any global information. CREPES-X starts with a compact hardware design: InfraRed (IR) LEDs, an IR camera, an ultra-wideband module, and an IMU housed in a cube no larger than 6 cm on each side. CREPES-X then implements a two-stage hierarchical estimator to meet different requirements on speed, accuracy, and robustness. First, we propose a single-frame relative estimator that provides instant relative poses for multi-robot setups through a closed-form solution and robust bearing outlier rejection. Then a multi-frame relative estimator is designed to offer accurate and robust relative states by exploring IMU pre-integration via robocentric relative kinematics with loosely- and tightly-coupled optimization. Extensive simulations and real-world experiments validate the effectiveness of CREPES-X, showing robustness to up to 90% bearing outliers, proving resilience in challenging conditions, and achieving an RMSE of 0.073 m and 1.817° on real-world datasets.
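As a rough illustration of why a bearing plus a distance admits a closed-form relative position, the sketch below scales a unit bearing vector by a measured range. It is not the CREPES-X estimator: the function name `relative_position`, the azimuth/elevation parameterization, and the body-frame convention (x forward, y left, z up) are all assumptions made for this example, and outlier rejection and orientation recovery are left out.

```python
import math


def relative_position(azimuth, elevation, distance):
    """Position of a neighbor in the observer's frame (hypothetical helper).

    azimuth and elevation (radians) describe the bearing, for example from a
    camera detection; distance (meters) is a range, for example from UWB.
    """
    # Unit bearing vector from the two angles.
    ux = math.cos(elevation) * math.cos(azimuth)
    uy = math.cos(elevation) * math.sin(azimuth)
    uz = math.sin(elevation)
    # A single bearing and a single range fix the position with no iteration.
    return (distance * ux, distance * uy, distance * uz)


if __name__ == "__main__":
    # Neighbor seen 30 degrees to the left, level with the observer, 2 m away.
    print(relative_position(math.radians(30.0), 0.0, 2.0))
```

Because the result is a direct product of two measurements, a corrupted bearing maps straight into a wrong position, which is one reason outlier rejection matters in a single-frame estimator.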

Neural Ranging Inertial Odometry

Dec 11, 2025

Abstract: Ultra-wideband (UWB) has shown promising potential in GPS-denied localization thanks to its lightweight and drift-free characteristics, but its accuracy is limited in real scenarios due to its sensitivity to sensor arrangement and the non-Gaussian patterns induced by multi-path or multi-signal interference, which commonly occur in typical applications such as long tunnels. We introduce a novel neural fusion framework for ranging inertial odometry which involves a graph attention UWB network and a recurrent neural inertial network. Our graph net learns scene-relevant ranging patterns and adapts to any number of anchors or tags, realizing accurate positioning without calibration. Additionally, the integration of least squares and the incorporation of a nominal frame enhance overall performance and scalability. The effectiveness and robustness of our methods are validated through extensive experiments on both public and self-collected datasets, spanning indoor, outdoor, and tunnel environments. The results demonstrate the superiority of our proposed IR-ULSG in handling challenging conditions, including scenarios outside the convex envelope and cases where only a single anchor is available.
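For context on the least-squares component mentioned above, the following is a minimal sketch of classical linearized least-squares positioning from UWB ranges in 2D. It is the textbook baseline, not the learned method of the paper; the function name `multilaterate_2d` and the anchor layout in the usage example are assumptions for illustration. Subtracting the first range equation from the others removes the quadratic term in the unknown position, which leaves a linear system.

```python
import math


def multilaterate_2d(anchors, ranges):
    """Estimate (x, y) from ranges to known anchors (hypothetical helper).

    anchors: list of (x, y) tuples, at least three and not collinear.
    ranges:  measured distances to those anchors, in the same order.
    """
    (x0, y0), d0 = anchors[0], ranges[0]
    # Accumulate the normal equations (A^T A) p = A^T b for the 2x2 system.
    s_xx = s_xy = s_yy = t_x = t_y = 0.0
    for (xi, yi), di in zip(anchors[1:], ranges[1:]):
        ax, ay = 2.0 * (xi - x0), 2.0 * (yi - y0)
        b = d0 ** 2 - di ** 2 + xi ** 2 + yi ** 2 - x0 ** 2 - y0 ** 2
        s_xx += ax * ax
        s_xy += ax * ay
        s_yy += ay * ay
        t_x += ax * b
        t_y += ay * b
    det = s_xx * s_yy - s_xy * s_xy
    if abs(det) < 1e-12:
        raise ValueError("anchor geometry is degenerate (collinear or too few)")
    return ((s_yy * t_x - s_xy * t_y) / det, (s_xx * t_y - s_xy * t_x) / det)


if __name__ == "__main__":
    anchors = [(0.0, 0.0), (4.0, 0.0), (0.0, 3.0), (4.0, 3.0)]
    truth = (1.0, 2.0)
    ranges = [math.hypot(truth[0] - ax, truth[1] - ay) for ax, ay in anchors]
    print(multilaterate_2d(anchors, ranges))
```

This baseline fails outright with a single anchor and degrades with poor anchor geometry or biased ranges, which are the conditions the abstract highlights.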

Mr. Virgil: Learning Multi-robot Visual-range Relative Localization

Dec 11, 2025

Abstract: Ultra-wideband (UWB)-vision fusion localization has achieved extensive applications in the domain of multi-agent relative localization. The challenging matching problem between robots and visual detection renders existing methods highly dependent on identity-encoded hardware or delicate tuning algorithms. Overconfident yet erroneous matches may bring about irreversible damage to the localization system. To address this issue, we introduce Mr. Virgil, an end-to-end learning multi-robot visual-range relative localization framework, consisting of a graph neural network for data association between UWB rangings and visual detections, and a differentiable pose graph optimization (PGO) back-end. The graph-based front-end supplies robust matching results, accurate initial position predictions, and credible uncertainty estimates, which are subsequently integrated into the PGO back-end to elevate the accuracy of the final pose estimation. Additionally, a decentralized system is implemented for real-world applications. Experiments spanning varying robot numbers, simulation and real-world, occlusion and non-occlusion conditions showcase the stability and exactitude under various scenes compared to conventional methods. Our code is available at: https://github.com/HiOnes/Mr-Virgil.

Efficient Trajectory Generation Based on Traversable Planes in 3D Complex Architectural Spaces

Mar 11, 2025

Abstract: With the increasing integration of robots into human life, their role in architectural spaces where people spend most of their time has become more prominent. While motion capabilities and accurate localization for automated robots have rapidly developed, the challenge remains to generate efficient, smooth, comprehensive, and high-quality trajectories in these areas. In this paper, we propose a novel efficient planner for ground robots to autonomously navigate in large complex multi-layered architectural spaces. Considering that traversable regions typically include ground, slopes, and stairs, which are planar or nearly planar structures, we simplify the problem to navigation within and between complex intersecting planes. We first extract traversable planes from 3D point clouds through segmenting, merging, classifying, and connecting to build a plane-graph, which is lightweight but fully represents the traversable regions. We then build a trajectory optimization based on motion state trajectory and fully consider special constraints when crossing multi-layer planes to maximize the robot's maneuverability. We conduct experiments in simulated environments and test on a CubeTrack robot in real-world scenarios, validating the method's effectiveness and practicality.

Real-time Spatial-temporal Traversability Assessment via Feature-based Sparse Gaussian Process

Mar 06, 2025

Abstract: Terrain analysis is critical for the practical application of ground mobile robots in real-world tasks, especially in outdoor unstructured environments. In this paper, we propose a novel spatial-temporal traversability assessment method, which aims to enable autonomous robots to effectively navigate through complex terrains. Our approach utilizes sparse Gaussian processes (SGP) to extract geometric features (curvature, gradient, elevation, etc.) directly from point cloud scans. These features are then used to construct a high-resolution local traversability map. Then, we design a spatial-temporal Bayesian Gaussian kernel (BGK) inference method to dynamically evaluate traversability scores, integrating historical and real-time data while considering factors such as slope, flatness, gradient, and uncertainty metrics. GPU acceleration is applied in the feature extraction step, and the system achieves real-time performance. Extensive simulation experiments across diverse terrain scenarios demonstrate that our method outperforms SOTA approaches in both accuracy and computational efficiency. Additionally, we develop an autonomous navigation framework integrated with the traversability map and validate it with a differential-driven vehicle in complex outdoor environments. Our code will be open-sourced for further research and development by the community at https://github.com/ZJU-FAST-Lab/FSGP_BGK.

SEB-Naver: A SE(2)-based Local Navigation Framework for Car-like Robots on Uneven Terrain

Mar 05, 2025

Abstract: Autonomous navigation of car-like robots on uneven terrain poses unique challenges compared to flat terrain, particularly in traversability assessment and terrain-associated kinematic modelling for motion planning. This paper introduces SEB-Naver, a novel SE(2)-based local navigation framework designed to overcome these challenges. First, we propose an efficient traversability assessment method for SE(2) grids, leveraging GPU parallel computing to enable real-time updates and maintenance of local maps. Second, inspired by differential flatness, we present an optimization-based trajectory planning method that integrates terrain-associated kinematic models, significantly improving both planning efficiency and trajectory quality. Finally, we unify these components into SEB-Naver, achieving real-time terrain assessment and trajectory optimization. Extensive simulations and real-world experiments demonstrate the effectiveness and efficiency of our approach. The code is at https://github.com/ZJU-FAST-Lab/seb_naver.

Tracailer: An Efficient Trajectory Planner for Tractor-Trailer Vehicles in Unstructured Environments

Feb 27, 2025

Abstract: The tractor-trailer vehicle (robot) consists of a drivable tractor and one or more non-drivable trailers connected via hitches. Compared to typical car-like robots, the addition of trailers provides greater transportation capability. However, this also complicates motion planning due to the robot's complex kinematics, high-dimensional state space, and deformable structure. To efficiently plan safe, time-optimal trajectories that adhere to the kinematic constraints of the robot and address the challenges posed by its unique features, this paper introduces a lightweight, compact, and high-order smooth trajectory representation for tractor-trailer robots. Based on it, we design an efficiently solvable spatio-temporal trajectory optimization problem. To deal with deformable structures, which lead to difficulties in collision avoidance, we fully leverage the collision-free regions of the environment, directly applying deformations to trajectories in continuous space. This approach does not require constructing safe regions from the environment using convex approximations through collision-free seed points before each optimization, avoiding the loss of the solution space and thus reducing the dependency of the optimization on initial values. Moreover, a multi-terminal fast path search algorithm is proposed to generate the initial values for optimization. Extensive simulation experiments demonstrate that our approach achieves several-fold improvements in efficiency compared to existing algorithms, while also ensuring lower curvature and trajectory duration. Real-world experiments involving the transportation, loading and unloading of goods in both indoor and outdoor scenarios further validate the effectiveness of our method. The source code is accessible at https://github.com/ZJU-FAST-Lab/tracailer/.

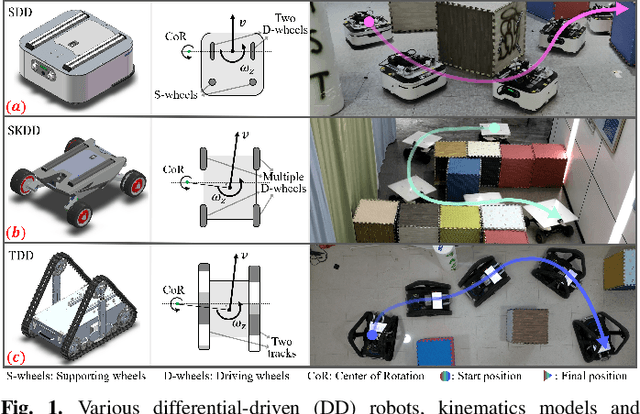

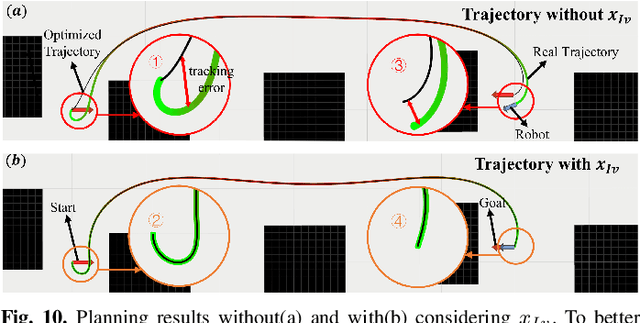

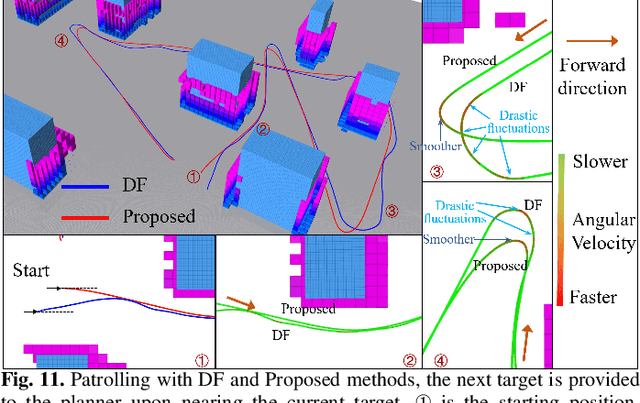

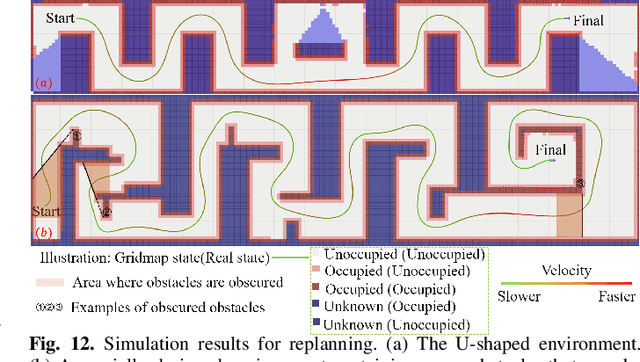

Universal Trajectory Optimization Framework for Differential-Driven Robot Class

Sep 12, 2024

Abstract: Differential-driven robots are widely used in various scenarios thanks to their straightforward principle, from household service robots to disaster response field robots. There are several different types of driving mechanisms in real-world applications, including two-wheeled, four-wheeled skid-steering, and tracked robots. The differences in the driving mechanism usually require specific kinematic modeling when precise control is desired. Furthermore, the nonholonomic dynamics and possible lateral slip lead to different degrees of difficulty in getting feasible and high-quality trajectories. Therefore, a comprehensive trajectory optimization framework to compute trajectories efficiently for various kinds of differential-driven robots is highly desirable. In this paper, we propose a universal trajectory optimization framework that can be applied to the differential-driven robot class, enabling the generation of high-quality trajectories within a restricted computational timeframe. We introduce a novel trajectory representation based on polynomial parameterization of motion states or their integrals, such as angular and linear velocities, which inherently matches the robots' motion to the control principle of the differential-driven robot class. The trajectory optimization problem is formulated to minimize complexity while prioritizing safety and operational efficiency. We then build a full-stack autonomous planning and control system to show the feasibility and robustness. We conduct extensive simulations and real-world testing in crowded environments with three kinds of differential-driven robots to validate the effectiveness of our approach. We will release our method as an open-source package.
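To make the idea of parameterizing motion states concrete, here is a minimal sketch that rolls out a pose from polynomial linear and angular velocity profiles using the standard unicycle model (x' = v cos θ, y' = v sin θ, θ' = ω). It is only an illustration under assumptions: the names `polyval` and `rollout`, the coefficient ordering (lowest order first), and the forward-Euler integration are choices made here, not the representation or solver used in the paper, and slip is ignored.

```python
import math


def polyval(coeffs, t):
    """Evaluate sum(coeffs[k] * t**k) with Horner's rule."""
    result = 0.0
    for c in reversed(coeffs):
        result = result * t + c
    return result


def rollout(v_coeffs, w_coeffs, duration, dt=1e-3):
    """Integrate the unicycle model from the origin (hypothetical helper).

    v_coeffs, w_coeffs: polynomial coefficients of linear velocity (m/s)
    and angular velocity (rad/s) as functions of time.
    Returns the final pose (x, y, theta).
    """
    x = y = theta = 0.0
    steps = int(round(duration / dt))
    for k in range(steps):
        t = k * dt
        v = polyval(v_coeffs, t)
        w = polyval(w_coeffs, t)
        # Forward Euler step; the nonholonomic constraint holds by construction
        # because the velocity always points along the heading.
        x += v * math.cos(theta) * dt
        y += v * math.sin(theta) * dt
        theta += w * dt
    return x, y, theta


if __name__ == "__main__":
    # Constant 1 m/s forward speed while turning at 1 rad/s for pi seconds:
    # roughly half of a unit circle.
    print(rollout([1.0], [1.0], math.pi))
```

Since position is obtained by integrating the velocities, any velocity profile yields a kinematically feasible path, which is the appeal of optimizing over motion states rather than over positions directly.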

Microsaccade-inspired Event Camera for Robotics

May 28, 2024

Abstract: Neuromorphic vision sensors or event cameras have made the visual perception of extremely low reaction time possible, opening new avenues for high-dynamic robotics applications. These event cameras' output is dependent on both motion and texture. However, the event camera fails to capture object edges that are parallel to the camera motion. This is a problem intrinsic to the sensor and therefore challenging to solve algorithmically. Human vision deals with perceptual fading using the active mechanism of small involuntary eye movements, the most prominent ones called microsaccades. By moving the eyes constantly and slightly during fixation, microsaccades can substantially maintain texture stability and persistence. Inspired by microsaccades, we designed an event-based perception system capable of simultaneously maintaining low reaction time and stable texture. In this design, a rotating wedge prism was mounted in front of the aperture of an event camera to redirect light and trigger events. The geometrical optics of the rotating wedge prism allows for algorithmic compensation of the additional rotational motion, resulting in a stable texture appearance and high informational output independent of external motion. The hardware device and software solution are integrated into a system, which we call Artificial MIcrosaccade-enhanced EVent camera (AMI-EV). Benchmark comparisons validate the superior data quality of AMI-EV recordings in scenarios where both standard cameras and event cameras fail to deliver. Various real-world experiments demonstrate the potential of the system to facilitate robotics perception both for low-level and high-level vision tasks.
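The compensation idea can be sketched with a simplified thin-prism model: a wedge with small apex angle α and refractive index n deviates light by about (n − 1)α, so a rotating wedge shifts the image along a circle whose phase follows the prism angle. The code below subtracts that known shift from each event. It is an assumed, first-order illustration only: the function names, the pinhole focal length in pixels, the sign convention, and the constant rotation rate are all hypothetical, and the actual AMI-EV calibration and compensation are not described in the abstract.

```python
import math


def prism_offset(n, wedge_angle, focal_px, phase):
    """Image shift (pixels) caused by a thin wedge prism at a rotation phase.

    Uses the thin-prism approximation: deviation = (n - 1) * wedge_angle.
    """
    deviation = (n - 1.0) * wedge_angle
    radius = focal_px * math.tan(deviation)
    return radius * math.cos(phase), radius * math.sin(phase)


def compensate_event(x, y, t, n, wedge_angle, focal_px, omega, phase0=0.0):
    """Remove the prism-induced shift from an event at pixel (x, y), time t.

    omega is the assumed constant prism rotation rate in rad/s.
    """
    dx, dy = prism_offset(n, wedge_angle, focal_px, omega * t + phase0)
    return x - dx, y - dy


if __name__ == "__main__":
    # A static scene point at (100, 50) appears to circle once the prism
    # rotates; compensation should map every observation back to (100, 50).
    params = dict(n=1.5, wedge_angle=math.radians(2.0), focal_px=600.0,
                  omega=2.0 * math.pi * 10.0)
    for t in (0.0, 0.01, 0.025):
        dx, dy = prism_offset(params["n"], params["wedge_angle"],
                              params["focal_px"], params["omega"] * t)
        print(compensate_event(100.0 + dx, 50.0 + dy, t, **params))
```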
