Xiaolin Huang

VL-RouterBench: A Benchmark for Vision-Language Model Routing

Dec 29, 2025

Abstract: Multi-model routing has evolved from an engineering technique into essential infrastructure, yet existing work lacks a systematic, reproducible benchmark for evaluating vision-language models (VLMs). We present VL-RouterBench to assess the overall capability of VLM routing systems systematically. The benchmark is grounded in raw inference and scoring logs from VLMs and constructs quality and cost matrices over sample-model pairs. In scale, VL-RouterBench covers 14 datasets across 3 task groups, totaling 30,540 samples, and includes 15 open-source models and 2 API models, yielding 519,180 sample-model pairs and a total input-output token volume of 34,494,977. The evaluation protocol jointly measures average accuracy, average cost, and throughput, and builds a ranking score from the harmonic mean of normalized cost and accuracy to enable comparison across router configurations and cost budgets. On this benchmark, we evaluate 10 routing methods and baselines and observe a significant routability gain, while the best current routers still show a clear gap to the ideal Oracle, indicating considerable room for improvement in router architecture through finer visual cues and modeling of textual structure. We will open-source the complete data construction and evaluation toolchain to promote comparability, reproducibility, and practical deployment in multimodal routing research.
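The ranking score and the Oracle router mentioned in the abstract can be illustrated with a minimal sketch. The abstract does not give the exact normalization or the Oracle's tie-breaking rule, so the min-max cost scaling, the "cheapest correct model" Oracle, and all function names below are assumptions made for illustration only.

```python
def normalized_cost_score(cost, cost_min, cost_max):
    """Map a cost to [0, 1] so that cheaper is better (assumed min-max scaling)."""
    if cost_max <= cost_min:
        raise ValueError("cost_max must exceed cost_min")
    clipped = min(max(cost, cost_min), cost_max)
    return 1.0 - (clipped - cost_min) / (cost_max - cost_min)


def rank_score(accuracy, cost, cost_min, cost_max):
    """Harmonic mean of accuracy (in [0, 1]) and the normalized cost score."""
    c = normalized_cost_score(cost, cost_min, cost_max)
    if accuracy + c == 0.0:
        return 0.0
    return 2.0 * accuracy * c / (accuracy + c)


def oracle_choice(quality_row, cost_row):
    """Assumed Oracle: cheapest model that answers the sample correctly,
    falling back to the cheapest model overall when none is correct."""
    correct = [j for j, q in enumerate(quality_row) if q > 0]
    pool = correct if correct else list(range(len(cost_row)))
    return min(pool, key=lambda j: cost_row[j])
```

Here `quality_row` and `cost_row` stand for one sample's row of the quality and cost matrices over sample-model pairs. The harmonic mean penalizes routers that do well on only one of the two axes, which is presumably why it is preferred over an arithmetic mean.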

Bi-LoRA: Efficient Sharpness-Aware Minimization for Fine-Tuning Large-Scale Models

Aug 27, 2025

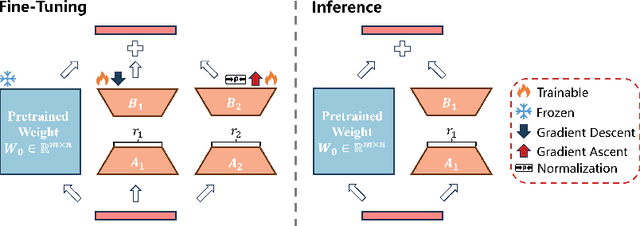

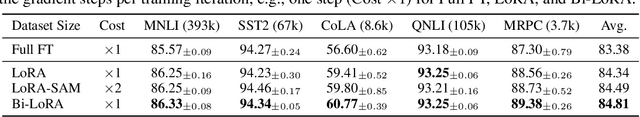

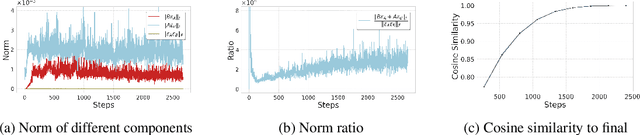

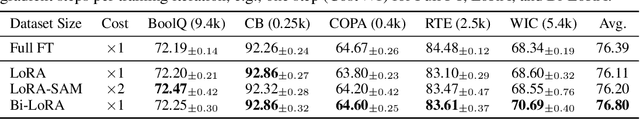

Abstract: Fine-tuning large-scale pre-trained models with limited data presents significant challenges for generalization. While Sharpness-Aware Minimization (SAM) has proven effective in improving generalization by seeking flat minima, its substantial extra memory and computation overhead make it impractical for large models. Integrating SAM with parameter-efficient fine-tuning methods like Low-Rank Adaptation (LoRA) is a promising direction. However, we find that directly applying SAM to LoRA parameters limits the sharpness optimization to a restricted subspace, hindering its effectiveness. To address this limitation, we propose Bi-directional Low-Rank Adaptation (Bi-LoRA), which introduces an auxiliary LoRA module to model SAM's adversarial weight perturbations. It decouples SAM's weight perturbations from LoRA optimization: the primary LoRA module adapts to specific tasks via standard gradient descent, while the auxiliary module captures the sharpness of the loss landscape through gradient ascent. This dual-module design enables Bi-LoRA to capture broader sharpness and reach flatter minima while remaining memory-efficient. Another important benefit is that the dual design allows for simultaneous optimization and perturbation, eliminating SAM's doubled training costs. Extensive experiments across diverse tasks and architectures demonstrate Bi-LoRA's efficiency and effectiveness in enhancing generalization.
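For context, the doubled training cost attributed to SAM comes from its two gradient evaluations per step. The sketch below shows a standard SAM update on a plain weight vector; it is not Bi-LoRA itself, and the function name `sam_step`, the learning rate, and the radius `rho` are illustrative assumptions.

```python
import math


def sam_step(w, grad_fn, lr=0.1, rho=0.05):
    """One standard SAM update: ascend to a nearby adversarial point,
    then descend using the gradient measured there."""
    g = grad_fn(w)  # first gradient evaluation, at the current weights
    norm = math.sqrt(sum(x * x for x in g))
    if norm == 0.0:
        return list(w)  # zero gradient: no perturbation direction
    # Adversarial perturbation of radius rho along the normalized gradient.
    w_adv = [wi + rho * gi / norm for wi, gi in zip(w, g)]
    g_adv = grad_fn(w_adv)  # second gradient evaluation, at the perturbed weights
    return [wi - lr * gi for wi, gi in zip(w, g_adv)]
```

Each call invokes `grad_fn` twice, which is the overhead that the abstract says the auxiliary module removes by running perturbation and optimization simultaneously.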

T2I-ConBench: Text-to-Image Benchmark for Continual Post-training

May 22, 2025

Abstract: Continual post-training adapts a single text-to-image diffusion model to learn new tasks without incurring the cost of separate models, but naive post-training causes forgetting of pretrained knowledge and undermines zero-shot compositionality. We observe that the absence of a standardized evaluation protocol hampers related research for continual post-training. To address this, we introduce T2I-ConBench, a unified benchmark for continual post-training of text-to-image models. T2I-ConBench focuses on two practical scenarios, item customization and domain enhancement, and analyzes four dimensions: (1) retention of generality, (2) target-task performance, (3) catastrophic forgetting, and (4) cross-task generalization. It combines automated metrics, human-preference modeling, and vision-language QA for comprehensive assessment. We benchmark ten representative methods across three realistic task sequences and find that no approach excels on all fronts. Even joint "oracle" training does not succeed for every task, and cross-task generalization remains unsolved. We release all datasets, code, and evaluation tools to accelerate research in continual post-training for text-to-image models.

A Unified Gradient-based Framework for Task-agnostic Continual Learning-Unlearning

May 21, 2025

Abstract: Recent advancements in deep models have highlighted the need for intelligent systems that combine continual learning (CL) for knowledge acquisition with machine unlearning (MU) for data removal, forming the Continual Learning-Unlearning (CLU) paradigm. While existing work treats CL and MU as separate processes, we reveal their intrinsic connection through a unified optimization framework based on Kullback-Leibler divergence minimization. This framework decomposes gradient updates for approximate CLU into four components: learning new knowledge, unlearning targeted data, preserving existing knowledge, and modulation via weight saliency. A critical challenge lies in balancing knowledge update and retention during sequential learning-unlearning cycles. To resolve this stability-plasticity dilemma, we introduce a remain-preserved manifold constraint to induce a remaining Hessian compensation for CLU iterations. A fast-slow weight adaptation mechanism is designed to efficiently approximate the second-order optimization direction; combined with adaptive weighting coefficients and a balanced weight saliency mask, it yields a unified implementation framework for gradient-based CLU. Furthermore, we pioneer task-agnostic CLU scenarios that support fine-grained unlearning at the cross-task category and random sample levels beyond the traditional task-aware setups. Experiments demonstrate that the proposed UG-CLU framework effectively coordinates incremental learning, precise unlearning, and knowledge stability across multiple datasets and model architectures, providing a theoretical foundation and methodological support for dynamic, compliant intelligent systems.

SEB-Naver: A SE(2)-based Local Navigation Framework for Car-like Robots on Uneven Terrain

Mar 05, 2025

Abstract: Autonomous navigation of car-like robots on uneven terrain poses unique challenges compared to flat terrain, particularly in traversability assessment and terrain-associated kinematic modelling for motion planning. This paper introduces SEB-Naver, a novel SE(2)-based local navigation framework designed to overcome these challenges. First, we propose an efficient traversability assessment method for SE(2) grids, leveraging GPU parallel computing to enable real-time updates and maintenance of local maps. Second, inspired by differential flatness, we present an optimization-based trajectory planning method that integrates terrain-associated kinematic models, significantly improving both planning efficiency and trajectory quality. Finally, we unify these components into SEB-Naver, achieving real-time terrain assessment and trajectory optimization. Extensive simulations and real-world experiments demonstrate the effectiveness and efficiency of our approach. The code is at https://github.com/ZJU-FAST-Lab/seb_naver.

MUSO: Achieving Exact Machine Unlearning in Over-Parameterized Regimes

Oct 11, 2024

Abstract: Machine unlearning (MU) aims to make a well-trained model behave as if it had never been trained on specific data. In today's over-parameterized models, dominated by neural networks, a common approach is to manually relabel data and fine-tune the well-trained model. This approach can approximate the MU model in the output space, but the question remains whether it can achieve exact MU, i.e., in the parameter space. We answer this question by employing random feature techniques to construct an analytical framework. Under the premise of model optimization via stochastic gradient descent, we theoretically demonstrate that over-parameterized linear models can achieve exact MU through relabeling specific data. We also extend this work to real-world nonlinear networks and propose an alternating optimization algorithm that unifies the tasks of unlearning and relabeling. The algorithm's effectiveness, confirmed through numerical experiments, highlights its superior performance in unlearning across various scenarios compared to current state-of-the-art methods, particularly excelling over similar relabeling-based MU approaches.

Unified Gradient-Based Machine Unlearning with Remain Geometry Enhancement

Sep 29, 2024

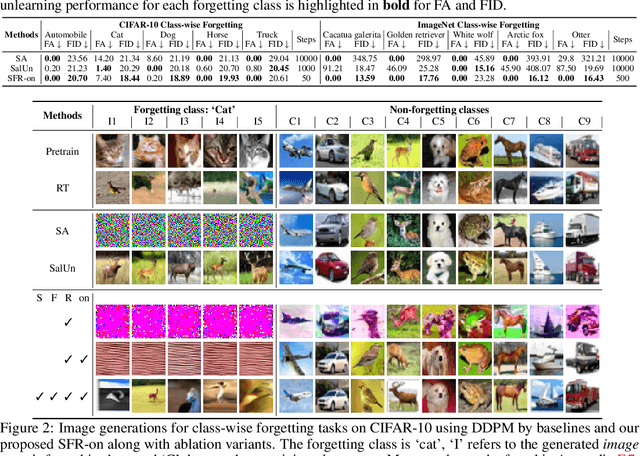

Abstract: Machine unlearning (MU) has emerged to enhance the privacy and trustworthiness of deep neural networks. Approximate MU is a practical method for large-scale models. Our investigation into approximate MU starts with identifying the steepest descent direction, minimizing the output Kullback-Leibler divergence to exact MU within a neighborhood of the parameters. This probed direction decomposes into three components: weighted forgetting gradient ascent, fine-tuning retaining gradient descent, and a weight saliency matrix. This decomposition, derived under the Euclidean metric, encompasses most existing gradient-based MU methods. Nevertheless, adhering to Euclidean space may result in sub-optimal iterative trajectories due to the overlooked geometric structure of the output probability space. We suggest embedding the unlearning update into a manifold rendered by the remaining geometry, incorporating the second-order Hessian from the remaining data. This helps prevent effective unlearning from interfering with the retained performance. However, computing the second-order Hessian for large-scale models is intractable. To efficiently leverage the benefits of Hessian modulation, we propose a fast-slow parameter update strategy to implicitly approximate the up-to-date salient unlearning direction. Free from modality-specific constraints, our approach is adaptable across computer vision unlearning tasks, including classification and generation. Extensive experiments validate our efficacy and efficiency. Notably, our method successfully performs class-forgetting on ImageNet using DiT and forgets a class on CIFAR-10 using DDPM in just 50 steps, compared to thousands of steps required by previous methods.

Flat-LoRA: Low-Rank Adaption over a Flat Loss Landscape

Sep 22, 2024

Abstract: Fine-tuning large-scale pre-trained models is prohibitively expensive in terms of computational and memory costs. Low-Rank Adaptation (LoRA), a popular Parameter-Efficient Fine-Tuning (PEFT) method, provides an efficient way to fine-tune models by optimizing only a low-rank matrix. Despite recent progress made in improving LoRA's performance, the connection between the LoRA optimization space and the original full parameter space is often overlooked. A solution that appears flat in the LoRA space may still have sharp directions in the full parameter space, potentially harming generalization performance. In this paper, we propose Flat-LoRA, an efficient approach that seeks a low-rank adaptation located in a flat region of the full parameter space. Instead of relying on the well-established sharpness-aware minimization approach, which can incur significant computational and memory burdens, we utilize random weight perturbation with a Bayesian expectation loss objective to maintain training efficiency and design a refined perturbation generation strategy for improved performance. Experiments on natural language processing and image classification tasks with various architectures demonstrate the effectiveness of our approach.
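The idea of an expectation loss under random weight perturbation can be illustrated with a generic Monte Carlo estimate. This is a sketch only: the isotropic Gaussian noise, the sample count, and the name `expected_perturbed_loss` are assumptions, and the refined perturbation generation strategy mentioned in the abstract is not reproduced here.

```python
import random


def expected_perturbed_loss(loss_fn, w, sigma, n_samples=1000, seed=0):
    """Monte Carlo estimate of E[loss(w + eps)] with eps ~ N(0, sigma^2 I)."""
    rng = random.Random(seed)  # fixed seed keeps the estimate reproducible
    total = 0.0
    for _ in range(n_samples):
        w_noisy = [wi + rng.gauss(0.0, sigma) for wi in w]
        total += loss_fn(w_noisy)
    return total / n_samples
```

For a quadratic loss `sum(x * x for x in w)` the exact expectation is the unperturbed loss plus `len(w) * sigma ** 2`, so sharper curvature raises the smoothed objective, and minimizing it favors flatter regions. Unlike SAM, each sample needs only a forward evaluation at a randomly perturbed point rather than an extra gradient ascent step.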

DDoS: Diffusion Distribution Similarity for Out-of-Distribution Detection

Sep 16, 2024

Abstract: Out-of-Distribution (OoD) detection determines whether the given samples are from the training distribution of the classifier-under-protection, i.e., the In-Distribution (InD), or from a different OoD. Recent research introduces diffusion models pre-trained on InD data to aid OoD detection by transferring an OoD image into a generated one that is close to InD, so that one can capture the distribution disparities between original and generated images to detect OoD data. Existing diffusion-based detectors adopt perceptual metrics on the two images to measure such disparities, but ignore a fundamental fact: perceptual metrics are devised essentially for human-perceived similarities of low-level image patterns, e.g., textures and colors, and are not advisable for evaluating distribution disparities, since images with different low-level patterns could come from the same distribution. To address this issue, we formulate a diffusion-based detection framework that considers the distribution similarity between a tested image and its generated counterpart via a novel, proper similarity metric in the informative feature space and probability space learned by the classifier-under-protection. An anomaly-removal strategy is further presented to enlarge such distribution disparities by removing abnormal OoD information in the feature space to facilitate the detection. Extensive empirical results unveil the insufficiency of perceptual metrics and the effectiveness of our distribution similarity framework with new state-of-the-art detection performance.

Scalable Learned Model Soup on a Single GPU: An Efficient Subspace Training Strategy

Jul 04, 2024

Abstract: Pre-training followed by fine-tuning is widely adopted among practitioners. The performance can be improved by "model soups" (Wortsman et al., 2022) via exploring various hyperparameter configurations. Learned-Soup, a variant of model soups, significantly improves the performance but suffers from substantial memory and time costs due to the requirements of (i) having to load all fine-tuned models simultaneously, and (ii) a large computational graph encompassing all fine-tuned models. In this paper, we propose Memory Efficient Hyperplane Learned Soup (MEHL-Soup) to tackle this issue by formulating the learned soup as a hyperplane optimization problem and introducing block coordinate gradient descent to learn the mixing coefficients. At each iteration, MEHL-Soup only needs to load a few fine-tuned models and build a computational graph with one combined model. We further extend MEHL-Soup to MEHL-Soup+ in a layer-wise manner. Experimental results on various ViT models and data sets show that MEHL-Soup(+) outperforms Learned-Soup(+) in terms of test accuracy, and also reduces memory usage by more than 13×. Moreover, MEHL-Soup(+) can be run on a single GPU and achieves a 9× speedup in soup construction compared with Learned-Soup. The code is released at https://github.com/nblt/MEHL-Soup.
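A toy sketch can show how block coordinate gradient descent learns mixing coefficients while touching only a few models per iteration. This is not the released MEHL-Soup code: the quadratic distance to a `target` vector stands in for a validation loss, models are flat Python lists, and every name and hyperparameter below is hypothetical.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def combine(models, alphas):
    """Weighted sum of model weight vectors: sum_i alphas[i] * models[i]."""
    dim = len(models[0])
    return [sum(a * m[k] for a, m in zip(alphas, models)) for k in range(dim)]


def block_coordinate_soup(models, target, alphas, block_size=1, lr=0.25, sweeps=50):
    """Minimize ||combined - target||^2 over the mixing coefficients,
    updating one block of coefficients at a time."""
    alphas = list(alphas)
    combined = combine(models, alphas)  # the single combined model kept in memory
    n = len(models)
    for _ in range(sweeps):
        for start in range(0, n, block_size):
            block = range(start, min(start + block_size, n))
            residual = [c - t for c, t in zip(combined, target)]
            # Gradients need only the combined model and the models in this block.
            grads = {i: 2.0 * dot(residual, models[i]) for i in block}
            for i, g in grads.items():
                step = -lr * g
                alphas[i] += step
                # Update the combined model incrementally instead of re-mixing all models.
                combined = [c + step * w for c, w in zip(combined, models[i])]
    return alphas, combined
```

With `models = [[1.0, 0.0], [0.0, 1.0]]` and `target = [0.3, 0.7]`, the coefficients converge toward `[0.3, 0.7]`. The point of the sketch is the memory pattern: each update reads the combined model plus one block, never all fine-tuned models at once.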
