Yujun Li

Robust Design for Multi-Antenna LEO Satellite Communications with Fractional Delay and Doppler Shifts: An RSMA-OTFS Approach

Dec 16, 2025

Abstract:Low-Earth-orbit (LEO) satellite communication systems face challenges due to high satellite mobility, which hinders the reliable acquisition of instantaneous channel state information at the transmitter (CSIT) and subsequently degrades multi-user transmission performance. This paper investigates a downlink multi-user multi-antenna system, and tackles the above challenges by introducing orthogonal time frequency space (OTFS) modulation and rate-splitting multiple access (RSMA) transmission. Specifically, OTFS enables stable characterization of time-varying channels by representing them in the delay-Doppler domain. However, realistic propagation introduces various inter-symbol and inter-user interference due to non-orthogonal yet practical rectangular pulse shaping, fractional delays, Doppler shifts, and imperfect (statistical) CSIT. In this context, RSMA offers promising robustness for interference mitigation and CSIT imperfections, and hence is integrated with OTFS to provide a comprehensive solution. A compact cross-domain input-output relationship for RSMA-OTFS is established, and an ergodic sum-rate maximization problem is formulated and solved using a weighted minimum mean-square-error based alternating optimization algorithm that does not depend on channel sparsity. Simulation results reveal that the considered practical propagation effects significantly degrade performance if unaddressed. Furthermore, the RSMA-OTFS scheme demonstrates improved ergodic sum-rate and robustness against CSIT uncertainty across various user deployments and CSIT qualities.

3D-UIR: 3D Gaussian for Underwater 3D Scene Reconstruction via Physics Based Appearance-Medium Decoupling

May 29, 2025

Abstract:Novel view synthesis for underwater scene reconstruction presents unique challenges due to complex light-media interactions. Optical scattering and absorption in the water body introduce inhomogeneous medium attenuation interference that disrupts the conventional volume rendering assumption of a uniform propagation medium. While 3D Gaussian Splatting (3DGS) offers real-time rendering capabilities, it struggles with underwater inhomogeneous environments where scattering media introduce artifacts and inconsistent appearance. In this study, we propose a physics-based framework that disentangles object appearance from water medium effects through tailored Gaussian modeling. Our approach introduces appearance embeddings, which are explicit medium representations for backscatter and attenuation, enhancing scene consistency. In addition, we propose a distance-guided optimization strategy that leverages pseudo-depth maps as supervision with depth regularization and scale penalty terms to improve geometric fidelity. By integrating the proposed appearance and medium modeling components via an underwater imaging model, our approach achieves both high-quality novel view synthesis and physically accurate scene restoration. Experiments demonstrate significant improvements in rendering quality and restoration accuracy over existing methods. The project page is available at https://bilityniu.github.io/3D-UIR.

Pangu Ultra MoE: How to Train Your Big MoE on Ascend NPUs

May 07, 2025

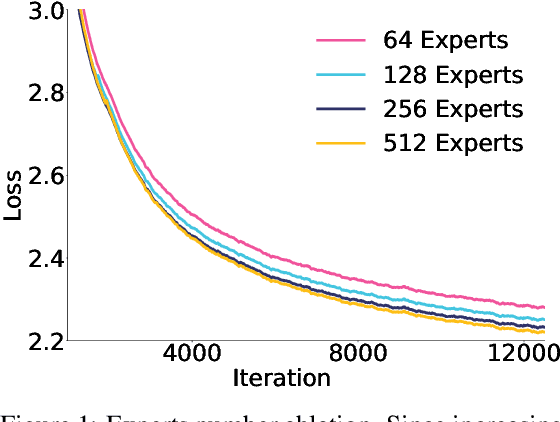

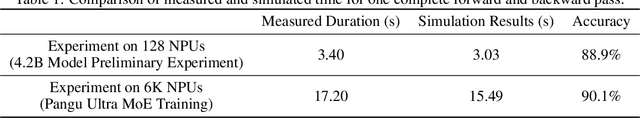

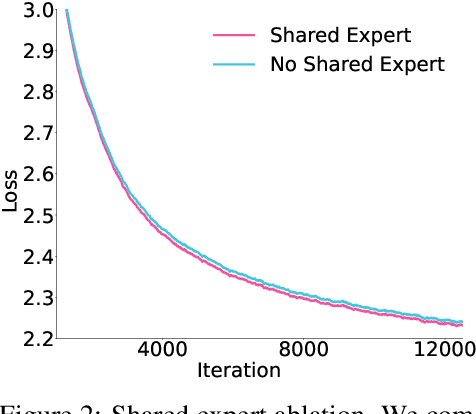

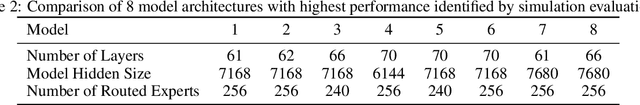

Abstract:Sparse large language models (LLMs) with Mixture of Experts (MoE) and close to a trillion parameters are dominating the realm of most capable language models. However, the massive model scale poses significant challenges for the underlying software and hardware systems. In this paper, we aim to uncover a recipe to harness such scale on Ascend NPUs. The key goals are better usage of the computing resources under the dynamic sparse model structures and materializing the expected performance gain on the actual hardware. To select model configurations suitable for Ascend NPUs without repeatedly running the expensive experiments, we leverage simulation to compare the trade-off of various model hyperparameters. This study led to Pangu Ultra MoE, a sparse LLM with 718 billion parameters, and we conducted experiments on the model to verify the simulation results. On the system side, we dig into Expert Parallelism to optimize the communication between NPU devices to reduce the synchronization overhead. We also optimize the memory efficiency within the devices to further reduce the parameter and activation management overhead. In the end, we achieve an MFU of 30.0% when training Pangu Ultra MoE, with performance comparable to that of DeepSeek R1, on 6K Ascend NPUs, and demonstrate that the Ascend system is capable of harnessing all the training stages of the state-of-the-art language models. Extensive experiments indicate that our recipe can lead to efficient training of large-scale sparse language models with MoE. We also study the behaviors of such models for future reference.

Pangu Ultra: Pushing the Limits of Dense Large Language Models on Ascend NPUs

Apr 10, 2025

Abstract:We present Pangu Ultra, a Large Language Model (LLM) with 135 billion parameters and dense Transformer modules trained on Ascend Neural Processing Units (NPUs). Although the field of LLMs has witnessed unprecedented advances in scale and capability in recent years, training such a large-scale model still involves significant optimization and system challenges. To stabilize the training process, we propose depth-scaled sandwich normalization, which effectively eliminates loss spikes during the training process of deep models. We pre-train our model on 13.2 trillion diverse and high-quality tokens and further enhance its reasoning capabilities during post-training. To perform such large-scale training efficiently, we utilize 8,192 Ascend NPUs with a series of system optimizations. Evaluations on multiple diverse benchmarks indicate that Pangu Ultra significantly advances the state-of-the-art capabilities of dense LLMs such as Llama 405B and Mistral Large 2, and even achieves competitive results with DeepSeek-R1, whose sparse model structure contains many more parameters. Our exploration demonstrates that Ascend NPUs are capable of efficiently and effectively training dense models with more than 100 billion parameters. Our model and system will be available for our commercial customers.

Learnable Sequence Augmenter for Triplet Contrastive Learning in Sequential Recommendation

Mar 26, 2025

Abstract:Most existing contrastive learning-based sequential recommendation (SR) methods rely on random operations (e.g., crop, reorder, and substitute) to generate augmented sequences. These methods often struggle to create positive sample pairs that closely resemble the representations of the raw sequences, potentially disrupting item correlations by deleting key items or introducing noisy interactions, which misguides the contrastive learning process. To address this limitation, we propose Learnable sequence Augmentor for triplet Contrastive Learning in sequential Recommendation (LACLRec). Specifically, the self-supervised learning-based augmenter can automatically delete noisy items from sequences and insert new items that better capture item transition patterns, generating a higher-quality augmented sequence. Subsequently, we randomly generate another augmented sequence and design a ranking-based triplet contrastive loss to differentiate the similarities between the raw sequence, the augmented sequence from the augmenter, and the randomly augmented sequence, providing more fine-grained contrastive signals. Extensive experiments on three real-world datasets demonstrate that both the sequence augmenter and the triplet contrast contribute to improving recommendation accuracy. LACLRec significantly outperforms the baseline model CL4SRec, and demonstrates superior performance compared to several state-of-the-art sequential recommendation algorithms.
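
The ranking idea in the abstract above can be illustrated with a minimal sketch. It is not the LACLRec loss itself: the abstract does not give the formula, so the hinge form, the cosine similarity, the `margin` value, and the function names `cosine` and `triplet_ranking_loss` are all assumptions made for illustration. The sketch only encodes the ordering that the raw sequence should be more similar to the learned augmentation than to the random one.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def triplet_ranking_loss(raw, learned_aug, random_aug, margin=0.1):
    """Hypothetical hinge-style ranking loss (an assumption, not the paper's formula).

    The loss is zero once sim(raw, learned_aug) exceeds
    sim(raw, random_aug) by at least `margin`.
    """
    gap = cosine(raw, learned_aug) - cosine(raw, random_aug)
    return max(0.0, margin - gap)


# Toy sequence representations (made-up values for illustration only).
raw_repr = [1.0, 0.0]
learned_repr = [1.0, 0.0]   # stays close to the raw sequence
random_repr = [0.0, 1.0]    # drifts away from the raw sequence

loss_good_order = triplet_ranking_loss(raw_repr, learned_repr, random_repr)
loss_bad_order = triplet_ranking_loss(raw_repr, random_repr, learned_repr)
```

With these toy vectors the loss vanishes when the ordering is respected and becomes positive when the two augmentations are swapped.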

Edge Contrastive Learning: An Augmentation-Free Graph Contrastive Learning Model

Dec 15, 2024

Abstract:Graph contrastive learning (GCL) aims to learn representations from unlabeled graph data in a self-supervised manner and has developed rapidly in recent years. However, edge-level contrasts are not well explored by most existing GCL methods. Most studies in GCL only regard edges as auxiliary information while updating node features. One of the primary obstacles of edge-based GCL is the heavy computation burden. To tackle this issue, we propose a model that can efficiently learn edge features for GCL, namely Augmentation-Free Edge Contrastive Learning (AFECL), to achieve edge-edge contrast. AFECL requires no augmentation and consists of two parts. Firstly, we design a novel edge feature generation method, where edge features are computed by embedding concatenation of their connected nodes. Secondly, an edge contrastive learning scheme is developed, where edges connecting the same nodes are defined as positive pairs, and other edges are defined as negative pairs. Experimental results show that compared with recent state-of-the-art GCL methods or even some supervised GNNs, AFECL achieves SOTA performance on link prediction and semi-supervised node classification of extremely scarce labels. The source code is available at https://github.com/YujunLi361/AFECL.
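
The two parts described in the abstract above can be sketched in a few lines. This is an illustrative reading, not the released AFECL code: the helper names `edge_feature` and `positive_pairs` are hypothetical, the embeddings are toy values, and "edges connecting the same nodes" is interpreted here as edges that share at least one endpoint, which is an assumption.

```python
from itertools import combinations


def edge_feature(node_emb, edge):
    """Edge feature as the concatenation of the two endpoint embeddings."""
    u, v = edge
    return node_emb[u] + node_emb[v]  # list concatenation, not addition


def positive_pairs(edges):
    """Pairs of edges sharing at least one node (assumed reading of the abstract)."""
    return [(a, b) for a, b in combinations(edges, 2) if set(a) & set(b)]


# Toy node embeddings and a path graph 0-1-2-3 (made-up values).
node_emb = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [0.5, 0.5], 3: [0.2, 0.8]}
edges = [(0, 1), (1, 2), (2, 3)]

features = {e: edge_feature(node_emb, e) for e in edges}
pairs = positive_pairs(edges)
```

Under this reading, every remaining edge pair, here `((0, 1), (2, 3))`, would be treated as a negative pair.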

Flat-LoRA: Low-Rank Adaption over a Flat Loss Landscape

Sep 22, 2024

Abstract:Fine-tuning large-scale pre-trained models is prohibitively expensive in terms of computational and memory costs. Low-Rank Adaptation (LoRA), a popular Parameter-Efficient Fine-Tuning (PEFT) method, provides an efficient way to fine-tune models by optimizing only a low-rank matrix. Despite recent progress made in improving LoRA's performance, the connection between the LoRA optimization space and the original full parameter space is often overlooked. A solution that appears flat in the LoRA space may still have sharp directions in the full parameter space, potentially harming generalization performance. In this paper, we propose Flat-LoRA, an efficient approach that seeks a low-rank adaptation located in a flat region of the full parameter space. Instead of relying on the well-established sharpness-aware minimization approach, which can incur significant computational and memory burdens, we utilize random weight perturbation with a Bayesian expectation loss objective to maintain training efficiency and design a refined perturbation generation strategy for improved performance. Experiments on natural language processing and image classification tasks with various architectures demonstrate the effectiveness of our approach.

Copyleft for Alleviating AIGC Copyright Dilemma: What-if Analysis, Public Perception and Implications

Feb 19, 2024

Abstract:As AIGC has impacted our society profoundly in the past years, ethical issues have received tremendous attention. The most urgent one is the AIGC copyright dilemma, which can immensely stifle the development of AIGC and greatly cost the entire society. Given the complexity of AIGC copyright governance and the fact that no perfect solution currently exists, previous work advocated copyleft on AI governance but without substantive analysis. In this paper, we take a step further to explore the feasibility of copyleft to alleviate the AIGC copyright dilemma. We conduct a mixed-methods study from two aspects: qualitatively, we use a formal what-if analysis to clarify the dilemma and provide case studies to show the feasibility of copyleft; quantitatively, we perform a carefully designed survey to find out how the public feels about copylefting AIGC. The key findings include: a) people generally perceive the dilemma, b) they prefer to use authorized AIGC under loose restriction, and c) they are positive toward copyleft in AIGC and willing to use it in the future.

Privacy-Preserving Sequential Recommendation with Collaborative Confusion

Jan 09, 2024

Abstract:Sequential recommendation has attracted a lot of attention from both academia and industry; however, the privacy risks associated with gathering and transferring users' personal interaction data are often underestimated or ignored. Existing privacy-preserving studies are mainly applied to traditional collaborative filtering or matrix factorization rather than sequential recommendation. Moreover, these studies are mostly based on differential privacy or federated learning, which often leads to significant performance degradation or imposes high communication requirements. In this work, we address privacy preservation from a different perspective. Unlike existing research, we capture collaborative signals of neighbor interaction sequences and directly inject indistinguishable items into the target sequence before the recommendation process begins, thereby increasing the perplexity of the target sequence. Even if the target interaction sequence is obtained by attackers, it is difficult to discern which ones are the actual user interaction records. To achieve this goal, we propose a CoLlaborative-cOnfusion seqUential recommenDer, namely CLOUD, which incorporates a collaborative confusion mechanism to edit the raw interaction sequences before conducting recommendation. Specifically, CLOUD first calculates the similarity between the target interaction sequence and other neighbor sequences to find similar sequences. Then, CLOUD considers the shared representation of the target sequence and similar sequences to determine the operation to be performed: keep, delete, or insert. We design a copy mechanism to make items from similar sequences have a higher probability to be inserted into the target sequence. Finally, the modified sequence is used to train the recommender and predict the next item.

Teach Large Language Models to Forget Privacy

Dec 30, 2023

Abstract:Large Language Models (LLMs) have proven powerful, but the risk of privacy leakage remains a significant concern. Traditional privacy-preserving methods, such as Differential Privacy and Homomorphic Encryption, are inadequate for black-box API-only settings, demanding either model transparency or heavy computational resources. We propose Prompt2Forget (P2F), the first framework designed to tackle the LLM local privacy challenge by teaching LLM to forget. The method involves decomposing full questions into smaller segments, generating fabricated answers, and obfuscating the model's memory of the original input. A benchmark dataset was crafted with questions containing privacy-sensitive information from diverse fields. P2F achieves zero-shot generalization, allowing adaptability across a wide range of use cases without manual adjustments. Experimental results indicate P2F's robust capability to obfuscate LLM's memory, attaining a forgetfulness score of around 90% without any utility loss. This represents an enhancement of up to 63% when contrasted with the naive direct instruction technique, highlighting P2F's efficacy in mitigating memory retention of sensitive information within LLMs. Our findings establish the first benchmark in the novel field of the LLM forgetting task, representing a meaningful advancement in privacy preservation in the emerging LLM domain.
