Mengke Zhang

TriphiBot: A Triphibious Robot Combining FOC-based Propulsion with Eccentric Design

Feb 01, 2026

Abstract:Triphibious robots capable of multi-domain motion and cross-domain transitions are promising to handle complex tasks across diverse environments. However, existing designs primarily focus on dual-mode platforms, and some designs suffer from high mechanical complexity or low propulsion efficiency, which limits their application. In this paper, we propose a novel triphibious robot capable of aerial, terrestrial, and aquatic motion, by a minimalist design combining a quadcopter structure with two passive wheels, without extra actuators. To address inefficiency of ground-support motion (moving on land/seabed) for quadcopter based designs, we introduce an eccentric Center of Gravity (CoG) design that inherently aligns thrust with motion, enhancing efficiency without specialized mechanical transformation designs. Furthermore, to address the drastic differences in motion control caused by different fluids (air and water), we develop a unified propulsion system based on Field-Oriented Control (FOC). This method resolves torque matching issues and enables precise, rapid bidirectional thrust across different mediums. Grounded in the perspective of living condition and ground support, we analyse the robot's dynamics and propose a Hybrid Nonlinear Model Predictive Control (HNMPC)-PID control system to ensure stable multi-domain motion and seamless transitions. Experimental results validate the robot's multi-domain motion and cross-mode transition capability, along with the efficiency and adaptability of the proposed propulsion system.

Trajectory Optimization for Differential Drive Mobile Manipulators via Topological Paths Search and Arc Length-Yaw Parameterization

Jul 03, 2025

Abstract:We present an efficient hierarchical motion planning pipeline for differential drive mobile manipulators. Our approach first searches for multiple collision-free and topologically distinct paths for the mobile base to extract the space in which optimal solutions may exist. Further sampling and optimization are then conducted in parallel to explore feasible whole-body trajectories. For trajectory optimization, we employ polynomial trajectories and arc length-yaw parameterization, enabling efficient handling of the nonholonomic dynamics while ensuring optimality.

Efficient Trajectory Generation Based on Traversable Planes in 3D Complex Architectural Spaces

Mar 11, 2025

Abstract:With the increasing integration of robots into human life, their role in architectural spaces where people spend most of their time has become more prominent. While motion capabilities and accurate localization for automated robots have rapidly developed, the challenge remains to generate efficient, smooth, comprehensive, and high-quality trajectories in these areas. In this paper, we propose a novel efficient planner for ground robots to autonomously navigate in large complex multi-layered architectural spaces. Considering that traversable regions typically include ground, slopes, and stairs, which are planar or nearly planar structures, we simplify the problem to navigation within and between complex intersecting planes. We first extract traversable planes from 3D point clouds through segmenting, merging, classifying, and connecting to build a plane-graph, which is lightweight but fully represents the traversable regions. We then build a trajectory optimization based on motion state trajectory and fully consider special constraints when crossing multi-layer planes to maximize the robot's maneuverability. We conduct experiments in simulated environments and test on a CubeTrack robot in real-world scenarios, validating the method's effectiveness and practicality.

Real-time Spatial-temporal Traversability Assessment via Feature-based Sparse Gaussian Process

Mar 06, 2025

Abstract:Terrain analysis is critical for the practical application of ground mobile robots in real-world tasks, especially in outdoor unstructured environments. In this paper, we propose a novel spatial-temporal traversability assessment method, which aims to enable autonomous robots to effectively navigate through complex terrains. Our approach utilizes sparse Gaussian processes (SGP) to extract geometric features (curvature, gradient, elevation, etc.) directly from point cloud scans. These features are then used to construct a high-resolution local traversability map. Then, we design a spatial-temporal Bayesian Gaussian kernel (BGK) inference method to dynamically evaluate traversability scores, integrating historical and real-time data while considering factors such as slope, flatness, gradient, and uncertainty metrics. GPU acceleration is applied in the feature extraction step, and the system achieves real-time performance. Extensive simulation experiments across diverse terrain scenarios demonstrate that our method outperforms SOTA approaches in both accuracy and computational efficiency. Additionally, we develop an autonomous navigation framework integrated with the traversability map and validate it with a differential-drive vehicle in complex outdoor environments. Our code will be open-sourced for further research and development by the community at https://github.com/ZJU-FAST-Lab/FSGP_BGK.

Policy Decorator: Model-Agnostic Online Refinement for Large Policy Model

Dec 18, 2024

Abstract:Recent advancements in robot learning have used imitation learning with large models and extensive demonstrations to develop effective policies. However, these models are often limited by the quantity, quality, and diversity of demonstrations. This paper explores improving offline-trained imitation learning models through online interactions with the environment. We introduce Policy Decorator, which uses a model-agnostic residual policy to refine large imitation learning models during online interactions. By implementing controlled exploration strategies, Policy Decorator enables stable, sample-efficient online learning. Our evaluation spans eight tasks across two benchmarks (ManiSkill and Adroit) and involves two state-of-the-art imitation learning models (Behavior Transformer and Diffusion Policy). The results show Policy Decorator effectively improves the offline-trained policies and preserves the smooth motion of imitation learning models, avoiding the erratic behaviors of pure RL policies. See our project page (https://policydecorator.github.io) for videos.

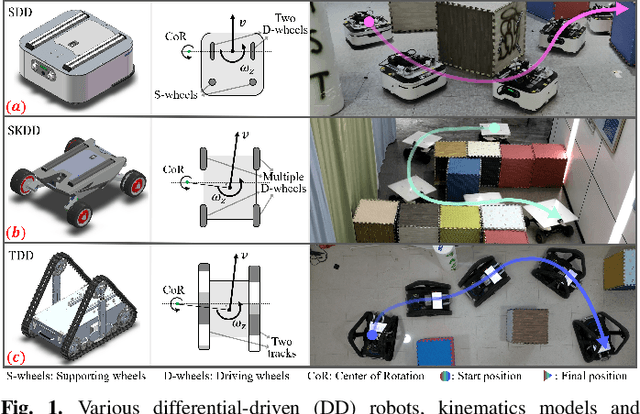

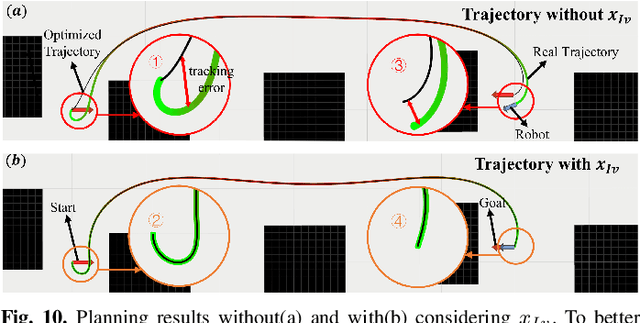

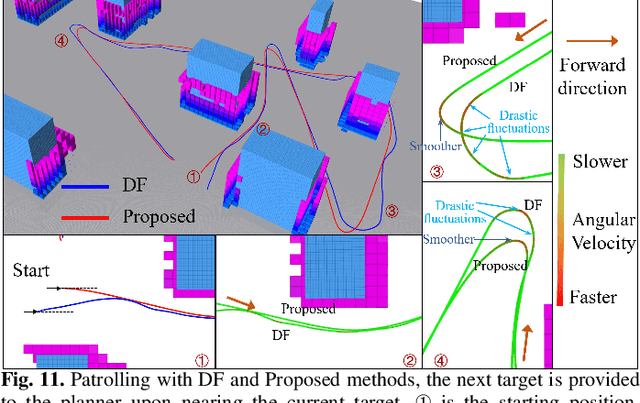

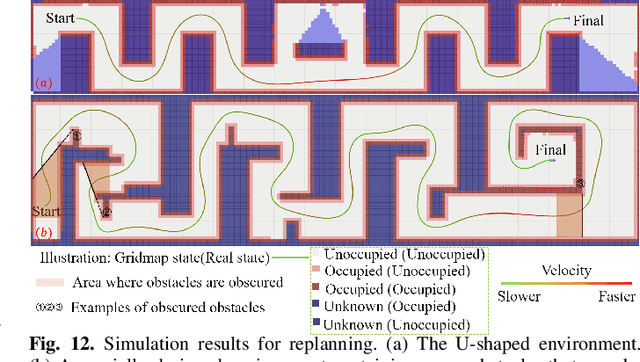

Universal Trajectory Optimization Framework for Differential-Driven Robot Class

Sep 12, 2024

Abstract:Differential-driven robots are widely used in various scenarios thanks to their straightforward principle, from household service robots to disaster response field robots. There are several different types of driving mechanisms in real-world applications, including two-wheeled, four-wheeled skid-steering, and tracked robots. The differences in the driving mechanism usually require specific kinematic modeling when precise control is desired. Furthermore, the nonholonomic dynamics and possible lateral slip lead to different degrees of difficulty in getting feasible and high-quality trajectories. Therefore, a comprehensive trajectory optimization framework to compute trajectories efficiently for various kinds of differential-driven robots is highly desirable. In this paper, we propose a universal trajectory optimization framework that can be applied to the differential-driven robot class, enabling the generation of high-quality trajectories within a restricted computational timeframe. We introduce a novel trajectory representation based on polynomial parameterization of motion states or their integrals, such as angular and linear velocities, that inherently matches the robots' motion to the control principle of the differential-driven robot class. The trajectory optimization problem is formulated to minimize complexity while prioritizing safety and operational efficiency. We then build a full-stack autonomous planning and control system to show the feasibility and robustness. We conduct extensive simulations and real-world testing in crowded environments with three kinds of differential-driven robots to validate the effectiveness of our approach. We will release our method as an open-source package.
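As a rough illustration of the representation this abstract describes, the sketch below parameterizes the linear and angular velocities as polynomials in time and integrates them through the standard unicycle model to recover the pose. The function names, the coefficient layout, and the midpoint integration scheme are assumptions made for illustration; they are not taken from the paper.

```python
import math


def poly_eval(coeffs, t):
    """Evaluate c0 + c1*t + c2*t^2 + ... with Horner's rule."""
    result = 0.0
    for c in reversed(coeffs):
        result = result * t + c
    return result


def integrate_pose(v_coeffs, w_coeffs, duration, steps=1000):
    """Integrate x' = v*cos(theta), y' = v*sin(theta), theta' = w.

    v_coeffs and w_coeffs are hypothetical polynomial coefficients for the
    linear and angular velocity. The robot starts at the origin with zero yaw.
    Midpoint integration is an assumed numerical choice.
    """
    x = y = theta = 0.0
    dt = duration / steps
    for i in range(steps):
        t_mid = (i + 0.5) * dt
        v = poly_eval(v_coeffs, t_mid)
        w = poly_eval(w_coeffs, t_mid)
        theta_mid = theta + 0.5 * w * dt  # heading at the middle of the step
        x += v * math.cos(theta_mid) * dt
        y += v * math.sin(theta_mid) * dt
        theta += w * dt
    return x, y, theta
```

Because the pose is obtained by integrating the velocities, every trajectory expressed this way satisfies the no-lateral-motion constraint of the unicycle model by construction. For example, `integrate_pose([1.0], [1.0], math.pi / 2)` traces approximately a quarter of a unit circle.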

LatticeGen: A Cooperative Framework which Hides Generated Text in a Lattice for Privacy-Aware Generation on Cloud

Oct 02, 2023

Abstract:In the current user-server interaction paradigm of prompted generation with large language models (LLM) on cloud, the server fully controls the generation process, which leaves zero options for users who want to keep the generated text to themselves. We propose LatticeGen, a cooperative framework in which the server still handles most of the computation while the user controls the sampling operation. The key idea is that the true generated sequence is mixed with noise tokens by the user and hidden in a noised lattice. Considering potential attacks from a hypothetically malicious server and how the user can defend against it, we propose the repeated beam-search attack and the mixing noise scheme. In our experiments we apply LatticeGen to protect both prompt and generation. It is shown that while the noised lattice degrades generation quality, LatticeGen successfully protects the true generation to a remarkable degree under strong attacks (more than 50% of the semantic remains hidden as measured by BERTScore).
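The key idea of hiding the true sequence among noise tokens can be sketched on the user side as follows. This is a toy illustration only: the uniform noise sampling, the function names, and the fixed lattice width are assumptions, and neither the server-side computation nor the paper's mixing noise scheme is modeled.

```python
import random


def build_noised_lattice(true_tokens, vocab, width=2, seed=0):
    """Hide each true token among (width - 1) noise tokens.

    Returns the lattice (what the server would see) and the secret
    positions of the true tokens (kept by the user). Uniform sampling
    of noise tokens is an assumption made for this sketch.
    """
    rng = random.Random(seed)
    lattice, secret = [], []
    for tok in true_tokens:
        candidates = [w for w in vocab if w != tok]
        column = rng.sample(candidates, width - 1) + [tok]
        rng.shuffle(column)  # the server cannot tell which entry is real
        lattice.append(column)
        secret.append(column.index(tok))
    return lattice, secret


def recover(lattice, secret):
    """User-side recovery of the true sequence from the lattice."""
    return [column[i] for column, i in zip(lattice, secret)]
```

Only the holder of `secret` can read the true sequence back out with `recover`; an observer of `lattice` sees `width` candidate tokens at every position.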

Scalable neural network models and terascale datasets for particle-flow reconstruction

Sep 13, 2023

Abstract:We study scalable machine learning models for full event reconstruction in high-energy electron-positron collisions based on a highly granular detector simulation. Particle-flow (PF) reconstruction can be formulated as a supervised learning task using tracks and calorimeter clusters or hits. We compare a graph neural network and kernel-based transformer and demonstrate that both avoid quadratic memory allocation and computational cost while achieving realistic PF reconstruction. We show that hyperparameter tuning on a supercomputer significantly improves the physics performance of the models. We also demonstrate that the resulting model is highly portable across hardware processors, supporting Nvidia, AMD, and Intel Habana cards. Finally, we demonstrate that the model can be trained on highly granular inputs consisting of tracks and calorimeter hits, resulting in a competitive physics performance with the baseline. Datasets and software to reproduce the studies are published following the findable, accessible, interoperable, and reusable (FAIR) principles.

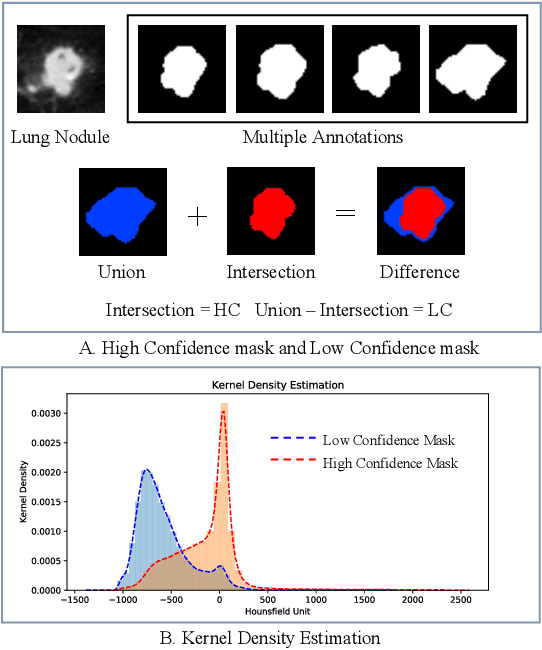

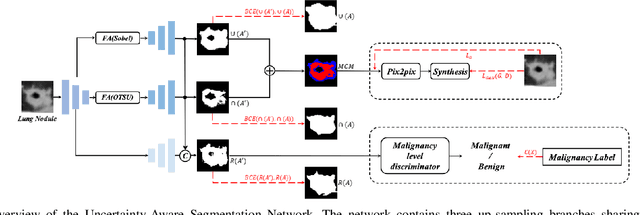

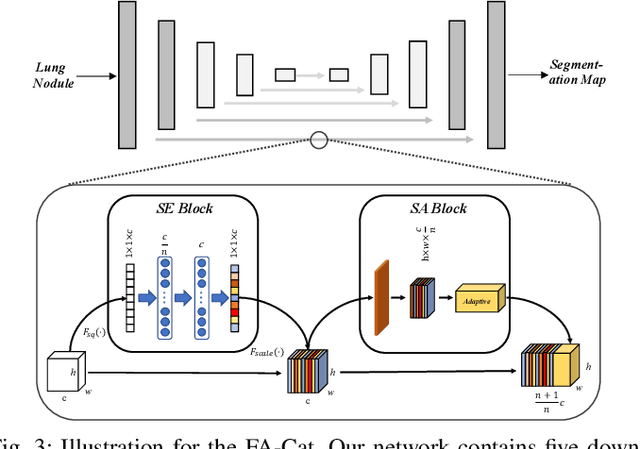

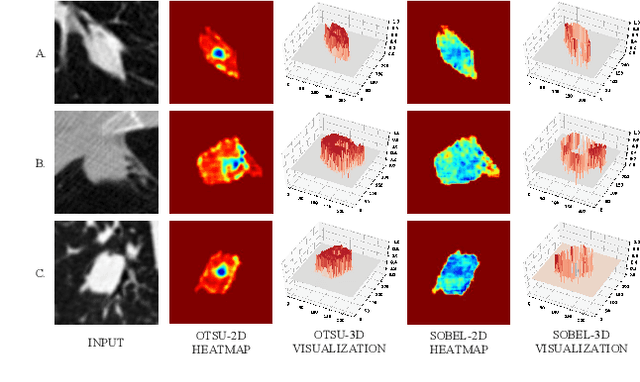

Uncertainty-Aware Lung Nodule Segmentation with Multiple Annotations

Oct 24, 2021

Abstract:Since radiologists have different training and clinical experience, they may provide various segmentation maps for a lung nodule. As a result, for a specific lung nodule, some regions have a higher chance of causing segmentation uncertainty, which brings difficulty for lung nodule segmentation with multiple annotations. To address this problem, this paper proposes an Uncertainty-Aware Segmentation Network (UAS-Net) based on multi-branch U-Net, which can learn the valuable visual features from the regions that may cause segmentation uncertainty and contribute to a better segmentation result. Meanwhile, this network can provide a Multi-Confidence Mask (MCM) simultaneously, pointing out regions with different segmentation uncertainty levels. We introduce a Feature-Aware Concatenation structure for different learning targets and let each branch have a specific learning preference. Moreover, a joint adversarial learning process is also adopted to help learn discriminative features of complex structures. Experimental results show that our method can predict the reasonable regions with higher uncertainty and improve lung nodule segmentation performance in LIDC-IDRI.
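To make the notion of regions with different uncertainty levels concrete, the sketch below splits several annotators' binary masks into pixels that all annotators marked, pixels that at least one marked, and pixels on which they disagree. It is a plain voting illustration under assumed names and does not reproduce UAS-Net or the paper's exact definition of the Multi-Confidence Mask.

```python
def confidence_regions(masks):
    """Split multiple binary annotations into agreement regions.

    masks: list of equally sized 2D lists of 0/1, one per annotator.
    Returns (intersection, union, uncertain), each a 2D list of 0/1:
      intersection: marked by every annotator
      union:        marked by at least one annotator
      uncertain:    marked by some annotators but not all
    """
    n = len(masks)
    rows, cols = len(masks[0]), len(masks[0][0])
    intersection = [[0] * cols for _ in range(rows)]
    union = [[0] * cols for _ in range(rows)]
    uncertain = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            votes = sum(m[r][c] for m in masks)
            intersection[r][c] = int(votes == n)
            union[r][c] = int(votes > 0)
            uncertain[r][c] = int(0 < votes < n)
    return intersection, union, uncertain
```

The `uncertain` map is simply the union minus the intersection, which is where the annotators disagree.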
