Teng Ma

EcoAlign: An Economically Rational Framework for Efficient LVLM Alignment

Nov 14, 2025

Abstract:Large Vision-Language Models (LVLMs) exhibit powerful reasoning capabilities but suffer from sophisticated jailbreak vulnerabilities. Fundamentally, aligning LVLMs is not just a safety challenge but a problem of economic efficiency. Current alignment methods struggle with the trade-off between safety, utility, and operational costs. Critically, a focus solely on final outputs (process-blindness) wastes significant computational budget on unsafe deliberation. This flaw allows harmful reasoning to be disguised with benign justifications, thereby circumventing simple additive safety scores. To address this, we propose EcoAlign, an inference-time framework that reframes alignment as an economically rational search by treating the LVLM as a boundedly rational agent. EcoAlign incrementally expands a thought graph and scores actions using a forward-looking function (analogous to net present value) that dynamically weighs expected safety, utility, and cost against the remaining budget. To prevent deception, path safety is enforced via the weakest-link principle. Extensive experiments across 3 closed-source and 2 open-source models on 6 datasets show that EcoAlign matches or surpasses state-of-the-art safety and utility at a lower computational cost, thereby offering a principled, economical pathway to robust LVLM alignment.
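The abstract contrasts additive safety scores with a weakest-link rule and describes a score that weighs safety, utility, and cost against the remaining budget. The sketch below illustrates both ideas under stated assumptions: the function names, the linear scarcity weighting, and the example numbers are hypothetical and are not taken from the paper, whose actual scoring function is not given here.

```python
from statistics import mean


def path_safety_additive(step_safety):
    """Average per-step safety: one unsafe step can be masked by benign ones."""
    return mean(step_safety)


def path_safety_weakest_link(step_safety):
    """Weakest-link rule: a path is only as safe as its least safe step."""
    return min(step_safety)


def action_score(exp_safety, exp_utility, exp_cost, remaining_budget, total_budget):
    """Hypothetical forward-looking score (not the paper's formula).

    Cost is penalized more heavily as the remaining budget shrinks.
    """
    scarcity = 1.0 - remaining_budget / total_budget  # 0 = full budget, 1 = exhausted
    cost_weight = 1.0 + scarcity
    return exp_safety + exp_utility - cost_weight * exp_cost / total_budget


# A reasoning path with one clearly unsafe step hidden among benign ones.
path = [0.95, 0.90, 0.10, 0.92]
print("additive:", path_safety_additive(path))
print("weakest link:", path_safety_weakest_link(path))
```

For this path the additive score stays above 0.7 while the weakest-link score drops to 0.10, which is the kind of disguised step the abstract says additive scoring lets through.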

Mining Platoon Patterns from Traffic Videos

Dec 28, 2024

Abstract:Discovering co-movement patterns from urban-scale video data sources has emerged as an attractive topic. This task aims to identify groups of objects that travel together along a common route, which offers effective support for government agencies in enhancing smart city management. However, previous work makes a strong assumption about the accuracy of trajectories recovered from videos, and its co-movement pattern definition requires the group of objects to appear across consecutive cameras along the common route. In practice, this often leads to missing patterns if a vehicle is not correctly identified by a certain camera due to object occlusion or vehicle mismatching. To address this challenge, we propose a relaxed definition of co-movement patterns from video data, which removes the consecutiveness requirement in the common route and accommodates a certain number of missing captured cameras for objects within the group. Moreover, a novel enumeration framework called MaxGrowth is developed to efficiently retrieve the relaxed patterns. Unlike previous filter-and-refine frameworks comprising both candidate enumeration and subsequent candidate verification procedures, MaxGrowth incurs no verification cost for the candidate patterns. It treats the co-movement pattern as an equivalent sequence of clusters, enumerating candidates with increasing sequence length while avoiding the generation of any false positives. Additionally, we propose two effective pruning rules to efficiently filter the non-maximal patterns. Extensive experiments are conducted to validate the efficiency of MaxGrowth and the quality of its generated co-movement patterns. MaxGrowth runs up to two orders of magnitude faster than the baseline algorithm. It also demonstrates high accuracy on a real video dataset when the trajectory recovery algorithm is not perfect.

CLDG: Contrastive Learning on Dynamic Graphs

Dec 19, 2024

Abstract:The graph with complex annotations is the most potent data type, whose constant evolution motivates further exploration of unsupervised dynamic graph representation. One of the representative paradigms is graph contrastive learning. It constructs self-supervised signals by maximizing the mutual information between the static graph's augmentation views. However, the semantics and labels may change within the augmentation process, causing a significant performance drop in downstream tasks. This drawback becomes greatly magnified on dynamic graphs. To address this problem, we design a simple yet effective framework named CLDG. Firstly, we elaborate that dynamic graphs have temporal translation invariance at different levels. Then, we propose a sampling layer to extract the temporally-persistent signals. It encourages the node to maintain consistent local and global representations, i.e., temporal translation invariance under the timespan views. Extensive experiments demonstrate the effectiveness and efficiency of the method on seven datasets by outperforming eight unsupervised state-of-the-art baselines and showing competitiveness against four semi-supervised methods. Compared with the existing dynamic graph method, the number of model parameters and the training time are reduced by an average of 2,001.86 times and 130.31 times on seven datasets, respectively.

Timely reliable Bayesian decision-making enabled using memristors

Dec 07, 2024

Abstract:Brains perform timely, reliable decision-making by Bayes' theorem. Bayes' theorem quantifies events as probabilities and, through probability rules, renders the decisions. Learning from this, applying Bayes' theorem in practical problems can visualize the potential risks and decision confidence, thereby enabling efficient user-scene interactions. However, given its probabilistic nature, implementing Bayes' theorem with conventional deterministic computing can induce excessive computational cost and decision latency. Herein, we propose a probabilistic computing approach using memristors to implement Bayes' theorem. We integrate volatile memristors with Boolean logic and, by exploiting the volatile stochastic switching of the memristors, realize Boolean operations with statistical probabilities and correlations, key for enabling Bayes' theorem. To practically demonstrate the effectiveness of our memristor-enabled Bayes' theorem approach in user-scene interactions, we design lightweight Bayesian inference and fusion operators using our probabilistic logic and apply the operators in road scene parsing for self-driving, including route planning and obstacle detection. The results show that our operators can achieve reliable decisions at a rate of over 2,500 frames per second, outperforming human decision-making and the existing driving assistance systems.
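The idea of Boolean operations on stochastic bits can be imitated in software. The following is a minimal simulation sketch, not the authors' memristor circuit: it assumes ideal, independent Bernoulli bitstreams produced by a pseudo-random generator, and all function names and probability values are illustrative. It shows that an AND of two independent streams estimates the product of their probabilities, and that counting coincidences of hypothesis and evidence bits recovers the Bayes posterior.

```python
import random


def bitstream(p, n, rng):
    """Generate n Bernoulli(p) bits, standing in for stochastic switching events."""
    return [rng.random() < p for _ in range(n)]


def estimate(bits):
    """Fraction of ones in a bitstream, i.e. the encoded probability."""
    return sum(bits) / len(bits)


def posterior_exact(prior, lik_a, lik_not_a):
    """Bayes' theorem: P(A|B) = P(B|A)P(A) / P(B)."""
    return lik_a * prior / (lik_a * prior + lik_not_a * (1.0 - prior))


def posterior_by_sampling(prior, lik_a, lik_not_a, n, rng):
    """Estimate P(A|B) as count(A AND B) / count(B) over sampled bits."""
    hyp = bitstream(prior, n, rng)
    evidence = [rng.random() < (lik_a if h else lik_not_a) for h in hyp]
    joint = sum(h and e for h, e in zip(hyp, evidence))
    return joint / sum(evidence)


rng = random.Random(0)
n = 200_000

# AND of two independent streams approximates the product 0.3 * 0.5.
a = bitstream(0.3, n, rng)
b = bitstream(0.5, n, rng)
and_est = estimate([x and y for x, y in zip(a, b)])

# Posterior for an illustrative prior and pair of likelihoods.
sampled = posterior_by_sampling(0.2, 0.9, 0.1, n, rng)
exact = posterior_exact(0.2, 0.9, 0.1)
print(and_est, sampled, exact)
```

The sampled estimates only approach the exact values as the stream length grows, which is the usual accuracy-versus-latency trade-off of bitstream-based probabilistic computing.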

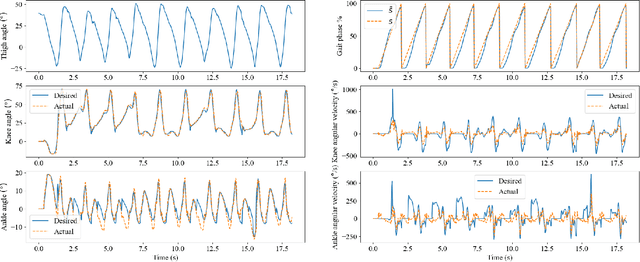

Beyond Gait: Learning Knee Angle for Seamless Prosthesis Control in Multiple Scenarios

Apr 10, 2024

Abstract:Deep learning models have become a powerful tool in knee angle estimation for lower limb prostheses, owing to their adaptability across various gait phases and locomotion modes. Current methods utilize Multi-Layer Perceptrons (MLP), Long Short-Term Memory Networks (LSTM), and Convolutional Neural Networks (CNN), predominantly analyzing motion information from the thigh. Contrary to these approaches, our study introduces a holistic perspective by integrating whole-body movements as inputs. We propose a transformer-based probabilistic framework, termed the Angle Estimation Probabilistic Model (AEPM), that offers precise angle estimations across extensive scenarios beyond walking. AEPM achieves an overall RMSE of 6.70 degrees, with an RMSE of 3.45 degrees in walking scenarios. Compared to the state of the art, AEPM improves the prediction accuracy for walking by 11.31%. Our method can achieve seamless adaptation between different locomotion modes. This model can also be utilized to analyze the synergy between the knee and other joints. We reveal that whole-body movement carries valuable information for knee movement, which can provide insights into designing sensors for prostheses. The code is available at https://github.com/penway/Beyond-Gait-AEPM.

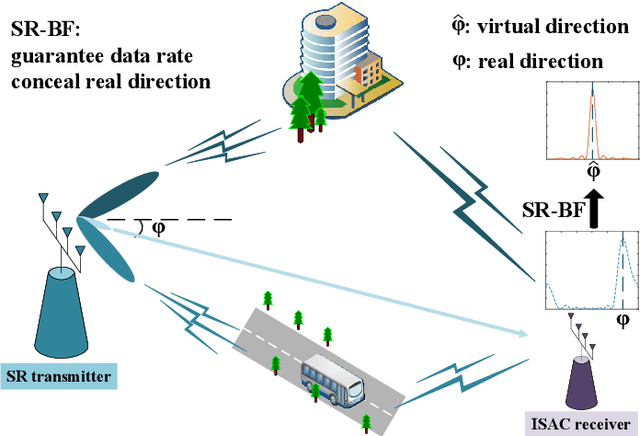

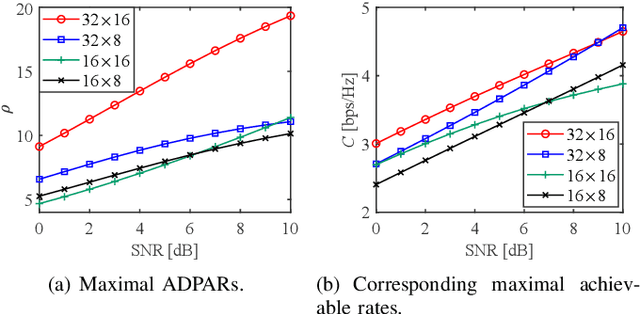

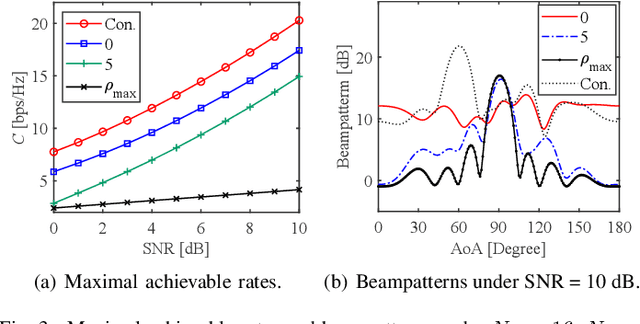

Sensing-Resistance-Oriented Beamforming for Privacy Protection from ISAC Devices

Apr 08, 2024

Abstract:With the evolution of integrated sensing and communication (ISAC) technology, a growing number of devices go beyond conventional communication functions with sensing abilities. Therefore, future networks are likely to encounter new privacy concerns on sensing, such as the exposure of position information to unintended receivers. In contrast to traditional privacy-preserving schemes aiming to prevent eavesdropping, this contribution conceives a novel beamforming design toward sensing resistance (SR). Specifically, we aim to guarantee the communication quality while masking the real direction of the SR transmitter during the communication. To evaluate the SR performance, a metric termed angular-domain peak-to-average ratio (ADPAR) is first defined and analyzed. Then, we resort to the null-space technique to conceal the real direction, thereby converting the optimization problem to a more tractable form. Moreover, semidefinite relaxation along with index optimization is further utilized to obtain the optimal beamformer. Finally, simulation results demonstrate the feasibility of the proposed SR-oriented beamforming design toward privacy protection from ISAC receivers.

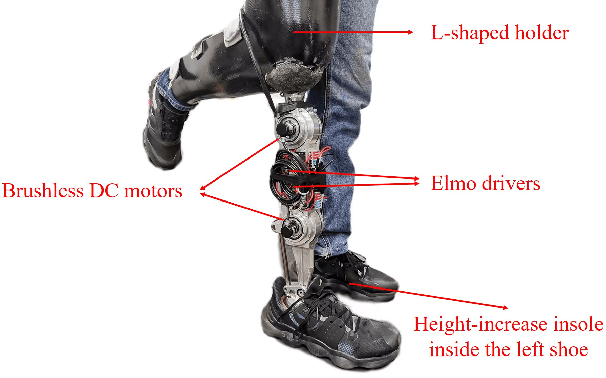

Learning Task-adaptive Quasi-stiffness Control for A Powered Transfemoral Prosthesis

Nov 25, 2023

Abstract:While significant advancements have been made in the mechanical and task-specific controller designs of powered transfemoral prostheses, developing a task-adaptive control framework that generalizes across various locomotion modes and terrain conditions remains an open problem. This study proposes a task-adaptive learning quasi-stiffness control framework for powered prostheses that generalizes across tasks, including the torque-angle relationship reconstruction part and the quasi-stiffness controller design part. Quasi-stiffness is defined as the slope of the human joint's torque-angle relationship. To accurately obtain the torque-angle relationship in a new task, a Gaussian Process Regression (GPR) model is introduced to predict the target features of the human joint's angle and torque in the task. Then a Kernelized Movement Primitives (KMP) model is employed to reconstruct the torque-angle relationship of a new task from multiple human demonstrations and estimated target features. Based on the torque-angle relationship of the new task, a quasi-stiffness control approach is designed for a powered prosthesis. Finally, the proposed framework is validated through practical examples, including varying speed and incline walking tasks. The proposed framework has the potential to expand to variable walking tasks in daily life for transfemoral amputees.
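Since quasi-stiffness is defined above as the slope of the joint's torque-angle relationship, a small worked example may help. The sketch below estimates that slope with an ordinary least-squares line fit over one segment of samples; the fitting method, the function name, the units, and the sample values are assumptions for illustration and are not taken from the paper.

```python
def quasi_stiffness(angles, torques):
    """Least-squares slope of torque versus angle over one segment.

    Assumed units: angles in rad, torques in N*m, so the result is in N*m/rad.
    """
    n = len(angles)
    mean_angle = sum(angles) / n
    mean_torque = sum(torques) / n
    cov = sum((a - mean_angle) * (t - mean_torque) for a, t in zip(angles, torques))
    var = sum((a - mean_angle) ** 2 for a in angles)
    return cov / var


# Synthetic, perfectly linear segment: torque = 50 * angle + 2 (illustrative values).
angles = [0.0, 0.1, 0.2, 0.3]
torques = [50.0 * a + 2.0 for a in angles]
print(quasi_stiffness(angles, torques))
```

On real gait data the torque-angle curve is not a single line, so a slope like this would typically be computed per phase of the gait cycle rather than over the whole stride.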

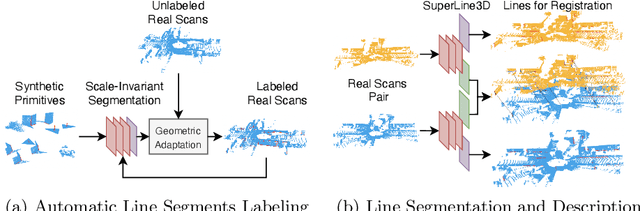

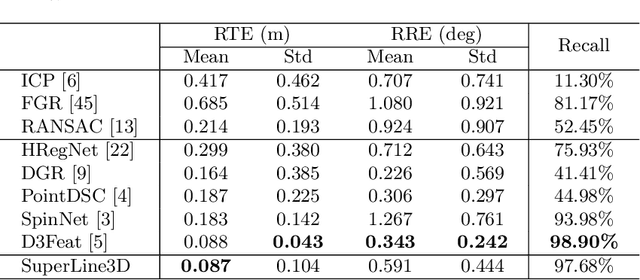

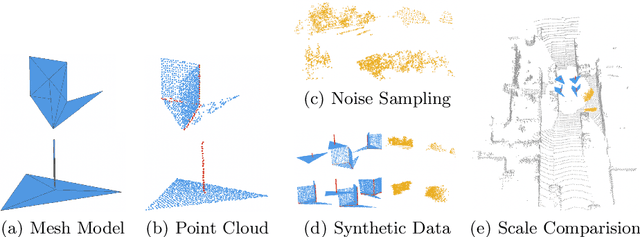

SuperLine3D: Self-supervised Line Segmentation and Description for LiDAR Point Cloud

Aug 03, 2022

Abstract:Poles and building edges are frequently observable objects on urban roads, conveying reliable hints for various computer vision tasks. To repetitively extract them as features and perform association between discrete LiDAR frames for registration, we propose the first learning-based feature segmentation and description model for 3D lines in LiDAR point clouds. To train our model without the time-consuming and tedious data labeling process, we first generate synthetic primitives for the basic appearance of target lines, and build an iterative line auto-labeling process to gradually refine line labels on real LiDAR scans. Our segmentation model can extract lines under arbitrary scale perturbations, and we use shared EdgeConv encoder layers to train the two segmentation and descriptor heads jointly. Based on the model, we can build a highly-available global registration module for point cloud registration, in conditions without initial transformation hints. Experiments have demonstrated that our line-based registration method is highly competitive with state-of-the-art point-based approaches. Our code is available at https://github.com/zxrzju/SuperLine3D.git.

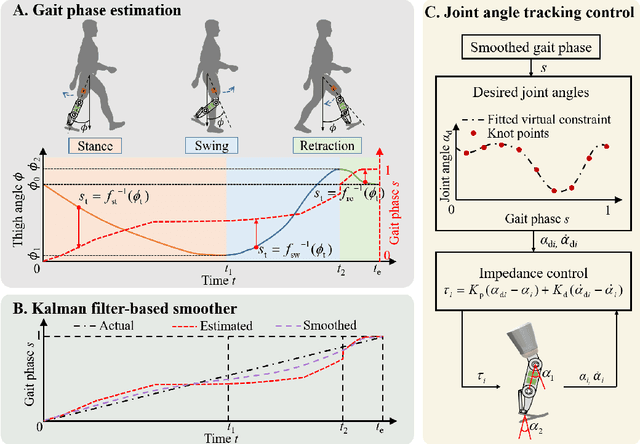

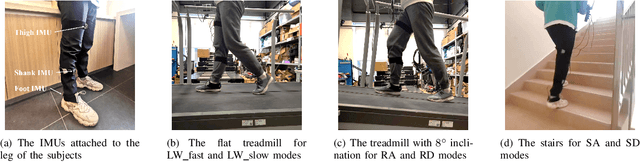

A Piecewise Monotonic Gait Phase Estimation Model for Controlling a Powered Transfemoral Prosthesis in Various Locomotion Modes

Jul 25, 2022

Abstract:Gait phase-based control is a trending research topic for walking-aid robots, especially robotic lower-limb prostheses. Gait phase estimation is a challenge for gait phase-based control. Previous research used the integral or the derivative of the human's thigh angle to estimate the gait phase, but accumulated measurement errors and noise can affect the estimation results. In this paper, a more robust gait phase estimation method is proposed using a unified form of piecewise monotonic gait phase-thigh angle models for various locomotion modes. The gait phase is estimated from only the thigh angle, which is a stable variable and avoids phase drifting. A Kalman filter-based smoother is designed to further suppress abrupt jumps in the estimated gait phase. Based on the proposed gait phase estimation method, a gait phase-based joint angle tracking controller is designed for a transfemoral prosthesis. The proposed gait estimation method, the gait phase smoother, and the controller are evaluated through offline analysis on walking data in various locomotion modes. The real-time performance of the gait phase-based controller is validated in an experiment on the transfemoral prosthesis.
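To make the smoothing step concrete, here is a minimal sketch of a scalar Kalman filter applied to a sequence of gait phase estimates. It is not the smoother from the paper: it assumes a hypothetical constant phase rate as the process model, ignores phase wrap-around at the end of a stride, and uses illustrative noise variances `q` and `r`.

```python
def kalman_smooth_phase(measurements, rate, dt, q=1e-4, r=1e-2):
    """Filter noisy gait phase estimates with a scalar Kalman filter.

    Assumed process model: phase advances by rate * dt per sample.
    q is the process noise variance, r the measurement noise variance.
    """
    x = measurements[0]  # initial phase estimate
    p = 1.0              # initial estimate variance (deliberately uncertain)
    smoothed = [x]
    for z in measurements[1:]:
        # Predict: advance the phase with the assumed constant rate.
        x_pred = x + rate * dt
        p_pred = p + q
        # Update: blend prediction and measurement using the Kalman gain.
        gain = p_pred / (p_pred + r)
        x = x_pred + gain * (z - x_pred)
        p = (1.0 - gain) * p_pred
        smoothed.append(x)
    return smoothed


# Phase ramp sampled at 100 Hz with one abrupt outlier at sample 50.
true_phase = [0.01 * k for k in range(100)]
spiky = list(true_phase)
spiky[50] += 0.3
smoothed = kalman_smooth_phase(spiky, rate=1.0, dt=0.01)
print(spiky[50] - true_phase[50], smoothed[50] - true_phase[50])
```

Because the gain settles well below one, an isolated jump in the raw estimate is only partly passed through to the smoothed phase, at the cost of slower tracking when the walking speed genuinely changes.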

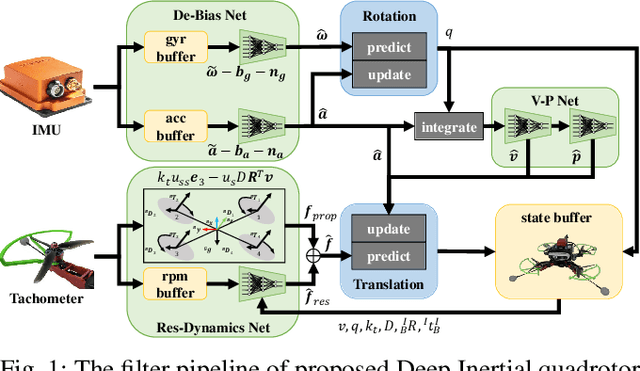

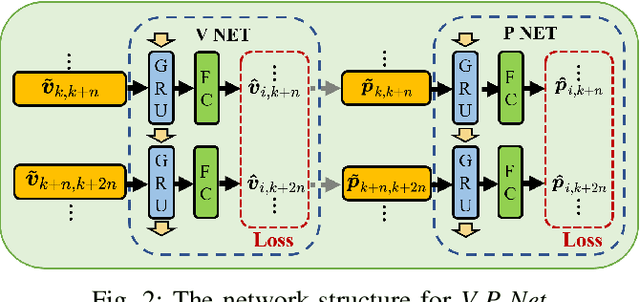

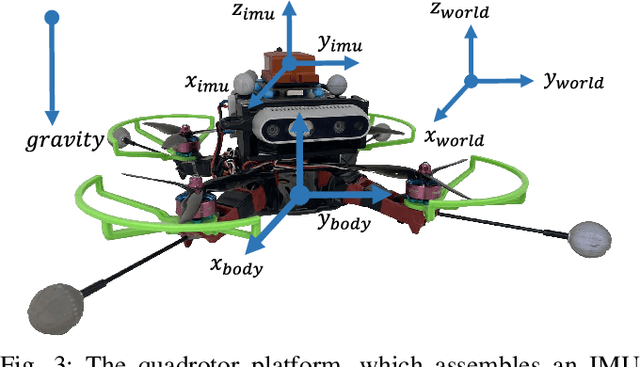

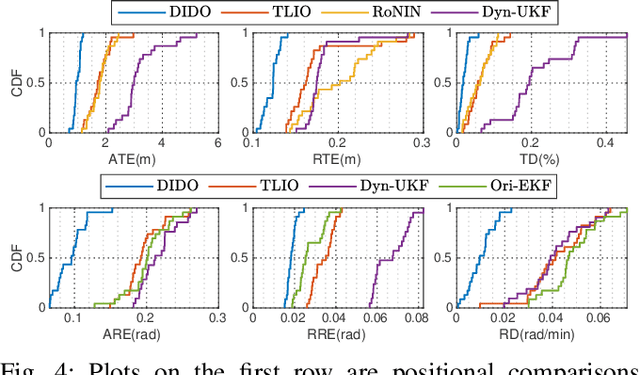

DIDO: Deep Inertial Quadrotor Dynamical Odometry

Mar 07, 2022

Abstract:In this work, we propose an interoceptive-only state estimation system for a quadrotor with deep neural network processing, where the quadrotor dynamics is considered as a perceptive supplement of the inertial kinematics. To improve the precision of multi-sensor fusion, we train cascaded networks on real-world quadrotor flight data to learn IMU kinematic properties, quadrotor dynamic characteristics, and motion states of the quadrotor along with their uncertainty information, respectively. This encoded information empowers us to address the issues of IMU bias stability, dynamic constraints, and multi-sensor calibration during sensor fusion. The above multi-source information is fused into a two-stage Extended Kalman Filter (EKF) framework for better estimation. Experiments have demonstrated the advantages of our proposed work over several conventional and learning-based methods.
