Kunyi Zhang

WING: Wheel-Inertial Neural Odometry with Ground Manifold Constraints

Jul 14, 2024

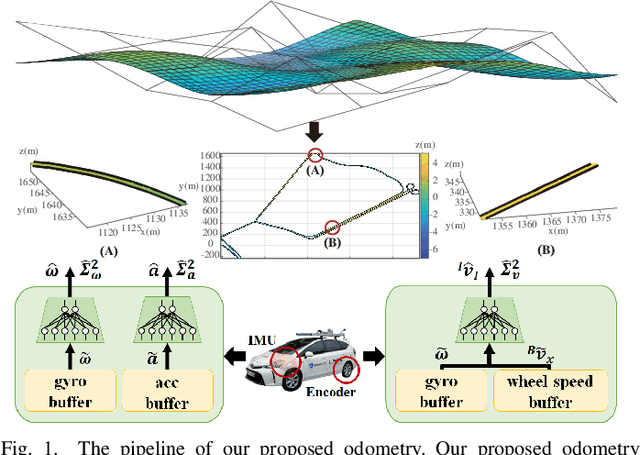

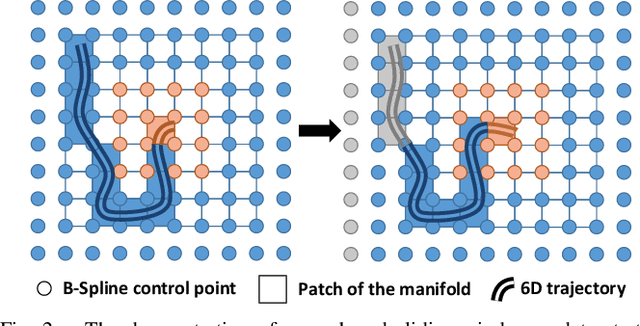

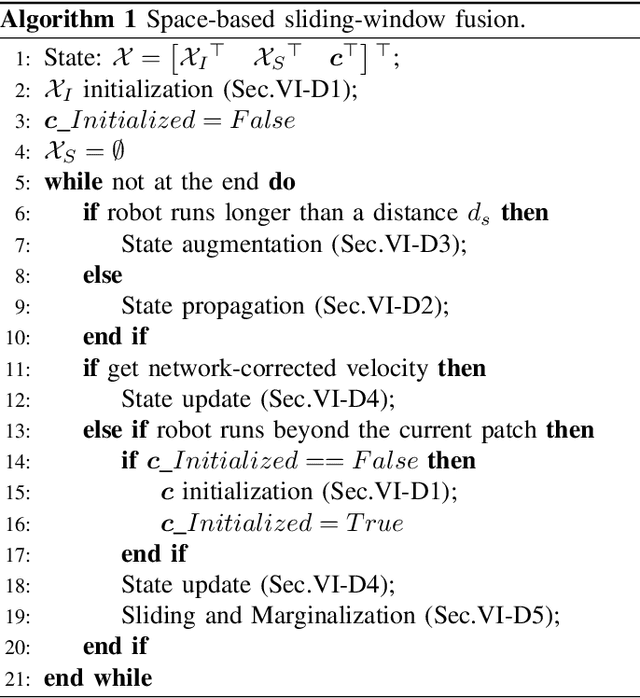

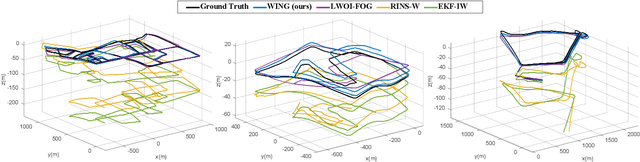

Abstract: In this paper, we propose an interoceptive-only odometry system for ground robots with neural network processing and soft constraints based on the assumption of a globally continuous ground manifold. Exteroceptive sensors such as cameras, GPS, and LiDAR may encounter difficulties in scenarios with poor illumination, indoor environments, dusty areas, and straight tunnels. Therefore, improving pose estimation accuracy using only interoceptive sensors is important for enhancing the reliability of the navigation system, even in the degraded scenarios mentioned above. However, interoceptive sensors such as IMUs and wheel encoders suffer from large drift due to noisy measurements. To overcome these challenges, the proposed system trains deep neural networks to correct the measurements from the IMU and wheel encoders while accounting for their uncertainty. Moreover, because ground robots can only travel on the ground, we model the ground surface as a globally continuous manifold using a dual cubic B-spline manifold, and use this soft constraint to further improve estimation accuracy. A novel space-based sliding-window filtering framework is proposed to fully exploit the $C^2$ continuity of the ground manifold soft constraints and to fuse all the information from raw measurements and neural networks in a yaw-independent attitude convention. Extensive experiments demonstrate that the proposed approach outperforms state-of-the-art learning-based interoceptive-only odometry methods.
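
As a rough illustration of why a cubic B-spline surface gives a smooth ground model, the sketch below evaluates a height field $z(x, y)$ from a grid of control heights. It is not the paper's implementation: reading the "dual cubic B-spline manifold" as a tensor-product uniform cubic B-spline over a regular grid is an assumption, and the names `cubic_bspline_basis`, `ground_height`, `ctrl`, and `cell` are hypothetical.

```python
import math


def cubic_bspline_basis(u):
    """Four uniform cubic B-spline weights for a local parameter u in [0, 1)."""
    return (
        (1 - u) ** 3 / 6.0,
        (3 * u**3 - 6 * u**2 + 4) / 6.0,
        (-3 * u**3 + 3 * u**2 + 3 * u + 1) / 6.0,
        u**3 / 6.0,
    )


def ground_height(ctrl, x, y, cell=1.0):
    """Height of a tensor-product cubic B-spline surface at (x, y).

    ctrl is a hypothetical 2D list of control heights on a regular grid
    with spacing `cell`; the query must leave room for a 4x4 patch.
    """
    ix, iy = math.floor(x / cell), math.floor(y / cell)
    u, v = x / cell - ix, y / cell - iy
    bu, bv = cubic_bspline_basis(u), cubic_bspline_basis(v)
    # Blend the 4x4 patch of control heights surrounding the query cell.
    return sum(
        bu[i] * bv[j] * ctrl[ix + i][iy + j]
        for i in range(4)
        for j in range(4)
    )


# Assumed example data: a tilted plane stored as control heights.
ctrl = [[0.5 * i + 0.25 * j for j in range(6)] for i in range(6)]
z = ground_height(ctrl, 1.3, 0.7)
```

Because each basis weight is a cubic polynomial and adjacent pieces match in value, slope, and curvature at the cell boundaries, the resulting surface is $C^2$ continuous, which is the property the abstract refers to.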

DIDO: Deep Inertial Quadrotor Dynamical Odometry

Mar 07, 2022

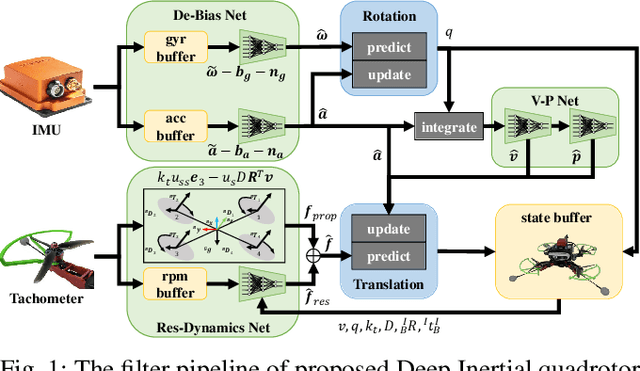

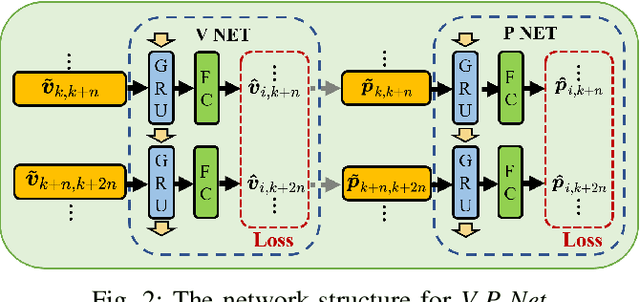

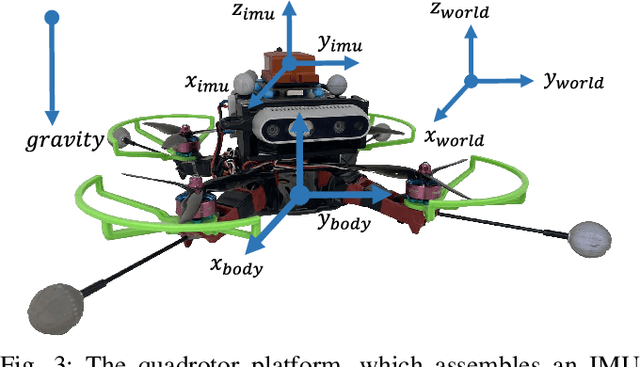

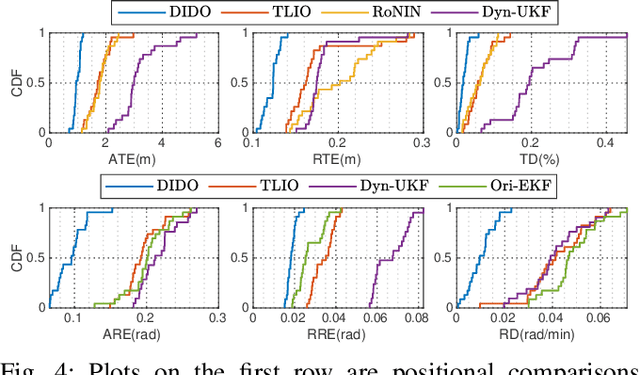

Abstract: In this work, we propose an interoceptive-only state estimation system for a quadrotor with deep neural network processing, where the quadrotor dynamics are considered as a perceptive supplement to the inertial kinematics. To improve the precision of multi-sensor fusion, we train cascaded networks on real-world quadrotor flight data to learn, respectively, the IMU kinematic properties, the quadrotor dynamic characteristics, and the motion states of the quadrotor, along with their uncertainty information. This encoded information enables us to address the issues of IMU bias stability, dynamic constraints, and multi-sensor calibration during sensor fusion. The above multi-source information is fused in a two-stage Extended Kalman Filter (EKF) framework for better estimation. Experiments demonstrate the advantages of the proposed work over several conventional and learning-based methods.

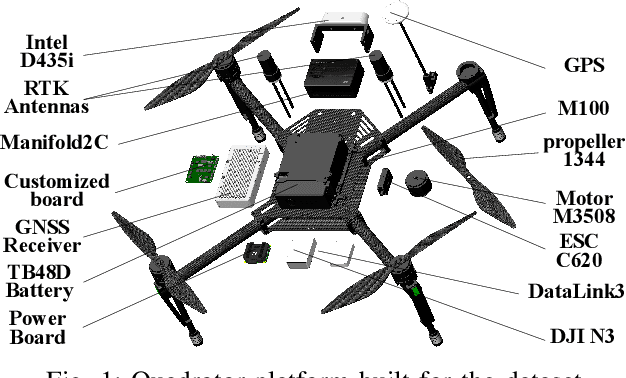

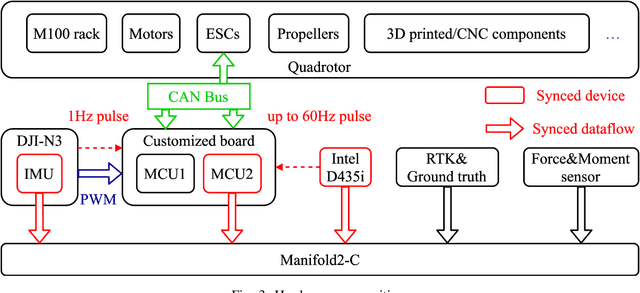

The Visual-Inertial-Dynamical UAV Dataset

Mar 20, 2021

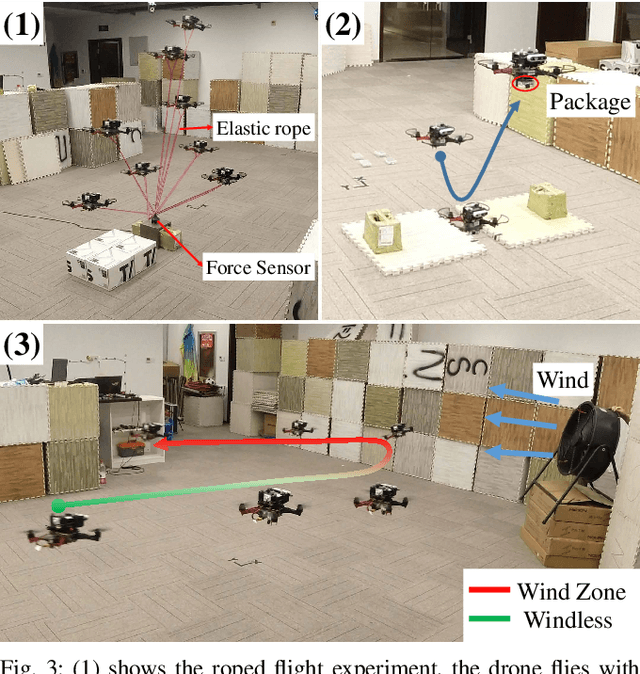

Abstract: Recently, the community has witnessed numerous datasets built for developing and testing state estimators. However, for some applications such as aerial transportation or search-and-rescue, the contact force or other disturbances must be perceived for robust planning and robust control, which is beyond the capacity of these datasets. This paper introduces a Visual-Inertial-Dynamical (VID) dataset, which not only focuses on traditional six-degrees-of-freedom (6DoF) pose estimation but also provides dynamical characteristics of the flight platform for external force perception or dynamics-aided estimation. The VID dataset contains hard-synchronized imagery and inertial measurements, with accurate ground truth trajectories for evaluating common visual-inertial estimators. Moreover, the proposed dataset highlights the measurements of rotor speed and motor current, dynamical inputs, and ground truth 6-axis force data to evaluate external force estimation. To the best of our knowledge, the proposed VID dataset is the first public dataset containing visual-inertial and complete dynamical information for pose and external force evaluation. The dataset and related open-source files are available at https://github.com/ZJU-FAST-Lab/VID-Dataset.

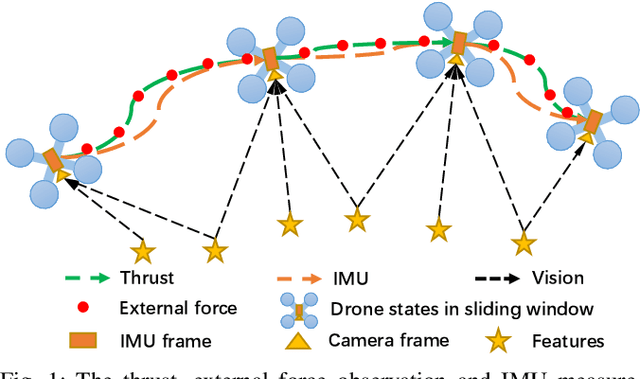

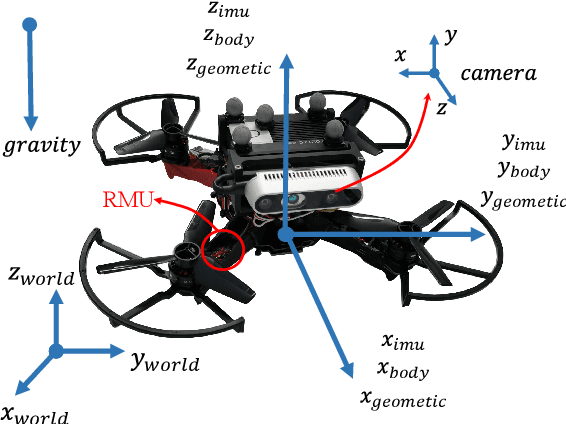

VID-Fusion: Robust Visual-Inertial-Dynamics Odometry for Accurate External Force Estimation

Nov 08, 2020

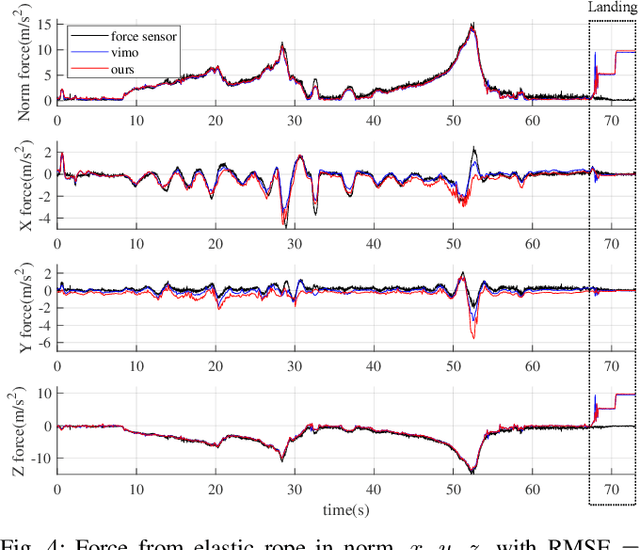

Abstract: Recently, quadrotors have been gaining significant attention in aerial transportation and delivery. In these scenarios, an accurate estimate of the external force is as essential as the 6 degree-of-freedom (DoF) pose, since it is of vital importance for planning and control of the vehicle. To this end, we propose a tightly-coupled Visual-Inertial-Dynamics (VID) system that simultaneously estimates the external force applied to the quadrotor along with the 6 DoF pose. Our method builds on the state-of-the-art optimization-based Visual-Inertial system, with a novel derivation of the dynamics and external force factor extended from VIMO. Using the proposed dynamics and external force factor, our estimator robustly and accurately estimates the external force even when it varies widely. Moreover, since we explicitly consider the influence of the external force, our method shows comparable or superior pose accuracy compared with VIMO and VINS-Mono, even when the external force ranges from negligible to significant. The robustness and effectiveness of the proposed method are validated by extensive real-world experiments and application scenario simulation. We will release an open-source package of this method along with datasets with ground truth force measurements for the reference of the community.
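
To make the link between inertial measurements, rotor thrust, and external force concrete, here is a minimal sketch of the underlying rigid-body relation. It is not the factor formulation from the paper. It only uses the fact that an ideal accelerometer measures specific force (all non-gravitational force divided by mass), so in the body frame $F_{ext} = m\,a_{meas} - T$. Sensor bias and noise are ignored, and the function name and numbers are hypothetical.

```python
def external_force_body(mass, accel_meas, thrust_body):
    """Body-frame external force from an ideal accelerometer reading.

    mass        : vehicle mass in kg
    accel_meas  : accelerometer specific force (m/s^2), body frame
    thrust_body : collective rotor thrust vector (N), body frame
    Assumes no sensor bias or noise; any unmodeled drag is lumped
    into the returned external force.
    """
    return tuple(mass * a - t for a, t in zip(accel_meas, thrust_body))


# Assumed example values: a 1.2 kg quadrotor producing 11 N of thrust.
force = external_force_body(1.2, (0.5, 0.0, 10.0), (0.0, 0.0, 11.0))
```

In practice the accelerometer bias and the thrust model are uncertain, which is one reason to estimate the force jointly with the pose inside an estimator rather than by direct subtraction.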
