Jinghang Li

AirGS: Real-Time 4D Gaussian Streaming for Free-Viewpoint Video Experiences

Dec 24, 2025

Abstract: Free-viewpoint video (FVV) enables immersive viewing experiences by allowing users to view scenes from arbitrary perspectives. As a prominent reconstruction technique for FVV generation, 4D Gaussian Splatting (4DGS) models dynamic scenes with time-varying 3D Gaussian ellipsoids and achieves high-quality rendering via fast rasterization. However, existing 4DGS approaches suffer from quality degradation over long sequences and impose substantial bandwidth and storage overhead, limiting their applicability in real-time and wide-scale deployments. Therefore, we present AirGS, a streaming-optimized 4DGS framework that rearchitects the training and delivery pipeline to enable high-quality, low-latency FVV experiences. AirGS converts Gaussian video streams into multi-channel 2D formats and intelligently identifies keyframes to enhance frame reconstruction quality. It further combines temporal coherence with inflation loss to reduce training time and representation size. To support communication-efficient transmission, AirGS models 4DGS delivery as an integer linear programming problem and designs a lightweight pruning level selection algorithm to adaptively prune the Gaussian updates to be transmitted, balancing reconstruction quality and bandwidth consumption. Extensive experiments demonstrate that AirGS reduces quality deviation in PSNR by more than 20% when the scene changes, maintains frame-level PSNR consistently above 30, accelerates training by 6 times, and reduces per-frame transmission size by nearly 50% compared to the SOTA 4DGS approaches.
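The abstract does not spell out the pruning level selection algorithm, so the following is only a minimal sketch of one plausible greedy heuristic for the same trade-off: choose one pruning level per frame so that total transmitted size stays within a bandwidth budget while quality is kept as high as possible. The names `PruningLevel`, `select_levels`, `size_kb`, and `quality` are hypothetical and do not come from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PruningLevel:
    size_kb: float  # hypothetical size of the pruned Gaussian update
    quality: float  # hypothetical quality score (e.g. PSNR) at this level


def select_levels(frames, budget_kb):
    """Greedy stand-in for the ILP: pick one level index per frame.

    `frames` is a list of per-frame level lists, each sorted by increasing size.
    Every frame starts at its most aggressive pruning level; the upgrade with the
    best quality gain per extra kilobyte is applied until nothing else fits.
    """
    choice = [0] * len(frames)
    used = sum(levels[0].size_kb for levels in frames)
    if used > budget_kb:
        raise ValueError("budget too small even at the most aggressive pruning")
    while True:
        best = None  # (gain per kB, frame index, extra kB)
        for i, levels in enumerate(frames):
            nxt = choice[i] + 1
            if nxt >= len(levels):
                continue  # frame is already at its least pruned level
            extra = levels[nxt].size_kb - levels[choice[i]].size_kb
            gain = levels[nxt].quality - levels[choice[i]].quality
            if extra <= 0 or used + extra > budget_kb:
                continue  # upgrade is malformed or does not fit the budget
            ratio = gain / extra
            if best is None or ratio > best[0]:
                best = (ratio, i, extra)
        if best is None:
            return choice
        _, i, extra = best
        choice[i] += 1
        used += extra
```

A greedy pass like this is not guaranteed to match the optimum of the integer program; it only illustrates why a lightweight selection rule can be attractive when decisions must be made per frame at streaming time.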

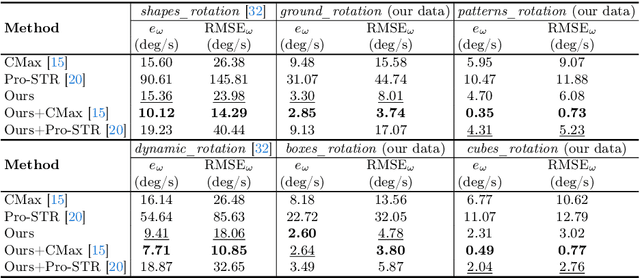

EvTTC: An Event Camera Dataset for Time-to-Collision Estimation

Dec 06, 2024

Abstract: Time-to-Collision (TTC) estimation lies at the core of the forward collision warning (FCW) functionality, which is key to all Automatic Emergency Braking (AEB) systems. Although the success of solutions using frame-based cameras (e.g., Mobileye's solutions) has been witnessed in normal situations, some extreme cases, such as the sudden variation in the relative speed of leading vehicles and the sudden appearance of pedestrians, still pose significant risks that cannot be handled. This is due to the inherent imaging principles of frame-based cameras, where the time interval between adjacent exposures introduces considerable system latency to AEB. Event cameras, as a novel bio-inspired sensor, offer ultra-high temporal resolution and can asynchronously report brightness changes at the microsecond level. To explore the potential of event cameras in the above-mentioned challenging cases, we propose EvTTC, which is, to the best of our knowledge, the first multi-sensor dataset focusing on TTC tasks under high-relative-speed scenarios. EvTTC consists of data collected using standard cameras and event cameras, covering various potential collision scenarios in daily driving and involving multiple collision objects. Additionally, LiDAR and GNSS/INS measurements are provided for the calculation of ground-truth TTC. Considering the high cost of testing TTC algorithms on full-scale mobile platforms, we also provide a small-scale TTC testbed for experimental validation and data augmentation. All the data and the design of the testbed are open sourced, and they can serve as a benchmark that will facilitate the development of vision-based TTC techniques.
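The abstract does not describe how ground-truth TTC is derived from the LiDAR and GNSS/INS measurements. As a rough illustration only, the textbook definition under a constant closing-speed assumption is the remaining range divided by the closing speed. The function names and the finite-difference speed estimate below are assumptions, not the dataset's procedure.

```python
def closing_speed(range_prev_m, range_curr_m, dt_s):
    """Finite-difference closing speed in m/s; positive when the gap is shrinking."""
    return (range_prev_m - range_curr_m) / dt_s


def time_to_collision(range_m, closing_speed_mps):
    """TTC in seconds, assuming the closing speed stays constant.

    Returns infinity when the objects are not approaching each other.
    """
    if closing_speed_mps <= 0.0:
        return float("inf")
    return range_m / closing_speed_mps
```

For example, a gap that shrinks from 20 m to 19 m over 0.1 s gives a closing speed of about 10 m/s, and a TTC of about 1.9 s at the 19 m range.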

Motion and Structure from Event-based Normal Flow

Jul 19, 2024

Abstract: Recovering the camera motion and scene geometry from visual data is a fundamental problem in the field of computer vision. Its success in standard vision is attributed to the maturity of feature extraction, data association and multi-view geometry. The recent emergence of neuromorphic event-based cameras places great demands on approaches that use raw event data as input to solve this fundamental problem. Existing state-of-the-art solutions typically implicitly infer data association by iteratively reversing the event data generation process. However, the nonlinear nature of these methods limits their applicability in real-time tasks, and the constant-motion assumption leads to unstable results under agile motion. To this end, we rethink the problem formulation in a way that aligns better with the differential working principle of event cameras. We show that the event-based normal flow can be used, via the proposed geometric error term, as an alternative to the full flow in solving a family of geometric problems that involve instantaneous first-order kinematics and scene geometry. Furthermore, we develop a fast linear solver and a continuous-time nonlinear solver on top of the proposed geometric error term. Experiments on both synthetic and real data show the superiority of our linear solver in terms of accuracy and efficiency, and indicate its complementary feature as an initialization method for existing nonlinear solvers. Besides, our continuous-time nonlinear solver exhibits exceptional capability in accommodating sudden variations in motion since it does not rely on the constant-motion assumption.
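For readers unfamiliar with the term, normal flow is the component of the full optical flow along the local image gradient direction; the component along the edge is unobservable. The sketch below shows only this standard projection, not the paper's geometric error term or solvers. The function name and the tuple-based representation are illustrative assumptions.

```python
import math


def normal_flow(full_flow, gradient):
    """Project a 2D flow vector onto the unit image-gradient direction."""
    gx, gy = gradient
    norm = math.hypot(gx, gy)
    if norm == 0.0:
        raise ValueError("gradient must be non-zero to define a normal direction")
    nx, ny = gx / norm, gy / norm
    # Signed magnitude of the flow along the gradient direction.
    magnitude = full_flow[0] * nx + full_flow[1] * ny
    return (magnitude * nx, magnitude * ny)
```

Two different full flows, such as `(3.0, 4.0)` and `(3.0, -7.0)`, yield the same normal flow for a horizontal gradient, which is the partial observability that any method built on normal flow has to account for.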

Event-Aided Time-to-Collision Estimation for Autonomous Driving

Jul 10, 2024

Abstract: Predicting a potential collision with leading vehicles is an essential functionality of any autonomous/assisted driving system. One bottleneck of existing vision-based solutions is that their updating rate is limited to the frame rate of standard cameras used. In this paper, we present a novel method that estimates the time to collision using a neuromorphic event-based camera, a biologically inspired visual sensor that can sense at exactly the same rate as scene dynamics. The core of the proposed algorithm consists of a two-step approach for efficient and accurate geometric model fitting on event data in a coarse-to-fine manner. The first step is a robust linear solver based on a novel geometric measurement that overcomes the partial observability of event-based normal flow. The second step further refines the resulting model via a spatio-temporal registration process formulated as a nonlinear optimization problem. Experiments on both synthetic and real data demonstrate the effectiveness of the proposed method, outperforming other alternative methods in terms of efficiency and accuracy.

BeNeRF: Neural Radiance Fields from a Single Blurry Image and Event Stream

Jul 03, 2024

Abstract: Neural implicit representation of visual scenes has attracted a lot of attention in recent research of computer vision and graphics. Most prior methods focus on how to reconstruct 3D scene representation from a set of images. In this work, we demonstrate the possibility to recover the neural radiance fields (NeRF) from a single blurry image and its corresponding event stream. We model the camera motion with a cubic B-Spline in SE(3) space. Both the blurry image and the brightness change within a time interval can then be synthesized from the 3D scene representation given the 6-DoF poses interpolated from the cubic B-Spline. Our method can jointly learn both the implicit neural scene representation and recover the camera motion by minimizing the differences between the synthesized data and the real measurements without pre-computed camera poses from COLMAP. We evaluate the proposed method with both synthetic and real datasets. The experimental results demonstrate that we are able to render view-consistent latent sharp images from the learned NeRF and bring a blurry image alive in high quality. Code and data are available at https://github.com/WU-CVGL/BeNeRF.
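The idea of synthesizing a blurry image from poses interpolated along a spline can be sketched in a heavily simplified form. The code below is an illustration under strong assumptions, not the BeNeRF implementation: the pose is reduced to a single scalar instead of an SE(3) element, `render` is a hypothetical callable standing in for the NeRF, and blur is modeled as a plain average of sharp renders over the exposure.

```python
def cubic_bspline(p0, p1, p2, p3, u):
    """Evaluate one uniform cubic B-spline segment at u in [0, 1].

    Scalar control points are used here for simplicity; the basis weights
    are non-negative and sum to 1 for every u in the interval.
    """
    b0 = (1 - u) ** 3 / 6
    b1 = (3 * u**3 - 6 * u**2 + 4) / 6
    b2 = (-3 * u**3 + 3 * u**2 + 3 * u + 1) / 6
    b3 = u**3 / 6
    return b0 * p0 + b1 * p1 + b2 * p2 + b3 * p3


def synthesize_blur(render, control_points, num_samples=8):
    """Average `render(pose)` over poses sampled uniformly along the spline."""
    if num_samples < 2:
        raise ValueError("need at least two samples across the exposure")
    total = 0.0
    for k in range(num_samples):
        u = k / (num_samples - 1)
        total += render(cubic_bspline(*control_points, u))
    return total / num_samples
```

In an optimization setting, the difference between such a synthesized blurry value and the measured one would drive updates to both the scene representation and the spline control points; the event-based brightness-change term mentioned in the abstract is omitted here.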

wmh_seg: Transformer based U-Net for Robust and Automatic White Matter Hyperintensity Segmentation across 1.5T, 3T and 7T

Feb 20, 2024

Abstract: White matter hyperintensity (WMH) remains the top imaging biomarker for neurodegenerative diseases. Robust and accurate segmentation of WMH holds paramount significance for neuroimaging studies. The growing shift from 3T to 7T MRI necessitates robust tools for harmonized segmentation across field strengths and artifacts. Recent deep learning models exhibit promise in WMH segmentation but still face challenges, including diverse training data representation and limited analysis of MRI artifacts' impact. To address these, we introduce wmh_seg, a novel deep learning model leveraging a transformer-based encoder from SegFormer. wmh_seg is trained on an unmatched dataset, including 1.5T, 3T, and 7T FLAIR images from various sources, along with artificially added MR artifacts. Our approach bridges gaps in training diversity and artifact analysis. Our model demonstrated stable performance across magnetic field strengths, scanner manufacturers, and common MR imaging artifacts. Despite the unique inhomogeneity artifacts on ultra-high field MR images, our model still offers robust and stable segmentation on 7T FLAIR images. Our model, to date, is the first that offers quality white matter lesion segmentation on 7T FLAIR images.

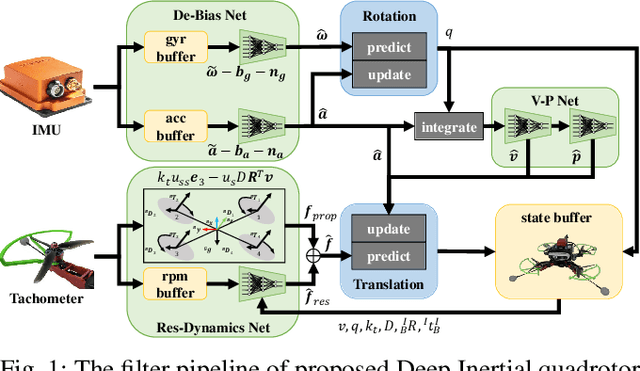

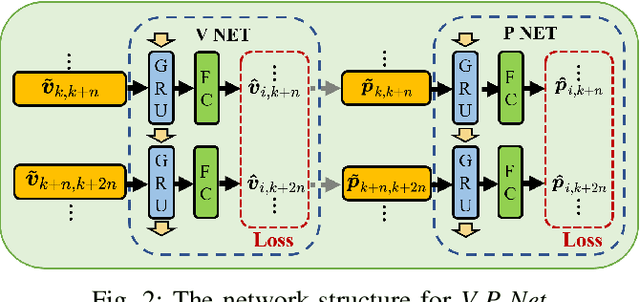

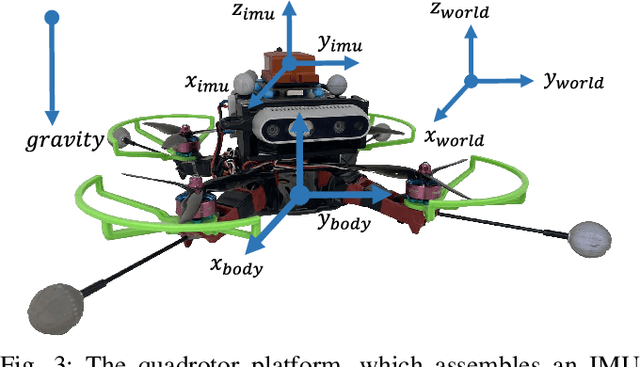

DIDO: Deep Inertial Quadrotor Dynamical Odometry

Mar 07, 2022

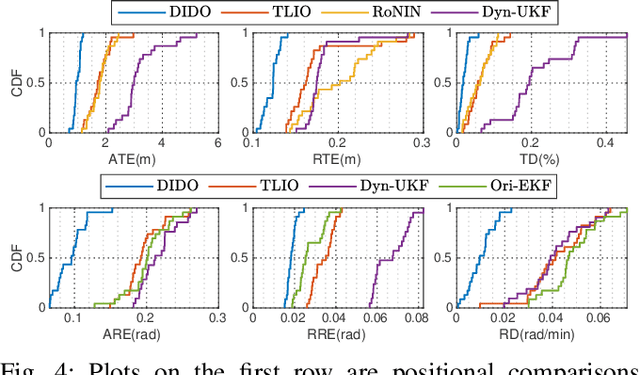

Abstract: In this work, we propose an interoceptive-only state estimation system for a quadrotor with deep neural network processing, where the quadrotor dynamics is considered as a perceptive supplement of the inertial kinematics. To improve the precision of multi-sensor fusion, we train cascaded networks on real-world quadrotor flight data to learn IMU kinematic properties, quadrotor dynamic characteristics, and motion states of the quadrotor along with their uncertainty information, respectively. This encoded information empowers us to address the issues of IMU bias stability, dynamic constraints, and multi-sensor calibration during sensor fusion. The above multi-source information is fused into a two-stage Extended Kalman Filter (EKF) framework for better estimation. Experiments have demonstrated the advantages of our proposed work over several conventional and learning-based methods.
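The abstract does not detail the two-stage EKF, so the following is only a scalar, linear Kalman filter sketch showing how a quantity predicted with its own uncertainty (for instance, a network output with a variance) can be fused with a propagated state. The function names and the one-dimensional state are assumptions made for illustration.

```python
def kf_predict(x, p, u, q):
    """Propagate state x and variance p with increment u and process noise q."""
    return x + u, p + q


def kf_update(x, p, z, r):
    """Fuse a direct measurement z with variance r into the state estimate."""
    k = p / (p + r)  # Kalman gain: trust the measurement more when r is small
    return x + k * (z - x), (1 - k) * p
```

With equal prior and measurement variances, `kf_update(0.0, 1.0, 2.0, 1.0)` lands halfway between the prior and the measurement and halves the variance. A full EKF would replace the scalar arithmetic with Jacobians of the nonlinear motion and measurement models.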

Driver-Specific Risk Recognition in Interactive Driving Scenarios using Graph Representation

Nov 11, 2021

Abstract: This paper presents a driver-specific risk recognition framework for autonomous vehicles that can extract inter-vehicle interactions. This extraction is carried out for urban driving scenarios in a driver-cognitive manner to improve the recognition accuracy of risky scenes. First, clustering analysis is applied to the operation data of drivers for learning the subjective assessment of risky scenes of different drivers and generating the corresponding risk label for each scene. Second, the graph representation model (GRM) is adopted to unify and construct the features of dynamic vehicles, inter-vehicle interactions and static traffic markings in real driving scenes into graphs. The driver-specific risk label provides ground truth to capture the risk evaluation criteria of different drivers. In addition, the graph model represents multiple features of the driving scenes. Therefore, the proposed framework can learn the risk-evaluating pattern of driving scenes of different drivers and establish driver-specific risk identifiers. Last, the performance of the proposed framework is evaluated via experiments conducted using real-world urban driving datasets collected by multiple drivers. The results show that the risks and their levels in real driving environments can be accurately recognized by the proposed framework.

Prediction of Pedestrian Spatiotemporal Risk Levels for Intelligent Vehicles: A Data-driven Approach

Nov 06, 2021

Abstract: In recent years, road safety has attracted significant attention from researchers and practitioners in the intelligent transport systems domain. As one of the most common and vulnerable groups of road users, pedestrians cause great concern due to their unpredictable behavior and movement, as subtle misunderstandings in vehicle-pedestrian interaction can easily lead to risky situations or collisions. Existing methods use either predefined collision-based models or human-labeling approaches to estimate the pedestrians' risks. These approaches are usually limited by their poor generalization ability and lack of consideration of interactions between the ego vehicle and a pedestrian. This work tackles the listed problems by proposing a Pedestrian Risk Level Prediction (PRLP) system. The system consists of three modules. Firstly, vehicle-perspective pedestrian data are collected. Since the data contains information regarding the movement of both the ego vehicle and pedestrian, it can simplify the prediction of spatiotemporal features in an interaction-aware fashion. Using the long short-term memory model, the pedestrian trajectory prediction module predicts their spatiotemporal features in the subsequent five frames. As the predicted trajectory follows certain interaction and risk patterns, a hybrid clustering and classification method is adopted to explore the risk patterns in the spatiotemporal features and train a risk level classifier using the learned patterns. Upon predicting the spatiotemporal features of pedestrians and identifying the corresponding risk level, the risk patterns between the ego vehicle and pedestrians are determined. Experimental results verified the capability of the PRLP system to predict the risk level of pedestrians, thus supporting the collision risk assessment of intelligent vehicles and providing safety warnings to both vehicles and pedestrians.

Driver Behavior Modelling at the Urban Intersection via Canonical Correlation Analysis

Jul 11, 2020

Abstract: The urban intersection is a typically dynamic and complex scenario for intelligent vehicles, which involves a variety of driving behaviors and traffic participants. Accurately modelling the driver behavior at the intersection is essential for intelligent transportation systems (ITS). Previous research mainly focuses on using attention mechanisms to model the degree of correlation. In this research, a canonical correlation analysis (CCA)-based framework is proposed. The value of canonical correlation is used for feature selection. Gaussian mixture model and Gaussian process regression are applied for driver behavior modelling. Two experiments using simulated and naturalistic driving data are designed for verification. Experimental results are consistent with the driver's judgment. Comparative studies show that the proposed framework can obtain a better performance.
