Ziming Ding

External Forces Resilient Safe Motion Planning for Quadrotor

Mar 20, 2021

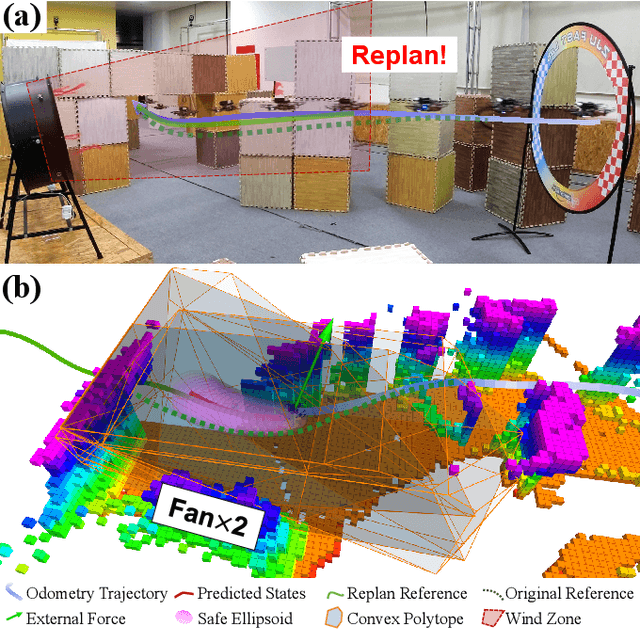

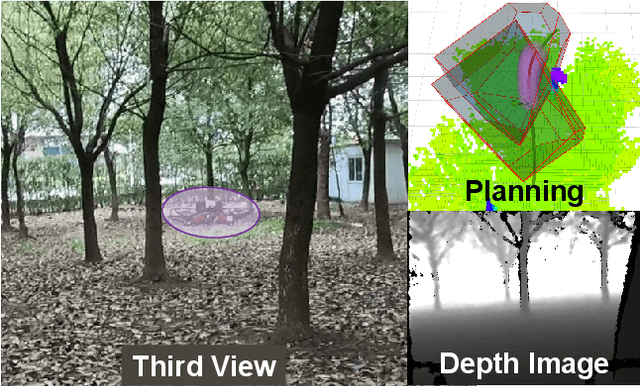

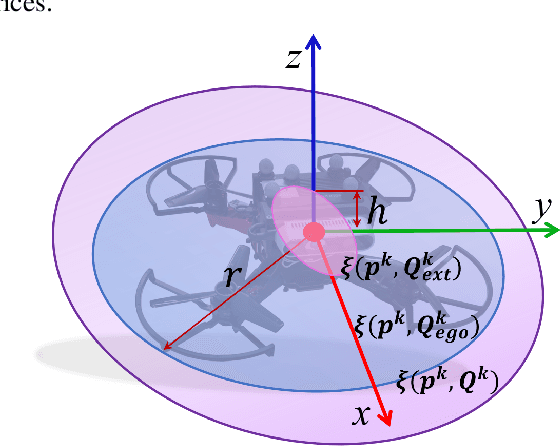

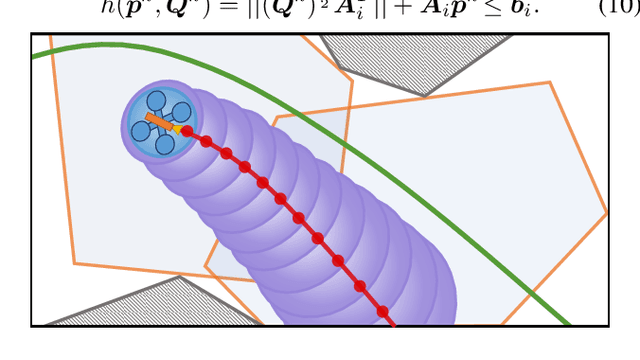

Abstract: Adaptive autonomous navigation with no prior knowledge of external disturbances is of great significance for quadrotors in complex and unknown environments. The mainstream approach to handling external disturbances is disturbance-rejection control combined with path tracking. However, robust control that compensates for tracking deviations does not adequately account for energy consumption, and the reference path itself can become risky and intractable under disturbance. With recent advances in external force estimation, it is possible to incorporate a real-time force estimator to develop more robust and safe planning frameworks. This paper proposes a systematic (re)planning framework that can resiliently generate safe trajectories under volatile conditions. First, a front-end kinodynamic path is searched with force-biased motion primitives. Then we develop a nonlinear model predictive control (NMPC) as a local planner with Hamilton-Jacobi (HJ) forward reachability analysis for the error dynamics caused by external forces. It guarantees collision-free trajectories by constraining the ellipsoid of the quadrotor body, expanded with the forward reachable sets (FRSs), to lie within safe convex polytopes. Our method is validated in simulations and real-world experiments with different sources of external forces.

The Visual-Inertial-Dynamical UAV Dataset

Mar 20, 2021

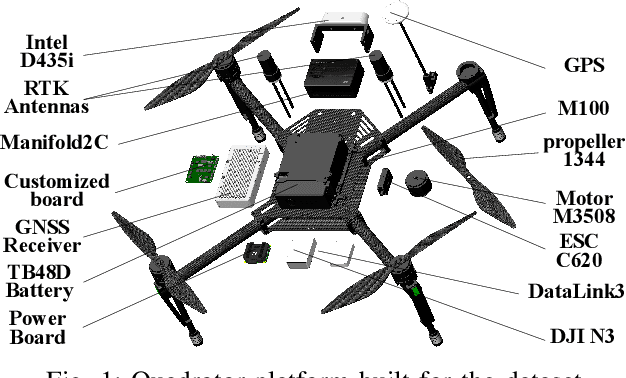

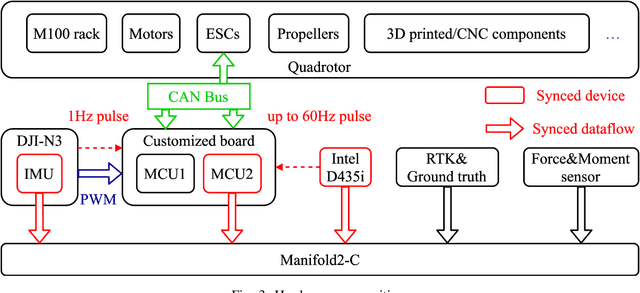

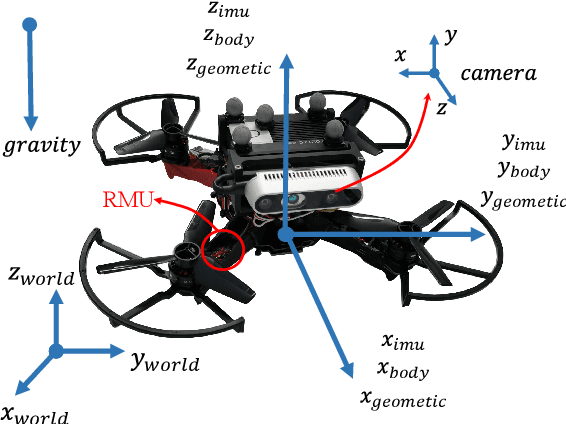

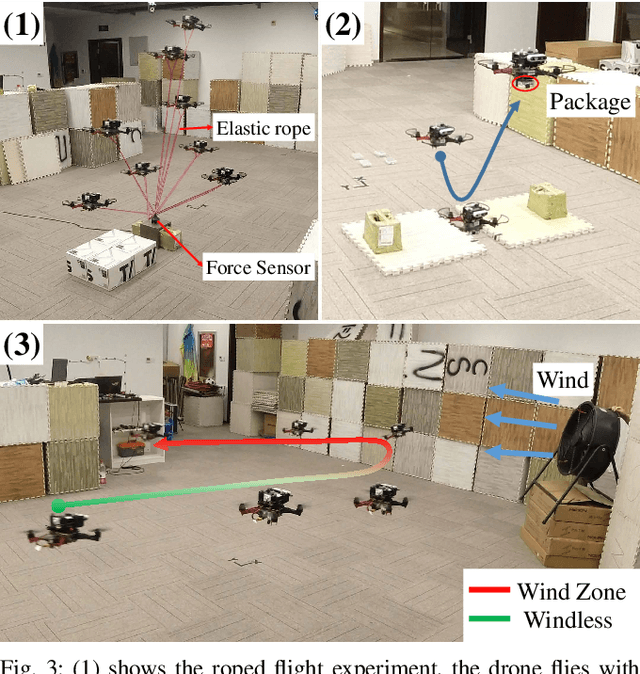

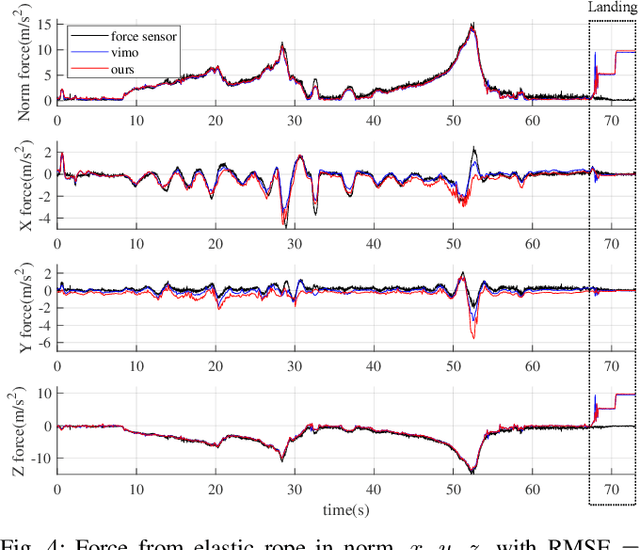

Abstract: Recently, the community has witnessed numerous datasets built for developing and testing state estimators. However, for some applications such as aerial transportation or search-and-rescue, the contact force or other disturbances must be perceived for robust planning and robust control, which is beyond the capacity of these datasets. This paper introduces a Visual-Inertial-Dynamical (VID) dataset, which not only focuses on traditional six degrees of freedom (6DOF) pose estimation but also provides dynamical characteristics of the flight platform for external force perception or dynamics-aided estimation. The VID dataset contains hard-synchronized imagery and inertial measurements, with accurate ground truth trajectories for evaluating common visual-inertial estimators. Moreover, the proposed dataset highlights the measurements of rotor speed and motor current, dynamical inputs, and ground truth 6-axis force data to evaluate external force estimation. To the best of our knowledge, the proposed VID dataset is the first public dataset containing visual-inertial and complete dynamical information for pose and external force evaluation. The dataset and related open source files are available at https://github.com/ZJU-FAST-Lab/VID-Dataset.

VID-Fusion: Robust Visual-Inertial-Dynamics Odometry for Accurate External Force Estimation

Nov 08, 2020

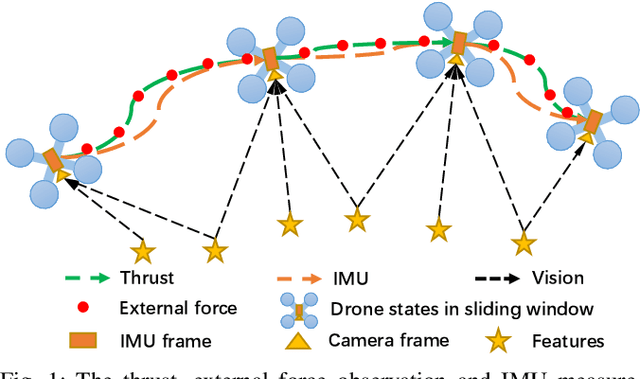

Abstract: Quadrotors have recently gained significant attention in aerial transportation and delivery. In these scenarios, an accurate estimate of the external force is as essential as the 6 degree-of-freedom (DoF) pose, since it is of vital importance for planning and control of the vehicle. To this end, we propose a tightly-coupled Visual-Inertial-Dynamics (VID) system that simultaneously estimates the external force applied to the quadrotor along with the 6 DoF pose. Our method builds on the state-of-the-art optimization-based Visual-Inertial system, with a novel derivation of the dynamics and external force factor extended from VIMO. Utilizing the proposed dynamics and external force factor, our estimator robustly and accurately estimates the external force even when it varies widely. Moreover, since we explicitly consider the influence of the external force, when compared with VIMO and VINS-Mono, our method shows comparable and superior pose accuracy, even when the external force ranges from negligible to significant. The robustness and effectiveness of the proposed method are validated by extensive real-world experiments and application scenario simulation. We will release an open-source package of this method along with datasets with ground truth force measurements for the reference of the community.

A Convolutional Neural Network for Aspect Sentiment Classification

Jul 04, 2018

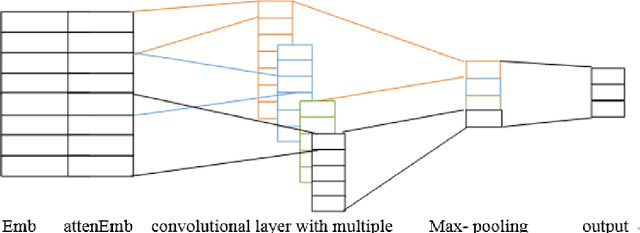

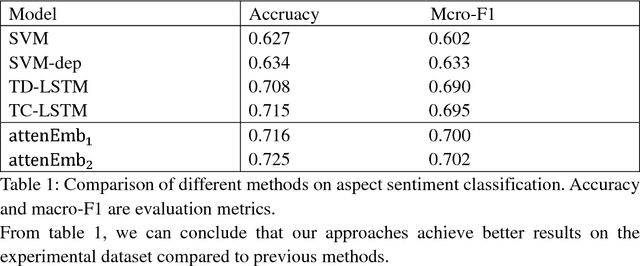

Abstract: With the development of the Internet, natural language processing (NLP), in which sentiment analysis is an important task, has become vital in information processing. Sentiment analysis includes aspect sentiment classification. Aspect-level sentiment analysis provides more complete and in-depth results by focusing on individual aspects. Different context words in a sentence influence its sentiment polarity to different degrees, and polarity varies with the aspect under consideration. Take the sentence 'I bought a new camera. The picture quality is amazing but the battery life is too short.' as an example. If the aspect is picture quality, then the expected sentiment polarity is 'positive'; if the battery life aspect is considered, then the sentiment polarity should be 'negative'. The aspect is therefore important to consider when we explore sentiment in a sentence. The recurrent neural network (RNN) is regarded as a good model for natural language processing, and RNNs have achieved good performance on aspect sentiment classification, including Target-Dependent LSTM (TD-LSTM), Target-Connection LSTM (TC-LSTM) (Tang, 2015a, b), AE-LSTM, AT-LSTM, and AEAT-LSTM (Wang et al., 2016). There is also extensive literature on sentiment classification using convolutional neural networks, but little on aspect sentiment classification using convolutional neural networks. In our paper, we develop attention-based input layers in which aspect information is taken into account at the input layer. We then incorporate the attention-based input layers into a convolutional neural network (CNN) to introduce context word information. In our experiments on a benchmark dataset from Twitter, incorporating aspect information into the CNN improves its aspect sentiment classification performance without using a syntactic parser or external sentiment lexicons, and it achieves better performance than other models.
