Kuan Xu

Every Step Evolves: Scaling Reinforcement Learning for Trillion-Scale Thinking Model

Oct 21, 2025

Abstract: We present Ring-1T, the first open-source, state-of-the-art thinking model at the trillion-parameter scale. It has 1 trillion total parameters and activates approximately 50 billion per token. Training such models at a trillion-parameter scale introduces unprecedented challenges, including train-inference misalignment, inefficiencies in rollout processing, and bottlenecks in the RL system. To address these, we pioneer three interconnected innovations: (1) IcePop stabilizes RL training via token-level discrepancy masking and clipping, resolving instability from training-inference mismatches; (2) C3PO++ improves resource utilization for long rollouts under a token budget by dynamically partitioning them, thereby obtaining high time efficiency; and (3) ASystem, a high-performance RL framework designed to overcome the systemic bottlenecks that impede trillion-parameter model training. Ring-1T delivers breakthrough results across critical benchmarks: 93.4 on AIME-2025, 86.72 on HMMT-2025, 2088 on CodeForces, and 55.94 on ARC-AGI-v1. Notably, it attains a silver medal-level result on the IMO-2025, underscoring its exceptional reasoning capabilities. By releasing the complete 1T parameter MoE model, we provide the research community with direct access to cutting-edge reasoning capabilities. This contribution marks a significant milestone in democratizing large-scale reasoning intelligence and establishes a new baseline for open-source model performance.
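To make "token-level discrepancy masking" concrete, here is a minimal sketch of one way such a mask could work. It is not taken from the paper: the function names, the ratio-based test, and the thresholds `low` and `high` are all illustrative assumptions, and the clipping half of the method is not shown. The idea sketched is that tokens whose probability under the training engine differs too much from their probability under the inference engine are dropped from the loss.

```python
import math


def discrepancy_mask(train_logprobs, infer_logprobs, low=0.5, high=2.0):
    """Return (mask, ratios) for one sampled sequence.

    ratios[i] = p_train(token_i) / p_infer(token_i).
    mask[i] is 1.0 when the ratio lies in [low, high], else 0.0.
    `low` and `high` are hypothetical thresholds chosen for illustration.
    """
    ratios = [math.exp(t - r) for t, r in zip(train_logprobs, infer_logprobs)]
    mask = [1.0 if low <= k <= high else 0.0 for k in ratios]
    return mask, ratios


def masked_mean_loss(token_losses, mask):
    """Average the per-token losses over the tokens that survive the mask."""
    kept = sum(mask)
    if kept == 0:
        return 0.0
    return sum(l * m for l, m in zip(token_losses, mask)) / kept


if __name__ == "__main__":
    # The second token has a large train/inference mismatch and is masked out.
    train_lp = [-1.0, -2.0, -0.5]
    infer_lp = [-1.1, -0.2, -0.5]
    mask, ratios = discrepancy_mask(train_lp, infer_lp)
    print(mask)
    print(masked_mean_loss([0.4, 3.0, 0.2], mask))
```

In this toy run only the first and third tokens contribute to the loss, so a single badly mismatched token cannot dominate the update.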

Ring-lite: Scalable Reasoning via C3PO-Stabilized Reinforcement Learning for LLMs

Jun 18, 2025

Abstract: We present Ring-lite, a Mixture-of-Experts (MoE)-based large language model optimized via reinforcement learning (RL) to achieve efficient and robust reasoning capabilities. Built upon the publicly available Ling-lite model, a 16.8 billion parameter model with 2.75 billion activated parameters, our approach matches the performance of state-of-the-art (SOTA) small-scale reasoning models on challenging benchmarks (e.g., AIME, LiveCodeBench, GPQA-Diamond) while activating only one-third of the parameters required by comparable models. To accomplish this, we introduce a joint training pipeline integrating distillation with RL, revealing undocumented challenges in MoE RL training. First, we identify optimization instability during RL training, and we propose Constrained Contextual Computation Policy Optimization (C3PO), a novel approach that enhances training stability and improves computational throughput via an algorithm-system co-design methodology. Second, we empirically demonstrate that selecting distillation checkpoints based on entropy loss for RL training, rather than validation metrics, yields superior performance-efficiency trade-offs in subsequent RL training. Finally, we develop a two-stage training paradigm to harmonize multi-domain data integration, addressing domain conflicts that arise in training with mixed datasets. We will release the model, dataset, and code.

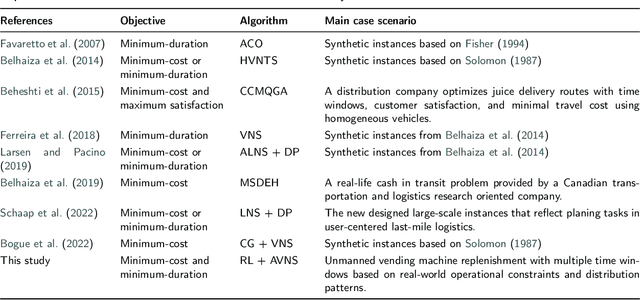

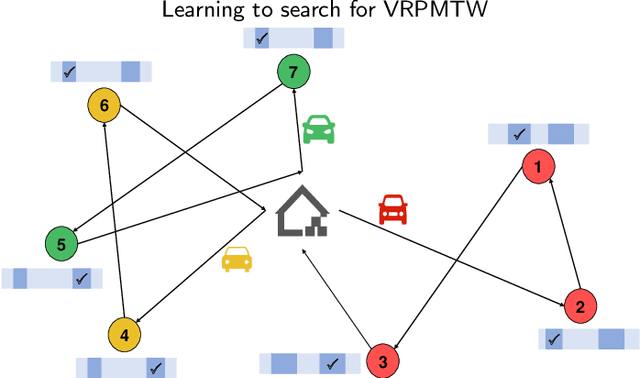

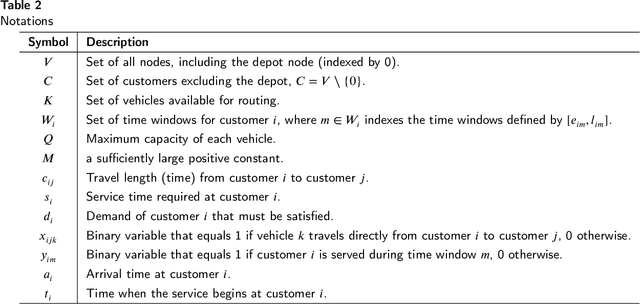

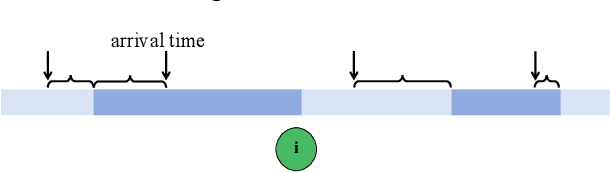

Learning to Search for Vehicle Routing with Multiple Time Windows

May 29, 2025

Abstract: In this study, we propose a reinforcement learning-based adaptive variable neighborhood search (RL-AVNS) method designed for effectively solving the Vehicle Routing Problem with Multiple Time Windows (VRPMTW). Unlike traditional adaptive approaches that rely solely on historical operator performance, our method integrates a reinforcement learning framework to dynamically select neighborhood operators based on real-time solution states and learned experience. We introduce a fitness metric that quantifies customers' temporal flexibility to improve the shaking phase, and employ a transformer-based neural policy network to intelligently guide operator selection during the local search. Extensive computational experiments are conducted on realistic scenarios derived from the replenishment of unmanned vending machines, characterized by multiple clustered replenishment windows. Results demonstrate that RL-AVNS significantly outperforms traditional variable neighborhood search (VNS), adaptive VNS (AVNS), and state-of-the-art learning-based heuristics, achieving substantial improvements in solution quality and computational efficiency across various instance scales and time window complexities. Particularly notable is the algorithm's capability to generalize effectively to problem instances not encountered during training, underscoring its practical utility for complex logistics scenarios.

AirSwarm: Enabling Cost-Effective Multi-UAV Research with COTS drones

Mar 10, 2025

Abstract: Traditional unmanned aerial vehicle (UAV) swarm missions rely heavily on expensive custom-made drones with onboard perception or external positioning systems, limiting their widespread adoption in research and education. To address this issue, we propose AirSwarm. AirSwarm democratizes multi-drone coordination using low-cost commercially available drones such as Tello or Anafi, enabling affordable swarm aerial robotics research and education. Key innovations include a hierarchical control architecture for reliable multi-UAV coordination, an infrastructure-free visual SLAM system for precise localization without external motion capture, and a ROS-based software framework for simplified swarm development. Experiments demonstrate cm-level tracking accuracy, low-latency control, communication failure resistance, formation flight, and trajectory tracking. By reducing financial and technical barriers, AirSwarm makes multi-robot education and research more accessible. The complete instructions and open source code will be available at

Every FLOP Counts: Scaling a 300B Mixture-of-Experts LING LLM without Premium GPUs

Mar 07, 2025

Abstract: In this technical report, we tackle the challenges of training large-scale Mixture of Experts (MoE) models, focusing on overcoming cost inefficiency and resource limitations prevalent in such systems. To address these issues, we present two differently sized MoE large language models (LLMs), namely Ling-Lite and Ling-Plus (referred to as "Bailing" in Chinese, spelled Bǎilíng in Pinyin). Ling-Lite contains 16.8 billion parameters with 2.75 billion activated parameters, while Ling-Plus boasts 290 billion parameters with 28.8 billion activated parameters. Both models exhibit comparable performance to leading industry benchmarks. This report offers actionable insights to improve the efficiency and accessibility of AI development in resource-constrained settings, promoting more scalable and sustainable technologies. Specifically, to reduce training costs for large-scale MoE models, we propose innovative methods for (1) optimization of model architecture and training processes, (2) refinement of training anomaly handling, and (3) enhancement of model evaluation efficiency. Additionally, leveraging high-quality data generated from knowledge graphs, our models demonstrate superior capabilities in tool use compared to other models. Ultimately, our experimental findings demonstrate that a 300B MoE LLM can be effectively trained on lower-performance devices while achieving comparable performance to models of a similar scale, including dense and MoE models. Compared to high-performance devices, utilizing a lower-specification hardware system during the pre-training phase demonstrates significant cost savings, reducing computing costs by approximately 20%. The models can be accessed at https://huggingface.co/inclusionAI.
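As a quick worked example of what the parameter counts above imply, the snippet below computes the fraction of parameters activated per token for each model. Only the four parameter counts come from the abstract; the helper name `activated_fraction` and the dictionary layout are illustrative.

```python
def activated_fraction(total_billion, active_billion):
    """Fraction of a MoE model's parameters that are active for one token."""
    return active_billion / total_billion


# (total parameters, activated parameters), in billions, as stated in the abstract.
MODELS = {
    "Ling-Lite": (16.8, 2.75),
    "Ling-Plus": (290.0, 28.8),
}

if __name__ == "__main__":
    for name, (total, active) in MODELS.items():
        share = activated_fraction(total, active)
        print(f"{name}: {share:.1%} of parameters active per token")
```

Ling-Lite activates roughly 16% of its parameters per token and Ling-Plus roughly 10%, which is the sparsity that keeps per-token compute well below that of a dense model of the same total size.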

ChorusCVR: Chorus Supervision for Entire Space Post-Click Conversion Rate Modeling

Feb 12, 2025

Abstract: Post-click conversion rate (CVR) estimation is a vital task in many recommender systems of revenue businesses, e.g., e-commerce and advertising. From a sample perspective, a typical CVR positive sample goes through a funnel of exposure, then click, then conversion. Because post-event labels are unavailable for un-clicked samples, the CVR learning task commonly uses only clicked samples, rather than all exposed samples as in the click-through rate (CTR) learning task. However, during online inference, CVR and CTR are estimated on the same assumed exposure space, which leads to an inconsistency of sample space between training and inference, i.e., sample selection bias (SSB). To alleviate SSB, previous work designs auxiliary tasks that enable CVR learning on un-clicked training samples, such as CTCVR and counterfactual CVR. Although these alleviate SSB to some extent, none of them pays attention to the discrimination between ambiguous negative samples (un-clicked) and factual negative samples (clicked but un-converted) during modelling, which makes the CVR model lack robustness. To fill this gap, we propose a novel ChorusCVR model to realize debiased CVR learning in the entire space.

An Efficient Scene Coordinate Encoding and Relocalization Method

Dec 09, 2024

Abstract: Scene Coordinate Regression (SCR) is a visual localization technique that utilizes deep neural networks (DNN) to directly regress 2D-3D correspondences for camera pose estimation. However, current SCR methods often face challenges in handling repetitive textures and meaningless areas due to their reliance on implicit triangulation. In this paper, we propose an efficient scene coordinate encoding and relocalization method. Compared with the existing SCR methods, we design a unified architecture for both scene encoding and salient keypoint detection, enabling our system to focus on encoding informative regions, thereby significantly enhancing efficiency. Additionally, we introduce a mechanism that leverages sequential information during both map encoding and relocalization, which strengthens implicit triangulation, particularly in repetitive texture environments. Comprehensive experiments conducted across indoor and outdoor datasets demonstrate that the proposed system outperforms other state-of-the-art (SOTA) SCR methods. Our single-frame relocalization mode improves the recall rate of our baseline by 6.4% and increases the running speed from 56Hz to 90Hz. Furthermore, our sequence-based mode increases the recall rate by 11% while maintaining the original efficiency.

AirSLAM: An Efficient and Illumination-Robust Point-Line Visual SLAM System

Aug 07, 2024

Abstract: In this paper, we present an efficient visual SLAM system designed to tackle both short-term and long-term illumination challenges. Our system adopts a hybrid approach that combines deep learning techniques for feature detection and matching with traditional backend optimization methods. Specifically, we propose a unified convolutional neural network (CNN) that simultaneously extracts keypoints and structural lines. These features are then associated, matched, triangulated, and optimized in a coupled manner. Additionally, we introduce a lightweight relocalization pipeline that reuses the built map, where keypoints, lines, and a structure graph are used to match the query frame with the map. To enhance the applicability of the proposed system to real-world robots, we deploy and accelerate the feature detection and matching networks using C++ and NVIDIA TensorRT. Extensive experiments conducted on various datasets demonstrate that our system outperforms other state-of-the-art visual SLAM systems in illumination-challenging environments. Efficiency evaluations show that our system can run at a rate of 73Hz on a PC and 40Hz on an embedded platform.

Enhancing Column Generation by Reinforcement Learning-Based Hyper-Heuristic for Vehicle Routing and Scheduling Problems

Oct 15, 2023

Abstract: Column generation (CG) is a vital method to solve large-scale problems by dynamically generating variables. It has extensive applications in common combinatorial optimization, such as vehicle routing and scheduling problems, where each iteration step requires solving an NP-hard constrained shortest path problem. Although some heuristic methods for acceleration already exist, they are not versatile enough to solve different problems. In this work, we propose a reinforcement learning-based hyper-heuristic framework, dubbed RLHH, to enhance the performance of CG. RLHH is a selection module embedded in CG to accelerate convergence and get better integer solutions. In each CG iteration, the RL agent selects a low-level heuristic to construct a reduced network only containing the edges with a greater chance of being part of the optimal solution. In addition, we specify RLHH to solve two typical combinatorial optimization problems: Vehicle Routing Problem with Time Windows (VRPTW) and Bus Driver Scheduling Problem (BDSP). The total cost can be reduced by up to 27.9% in VRPTW and 15.4% in BDSP compared to the best lower-level heuristic in our tested scenarios, within equivalent or even less computational time. The proposed RLHH is the first RL-based CG method that outperforms traditional approaches in terms of solution quality, which can promote the application of CG in combinatorial optimization.

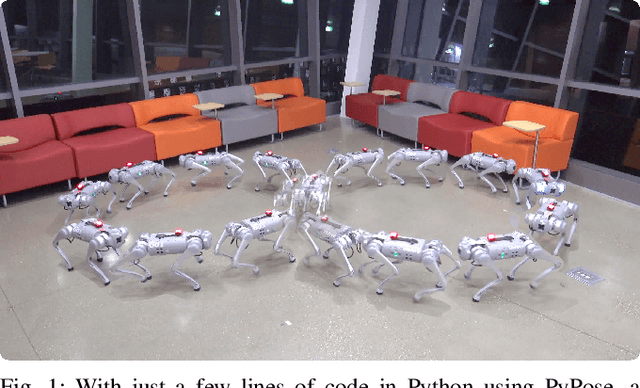

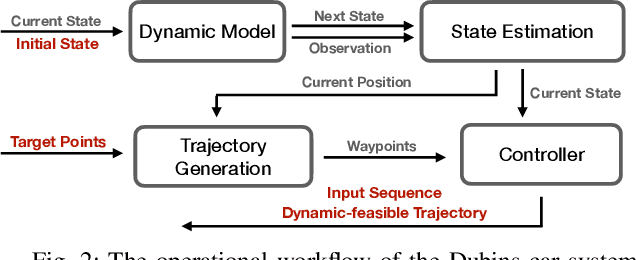

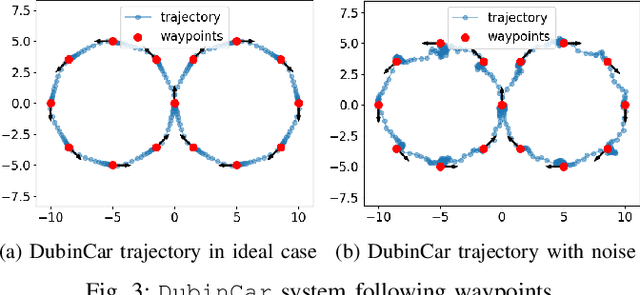

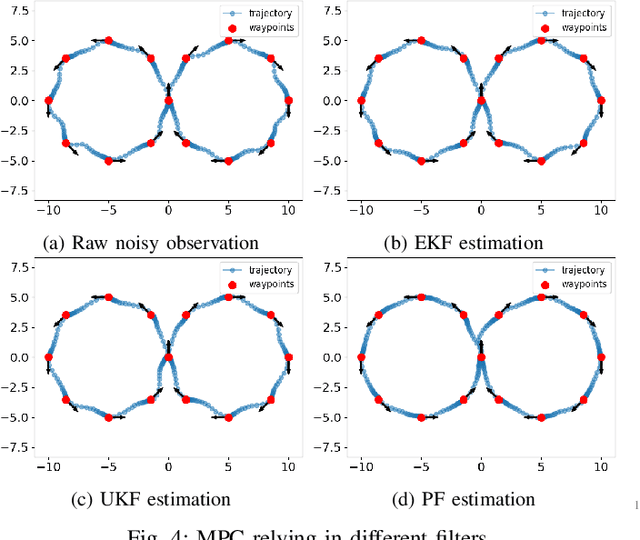

PyPose v0.6: The Imperative Programming Interface for Robotics

Sep 22, 2023

Abstract: PyPose is an open-source library for robot learning. It combines a learning-based approach with physics-based optimization, which enables seamless end-to-end robot learning. It has been used in many tasks due to its meticulously designed application programming interface (API) and efficient implementation. Since its initial launch in early 2022, PyPose has experienced significant enhancements, incorporating a wide variety of new features into its platform. To satisfy the growing demand for understanding and utilizing the library and to flatten the learning curve for new users, we present the fundamental design principle of the imperative programming interface, and showcase the flexible usage of diverse functionalities and modules using an extremely simple Dubins car example. We also demonstrate that PyPose can be easily used to navigate a real quadruped robot with a few lines of code.
