Chang Meng

Who You Are Matters: Bridging Topics and Social Roles via LLM-Enhanced Logical Recommendation

May 16, 2025

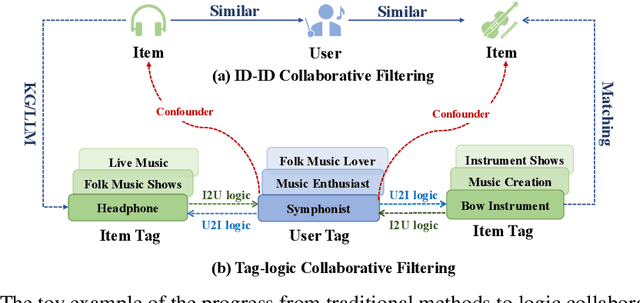

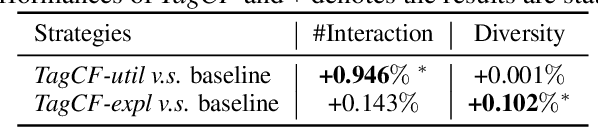

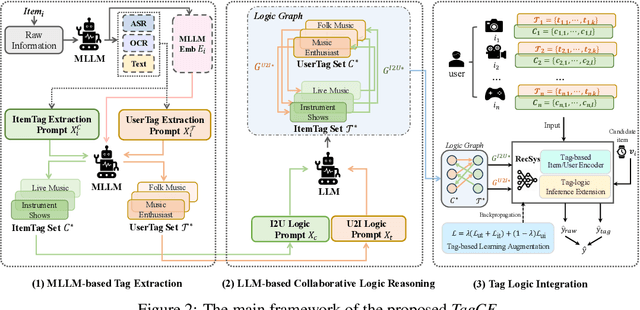

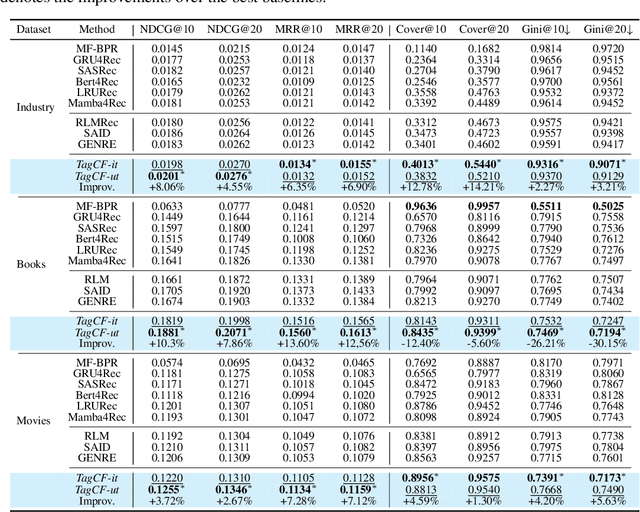

Abstract: Recommender systems filter contents/items valuable to users by inferring preferences from user features and historical behaviors. Mainstream approaches follow the learning-to-rank paradigm, which focuses on discovering and modeling item topics (e.g., categories) and capturing user preferences on these topics based on historical interactions. However, this paradigm often neglects the modeling of user characteristics and their social roles, which are logical confounders influencing the correlated interest and user preference transition. To bridge this gap, we introduce the user role identification task and the behavioral logic modeling task, which aim to explicitly model user roles and learn the logical relations between item topics and user social roles. We show that it is possible to explicitly solve these tasks through an efficient integration framework of Large Language Model (LLM) and recommendation systems, for which we propose TagCF. On the one hand, the exploitation of the LLM's world knowledge and logic inference ability produces a virtual logic graph that reveals dynamic and expressive knowledge of users, augmenting the recommendation performance. On the other hand, the user role aligns the user behavioral logic with the observed user feedback, refining our understanding of user behaviors. We also show that the extracted user-item logic graph is empirically general knowledge that can benefit a wide range of recommendation tasks, and we conduct experiments on industrial and several public datasets as verification.

VLM as Policy: Common-Law Content Moderation Framework for Short Video Platform

Apr 21, 2025

Abstract: Exponentially growing short video platforms (SVPs) face significant challenges in moderating content detrimental to users' mental health, particularly for minors. The dissemination of such content on SVPs can lead to catastrophic societal consequences. Although substantial efforts have been dedicated to moderating such content, existing methods suffer from critical limitations: (1) Manual review is prone to human bias and incurs high operational costs. (2) Automated methods, though efficient, lack nuanced content understanding, resulting in lower accuracy. (3) Industrial moderation regulations struggle to adapt to rapidly evolving trends due to long update cycles. In this paper, we annotate the first SVP content moderation benchmark with authentic user/reviewer feedback to fill the absence of a benchmark in this field. Then we evaluate various methods on the benchmark to verify the existence of the aforementioned limitations. We further propose our common-law content moderation framework named KuaiMod to address these challenges. KuaiMod consists of three components: training data construction, offline adaptation, and online deployment & refinement. Leveraging a large vision language model (VLM) and Chain-of-Thought (CoT) reasoning, KuaiMod adequately models video toxicity based on sparse user feedback and fosters dynamic moderation policy with rapid update speed and high accuracy. Offline experiments and a large-scale online A/B test demonstrate the superiority of KuaiMod: KuaiMod achieves the best moderation performance on our benchmark. The deployment of KuaiMod reduces the user reporting rate by 20%, and its application in video recommendation increases both Daily Active User (DAU) and APP Usage Time (AUT) on several Kuaishou scenarios. We have open-sourced our benchmark at https://kuaimod.github.io.

Combinatorial Optimization Perspective based Framework for Multi-behavior Recommendation

Feb 04, 2025

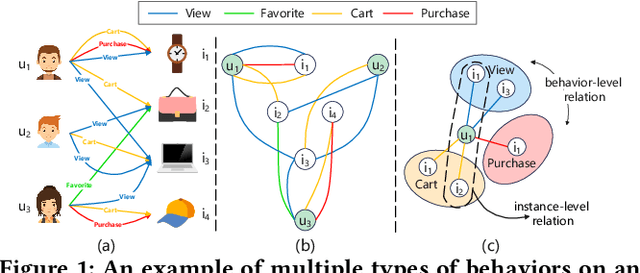

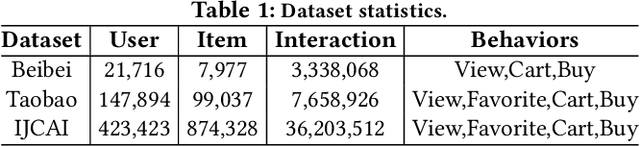

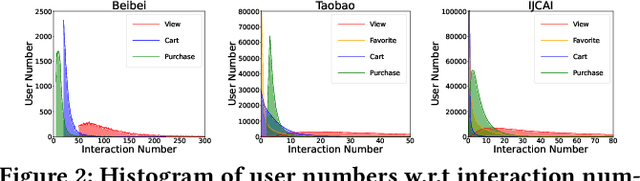

Abstract: In real-world recommendation scenarios, users engage with items through various types of behaviors. Leveraging diversified user behavior information for learning can enhance the recommendation of target behaviors (e.g., buy), as demonstrated by recent multi-behavior methods. The mainstream multi-behavior recommendation framework consists of two steps: fusion and prediction. Recent approaches utilize graph neural networks for multi-behavior fusion and employ multi-task learning paradigms for joint optimization in the prediction step, achieving significant success. However, these methods have limited perspectives on multi-behavior fusion, which leads to inaccurate capture of user behavior patterns in the fusion step. Moreover, when using multi-task learning for prediction, the relationship between the target task and auxiliary tasks is not sufficiently coordinated, resulting in negative information transfer. To address these problems, we propose a novel multi-behavior recommendation framework based on the combinatorial optimization perspective, named COPF. Specifically, we treat multi-behavior fusion as a combinatorial optimization problem, imposing different constraints at various stages of each behavior to restrict the solution space, thus significantly enhancing fusion efficiency (COGCN). In the prediction step, we improve both forward and backward propagation during the generation and aggregation of multiple experts to mitigate negative transfer caused by differences in both feature and label distributions (DFME). Comprehensive experiments on three real-world datasets indicate the superiority of COPF. Further analyses also validate the effectiveness of the COGCN and DFME modules. Our code is available at https://github.com/1918190/COPF.

Coarse-to-fine Dynamic Uplift Modeling for Real-time Video Recommendation

Oct 22, 2024

Abstract: With the rise of short video platforms, video recommendation technology faces more complex challenges. Currently, there are multiple non-personalized modules in the video recommendation pipeline that urgently need personalized modeling techniques for improvement. Inspired by the success of uplift modeling in online marketing, we attempt to implement uplift modeling in the video recommendation scenario. However, we face two main challenges: 1) design and utilization of treatments, and 2) capture of users' real-time interest. To address them, we use adjustment of the distribution of videos with varying durations as the treatment and propose Coarse-to-fine Dynamic Uplift Modeling (CDUM) for real-time video recommendation. CDUM consists of two modules, CPM and FIC. The former module fully utilizes the offline features of users to model their long-term preferences, while the latter module leverages online real-time contextual features and request-level candidates to model users' real-time interests. These two modules work together to dynamically identify and target specific user groups and apply treatments effectively. Further, we conduct comprehensive experiments on offline public and industrial datasets and an online A/B test, demonstrating the superiority and effectiveness of our proposed CDUM. CDUM has ultimately been fully deployed on the Kuaishou platform, serving hundreds of millions of users every day. The source code will be provided after the paper is accepted.
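As a rough illustration of the uplift idea the abstract builds on, the sketch below estimates uplift per user group as the difference in mean outcome between treated and control users. It is not CDUM itself: the function name, the record layout, the group labels, and the numbers are all hypothetical, and the abstract does not describe how CPM or FIC compute their estimates.

```python
from collections import defaultdict


def group_uplift(records):
    """Estimate per-group uplift as mean(outcome | treated) - mean(outcome | control).

    `records` is an iterable of (group, treated, outcome) tuples. The layout
    is a hypothetical one chosen for illustration, not taken from the paper.
    """
    # Per group: [treated_sum, treated_count, control_sum, control_count]
    sums = defaultdict(lambda: [0.0, 0, 0.0, 0])
    for group, treated, outcome in records:
        stats = sums[group]
        if treated:
            stats[0] += outcome
            stats[1] += 1
        else:
            stats[2] += outcome
            stats[3] += 1

    uplift = {}
    for group, (t_sum, t_n, c_sum, c_n) in sums.items():
        if t_n and c_n:  # both arms are needed for a comparison
            uplift[group] = t_sum / t_n - c_sum / c_n
    return uplift


# Made-up outcomes (e.g., usage time) under a treatment such as a changed
# mix of video durations.
records = [
    ("heavy_users", True, 120.0),
    ("heavy_users", True, 100.0),
    ("heavy_users", False, 90.0),
    ("light_users", True, 20.0),
    ("light_users", False, 30.0),
]
uplift = group_uplift(records)
```

With these made-up numbers the treatment helps one group and hurts the other, which is the kind of difference that motivates targeting treatments at specific user groups.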

Time-aligned Exposure-enhanced Model for Click-Through Rate Prediction

Aug 19, 2023

Abstract: Click-Through Rate (CTR) prediction, crucial in applications like recommender systems and online advertising, involves ranking items based on the likelihood of user clicks. User behavior sequence modeling, which extracts users' latent interests from their historical behavior sequences, has driven marked progress in CTR prediction. Recent research explores using implicit feedback sequences, like unclicked records, to extract diverse user interests. However, these methods encounter key challenges: 1) temporal misalignment due to disparate sequence time ranges and 2) the lack of fine-grained interaction among feedback sequences. To address these challenges, we propose a novel framework called TEM4CTR, which ensures temporal alignment among sequences while leveraging auxiliary feedback information to enhance click behavior at the item level through a representation projection mechanism. Moreover, this projection-based information transfer module can effectively alleviate the negative impact of irrelevant or even potentially detrimental components of the auxiliary feedback information on the learning process of click behavior. Comprehensive experiments on public and industrial datasets confirm the superiority and effectiveness of TEM4CTR, showcasing the significance of temporal alignment in multi-feedback modeling.
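The temporal misalignment problem can be made concrete with a small sketch: two feedback sequences cover different time ranges, and one simple remedy is to keep only the events inside the window that every sequence covers. This is an assumption chosen for illustration, not TEM4CTR's alignment mechanism, which the abstract does not specify; the function name and data layout are hypothetical.

```python
def align_to_common_window(sequences):
    """Keep only events inside the time span covered by every sequence.

    `sequences` maps a feedback type to a list of (timestamp, item_id)
    pairs sorted by timestamp. Hypothetical layout for illustration.
    """
    if not sequences or any(not seq for seq in sequences.values()):
        # No shared window exists if any sequence is empty.
        return {name: [] for name in sequences}

    start = max(seq[0][0] for seq in sequences.values())   # latest first event
    end = min(seq[-1][0] for seq in sequences.values())    # earliest last event
    return {
        name: [(t, item) for t, item in seq if start <= t <= end]
        for name, seq in sequences.items()
    }


aligned = align_to_common_window({
    "click": [(1, "a"), (5, "b"), (9, "c")],
    "unclick": [(4, "x"), (6, "y"), (7, "z")],
})
```

Here the click sequence spans timestamps 1 to 9 and the unclick sequence spans 4 to 7, so only events between 4 and 7 survive in both.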

Parallel Knowledge Enhancement based Framework for Multi-behavior Recommendation

Aug 09, 2023

Abstract: Multi-behavior recommendation algorithms aim to leverage the multiplex interactions between users and items to learn users' latent preferences. Recent multi-behavior recommendation frameworks contain two steps: fusion and prediction. In the fusion step, advanced neural networks are used to model the hierarchical correlations between user behaviors. In the prediction step, multiple signals are utilized to jointly optimize the model with a multi-task learning (MTL) paradigm. However, recent approaches have not addressed the issue caused by imbalanced data distribution in the fusion step, resulting in the learned relationships being dominated by high-frequency behaviors. In the prediction step, the existing methods use a gate mechanism to directly aggregate expert information generated by coupling input, leading to negative information transfer. To tackle these issues, we propose a Parallel Knowledge Enhancement Framework (PKEF) for multi-behavior recommendation. Specifically, we enhance the hierarchical information propagation in the fusion step using parallel knowledge (PKF). Meanwhile, in the prediction step, we decouple the representations to generate expert information and introduce a projection mechanism during aggregation to eliminate gradient conflicts and alleviate negative transfer (PME). We conduct comprehensive experiments on three real-world datasets to validate the effectiveness of our model. The results further demonstrate the rationality and effectiveness of the designed PKF and PME modules. The source code and datasets are available at https://github.com/MC-CV/PKEF.
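To show what removing a conflicting component by projection can look like, the sketch below uses a PCGrad-style gradient projection: when an auxiliary vector points against the target vector (negative dot product), its component along the target is subtracted. This technique is swapped in purely for illustration. The abstract does not specify how PME's projection works, and the function name and vectors are hypothetical.

```python
def project_out_conflict(aux, target):
    """Remove the part of `aux` that opposes `target` (PCGrad-style projection).

    If the two vectors do not conflict (dot product >= 0), `aux` is
    returned unchanged. This is an illustrative stand-in, not the PME module.
    """
    dot = sum(a * t for a, t in zip(aux, target))
    norm_sq = sum(t * t for t in target)
    if dot >= 0 or norm_sq == 0:
        return list(aux)
    scale = dot / norm_sq
    # Subtract the projection of aux onto target; the result is orthogonal to target.
    return [a - scale * t for a, t in zip(aux, target)]


conflicting = project_out_conflict([1.0, -1.0], [0.0, 1.0])
compatible = project_out_conflict([1.0, 1.0], [0.0, 1.0])
```

After projection, the conflicting vector no longer has any component against the target direction, while the compatible vector passes through untouched.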

Compressed Interaction Graph based Framework for Multi-behavior Recommendation

Mar 04, 2023

Abstract: Multiple types of user behavior data (e.g., clicking, adding to cart, and purchasing) are recorded in most real-world recommendation scenarios, which can help to learn users' multi-faceted preferences. However, it is challenging to explore multi-behavior data due to the unbalanced data distribution and sparse target behavior, which lead to inadequate modeling of high-order relations when treating multi-behavior data "as features" and to gradient conflict in multi-task learning when treating multi-behavior data "as labels". In this paper, we propose CIGF, a Compressed Interaction Graph based Framework, to overcome the above limitations. Specifically, we design a novel Compressed Interaction Graph Convolution Network (CIGCN) to model instance-level high-order relations explicitly. To alleviate the potential gradient conflict when treating multi-behavior data "as labels", we propose a Multi-Expert with Separate Input (MESI) network with separate input on top of CIGCN for multi-task learning. Comprehensive experiments on three large-scale real-world datasets demonstrate the superiority of CIGF. Ablation studies and in-depth analysis further validate the effectiveness of our proposed model in capturing high-order relations and alleviating gradient conflict. The source code and datasets are available at https://github.com/MC-CV/CIGF.

Coarse-to-Fine Knowledge-Enhanced Multi-Interest Learning Framework for Multi-Behavior Recommendation

Aug 05, 2022

Abstract: Multiple types of behaviors (e.g., clicking, adding to cart, and purchasing) exist widely in most real-world recommendation scenarios and are beneficial for learning users' multi-faceted preferences. As dependencies are explicitly exhibited by the multiple types of behaviors, effectively modeling complex behavior dependencies is crucial for multi-behavior prediction. The state-of-the-art multi-behavior models learn behavior dependencies indiscriminately with all historical interactions as input. However, different behaviors may reflect different aspects of user preference, which means that some irrelevant interactions may act as noise for the target behavior to be predicted. To address the aforementioned limitations, we introduce multi-interest learning to multi-behavior recommendation. More specifically, we propose a novel Coarse-to-fine Knowledge-enhanced Multi-interest Learning (CKML) framework to learn shared and behavior-specific interests for different behaviors. CKML introduces two advanced modules, namely Coarse-grained Interest Extracting (CIE) and Fine-grained Behavioral Correlation (FBC), which work jointly to capture fine-grained behavioral dependencies. CIE uses knowledge-aware information to extract initial representations of each interest. FBC incorporates a dynamic routing scheme to further assign each behavior among interests. Additionally, we use the self-attention mechanism to correlate different behavioral information at the interest level. Empirical results on three real-world datasets verify the effectiveness and efficiency of our model in exploiting multi-behavior data. Further experiments demonstrate the effectiveness of each module and the robustness and superiority of the shared and specific modelling paradigm for multi-behavior data.

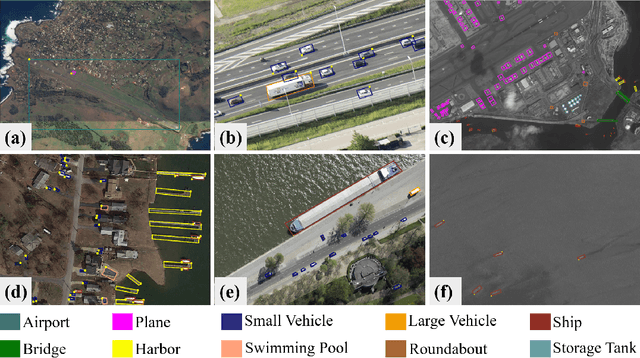

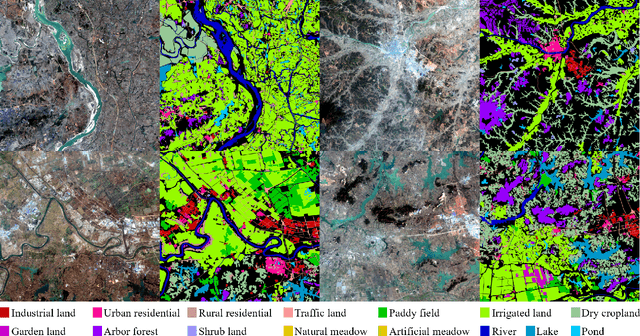

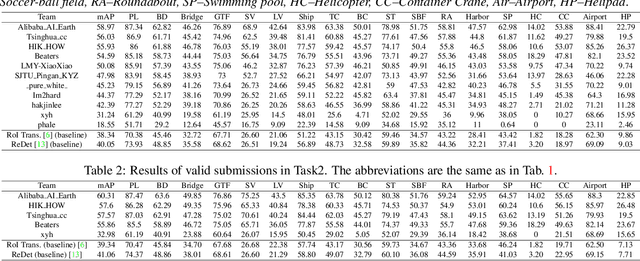

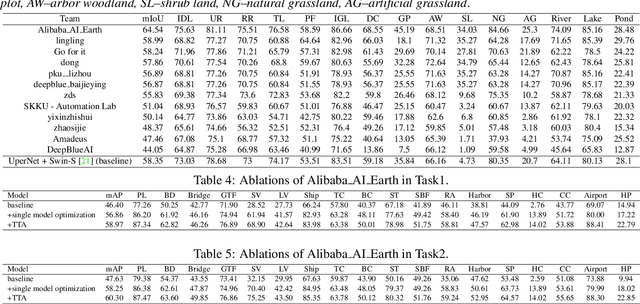

LUAI Challenge 2021 on Learning to Understand Aerial Images

Aug 30, 2021

Abstract: This report summarizes the results of the Learning to Understand Aerial Images (LUAI) 2021 challenge held at ICCV 2021, which focuses on object detection and semantic segmentation in aerial images. Using the DOTA-v2.0 and GID-15 datasets, this challenge proposes three tasks: oriented object detection, horizontal object detection, and semantic segmentation of common categories in aerial images. This challenge received a total of 146 registrations on the three tasks. Through the challenge, we hope to draw attention from a wide range of communities and call for more efforts on the problems of learning to understand aerial images.
