Zixuan Zhang

Shanxi Normal University, Taiyuan, China

Noise-Aware and Dynamically Adaptive Federated Defense Framework for SAR Image Target Recognition

Dec 31, 2025

Abstract:As a critical application of computational intelligence in remote sensing, deep learning-based synthetic aperture radar (SAR) image target recognition facilitates intelligent perception but typically relies on centralized training, where multi-source SAR data are uploaded to a single server, raising privacy and security concerns. Federated learning (FL) provides an emerging computational intelligence paradigm for SAR image target recognition, enabling cross-site collaboration while preserving local data privacy. However, FL confronts critical security risks, where malicious clients can exploit SAR's multiplicative speckle noise to conceal backdoor triggers, severely challenging the robustness of the computational intelligence model. To address this challenge, we propose NADAFD, a noise-aware and dynamically adaptive federated defense framework that integrates frequency-domain, spatial-domain, and client-behavior analyses to counter SAR-specific backdoor threats. Specifically, we introduce a frequency-domain collaborative inversion mechanism to expose cross-client spectral inconsistencies indicative of hidden backdoor triggers. We further design a noise-aware adversarial training strategy that embeds $\Gamma$-distributed speckle characteristics into mask-guided adversarial sample generation to enhance robustness against both backdoor attacks and SAR speckle noise. In addition, we present a dynamic health assessment module that tracks client update behaviors across training rounds and adaptively adjusts aggregation weights to mitigate evolving malicious contributions. Experiments on MSTAR and OpenSARShip datasets demonstrate that NADAFD achieves higher accuracy on clean test samples and a lower backdoor attack success rate on triggered inputs than existing federated backdoor defenses for SAR target recognition.
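The multiplicative, Gamma-distributed speckle mentioned in the abstract can be illustrated with a minimal sketch. It assumes the common unit-mean model in which each intensity pixel is multiplied by a Gamma(L, 1/L) draw for an L-look image. The function name `add_speckle` and the parameter `looks` are hypothetical and not taken from the paper, and this is not the NADAFD adversarial sample generator.

```python
import random


def add_speckle(intensity, looks=4, rng=None):
    """Multiply each pixel of a 2-D intensity image by unit-mean Gamma speckle.

    Assumption: speckle follows Gamma(shape=looks, scale=1/looks), which has
    mean 1 and variance 1/looks. `intensity` is a list of rows of floats.
    """
    rng = rng or random.Random()
    return [
        [px * rng.gammavariate(looks, 1.0 / looks) for px in row]
        for row in intensity
    ]


# Usage: a flat 100x100 image with intensity 2.0, corrupted by 4-look speckle.
rng = random.Random(0)
clean = [[2.0] * 100 for _ in range(100)]
noisy = add_speckle(clean, looks=4, rng=rng)
```

Because the noise multiplies the signal rather than adding to it, brighter regions fluctuate more in absolute terms, which is consistent with the abstract's point that triggers can be concealed within speckle.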

LLMs Can Generate a Better Answer by Aggregating Their Own Responses

Mar 06, 2025

Abstract:Large Language Models (LLMs) have shown remarkable capabilities across tasks, yet they often require additional prompting techniques when facing complex problems. While approaches like self-correction and response selection have emerged as popular solutions, recent studies have shown these methods perform poorly when relying on the LLM itself to provide feedback or selection criteria. We argue this limitation stems from the fact that common LLM post-training procedures lack explicit supervision for discriminative judgment tasks. In this paper, we propose Generative Self-Aggregation (GSA), a novel prompting method that improves answer quality without requiring the model's discriminative capabilities. GSA first samples multiple diverse responses from the LLM, then aggregates them to obtain an improved solution. Unlike previous approaches, our method does not require the LLM to correct errors or compare response quality; instead, it leverages the model's generative abilities to synthesize a new response based on the context of multiple samples. While GSA shares similarities with the self-consistency (SC) approach for response aggregation, SC requires specific verifiable tokens to enable majority voting. In contrast, our approach is more general and can be applied to open-ended tasks. Empirical evaluation demonstrates that GSA effectively improves response quality across various tasks, including mathematical reasoning, knowledge-based problems, and open-ended generation tasks such as code synthesis and conversational responses.
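The two-step procedure described in this abstract (sample several responses, then generate a new answer conditioned on them) might be sketched as follows. The `generate` callable stands in for any LLM call and is an assumption, as is the wording of the aggregation prompt; neither is taken from the paper.

```python
from typing import Callable, List


def generative_self_aggregation(
    question: str,
    generate: Callable[[str], str],
    num_samples: int = 3,
) -> str:
    """Sample several responses, then ask the model to synthesize a new one.

    `generate` is a hypothetical prompt -> text function (e.g. a sampled LLM
    call). No response is scored, ranked, or corrected at any point.
    """
    # Step 1: draw multiple candidate responses to the same question.
    candidates: List[str] = [generate(question) for _ in range(num_samples)]

    # Step 2: place all candidates in context and request a fresh answer.
    numbered = "\n\n".join(
        f"Response {i + 1}:\n{c}" for i, c in enumerate(candidates)
    )
    prompt = (
        f"Question:\n{question}\n\n{numbered}\n\n"
        "Using the responses above as context, write a new, improved answer "
        "to the question."
    )
    return generate(prompt)
```

Unlike majority voting, this sketch never needs to extract a comparable final token from each candidate, so it also applies to open-ended outputs such as code or conversation.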

A Minimalist Example of Edge-of-Stability and Progressive Sharpening

Mar 04, 2025

Abstract:Recent advances in deep learning optimization have unveiled two intriguing phenomena under large learning rates: Edge of Stability (EoS) and Progressive Sharpening (PS), challenging classical Gradient Descent (GD) analyses. Current research approaches, using either generalist frameworks or minimalist examples, face significant limitations in explaining these phenomena. This paper advances the minimalist approach by introducing a two-layer network with a two-dimensional input, where one dimension is relevant to the response and the other is irrelevant. Through this model, we rigorously prove the existence of progressive sharpening and self-stabilization under large learning rates, and establish non-asymptotic analysis of the training dynamics and sharpness along the entire GD trajectory. Besides, we connect our minimalist example to existing works by reconciling the existence of a well-behaved "stable set" between minimalist and generalist analyses, and extending the analysis of Gradient Flow Solution sharpness to our two-dimensional input scenario. These findings provide new insights into the EoS phenomenon from both parameter and input data distribution perspectives, potentially informing more effective optimization strategies in deep learning practice.

COSMOS: A Hybrid Adaptive Optimizer for Memory-Efficient Training of LLMs

Feb 26, 2025

Abstract:Large Language Models (LLMs) have demonstrated remarkable success across various domains, yet their optimization remains a significant challenge due to the complex and high-dimensional loss landscapes they inhabit. While adaptive optimizers such as AdamW are widely used, they suffer from critical limitations, including an inability to capture interdependencies between coordinates and high memory consumption. Subsequent research, exemplified by SOAP, attempts to better capture coordinate interdependence but incurs greater memory overhead, limiting scalability for massive LLMs. An alternative approach aims to reduce memory consumption through low-dimensional projection, but this leads to substantial approximation errors, resulting in less effective optimization (e.g., in terms of per-token efficiency). In this paper, we propose COSMOS, a novel hybrid optimizer that leverages the varying importance of eigensubspaces in the gradient matrix to achieve memory efficiency without compromising optimization performance. The design of COSMOS is motivated by our empirical insights and practical considerations. Specifically, COSMOS applies SOAP to the leading eigensubspace, which captures the primary optimization dynamics, and MUON to the remaining eigensubspace, which is less critical but computationally expensive to handle with SOAP. This hybrid strategy significantly reduces memory consumption while maintaining robust optimization performance, making it particularly suitable for massive LLMs. Numerical experiments on various datasets and transformer architectures are provided to demonstrate the effectiveness of COSMOS. Our code is available at https://github.com/lliu606/COSMOS.
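To make the idea of a leading versus remaining eigensubspace concrete, the sketch below splits a gradient matrix into two orthogonal parts. It is only an illustration of the decomposition: it assumes the subspace comes from the top eigenvectors of $G^\top G$, which the abstract does not specify, and it does not implement SOAP, MUON, or the COSMOS update itself. The function name and the `rank` parameter are hypothetical.

```python
import numpy as np


def split_by_leading_eigensubspace(grad, rank):
    """Split `grad` (m x n) into a leading-subspace part and a remainder.

    Assumption: the leading eigensubspace is spanned by the top-`rank`
    eigenvectors of grad.T @ grad. Returns (leading, remainder) with
    leading + remainder == grad.
    """
    # eigh handles symmetric matrices and returns eigenvalues in ascending order.
    _, eigvecs = np.linalg.eigh(grad.T @ grad)
    top = eigvecs[:, -rank:]            # n x rank, orthonormal columns
    leading = grad @ top @ top.T        # projection onto the leading subspace
    remainder = grad - leading          # component in the orthogonal complement
    return leading, remainder


# Usage on a random 6 x 4 "gradient" with a rank-2 leading subspace.
rng = np.random.default_rng(0)
G = rng.standard_normal((6, 4))
G_lead, G_rest = split_by_leading_eigensubspace(G, rank=2)
```

In a hybrid scheme of the kind the abstract describes, a memory-heavy method would only need to keep state for the small `rank`-dimensional part, while a cheaper method handles the remainder.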

IHEval: Evaluating Language Models on Following the Instruction Hierarchy

Feb 12, 2025

Abstract:The instruction hierarchy, which establishes a priority order from system messages to user messages, conversation history, and tool outputs, is essential for ensuring consistent and safe behavior in language models (LMs). Despite its importance, this topic receives limited attention, and there is a lack of comprehensive benchmarks for evaluating models' ability to follow the instruction hierarchy. We bridge this gap by introducing IHEval, a novel benchmark comprising 3,538 examples across nine tasks, covering cases where instructions in different priorities either align or conflict. Our evaluation of popular LMs highlights their struggle to recognize instruction priorities. All evaluated models experience a sharp performance decline when facing conflicting instructions, compared to their original instruction-following performance. Moreover, the most competitive open-source model only achieves 48% accuracy in resolving such conflicts. Our results underscore the need for targeted optimization in the future development of LMs.

Optimistic ε-Greedy Exploration for Cooperative Multi-Agent Reinforcement Learning

Feb 05, 2025

Abstract:The Centralized Training with Decentralized Execution (CTDE) paradigm is widely used in cooperative multi-agent reinforcement learning. However, due to the representational limitations of traditional monotonic value decomposition methods, algorithms can underestimate optimal actions, leading policies to converge to suboptimal solutions. To address this challenge, we propose Optimistic $\epsilon$-Greedy Exploration, focusing on enhancing exploration to correct value estimations. Our analysis indicates that the underestimation arises from insufficient sampling of optimal actions during exploration. We introduce an optimistic updating network to identify optimal actions and sample actions from its distribution with a probability of $\epsilon$ during exploration, increasing the selection frequency of optimal actions. Experimental results in various environments reveal that Optimistic $\epsilon$-Greedy Exploration effectively prevents the algorithm from converging to suboptimal solutions and significantly improves its performance compared to other algorithms.
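The exploration rule in this abstract can be sketched for a single agent as follows. The sketch assumes the optimistic network outputs one logit per action and that its "distribution" is a softmax over those logits; both are assumptions, and the names `q_values` and `optimistic_logits` are hypothetical. Training of the optimistic network is not shown.

```python
import math
import random


def optimistic_epsilon_greedy(q_values, optimistic_logits, epsilon, rng):
    """Pick an action index for one agent.

    With probability `epsilon`, sample from a softmax over the (assumed)
    optimistic network outputs; otherwise act greedily on `q_values`.
    """
    if rng.random() < epsilon:
        # Numerically stable softmax weights; choices() normalizes them.
        m = max(optimistic_logits)
        weights = [math.exp(x - m) for x in optimistic_logits]
        return rng.choices(range(len(weights)), weights=weights, k=1)[0]
    # Exploitation: standard greedy choice on the agent's own value estimates.
    return max(range(len(q_values)), key=q_values.__getitem__)


# Usage: three actions, 20% exploration guided by the optimistic estimates.
rng = random.Random(0)
action = optimistic_epsilon_greedy(
    q_values=[0.1, 0.5, 0.2],
    optimistic_logits=[2.0, 0.0, 1.0],
    epsilon=0.2,
    rng=rng,
)
```

The only difference from standard $\epsilon$-greedy is the exploration branch: instead of a uniform random action, exploration is biased toward actions the optimistic estimate considers promising.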

Double Distillation Network for Multi-Agent Reinforcement Learning

Feb 05, 2025

Abstract:Multi-agent reinforcement learning typically employs a centralized training-decentralized execution (CTDE) framework to alleviate non-stationarity in the environment. However, partial observability during execution may cause agents to accumulate gap errors, impairing the training of effective collaborative policies. To overcome this challenge, we introduce the Double Distillation Network (DDN), which incorporates two distillation modules aimed at enhancing robust coordination and facilitating the collaboration process under constrained information. The external distillation module uses a global guiding network and a local policy network, employing distillation to reconcile the gap between global training and local execution. In addition, the internal distillation module introduces intrinsic rewards, drawn from state information, to enhance the exploration capabilities of agents. Extensive experiments demonstrate that DDN significantly improves performance across multiple scenarios.

Robust Reinforcement Learning from Corrupted Human Feedback

Jun 21, 2024

Abstract:Reinforcement learning from human feedback (RLHF) provides a principled framework for aligning AI systems with human preference data. For various reasons, e.g., personal bias, context ambiguity, or lack of training, human annotators may give incorrect or inconsistent preference labels. To tackle this challenge, we propose a robust RLHF approach, $R^3M$, which models the potentially corrupted preference labels as sparse outliers. Accordingly, we formulate robust reward learning as an $\ell_1$-regularized maximum likelihood estimation problem. Computationally, we develop an efficient alternating optimization algorithm, which incurs only negligible computational overhead compared with the standard RLHF approach. Theoretically, we prove that under proper regularity conditions, $R^3M$ can consistently learn the underlying reward and identify outliers, provided that the number of outlier labels scales sublinearly with the preference sample size. Furthermore, we remark that $R^3M$ is versatile and can be extended to various preference optimization methods, including direct preference optimization (DPO). Our experiments on robotic control and natural language generation with large language models (LLMs) show that $R^3M$ improves robustness of the reward against several types of perturbations to the preference data.
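One way an $\ell_1$-regularized maximum likelihood problem with sparse outliers could be written is sketched below. It assumes a Bradley-Terry preference model with a per-sample offset $\delta_i$; the abstract does not state the model, so the symbols $r_\theta$, $\delta_i$, $\lambda$, $y_i^{w}$, and $y_i^{l}$ are illustrative and may differ from the paper's notation.

```latex
% Assumed setup: n preference pairs, y_i^w preferred over y_i^l given prompt x_i.
% \sigma is the logistic function; \delta_i absorbs a possibly corrupted label.
\min_{\theta,\ \delta \in \mathbb{R}^n}\;
  -\frac{1}{n} \sum_{i=1}^{n}
    \log \sigma\!\bigl( r_\theta(x_i, y_i^{w}) - r_\theta(x_i, y_i^{l}) + \delta_i \bigr)
  \;+\; \lambda \,\lVert \delta \rVert_1
```

Under this reading, the $\ell_1$ penalty drives most $\delta_i$ to exactly zero, so only a few samples are flagged as outliers, and an alternating scheme would update $\theta$ with $\delta$ fixed and then $\delta$ with $\theta$ fixed.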

Towards Better Generalization in Open-Domain Question Answering by Mitigating Context Memorization

Apr 02, 2024

Abstract:Open-domain Question Answering (OpenQA) aims at answering factual questions with an external large-scale knowledge corpus. However, real-world knowledge is not static; it updates and evolves continually. Such a dynamic characteristic of knowledge poses a vital challenge for these models, as the trained models need to constantly adapt to the latest information to make sure that the answers remain accurate. In addition, it is still unclear how well an OpenQA model can transfer to completely new knowledge domains. In this paper, we investigate the generalization performance of a retrieval-augmented QA model in two specific scenarios: 1) adapting to updated versions of the same knowledge corpus; 2) switching to completely different knowledge domains. We observe that the generalization challenges of OpenQA models stem from the reader's over-reliance on memorizing the knowledge from the external corpus, which hinders the model from generalizing to a new knowledge corpus. We introduce Corpus-Invariant Tuning (CIT), a simple but effective training strategy, to mitigate the knowledge over-memorization by controlling the likelihood of retrieved contexts during training. Extensive experimental results on multiple OpenQA benchmarks show that CIT achieves significantly better generalizability without compromising the model's performance in its original corpus and domain.

EVEDIT: Event-based Knowledge Editing with Deductive Editing Boundaries

Feb 17, 2024

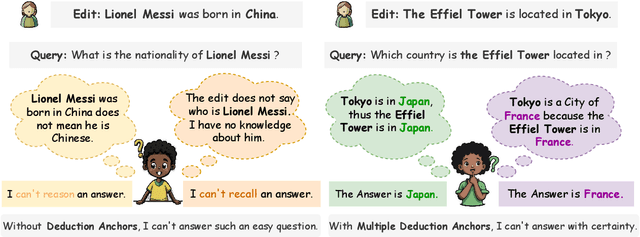

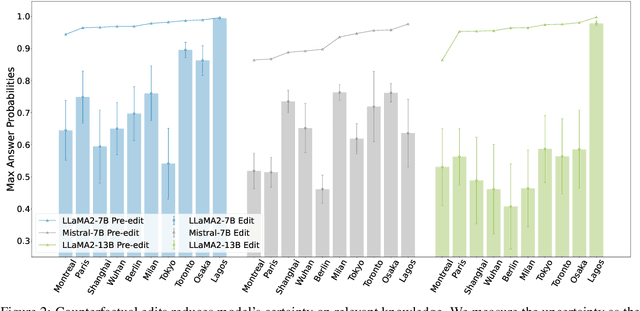

Abstract:The dynamic nature of real-world information necessitates efficient knowledge editing (KE) in large language models (LLMs) for knowledge updating. However, current KE approaches, which typically operate on (subject, relation, object) triples, ignore contextual information and the relations among different pieces of knowledge. Such editing methods could thus encounter an uncertain editing boundary, leaving a lot of relevant knowledge in ambiguity: queries that could be answered pre-edit cannot be reliably answered afterward. In this work, we analyze this issue by introducing a theoretical framework for KE that highlights an overlooked set of knowledge that remains unchanged and aids in knowledge deduction during editing, which we name the deduction anchor. We further address this issue by proposing a novel task of event-based knowledge editing that pairs facts with event descriptions. This task offers not only a closer simulation of real-world editing scenarios but also a more logically sound setting, implicitly defining the deduction anchor to address the issue of indeterminate editing boundaries. We empirically demonstrate the superiority of event-based editing over the existing setting in resolving uncertainty in edited models, and curate a new benchmark dataset, EvEdit, derived from the CounterFact dataset. Moreover, while we observe that the event-based setting is significantly challenging for existing approaches, we propose a novel approach, Self-Edit, that shows stronger performance, achieving a 55.6% consistency improvement while maintaining the naturalness of generation.
