Zike Yan

CoINS: Counterfactual Interactive Navigation via Skill-Aware VLM

Jan 07, 2026

Abstract:Recent Vision-Language Models (VLMs) have demonstrated significant potential in robotic planning. However, they typically function as semantic reasoners, lacking an intrinsic understanding of the specific robot's physical capabilities. This limitation is particularly critical in interactive navigation, where robots must actively modify cluttered environments to create traversable paths. Existing VLM-based navigators are predominantly confined to passive obstacle avoidance, failing to reason about when and how to interact with objects to clear blocked paths. To bridge this gap, we propose Counterfactual Interactive Navigation via Skill-aware VLM (CoINS), a hierarchical framework that integrates skill-aware reasoning and robust low-level execution. Specifically, we fine-tune a VLM, named InterNav-VLM, which incorporates skill affordance and concrete constraint parameters into the input context and grounds them into a metric-scale environmental representation. By internalizing the logic of counterfactual reasoning through fine-tuning on the proposed InterNav dataset, the model learns to implicitly evaluate the causal effects of object removal on navigation connectivity, thereby determining interaction necessity and target selection. To execute the generated high-level plans, we develop a comprehensive skill library through reinforcement learning, specifically introducing traversability-oriented strategies to manipulate diverse objects for path clearance. A systematic benchmark in Isaac Sim is proposed to evaluate both the reasoning and execution aspects of interactive navigation. Extensive simulations and real-world experiments demonstrate that CoINS significantly outperforms representative baselines, achieving a 17% higher overall success rate and over 80% improvement in complex long-horizon scenarios compared to the best-performing baseline.
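The counterfactual question in this abstract (does removing an object restore navigation connectivity?) can be illustrated with an explicit grid search. This is only a minimal sketch under stated assumptions, not the authors' method: the abstract says InterNav-VLM learns this reasoning implicitly through fine-tuning, whereas the code below checks it by brute force. The occupancy-grid encoding, the object ids, and the function names `reachable` and `counterfactual_targets` are all hypothetical.

```python
from collections import deque

def reachable(grid, start, goal):
    """Breadth-first search over 4-connected cells; 0 is free, nonzero is occupied."""
    rows, cols = len(grid), len(grid[0])
    if grid[start[0]][start[1]] != 0 or grid[goal[0]][goal[1]] != 0:
        return False
    seen = {start}
    queue = deque([start])
    while queue:
        r, c = queue.popleft()
        if (r, c) == goal:
            return True
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] == 0 and (nr, nc) not in seen):
                seen.add((nr, nc))
                queue.append((nr, nc))
    return False

def counterfactual_targets(grid, start, goal, movable_ids):
    """Return (interaction_needed, ids whose removal alone reconnects start and goal)."""
    if reachable(grid, start, goal):
        return False, []  # a path already exists, so no interaction is needed
    candidates = []
    for obj in movable_ids:
        # Counterfactual map: the same grid with this one object cleared away.
        cleared = [[0 if cell == obj else cell for cell in row] for row in grid]
        if reachable(cleared, start, goal):
            candidates.append(obj)
    return True, candidates

# Hypothetical scene: 1 = fixed wall, 2 and 3 = movable objects.
grid = [
    [0, 1, 0, 0],
    [0, 2, 0, 3],
    [0, 1, 0, 0],
]
needed, targets = counterfactual_targets(grid, (0, 0), (0, 3), [2, 3])
print(needed, targets)
```

In this toy scene only object 2 blocks the sole gap in the wall, so it is the only removal that reconnects start and goal; object 3 is movable but irrelevant to connectivity.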

Reflection-Based Task Adaptation for Self-Improving VLA

Oct 14, 2025

Abstract:Pre-trained Vision-Language-Action (VLA) models represent a major leap towards general-purpose robots, yet efficiently adapting them to novel, specific tasks in-situ remains a significant hurdle. While reinforcement learning (RL) is a promising avenue for such adaptation, the process often suffers from low efficiency, hindering rapid task mastery. We introduce Reflective Self-Adaptation, a framework for rapid, autonomous task adaptation without human intervention. Our framework establishes a self-improving loop where the agent learns from its own experience to enhance both strategy and execution. The core of our framework is a dual-pathway architecture that addresses the full adaptation lifecycle. First, a Failure-Driven Reflective RL pathway enables rapid learning by using the VLM's causal reasoning to automatically synthesize a targeted, dense reward function from failure analysis. This provides a focused learning signal that significantly accelerates policy exploration. However, optimizing such proxy rewards introduces a potential risk of "reward hacking," where the agent masters the reward function but fails the actual task. To counteract this, our second pathway, Success-Driven Quality-Guided SFT, grounds the policy in holistic success. It identifies and selectively imitates high-quality successful trajectories, ensuring the agent remains aligned with the ultimate task goal. This pathway is strengthened by a conditional curriculum mechanism to aid initial exploration. We conduct experiments in challenging manipulation tasks. The results demonstrate that our framework achieves faster convergence and higher final success rates compared to representative baselines. Our work presents a robust solution for creating self-improving agents that can efficiently and reliably adapt to new environments.

DISCOVERSE: Efficient Robot Simulation in Complex High-Fidelity Environments

Jul 29, 2025

Abstract:We present the first unified, modular, open-source 3DGS-based simulation framework for Real2Sim2Real robot learning. It features a holistic Real2Sim pipeline that synthesizes hyper-realistic geometry and appearance of complex real-world scenarios, paving the way for analyzing and bridging the Sim2Real gap. Powered by Gaussian Splatting and MuJoCo, Discoverse enables massively parallel simulation of multiple sensor modalities and accurate physics, with inclusive supports for existing 3D assets, robot models, and ROS plugins, empowering large-scale robot learning and complex robotic benchmarks. Through extensive experiments on imitation learning, Discoverse demonstrates state-of-the-art zero-shot Sim2Real transfer performance compared to existing simulators. For code and demos: https://air-discoverse.github.io/.

COSMO: Combination of Selective Memorization for Low-cost Vision-and-Language Navigation

Mar 31, 2025

Abstract:Vision-and-Language Navigation (VLN) tasks have gained prominence within artificial intelligence research due to their potential application in fields like home assistants. Many contemporary VLN approaches, while based on transformer architectures, have increasingly incorporated additional components such as external knowledge bases or map information to enhance performance. These additions, while boosting performance, also lead to larger models and increased computational costs. In this paper, to achieve both high performance and low computational costs, we propose a novel architecture with the COmbination of Selective MemOrization (COSMO). Specifically, COSMO integrates state-space modules and transformer modules, and incorporates two VLN-customized selective state space modules: the Round Selective Scan (RSS) and the Cross-modal Selective State Space Module (CS3). RSS facilitates comprehensive inter-modal interactions within a single scan, while the CS3 module adapts the selective state space module into a dual-stream architecture, thereby enhancing the acquisition of cross-modal interactions. Experimental validations on three mainstream VLN benchmarks, REVERIE, R2R, and R2R-CE, not only demonstrate competitive navigation performance of our model but also show a significant reduction in computational costs.

An Real-Sim-Real (RSR) Loop Framework for Generalizable Robotic Policy Transfer with Differentiable Simulation

Mar 13, 2025

Abstract:The sim-to-real gap remains a critical challenge in robotics, hindering the deployment of algorithms trained in simulation to real-world systems. This paper introduces a novel Real-Sim-Real (RSR) loop framework leveraging differentiable simulation to address this gap by iteratively refining simulation parameters, aligning them with real-world conditions, and enabling robust and efficient policy transfer. A key contribution of our work is the design of an informative cost function that encourages the collection of diverse and representative real-world data, minimizing bias and maximizing the utility of each data point for simulation refinement. This cost function integrates seamlessly into existing reinforcement learning algorithms (e.g., PPO, SAC) and ensures a balanced exploration of critical regions in the real domain. Furthermore, our approach is implemented on the versatile Mujoco MJX platform, and our framework is compatible with a wide range of robotic systems. Experimental results on several robotic manipulation tasks demonstrate that our method significantly reduces the sim-to-real gap, achieving high task performance and generalizability across diverse scenarios of both explicit and implicit environmental uncertainties.

ActiveSplat: High-Fidelity Scene Reconstruction through Active Gaussian Splatting

Oct 29, 2024

Abstract:We propose ActiveSplat, an autonomous high-fidelity reconstruction system leveraging Gaussian splatting. Taking advantage of efficient and realistic rendering, the system establishes a unified framework for online mapping, viewpoint selection, and path planning. The key to ActiveSplat is a hybrid map representation that integrates both dense information about the environment and a sparse abstraction of the workspace. Therefore, the system leverages sparse topology for efficient viewpoint sampling and path planning, while exploiting view-dependent dense prediction for viewpoint selection, facilitating efficient decision-making with promising accuracy and completeness. A hierarchical planning strategy based on the topological map is adopted to mitigate repetitive trajectories and improve local granularity given limited budgets, ensuring high-fidelity reconstruction with photorealistic view synthesis. Extensive experiments and ablation studies validate the efficacy of the proposed method in terms of reconstruction accuracy, data coverage, and exploration efficiency. Project page: https://li-yuetao.github.io/ActiveSplat/.

Active Neural Mapping at Scale

Sep 30, 2024

Abstract:We introduce a NeRF-based active mapping system that enables efficient and robust exploration of large-scale indoor environments. The key to our approach is the extraction of a generalized Voronoi graph (GVG) from the continually updated neural map, leading to the synergistic integration of scene geometry, appearance, topology, and uncertainty. Anchoring uncertain areas induced by the neural map to the vertices of GVG allows the exploration to undergo adaptive granularity along a safe path that traverses unknown areas efficiently. Harnessing a modern hybrid NeRF representation, the proposed system achieves competitive results in terms of reconstruction accuracy, coverage completeness, and exploration efficiency even when scaling up to large indoor environments. Extensive results at different scales validate the efficacy of the proposed system.

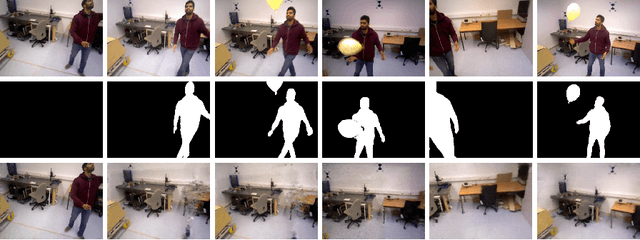

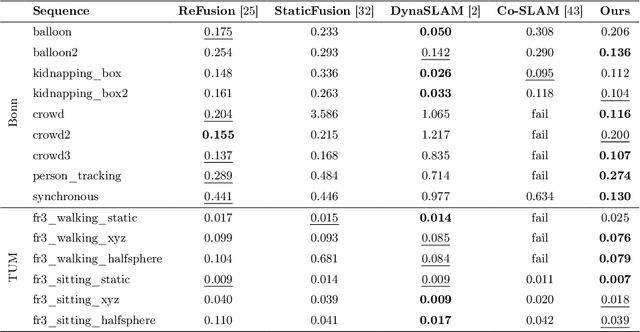

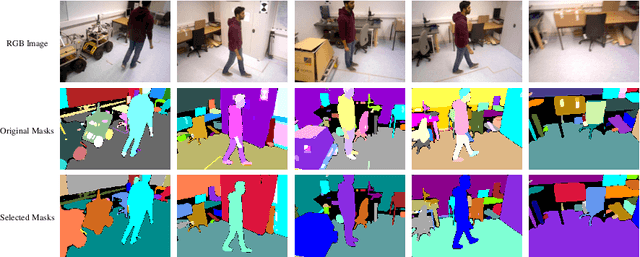

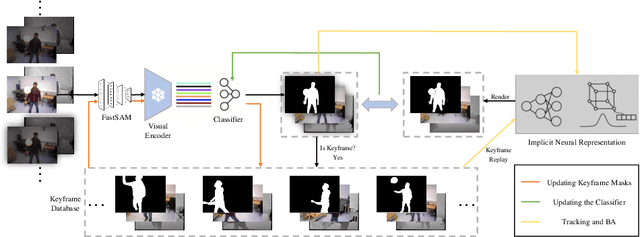

Learn to Memorize and to Forget: A Continual Learning Perspective of Dynamic SLAM

Jul 18, 2024

Abstract:Simultaneous localization and mapping (SLAM) with implicit neural representations has received extensive attention due to the expressive representation power and the innovative paradigm of continual learning. However, deploying such a system within a dynamic environment has not been well-studied. Such challenges are intractable even for conventional algorithms since observations from different views with dynamic objects involved break the geometric and photometric consistency, whereas the consistency lays the foundation for joint optimizing the camera pose and the map parameters. In this paper, we best exploit the characteristics of continual learning and propose a novel SLAM framework for dynamic environments. While past efforts have been made to avoid catastrophic forgetting by exploiting an experience replay strategy, we view forgetting as a desirable characteristic. By adaptively controlling the replayed buffer, the ambiguity caused by moving objects can be easily alleviated through forgetting. We restrain the replay of the dynamic objects by introducing a continually-learned classifier for dynamic object identification. The iterative optimization of the neural map and the classifier notably improves the robustness of the SLAM system under a dynamic environment. Experiments on challenging datasets verify the effectiveness of the proposed framework.
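The idea of treating forgetting as desirable, by keeping dynamic observations out of experience replay, can be sketched as a filtered replay buffer. This is an illustrative sketch under assumptions rather than the paper's implementation: the class name `ForgettingReplayBuffer`, the fixed probability threshold, and the per-sample `dynamic_prob` score (standing in for the output of the continually-learned classifier) are all hypothetical.

```python
import random

class ForgettingReplayBuffer:
    """Replay buffer that never replays samples flagged as likely dynamic."""

    def __init__(self, threshold=0.5, seed=0):
        self.samples = []           # list of (sample, dynamic_prob) pairs
        self.threshold = threshold  # assumed cut-off on the classifier score
        self.rng = random.Random(seed)

    def add(self, sample, dynamic_prob):
        """Store a sample with its (hypothetical) dynamic-object probability."""
        self.samples.append((sample, dynamic_prob))

    def replayable(self):
        """Samples considered static; everything else is left to be forgotten."""
        return [s for s, p in self.samples if p < self.threshold]

    def sample(self, k):
        """Draw up to k static samples, without replacement, for map optimization."""
        pool = self.replayable()
        return self.rng.sample(pool, min(k, len(pool)))

buffer = ForgettingReplayBuffer(threshold=0.5)
buffer.add("wall", 0.1)
buffer.add("person", 0.9)
buffer.add("floor", 0.2)
print(buffer.replayable())
```

Because the "person" sample is never replayed, a continually trained map would stop being reinforced on it, which is the alleviation-through-forgetting effect the abstract describes.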

Camera Relocalization in Shadow-free Neural Radiance Fields

May 23, 2024

Abstract:Camera relocalization is a crucial problem in computer vision and robotics. Recent advancements in neural radiance fields (NeRFs) have shown promise in synthesizing photo-realistic images. Several works have utilized NeRFs for refining camera poses, but they do not account for lighting changes that can affect scene appearance and shadow regions, causing a degraded pose optimization process. In this paper, we propose a two-staged pipeline that normalizes images with varying lighting and shadow conditions to improve camera relocalization. We implement our scene representation upon a hash-encoded NeRF which significantly boosts up the pose optimization process. To account for the noisy image gradient computing problem in grid-based NeRFs, we further propose a re-devised truncated dynamic low-pass filter (TDLF) and a numerical gradient averaging technique to smoothen the process. Experimental results on several datasets with varying lighting conditions demonstrate that our method achieves state-of-the-art results in camera relocalization under varying lighting conditions. Code and data will be made publicly available.

Blending Distributed NeRFs with Tri-stage Robust Pose Optimization

May 05, 2024

Abstract:Due to the limited model capacity, leveraging distributed Neural Radiance Fields (NeRFs) for modeling extensive urban environments has become a necessity. However, current distributed NeRF registration approaches encounter aliasing artifacts, arising from discrepancies in rendering resolutions and suboptimal pose precision. These factors collectively deteriorate the fidelity of pose estimation within NeRF frameworks, resulting in occlusion artifacts during the NeRF blending stage. In this paper, we present a distributed NeRF system with tri-stage pose optimization. In the first stage, precise poses of images are achieved by bundle adjusting Mip-NeRF 360 with a coarse-to-fine strategy. In the second stage, we incorporate the inverting Mip-NeRF 360, coupled with the truncated dynamic low-pass filter, to enable the achievement of robust and precise poses, termed Frame2Model optimization. On top of this, we obtain a coarse transformation between NeRFs in different coordinate systems. In the third stage, we fine-tune the transformation between NeRFs by Model2Model pose optimization. After obtaining precise transformation parameters, we proceed to implement NeRF blending, showcasing superior performance metrics in both real-world and simulation scenarios. Codes and data will be publicly available at https://github.com/boilcy/Distributed-NeRF.
