Siqi Zhang

A Unified View of Attention and Residual Sinks: Outlier-Driven Rescaling is Essential for Transformer Training

Jan 30, 2026

Abstract:We investigate the functional role of emergent outliers in large language models, specifically attention sinks (a few tokens that consistently receive large attention logits) and residual sinks (a few fixed dimensions with persistently large activations across most tokens). We hypothesize that these outliers, in conjunction with the corresponding normalizations (e.g., softmax attention and RMSNorm), effectively rescale other non-outlier components. We term this phenomenon outlier-driven rescaling and validate this hypothesis across different model architectures and training token counts. This view unifies the origin and mitigation of both sink types. Our main conclusions and observations include: (1) Outliers function jointly with normalization: removing normalization eliminates the corresponding outliers but degrades training stability and performance; directly clipping outliers while retaining normalization leads to degradation, indicating that outlier-driven rescaling contributes to training stability. (2) Outliers serve as rescaling factors rather than as contributors, as the final contributions of attention and residual sinks are significantly smaller than those of non-outliers. (3) Outliers can be absorbed into learnable parameters or mitigated via explicit gated rescaling, leading to improved training performance (average gain of 2 points) and enhanced quantization robustness (1.2-point degradation under W4A4 quantization).
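The rescaling effect described in this abstract can be illustrated with a small numeric sketch. It is not the authors' code: the toy vectors, the gain-free `rms_norm`, and the helper names are assumptions chosen only to show how one large entry shrinks every other entry after normalization while leaving their relative proportions unchanged.

```python
import math

def rms_norm(x, eps=1e-6):
    """RMSNorm without a learnable gain: x / sqrt(mean(x^2) + eps)."""
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [v / rms for v in x]

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical toy activation: three ordinary dimensions plus one extra dimension.
base = [1.0, -0.5, 0.25]
without_outlier = rms_norm(base + [0.0])   # extra dimension is inactive
with_outlier = rms_norm(base + [50.0])     # extra dimension acts as a "residual sink"

# Hypothetical attention logits: position 0 plays the role of an "attention sink".
plain = softmax([1.0, 2.0])
with_sink = softmax([8.0, 1.0, 2.0])
non_sink_mass = sum(with_sink[1:])         # attention mass left for non-sink tokens
```

In this toy setting the non-outlier entries are scaled down by a common factor in both cases, so the outlier behaves like a rescaling knob rather than a source of content.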

LightCom: A Generative AI-Augmented Framework for QoE-Oriented Communications

Jul 23, 2025

Abstract:Data-intensive and immersive applications, such as virtual reality, impose stringent quality of experience (QoE) requirements that challenge traditional quality of service (QoS)-driven communication systems. This paper presents LightCom, a lightweight encoding and generative AI (GenAI)-augmented decoding framework, designed for QoE-oriented communications under low signal-to-noise ratio (SNR) conditions. LightCom simplifies transmitter design by applying basic low-pass filtering for source coding and minimal channel coding, significantly reducing processing complexity and energy consumption. At the receiver, GenAI models reconstruct high-fidelity content from highly compressed and degraded signals by leveraging generative priors to infer semantic and structural information beyond traditional decoding capabilities. The key design principles are analyzed, along with the sufficiency and error-resilience of the source representation. We also develop importance-aware power allocation strategies to enhance QoE and extend perceived coverage. Simulation results demonstrate that LightCom achieves up to a 14 dB improvement in robustness and a 9 dB gain in perceived coverage, outperforming traditional QoS-driven systems relying on sophisticated source and channel coding. This paradigm shift moves communication systems towards human-centric QoE metrics rather than bit-level fidelity, paving the way for more efficient and resilient wireless networks.

MedSG-Bench: A Benchmark for Medical Image Sequences Grounding

May 17, 2025

Abstract:Visual grounding is essential for precise perception and reasoning in multimodal large language models (MLLMs), especially in medical imaging domains. While existing medical visual grounding benchmarks primarily focus on single-image scenarios, real-world clinical applications often involve sequential images, where accurate lesion localization across different modalities and temporal tracking of disease progression (e.g., pre- vs. post-treatment comparison) require fine-grained cross-image semantic alignment and context-aware reasoning. To remedy the underrepresentation of image sequences in existing medical visual grounding benchmarks, we propose MedSG-Bench, the first benchmark tailored for Medical Image Sequences Grounding. It comprises eight VQA-style tasks, formulated into two paradigms of the grounding tasks, including 1) Image Difference Grounding, which focuses on detecting change regions across images, and 2) Image Consistency Grounding, which emphasizes detection of consistent or shared semantics across sequential images. MedSG-Bench covers 76 public datasets, 10 medical imaging modalities, and a wide spectrum of anatomical structures and diseases, totaling 9,630 question-answer pairs. We benchmark both general-purpose MLLMs (e.g., Qwen2.5-VL) and medical-domain specialized MLLMs (e.g., HuatuoGPT-vision), observing that even the advanced models exhibit substantial limitations in medical sequential grounding tasks. To advance this field, we construct MedSG-188K, a large-scale instruction-tuning dataset tailored for sequential visual grounding, and further develop MedSeq-Grounder, an MLLM designed to facilitate future research on fine-grained understanding across medical sequential images. The benchmark, dataset, and model are available at https://huggingface.co/MedSG-Bench

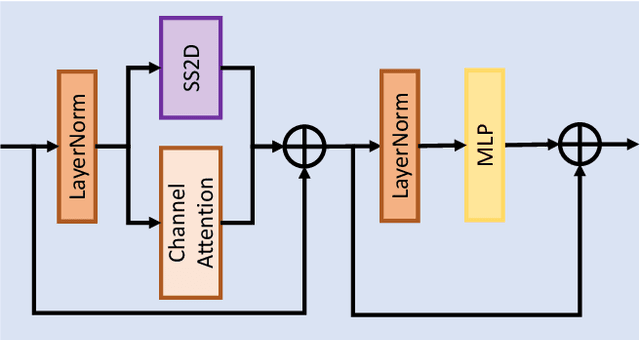

COSMO: Combination of Selective Memorization for Low-cost Vision-and-Language Navigation

Mar 31, 2025

Abstract:Vision-and-Language Navigation (VLN) tasks have gained prominence within artificial intelligence research due to their potential application in fields like home assistants. Many contemporary VLN approaches, while based on transformer architectures, have increasingly incorporated additional components such as external knowledge bases or map information to enhance performance. These additions, while boosting performance, also lead to larger models and increased computational costs. In this paper, to achieve both high performance and low computational costs, we propose a novel architecture with the COmbination of Selective MemOrization (COSMO). Specifically, COSMO integrates state-space modules and transformer modules, and incorporates two VLN-customized selective state space modules: the Round Selective Scan (RSS) and the Cross-modal Selective State Space Module (CS3). RSS facilitates comprehensive inter-modal interactions within a single scan, while the CS3 module adapts the selective state space module into a dual-stream architecture, thereby enhancing the acquisition of cross-modal interactions. Experimental validations on three mainstream VLN benchmarks, REVERIE, R2R, and R2R-CE, not only demonstrate competitive navigation performance of our model but also show a significant reduction in computational costs.

FlexVLN: Flexible Adaptation for Diverse Vision-and-Language Navigation Tasks

Mar 18, 2025

Abstract:The aspiration of the Vision-and-Language Navigation (VLN) task has long been to develop an embodied agent with robust adaptability, capable of seamlessly transferring its navigation capabilities across various tasks. Despite remarkable advancements in recent years, most methods necessitate dataset-specific training, thereby lacking the capability to generalize across diverse datasets encompassing distinct types of instructions. Large language models (LLMs) have demonstrated exceptional reasoning and generalization abilities, exhibiting immense potential in robot action planning. In this paper, we propose FlexVLN, an innovative hierarchical approach to VLN that integrates the fundamental navigation ability of a supervised-learning-based Instruction Follower with the robust generalization ability of the LLM Planner, enabling effective generalization across diverse VLN datasets. Moreover, a verification mechanism and a multi-model integration mechanism are proposed to mitigate potential hallucinations by the LLM Planner and enhance execution accuracy of the Instruction Follower. We take REVERIE, SOON, and CVDN-target as out-of-domain datasets for assessing generalization ability. The generalization performance of FlexVLN surpasses that of all the previous methods to a large extent.

Importance-Aware Source-Channel Coding for Multi-Modal Task-Oriented Semantic Communication

Feb 22, 2025

Abstract:This paper explores the concept of information importance in multi-modal task-oriented semantic communication systems, emphasizing the need for high accuracy and efficiency to fulfill task-specific objectives. At the transmitter, generative AI (GenAI) is employed to partition visual data objects into semantic segments, each representing distinct, task-relevant information. These segments are subsequently encoded into tokens, enabling precise and adaptive transmission control. Building on this framework, we present importance-aware source and channel coding strategies that dynamically adjust to varying levels of significance at the segment, token, and bit levels. The proposed strategies prioritize high fidelity for essential information while permitting controlled distortion for less critical elements, optimizing overall resource utilization. Furthermore, we address the source-channel coding challenge in semantic multiuser systems, particularly in multicast scenarios, where segment importance varies among receivers. To tackle these challenges, we propose solutions such as rate-splitting coded progressive transmission, ensuring flexibility and robustness in task-specific semantic communication.

Can GNNs Learn Link Heuristics? A Concise Review and Evaluation of Link Prediction Methods

Nov 22, 2024

Abstract:This paper explores the ability of Graph Neural Networks (GNNs) to learn various forms of information for link prediction, alongside a brief review of existing link prediction methods. Our analysis reveals that GNNs cannot effectively learn structural information related to the number of common neighbors between two nodes, primarily due to the set-based pooling used in the neighborhood aggregation scheme. Also, our extensive experiments indicate that trainable node embeddings can improve the performance of GNN-based link prediction models. Importantly, we observe that the denser the graph, the greater the improvement. We attribute this to the characteristics of node embeddings, where the link state of each link sample could be encoded into the embeddings of nodes that are involved in the neighborhood aggregation of the two nodes in that link sample. In denser graphs, every node could have more opportunities to participate in the neighborhood aggregation of other nodes and encode the states of more link samples into its embedding, thus learning better node embeddings for link prediction. Lastly, we demonstrate that the insights gained from our research carry important implications for identifying the limitations of existing link prediction methods, which could guide the future development of more robust algorithms.
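As a rough illustration of the common-neighbor heuristic mentioned in this abstract, and of one way set-based pooling can discard count information, consider the sketch below. The toy graph, the constant node features, and the function names are assumptions for illustration only; this is not the paper's experimental setup.

```python
def common_neighbors(adj, u, v):
    """Classic link heuristic: number of neighbors shared by u and v."""
    return len(adj[u] & adj[v])

def mean_pool(features, neighbors):
    """Mean aggregation over a neighbor set, as used in many GNN layers."""
    return sum(features[n] for n in neighbors) / len(neighbors)

# Hypothetical undirected toy graph stored as adjacency sets.
adj = {
    "a": {"c", "d", "e"},
    "b": {"c", "d", "f"},
    "c": {"a", "b"},
    "d": {"a", "b"},
    "e": {"a"},
    "f": {"b"},
}
cn_ab = common_neighbors(adj, "a", "b")   # "c" and "d" are shared

# With identical (featureless) node inputs, mean pooling returns the same value
# for a node with three neighbors and a node with one neighbor, so the
# neighborhood size is not visible in the pooled output.
features = {node: 1.0 for node in adj}
pooled_a = mean_pool(features, adj["a"])
pooled_e = mean_pool(features, adj["e"])
```

Here `cn_ab` is 2, while `pooled_a` and `pooled_e` coincide even though the two nodes have different degrees.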

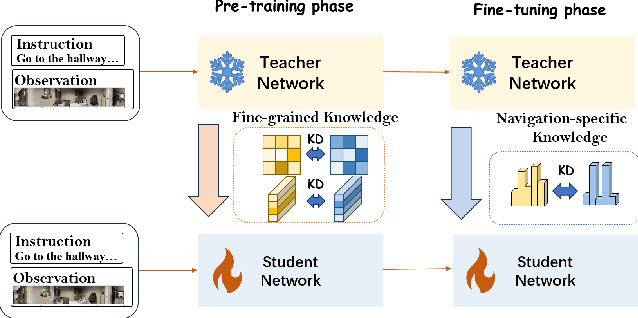

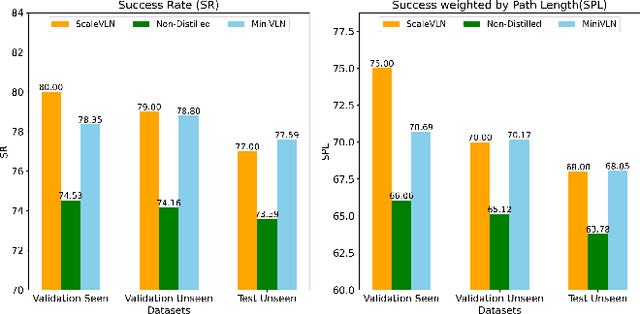

MiniVLN: Efficient Vision-and-Language Navigation by Progressive Knowledge Distillation

Sep 27, 2024

Abstract:In recent years, Embodied Artificial Intelligence (Embodied AI) has advanced rapidly, yet the increasing size of models conflicts with the limited computational capabilities of Embodied AI platforms. To address this challenge, we aim to achieve both high model performance and practical deployability. Specifically, we focus on Vision-and-Language Navigation (VLN), a core task in Embodied AI. This paper introduces a two-stage knowledge distillation framework, producing a student model, MiniVLN, and showcasing the significant potential of distillation techniques in developing lightweight models. The proposed method aims to capture fine-grained knowledge during the pretraining phase and navigation-specific knowledge during the fine-tuning phase. Our findings indicate that the two-stage distillation approach is more effective in narrowing the performance gap between the teacher model and the student model compared to single-stage distillation. On the public R2R and REVERIE benchmarks, MiniVLN achieves performance on par with the teacher model while having only about 12% of the teacher model's parameter count.

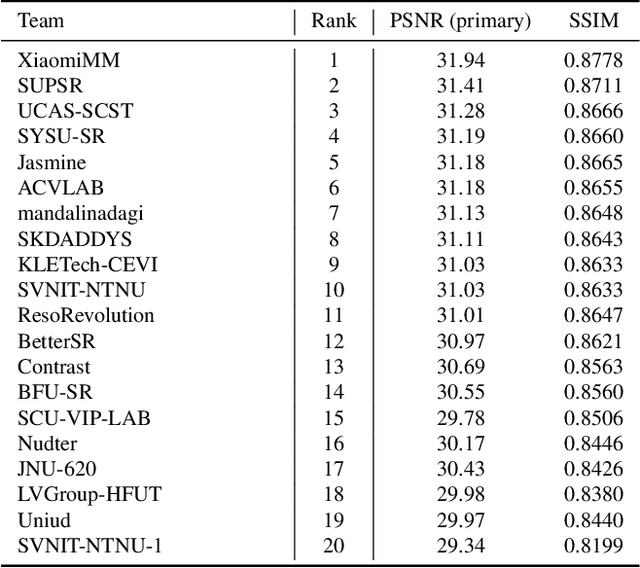

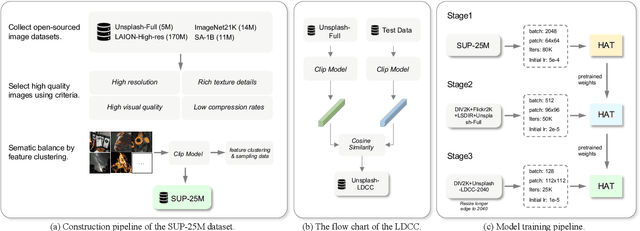

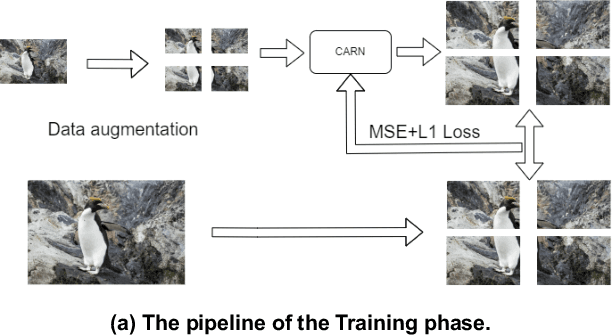

NTIRE 2024 Challenge on Image Super-Resolution (×4): Methods and Results

Apr 15, 2024

Abstract:This paper reviews the NTIRE 2024 challenge on image super-resolution (×4), highlighting the solutions proposed and the outcomes obtained. The challenge involves generating corresponding high-resolution (HR) images, magnified by a factor of four, from low-resolution (LR) inputs using prior information. The LR images originate from bicubic downsampling degradation. The aim of the challenge is to obtain designs/solutions with the most advanced SR performance, with no constraints on computational resources (e.g., model size and FLOPs) or training data. The track of this challenge assesses performance with the PSNR metric on the DIV2K testing dataset. The competition attracted 199 registrants, with 20 teams submitting valid entries. This collective endeavour not only pushes the boundaries of performance in single-image SR but also offers a comprehensive overview of current trends in this field.
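For readers unfamiliar with the PSNR metric named in this abstract, a minimal sketch of the standard definition, PSNR = 10 · log10(MAX² / MSE), follows. The flat pixel lists and the 8-bit peak value of 255 are assumptions; the challenge's exact evaluation protocol (color space, border handling) is not stated in the abstract and is not reproduced here.

```python
import math

def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel lists."""
    if len(reference) != len(estimate):
        raise ValueError("inputs must have the same number of pixels")
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images: PSNR is unbounded
    return 10.0 * math.log10(max_value ** 2 / mse)

# Hypothetical example: every pixel of the estimate is off by 25.5 (10% of the peak).
hr = [100.0, 50.0, 200.0]
sr = [125.5, 75.5, 225.5]
score = psnr(hr, sr)
```

A uniform error of 10% of the peak value gives an MSE of 650.25, which corresponds to about 20 dB under this definition.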

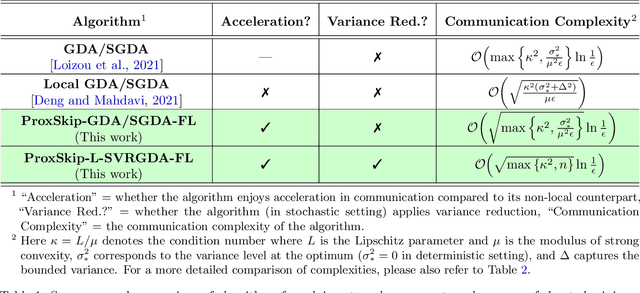

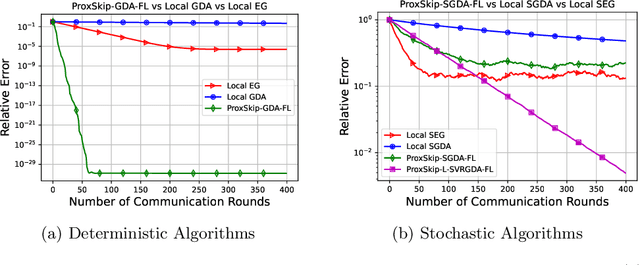

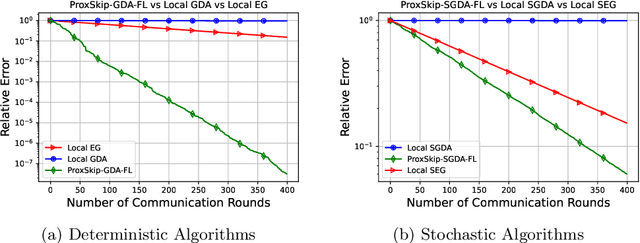

Communication-Efficient Gradient Descent-Accent Methods for Distributed Variational Inequalities: Unified Analysis and Local Updates

Jun 08, 2023

Abstract:Distributed and federated learning algorithms and techniques are associated primarily with minimization problems. However, with the increase of minimax optimization and variational inequality problems in machine learning, the necessity of designing efficient distributed/federated learning approaches for these problems is becoming more apparent. In this paper, we provide a unified convergence analysis of communication-efficient local training methods for distributed variational inequality problems (VIPs). Our approach is based on a general key assumption on the stochastic estimates that allows us to propose and analyze several novel local training algorithms under a single framework for solving a class of structured non-monotone VIPs. We present the first local gradient descent-accent algorithms with provably improved communication complexity for solving distributed variational inequalities on heterogeneous data. The general algorithmic framework recovers state-of-the-art algorithms and their sharp convergence guarantees when the setting is specialized to minimization or minimax optimization problems. Finally, we demonstrate the strong performance of the proposed algorithms compared to state-of-the-art methods when solving federated minimax optimization problems.
