Lu Zhang

Tony

Quasi-multimodal-based pathophysiological feature learning for retinal disease diagnosis

Feb 03, 2026

Abstract: Retinal diseases spanning a broad spectrum can be effectively identified and diagnosed using complementary signals from multimodal data. However, multimodal diagnosis in ophthalmic practice is typically hampered by data heterogeneity, potential invasiveness, and registration complexity, among other issues. As such, a unified framework that integrates multimodal data synthesis and fusion is proposed for retinal disease classification and grading. Specifically, the synthesized multimodal data incorporates fundus fluorescein angiography (FFA), multispectral imaging (MSI), and saliency maps that emphasize latent lesions as well as optic disc/cup regions. Parallel models are independently trained to learn modality-specific representations that capture cross-pathophysiological signatures. These features are then adaptively calibrated within and across modalities to perform information pruning and flexible integration according to downstream tasks. The proposed learning system is thoroughly interpreted through visualizations in both image and feature spaces. Extensive experiments on two public datasets demonstrated the superiority of our approach over state-of-the-art ones in the tasks of multi-label classification (F1-score: 0.683, AUC: 0.953) and diabetic retinopathy grading (Accuracy: 0.842, Kappa: 0.861). This work not only enhances the accuracy and efficiency of retinal disease screening but also offers a scalable framework for data augmentation across various medical imaging modalities.
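For readers unfamiliar with the Kappa score reported for diabetic retinopathy grading, the following is a minimal sketch of quadratic weighted Cohen's kappa, a metric commonly used for ordinal grading tasks. The abstract does not state which kappa variant was used, so the quadratic weighting is an assumption, and the function name `quadratic_weighted_kappa` is illustrative only.

```python
def quadratic_weighted_kappa(y_true, y_pred, num_classes):
    """Quadratic weighted Cohen's kappa for integer grades in [0, num_classes).

    Assumption: the weighting scheme is quadratic; the abstract does not say
    which kappa variant was reported.
    """
    if num_classes < 2:
        raise ValueError("num_classes must be at least 2")
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and equal length")

    n = len(y_true)
    # Confusion matrix: observed[i][j] counts true grade i predicted as j.
    observed = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        observed[t][p] += 1

    true_hist = [sum(row) for row in observed]
    pred_hist = [sum(observed[i][j] for i in range(num_classes))
                 for j in range(num_classes)]

    weighted_observed = 0.0
    weighted_expected = 0.0
    for i in range(num_classes):
        for j in range(num_classes):
            # Penalty grows with the squared distance between grades.
            weight = (i - j) ** 2 / (num_classes - 1) ** 2
            weighted_observed += weight * observed[i][j]
            # Expected count under independent marginals.
            weighted_expected += weight * true_hist[i] * pred_hist[j] / n

    if weighted_expected == 0.0:
        # All samples share one grade in both lists: perfect agreement.
        return 1.0
    return 1.0 - weighted_observed / weighted_expected
```

A value of 1.0 means perfect agreement with the reference grades, and 0.0 means agreement no better than chance.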

Community-Level Modeling of Gyral Folding Patterns for Robust and Anatomically Informed Individualized Brain Mapping

Feb 01, 2026

Abstract: Cortical folding exhibits substantial inter-individual variability while preserving stable anatomical landmarks that enable fine-scale characterization of cortical organization. Among these, the three-hinge gyrus (3HG) serves as a key folding primitive, showing consistent topology yet meaningful variations in morphology, connectivity, and function. Existing landmark-based methods typically model each 3HG independently, ignoring that 3HGs form higher-order folding communities that capture mesoscale structure. This simplification weakens anatomical representation and makes one-to-one matching sensitive to positional variability and noise. We propose a spectral graph representation learning framework that models community-level folding units rather than isolated landmarks. Each 3HG is encoded using a dual-profile representation combining surface topology and structural connectivity. Subject-specific spectral clustering identifies coherent folding communities, followed by topological refinement to preserve anatomical continuity. For cross-subject correspondence, we introduce Joint Morphological-Geometric Matching, jointly optimizing geometric and morphometric similarity. Across over 1000 Human Connectome Project subjects, the resulting communities show reduced morphometric variance, stronger modular organization, improved hemispheric consistency, and superior alignment compared with atlas-based, landmark-based, and embedding-based baselines. These findings demonstrate that community-level modeling provides a robust and anatomically grounded framework for individualized cortical characterization and reliable cross-subject correspondence.

Parametric Hyperbolic Conservation Laws: A Unified Framework for Conservation, Entropy Stability, and Hyperbolicity

Jan 28, 2026

Abstract: We propose a parametric hyperbolic conservation law (SymCLaw) for learning hyperbolic systems directly from data while ensuring conservation, entropy stability, and hyperbolicity by design. Unlike existing approaches that typically enforce only conservation or rely on prior knowledge of the governing equations, our method parameterizes the flux functions in a form that guarantees real eigenvalues and complete eigenvectors of the flux Jacobian, thereby preserving hyperbolicity. At the same time, we embed entropy-stable design principles by jointly learning a convex entropy function and its associated flux potential, ensuring entropy dissipation and the selection of physically admissible weak solutions. A corresponding entropy-stable numerical flux scheme provides compatibility with standard discretizations, allowing seamless integration into classical solvers. Numerical experiments on benchmark problems, including Burgers, shallow water, Euler, and KPP equations, demonstrate that SymCLaw generalizes to unseen initial conditions, maintains stability under noisy training data, and achieves accurate long-time predictions, highlighting its potential as a principled foundation for data-driven modeling of hyperbolic conservation laws.

Domain-Expert-Guided Hybrid Mixture-of-Experts for Medical AI: Integrating Data-Driven Learning with Clinical Priors

Jan 25, 2026

Abstract: Mixture-of-Experts (MoE) models increase representational capacity with modest computational cost, but their effectiveness in specialized domains such as medicine is limited by small datasets. In contrast, clinical practice offers rich expert knowledge, such as physician gaze patterns and diagnostic heuristics, that models cannot reliably learn from limited data. Combining data-driven experts, which capture novel patterns, with domain-expert-guided experts, which encode accumulated clinical insights, provides complementary strengths for robust and clinically meaningful learning. To this end, we propose Domain-Knowledge-Guided Hybrid MoE (DKGH-MoE), a plug-and-play and interpretable module that unifies data-driven learning with domain expertise. DKGH-MoE integrates a data-driven MoE, which extracts novel features from raw imaging data, and a domain-expert-guided MoE, which incorporates clinical priors, specifically clinician eye-gaze cues, to emphasize regions of high diagnostic relevance. By integrating domain expert insights with data-driven features, DKGH-MoE improves both performance and interpretability.

Towards Cross-Platform Generalization: Domain Adaptive 3D Detection with Augmentation and Pseudo-Labeling

Jan 13, 2026

Abstract: This technical report presents the award-winning solution to the Cross-platform 3D Object Detection task in the RoboSense2025 Challenge. Our approach is built upon PVRCNN++, an efficient 3D object detection framework that effectively integrates point-based and voxel-based features. On top of this foundation, we improve cross-platform generalization by narrowing domain gaps through tailored data augmentation and a self-training strategy with pseudo-labels. These enhancements enabled our approach to secure 3rd place in the challenge, achieving a 3D AP of 62.67% for the Car category on the phase-1 target domain, and 58.76% and 49.81% for the Car and Pedestrian categories, respectively, on the phase-2 target domain.
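The self-training step mentioned above can be illustrated with a generic confidence-threshold filter for pseudo-labels. This is a minimal sketch of the general technique, not the report's actual pipeline: the `Detection` type, the `select_pseudo_labels` function, and all threshold values are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Detection:
    """Hypothetical container for one predicted 3D box on target-domain data."""
    label: str
    score: float
    box: Tuple[float, ...]  # e.g. (x, y, z, length, width, height, yaw)


def select_pseudo_labels(
    detections: List[Detection],
    thresholds: Dict[str, float],
    default_threshold: float = 0.5,
) -> List[Detection]:
    """Keep only detections confident enough to serve as training labels.

    Per-class thresholds are an assumption; classes with no entry fall back
    to default_threshold.
    """
    return [
        det for det in detections
        if det.score >= thresholds.get(det.label, default_threshold)
    ]


# Illustrative usage with made-up scores and thresholds.
predictions = [
    Detection("Car", 0.91, (1.0, 2.0, 0.0, 4.2, 1.8, 1.6, 0.1)),
    Detection("Car", 0.42, (9.0, 3.0, 0.0, 4.0, 1.7, 1.5, 1.2)),
    Detection("Pedestrian", 0.55, (5.0, 1.0, 0.0, 0.6, 0.6, 1.7, 0.0)),
]
pseudo_labels = select_pseudo_labels(
    predictions, {"Car": 0.7, "Pedestrian": 0.5}
)
```

In a self-training loop, the retained detections would be added to the training set for the next round, so the thresholds trade label noise against label coverage.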

The RoboSense Challenge: Sense Anything, Navigate Anywhere, Adapt Across Platforms

Jan 08, 2026

Abstract: Autonomous systems are increasingly deployed in open and dynamic environments -- from city streets to aerial and indoor spaces -- where perception models must remain reliable under sensor noise, environmental variation, and platform shifts. However, even state-of-the-art methods often degrade under unseen conditions, highlighting the need for robust and generalizable robot sensing. The RoboSense 2025 Challenge is designed to advance robustness and adaptability in robot perception across diverse sensing scenarios. It unifies five complementary research tracks spanning language-grounded decision making, socially compliant navigation, sensor configuration generalization, cross-view and cross-modal correspondence, and cross-platform 3D perception. Together, these tasks form a comprehensive benchmark for evaluating real-world sensing reliability under domain shifts, sensor failures, and platform discrepancies. RoboSense 2025 provides standardized datasets, baseline models, and unified evaluation protocols, enabling large-scale and reproducible comparison of robust perception methods. The challenge attracted 143 teams from 85 institutions across 16 countries, reflecting broad community engagement. By consolidating insights from 23 winning solutions, this report highlights emerging methodological trends, shared design principles, and open challenges across all tracks, marking a step toward building robots that can sense reliably, act robustly, and adapt across platforms in real-world environments.

OpenAI GPT-5 System Card

Dec 19, 2025

Abstract: This is the system card published alongside the OpenAI GPT-5 launch, August 2025. GPT-5 is a unified system with a smart and fast model that answers most questions, a deeper reasoning model for harder problems, and a real-time router that quickly decides which model to use based on conversation type, complexity, tool needs, and explicit intent (for example, if you say 'think hard about this' in the prompt). The router is continuously trained on real signals, including when users switch models, preference rates for responses, and measured correctness, improving over time. Once usage limits are reached, a mini version of each model handles remaining queries. This system card focuses primarily on gpt-5-thinking and gpt-5-main, while evaluations for other models are available in the appendix. The GPT-5 system not only outperforms previous models on benchmarks and answers questions more quickly, but -- more importantly -- is more useful for real-world queries. We've made significant advances in reducing hallucinations, improving instruction following, and minimizing sycophancy, and have leveled up GPT-5's performance in three of ChatGPT's most common uses: writing, coding, and health. All of the GPT-5 models additionally feature safe-completions, our latest approach to safety training to prevent disallowed content. Similarly to ChatGPT agent, we have decided to treat gpt-5-thinking as High capability in the Biological and Chemical domain under our Preparedness Framework, activating the associated safeguards. While we do not have definitive evidence that this model could meaningfully help a novice to create severe biological harm -- our defined threshold for High capability -- we have chosen to take a precautionary approach.

PushGen: Push Notifications Generation with LLM

Dec 16, 2025

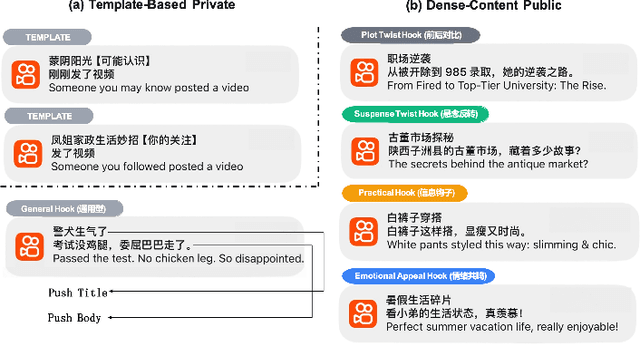

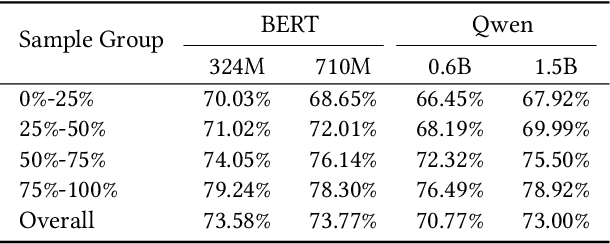

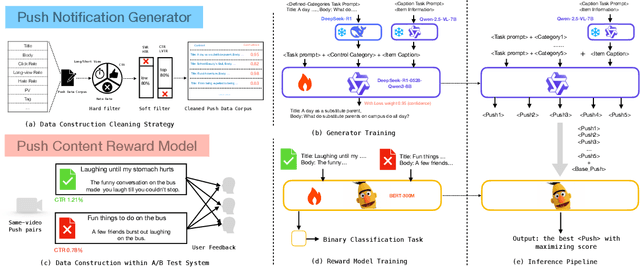

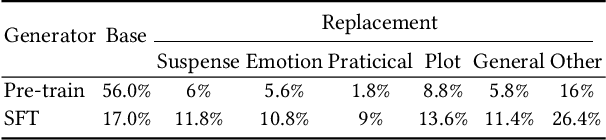

Abstract: We present PushGen, an automated framework for generating high-quality push notifications comparable to human-crafted content. With the rise of generative models, there is growing interest in leveraging LLMs for push content generation. Although LLMs make content generation straightforward and cost-effective, maintaining stylistic control and reliable quality assessment remains challenging, as both directly impact user engagement. To address these issues, PushGen combines two key components: (1) a controllable category prompt technique to guide LLM outputs toward desired styles, and (2) a reward model that ranks and selects generated candidates. Extensive offline and online experiments demonstrate the effectiveness of PushGen, which has been deployed in large-scale industrial applications, serving hundreds of millions of users daily.
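The second component, ranking generated candidates with a reward model, follows a generic generate-then-rerank pattern that can be sketched as below. This is only an illustration under stated assumptions: `rank_candidates` and `toy_reward` are hypothetical names, and the toy scoring rule (preferring text near 30 characters) merely stands in for a learned reward model, which the abstract does not describe.

```python
from typing import Callable, List, Tuple


def rank_candidates(
    candidates: List[str],
    reward_fn: Callable[[str], float],
    top_k: int = 1,
) -> List[Tuple[str, float]]:
    """Score every candidate and return the top_k (text, score) pairs."""
    scored = [(text, reward_fn(text)) for text in candidates]
    # Highest reward first; sorted() is stable, so ties keep input order.
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


def toy_reward(text: str) -> float:
    """Hypothetical stand-in for a learned reward model.

    Assumption for illustration only: notifications close to 30 characters
    score best. A real reward model would be trained on engagement data.
    """
    return -abs(len(text) - 30)


# Candidates would normally come from an LLM prompted per category.
generated = [
    "Sale",
    "Your order has shipped, track it",
    "We wanted to let you know that there are many new items waiting for you",
]
best_text, best_score = rank_candidates(generated, toy_reward)[0]
```

Keeping the reward function as a plain callable means the selection logic stays the same if the scoring model is swapped out.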

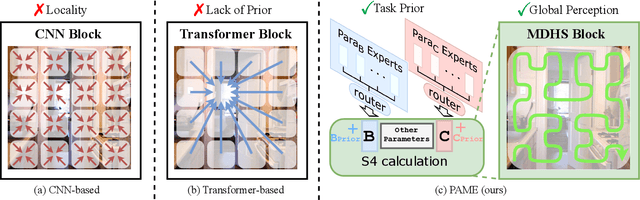

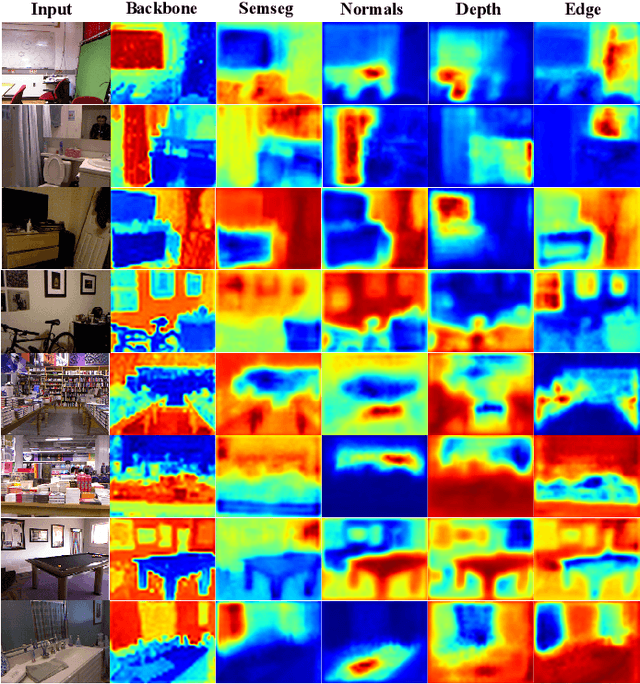

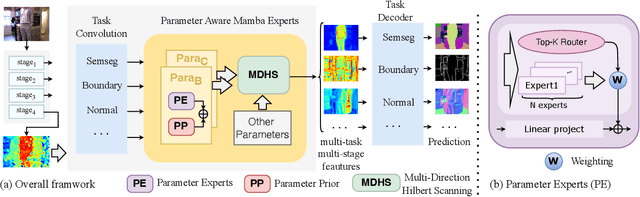

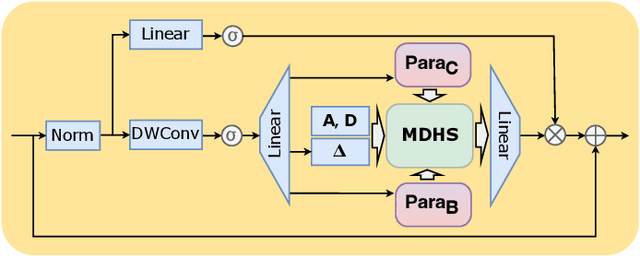

Parameter Aware Mamba Model for Multi-task Dense Prediction

Nov 18, 2025

Abstract: Understanding the inter-relations and interactions between tasks is crucial for multi-task dense prediction. Existing methods predominantly utilize convolutional layers and attention mechanisms to explore task-level interactions. In this work, we introduce a novel decoder-based framework, Parameter Aware Mamba Model (PAMM), specifically designed for dense prediction in the multi-task learning setting. Distinct from approaches that employ Transformers to model holistic task relationships, PAMM leverages the rich, scalable parameters of state space models to enhance task interconnectivity. It features dual state space parameter experts that integrate and set task-specific parameter priors, capturing the intrinsic properties of each task. This approach not only facilitates precise multi-task interactions but also allows for the global integration of task priors through the structured state space sequence model (S4). Furthermore, we employ the Multi-Directional Hilbert Scanning method to construct multi-angle feature sequences, thereby enhancing the sequence model's perceptual capabilities for 2D data. Extensive experiments on the NYUD-v2 and PASCAL-Context benchmarks demonstrate the effectiveness of our proposed method. Our code is available at https://github.com/CQC-gogopro/PAMM.
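To make the idea of Hilbert scanning concrete, the sketch below flattens a square 2D grid into a 1D sequence along a Hilbert curve, which keeps consecutive sequence elements spatially adjacent. It uses the standard index-to-coordinate conversion for the Hilbert curve. The `multi_directional_scans` helper is purely an assumption about what "multi-directional" could mean (forward, backward, and transposed traversals); the abstract does not specify the actual directions, and the linked repository should be consulted for the authors' method.

```python
def hilbert_d2xy(n, d):
    """Map index d along a Hilbert curve to (x, y) on an n x n grid.

    n must be a power of two and 0 <= d < n * n.
    """
    x = y = 0
    t = d
    s = 1
    while s < n:
        rx = 1 & (t // 2)
        ry = 1 & (t ^ rx)
        if ry == 0:
            # Rotate/flip the quadrant so sub-curves connect end to end.
            if rx == 1:
                x = s - 1 - x
                y = s - 1 - y
            x, y = y, x
        x += s * rx
        y += s * ry
        t //= 4
        s *= 2
    return x, y


def hilbert_scan(grid):
    """Flatten a square grid (list of rows) along the Hilbert curve."""
    n = len(grid)
    if n == 0 or n & (n - 1) != 0 or any(len(row) != n for row in grid):
        raise ValueError("grid must be square with a power-of-two side")
    sequence = []
    for d in range(n * n):
        x, y = hilbert_d2xy(n, d)
        sequence.append(grid[y][x])
    return sequence


def multi_directional_scans(grid):
    """Hypothetical set of scan directions (an assumption, see lead-in)."""
    forward = hilbert_scan(grid)
    transposed = hilbert_scan([list(col) for col in zip(*grid)])
    return {
        "forward": forward,
        "backward": forward[::-1],
        "transposed": transposed,
    }
```

Unlike a row-major scan, which jumps across the image at the end of every row, each step along the Hilbert curve moves to a neighbouring cell, which is why such orderings are attractive for sequence models applied to 2D features.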

FairAgent: Democratizing Fairness-Aware Machine Learning with LLM-Powered Agents

Oct 05, 2025

Abstract: Training fair and unbiased machine learning models is crucial for high-stakes applications, yet it presents significant challenges. Effective bias mitigation requires deep expertise in fairness definitions, metrics, data preprocessing, and machine learning techniques. In addition, the complex process of balancing model performance with fairness requirements while properly handling sensitive attributes makes fairness-aware model development inaccessible to many practitioners. To address these challenges, we introduce FairAgent, an LLM-powered automated system that greatly simplifies fairness-aware model development. FairAgent eliminates the need for deep technical expertise by automatically analyzing datasets for potential biases, handling data preprocessing and feature engineering, and implementing appropriate bias mitigation strategies based on user requirements. Our experiments demonstrate that FairAgent achieves significant performance improvements while substantially reducing development time and expertise requirements, making fairness-aware machine learning more accessible to practitioners.
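As an example of the kind of fairness metric referred to above, the sketch below computes the demographic parity difference: the gap between the highest and lowest positive-prediction rates across groups defined by a sensitive attribute. The abstract does not say which metrics FairAgent uses, so this choice and the function name are assumptions made for illustration.

```python
from collections import defaultdict


def demographic_parity_difference(y_pred, sensitive):
    """Gap between the largest and smallest positive-prediction rates.

    y_pred: iterable of 0/1 predictions.
    sensitive: iterable of group labels, aligned with y_pred.
    Returns 0.0 when every group receives positives at the same rate.
    """
    y_pred = list(y_pred)
    sensitive = list(sensitive)
    if len(y_pred) != len(sensitive) or not y_pred:
        raise ValueError("inputs must be non-empty and equal length")

    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(y_pred, sensitive):
        totals[group] += 1
        positives[group] += 1 if pred == 1 else 0

    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)


# Made-up predictions: group "a" gets positives 50% of the time, "b" 100%.
gap = demographic_parity_difference([1, 0, 1, 1], ["a", "a", "b", "b"])
```

A bias mitigation step would typically aim to shrink this gap while monitoring the accompanying change in predictive performance.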
