Peng Liu

Alzheimer's Disease Neuroimaging Initiative, the Australian Imaging Biomarkers and Lifestyle flagship study of ageing

Robust Categorical Data Clustering Guided by Multi-Granular Competitive Learning

Jan 23, 2026

Abstract:Data sets composed of categorical features are very common in big data analysis tasks. Since categorical features usually take a limited number of qualitative values, the nested granular cluster effect is prevalent in the implicit discrete distance space of categorical data. That is, data objects frequently overlap in space or subspace to form small compact clusters, and similar small clusters often form larger clusters. However, because categorical values are qualitative, the distance space cannot be as well defined as Euclidean space, which brings great challenges to the cluster analysis of categorical data. In view of this, we design a Multi-Granular Competitive Penalization Learning (MGCPL) algorithm to allow potential clusters to interactively tune themselves and converge in stages with different numbers of naturally compact clusters. To leverage MGCPL, we also propose a Cluster Aggregation strategy based on MGCPL Encoding (CAME) to first encode the data objects according to the learned multi-granular distributions, and then perform final clustering on the embeddings. It turns out that the proposed MGCPL-guided Categorical Data Clustering (MCDC) approach is competent in automatically exploring the nested distribution of multi-granular clusters and highly robust to categorical data sets from various domains. Benefiting from its linear time complexity, MCDC is scalable to large-scale data sets and promising in pre-partitioning data sets or compute nodes for boosting distributed computing. Extensive experiments with statistical evidence demonstrate its superiority compared to state-of-the-art counterparts on various real public data sets.

* This paper has been published in the IEEE International Conference on Distributed Computing Systems (ICDCS 2024)

Uncovering and Understanding FPR Manipulation Attack in Industrial IoT Networks

Jan 20, 2026

Abstract:In the network security domain, due to practical issues -- including imbalanced data and heterogeneous legitimate network traffic -- adversarial attacks in machine learning-based NIDSs have been viewed as attack packets misclassified as benign. Due to this prevailing belief, the possibility of (maliciously) perturbed benign packets being misclassified as attack has been largely ignored. In this paper, we demonstrate that this is not only theoretically possible, but also a particular threat to NIDS. In particular, we uncover a practical cyberattack, FPR manipulation attack (FPA), especially targeting industrial IoT networks, where domain-specific knowledge of the widely used MQTT protocol is exploited and a systematic simple packet-level perturbation is performed to alter the labels of benign traffic samples without employing traditional gradient-based or non-gradient-based methods. The experimental evaluations demonstrate that this novel attack results in a success rate of 80.19% to 100%. In addition, while estimating impacts in the Security Operations Center, we observe that even a small fraction of false positive alerts, irrespective of different budget constraints and alert traffic intensities, can increase the delay of genuine alert investigations by up to 2 hours in a single day under normal operating conditions. Furthermore, a series of relevant statistical and XAI analyses is conducted to understand the key factors behind this remarkable success. Finally, we explore the effectiveness of the FPA packets to enhance models' robustness through adversarial training and investigate the changes in decision boundaries accordingly.

NorwAI's Large Language Models: Technical Report

Jan 08, 2026

Abstract:Norwegian, spoken by approximately five million people, remains underrepresented in many of the most significant breakthroughs in Natural Language Processing (NLP). To address this gap, the NorLLM team at NorwAI has developed a family of models specifically tailored to Norwegian and other Scandinavian languages, building on diverse Transformer-based architectures such as GPT, Mistral, Llama2, Mixtral and Magistral. These models are either pretrained from scratch or continually pretrained on 25B - 88.45B tokens, using a Norwegian-extended tokenizer and advanced post-training strategies to optimize performance, enhance robustness, and improve adaptability across various real-world tasks. Notably, instruction-tuned variants (e.g., Mistral-7B-Instruct and Mixtral-8x7B-Instruct) showcase strong assistant-style capabilities, underscoring their potential for practical deployment in interactive and domain-specific applications. The NorwAI large language models are openly available to Nordic organizations, companies and students for both research and experimental use. This report provides detailed documentation of the model architectures, training data, tokenizer design, fine-tuning strategies, deployment, and evaluations.

NextFlow: Unified Sequential Modeling Activates Multimodal Understanding and Generation

Jan 05, 2026

Abstract:We present NextFlow, a unified decoder-only autoregressive transformer trained on 6 trillion interleaved text-image discrete tokens. By leveraging a unified vision representation within a unified autoregressive architecture, NextFlow natively activates multimodal understanding and generation capabilities, unlocking abilities of image editing, interleaved content and video generation. Motivated by the distinct nature of modalities - where text is strictly sequential and images are inherently hierarchical - we retain next-token prediction for text but adopt next-scale prediction for visual generation. This departs from traditional raster-scan methods, enabling the generation of 1024x1024 images in just 5 seconds - orders of magnitude faster than comparable AR models. We address the instabilities of multi-scale generation through a robust training recipe. Furthermore, we introduce a prefix-tuning strategy for reinforcement learning. Experiments demonstrate that NextFlow achieves state-of-the-art performance among unified models and rivals specialized diffusion baselines in visual quality.

Step-DeepResearch Technical Report

Dec 24, 2025

Abstract:As LLMs shift toward autonomous agents, Deep Research has emerged as a pivotal metric. However, existing academic benchmarks like BrowseComp often fail to meet real-world demands for open-ended research, which requires robust skills in intent recognition, long-horizon decision-making, and cross-source verification. To address this, we introduce Step-DeepResearch, a cost-effective, end-to-end agent. We propose a Data Synthesis Strategy Based on Atomic Capabilities to reinforce planning and report writing, combined with a progressive training path from agentic mid-training to SFT and RL. Enhanced by a Checklist-style Judger, this approach significantly improves robustness. Furthermore, to bridge the evaluation gap in the Chinese domain, we establish ADR-Bench for realistic deep research scenarios. Experimental results show that Step-DeepResearch (32B) scores 61.4% on Scale AI Research Rubrics. On ADR-Bench, it significantly outperforms comparable models and rivals SOTA closed-source models like OpenAI and Gemini DeepResearch. These findings prove that refined training enables medium-sized models to achieve expert-level capabilities at industry-leading cost-efficiency.

FlakyGuard: Automatically Fixing Flaky Tests at Industry Scale

Nov 18, 2025

Abstract:Flaky tests that non-deterministically pass or fail waste developer time and slow release cycles. While large language models (LLMs) show promise for automatically repairing flaky tests, existing approaches like FlakyDoctor fail in industrial settings due to the context problem: providing either too little context (missing critical production code) or too much context (overwhelming the LLM with irrelevant information). We present FlakyGuard, which addresses this problem by treating code as a graph structure and using selective graph exploration to find only the most relevant context. Evaluation on real-world flaky tests from industrial repositories shows that FlakyGuard repairs 47.6% of reproducible flaky tests, with 51.8% of the fixes accepted by developers. It also outperforms state-of-the-art approaches by at least 22% in repair success rate. Developer surveys confirm that 100% find FlakyGuard's root cause explanations useful.
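
The idea of selective graph exploration can be illustrated with a small sketch. This is not FlakyGuard's implementation: the call graph, the `select_context` helper, the relevance predicate, and the depth limit are all hypothetical, chosen only to show how a bounded, filtered walk keeps the context handed to an LLM small.

```python
from collections import deque


def select_context(graph, start, is_relevant, max_depth=2):
    """Breadth-first walk from `start` that follows only relevant neighbours.

    `graph` maps a code element to the elements it references (hypothetical
    representation). Nodes deeper than `max_depth` are never visited.
    """
    selected = [start]
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        node, depth = queue.popleft()
        if depth == max_depth:
            continue  # do not expand past the depth budget
        for neighbour in graph.get(node, ()):
            if neighbour in seen or not is_relevant(neighbour):
                continue  # skip visited or irrelevant code
            seen.add(neighbour)
            selected.append(neighbour)
            queue.append((neighbour, depth + 1))
    return selected


# Toy call graph for a flaky test (all names are made up for illustration).
call_graph = {
    "test_checkout": ["Cart.total", "Logger.debug"],
    "Cart.total": ["PriceCache.get", "Logger.debug"],
    "PriceCache.get": ["Clock.now"],
    "Clock.now": [],
    "Logger.debug": [],
}

# Assumed relevance rule: logging helpers are treated as noise.
context = select_context(
    call_graph, "test_checkout", lambda name: not name.startswith("Logger.")
)
print(context)
```

In this toy graph the walk keeps the test, `Cart.total`, and `PriceCache.get`, drops the logging helper, and stops before `Clock.now` because of the depth budget.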

Towards Adaptive Humanoid Control via Multi-Behavior Distillation and Reinforced Fine-Tuning

Nov 11, 2025

Abstract:Humanoid robots show promise for learning a diverse set of human-like locomotion behaviors, including standing up, walking, running, and jumping. However, existing methods predominantly require training independent policies for each skill, yielding behavior-specific controllers that exhibit limited generalization and brittle performance when deployed on irregular terrains and in diverse situations. To address this challenge, we propose Adaptive Humanoid Control (AHC) that adopts a two-stage framework to learn an adaptive humanoid locomotion controller across different skills and terrains. Specifically, we first train several primary locomotion policies and perform a multi-behavior distillation process to obtain a basic multi-behavior controller, facilitating adaptive behavior switching based on the environment. Then, we perform reinforced fine-tuning by collecting online feedback in performing adaptive behaviors on more diverse terrains, enhancing terrain adaptability for the controller. We conduct experiments both in simulation and in the real world on Unitree G1 robots. The results show that our method exhibits strong adaptability across various situations and terrains. Project website: https://ahc-humanoid.github.io.

An Integrated Fusion Framework for Ensemble Learning Leveraging Gradient Boosting and Fuzzy Rule-Based Models

Nov 11, 2025

Abstract:The integration of different learning paradigms has long been a focus of machine learning research, aimed at overcoming the inherent limitations of individual methods. Fuzzy rule-based models excel in interpretability and have seen widespread application across diverse fields. However, they face challenges such as complex design specifications and scalability issues with large datasets. The fusion of different techniques and strategies, particularly Gradient Boosting, with Fuzzy Rule-Based Models offers a robust solution to these challenges. This paper proposes an Integrated Fusion Framework that merges the strengths of both paradigms to enhance model performance and interpretability. At each iteration, a Fuzzy Rule-Based Model is constructed and controlled by a dynamic factor to optimize its contribution to the overall ensemble. This control factor serves multiple purposes: it prevents model dominance, encourages diversity, acts as a regularization parameter, and provides a mechanism for dynamic tuning based on model performance, thus mitigating the risk of overfitting. Additionally, the framework incorporates a sample-based correction mechanism that allows for adaptive adjustments based on feedback from a validation set. Experimental results substantiate the efficacy of the presented gradient boosting framework for fuzzy rule-based models, demonstrating performance enhancement, especially in terms of mitigating overfitting and complexity typically associated with many rules. By leveraging an optimal factor to govern the contribution of each model, the framework improves performance, maintains interpretability, and simplifies the maintenance and update of the models.
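
The role of a factor that scales each learner's contribution can be sketched with plain gradient boosting under squared loss. This is a simplified illustration, not the paper's framework: a one-split regression stump stands in for the fuzzy rule-based model, and a fixed `factor` stands in for the dynamic control factor. The names `fit_stump` and `boost` are hypothetical.

```python
def fit_stump(xs, residuals):
    """Fit a one-split regression stump that minimises squared error.

    Stand-in weak learner (assumption); requires at least two distinct x values.
    """
    best = None
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = sum((r - left_mean) ** 2 for r in left)
        error += sum((r - right_mean) ** 2 for r in right)
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean


def boost(xs, ys, rounds=20, factor=0.5):
    """Additive ensemble: each round fits the current residuals, and
    `factor` limits how much any single learner contributes."""
    base = sum(ys) / len(ys)
    learners = []
    preds = [base] * len(xs)
    for _ in range(rounds):
        residuals = [y - p for y, p in zip(ys, preds)]
        learner = fit_stump(xs, residuals)
        learners.append(learner)
        preds = [p + factor * learner(x) for p, x in zip(preds, xs)]
    return lambda x: base + factor * sum(l(x) for l in learners)


# Toy step-shaped data (made up for illustration).
xs = [0, 1, 2, 3, 4, 5]
ys = [0, 0, 0, 1, 1, 1]
model = boost(xs, ys)
print(model(0), model(5))
```

A smaller `factor` makes each learner correct only part of the remaining error, so no single learner dominates the ensemble; this is the regularising effect the abstract attributes to its control factor, here shown with a constant value rather than a dynamically tuned one.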

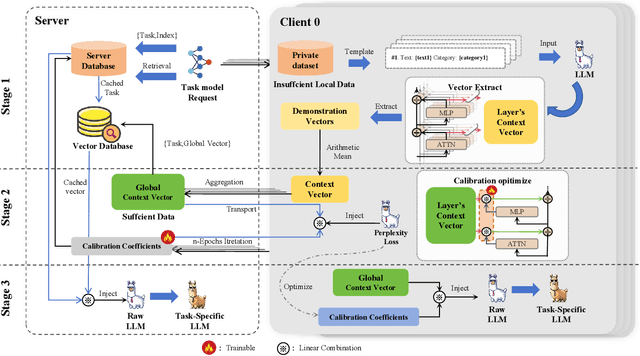

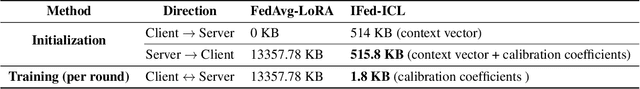

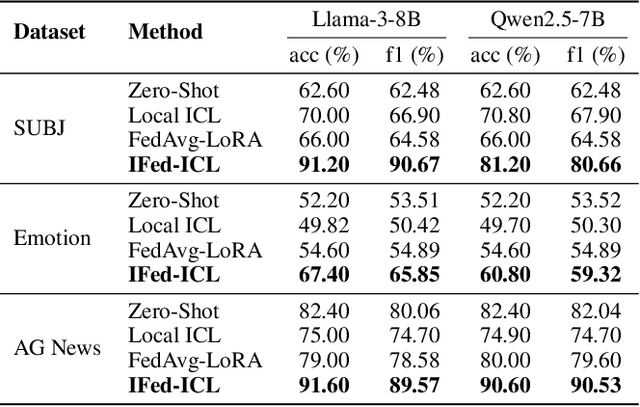

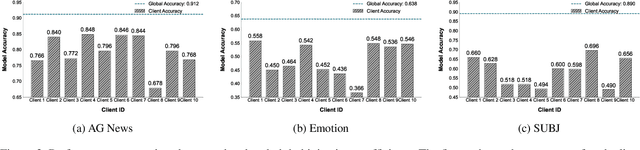

Implicit Federated In-context Learning For Task-Specific LLM Fine-Tuning

Nov 10, 2025

Abstract:As large language models continue to develop and expand, the extensive public data they rely on faces the risk of depletion. Consequently, leveraging private data within organizations to enhance the performance of large models has emerged as a key challenge. The federated learning paradigm, combined with model fine-tuning techniques, effectively reduces the number of trainable parameters. However, the necessity to process high-dimensional feature spaces results in substantial overall computational overhead. To address this issue, we propose the Implicit Federated In-Context Learning (IFed-ICL) framework. IFed-ICL draws inspiration from federated learning to establish a novel distributed collaborative paradigm. By converting client local context examples into implicit vector representations, it enables distributed collaborative computation during the inference phase and injects these representations into the model's residual streams to enhance model performance. Experiments demonstrate that our proposed method achieves outstanding performance across multiple text classification tasks. Compared to traditional methods, IFed-ICL avoids the extensive parameter updates required by conventional fine-tuning methods while reducing data transmission and local computation at the client level in federated learning. This enables efficient distributed context learning using local private-domain data, significantly improving model performance on specific tasks.

HaluMem: Evaluating Hallucinations in Memory Systems of Agents

Nov 05, 2025

Abstract:Memory systems are key components that enable AI systems such as LLMs and AI agents to achieve long-term learning and sustained interaction. However, during memory storage and retrieval, these systems frequently exhibit memory hallucinations, including fabrication, errors, conflicts, and omissions. Existing evaluations of memory hallucinations are primarily end-to-end question answering, which makes it difficult to localize the operational stage within the memory system where hallucinations arise. To address this, we introduce the Hallucination in Memory Benchmark (HaluMem), the first operation-level hallucination evaluation benchmark tailored to memory systems. HaluMem defines three evaluation tasks (memory extraction, memory updating, and memory question answering) to comprehensively reveal hallucination behaviors across different operational stages of interaction. To support evaluation, we construct user-centric, multi-turn human-AI interaction datasets, HaluMem-Medium and HaluMem-Long. Both include about 15k memory points and 3.5k multi-type questions. The average dialogue length per user reaches 1.5k and 2.6k turns, respectively, with context lengths exceeding 1M tokens, enabling evaluation of hallucinations across different context scales and task complexities. Empirical studies based on HaluMem show that existing memory systems tend to generate and accumulate hallucinations during the extraction and updating stages, which subsequently propagate errors to the question answering stage. Future research should focus on developing interpretable and constrained memory operation mechanisms that systematically suppress hallucinations and improve memory reliability.