Fang Chen

Towards Fair Large Language Model-based Recommender Systems without Costly Retraining

Jan 24, 2026

Abstract:Large Language Models (LLMs) have revolutionized Recommender Systems (RS) through advanced generative user modeling. However, LLM-based RS (LLM-RS) often inadvertently perpetuates bias present in the training data, leading to severe fairness issues. Addressing these fairness problems in LLM-RS faces two significant challenges. 1) Existing debiasing methods, designed for specific bias types, lack the generality to handle diverse or emerging biases in real-world applications. 2) Debiasing methods relying on retraining are computationally infeasible given the massive parameter scale of LLMs. To overcome these challenges, we propose FUDLR (Fast Unified Debiasing for LLM-RS). The core idea is to reformulate the debiasing problem as an efficient machine unlearning task with two stages. First, FUDLR identifies bias-inducing samples to unlearn through a novel bias-agnostic mask, optimized to balance fairness improvement with accuracy preservation. Its bias-agnostic design allows adaptability to various or co-existing biases simply by incorporating different fairness metrics. Second, FUDLR performs efficient debiasing by estimating and removing the influence of identified samples on model parameters. Extensive experiments demonstrate that FUDLR effectively and efficiently improves fairness while preserving recommendation accuracy, offering a practical path toward socially responsible LLM-RS. The code and data are available at https://github.com/JinLi-i/FUDLR.

Native Intelligence Emerges from Large-Scale Clinical Practice: A Retinal Foundation Model with Deployment Efficiency

Dec 16, 2025

Abstract:Current retinal foundation models remain constrained by curated research datasets that lack authentic clinical context, and require extensive task-specific optimization for each application, limiting their deployment efficiency in low-resource settings. Here, we show that these barriers can be overcome by building clinical native intelligence directly from real-world medical practice. Our key insight is that large-scale telemedicine programs, where expert centers provide remote consultations across distributed facilities, represent a natural reservoir for learning clinical image interpretation. We present ReVision, a retinal foundation model that learns from the natural alignment between 485,980 color fundus photographs and their corresponding diagnostic reports, accumulated through a decade-long telemedicine program spanning 162 medical institutions across China. Through extensive evaluation across 27 ophthalmic benchmarks, we demonstrate that ReVision enables deployment efficiency with minimal local resources. Without any task-specific training, ReVision achieves zero-shot disease detection with an average AUROC of 0.946 across 12 public benchmarks and 0.952 on 3 independent clinical cohorts. When minimal adaptation is feasible, ReVision matches extensively fine-tuned alternatives while requiring orders of magnitude fewer trainable parameters and labeled examples. The learned representations also transfer effectively to new clinical sites, imaging domains, imaging modalities, and systemic health prediction tasks. In a prospective reader study with 33 ophthalmologists, ReVision's zero-shot assistance improved diagnostic accuracy by 14.8% across all experience levels. These results demonstrate that clinical native intelligence can be directly extracted from clinical archives without any further annotation to build medical AI systems suited to various low-resource settings.

RcAE: Recursive Reconstruction Framework for Unsupervised Industrial Anomaly Detection

Dec 12, 2025

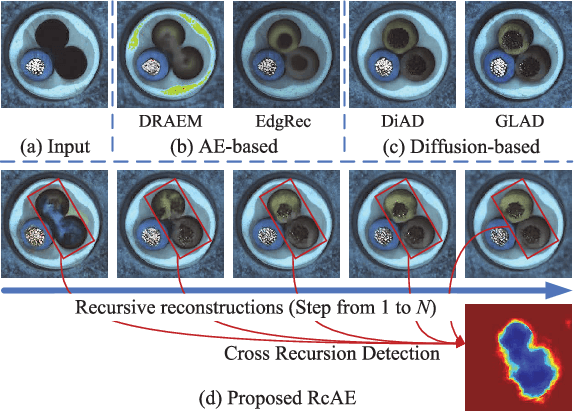

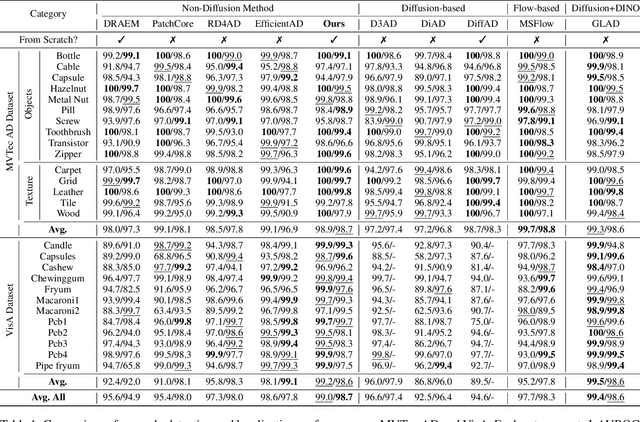

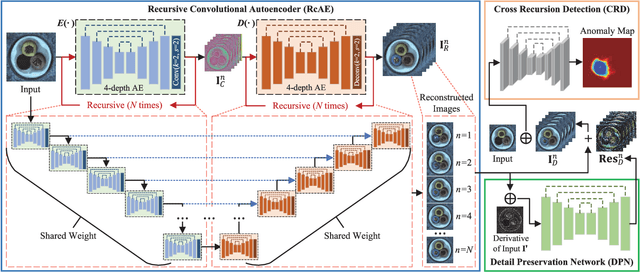

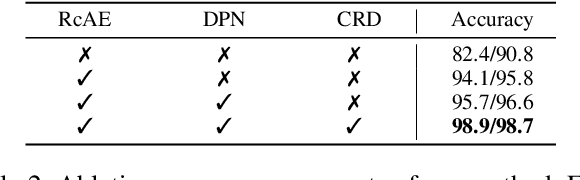

Abstract:Unsupervised industrial anomaly detection requires accurately identifying defects without labeled data. Traditional autoencoder-based methods often struggle with incomplete anomaly suppression and loss of fine details, as their single-pass decoding fails to effectively handle anomalies with varying severity and scale. We propose a recursive architecture for autoencoder (RcAE), which performs reconstruction iteratively to progressively suppress anomalies while refining normal structures. Unlike traditional single-pass models, this recursive design naturally produces a sequence of reconstructions, progressively exposing suppressed abnormal patterns. To leverage these reconstruction dynamics, we introduce a Cross Recursion Detection (CRD) module that tracks inconsistencies across recursion steps, enhancing detection of both subtle and large-scale anomalies. Additionally, we incorporate a Detail Preservation Network (DPN) to recover high-frequency textures typically lost during reconstruction. Extensive experiments demonstrate that our method significantly outperforms existing non-diffusion methods and achieves performance on par with recent diffusion models while using only 10% of their parameters and offering substantially faster inference. These results highlight the practicality and efficiency of our approach for real-world applications.
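
As a rough illustration of the recursive idea described in this abstract, the sketch below repeatedly applies a reconstruction function and scores each element by how much it changes across recursion steps. It is a toy under stated assumptions, not the RcAE implementation: `recursive_reconstruct`, `cross_step_score`, and `toy_reconstruct` are hypothetical names, the "autoencoder" is a hand-written function that pulls values toward a normal level of 1.0, and the summed absolute difference stands in for the learned CRD module.

```python
def recursive_reconstruct(x, reconstruct, steps):
    """Apply `reconstruct` repeatedly and keep every intermediate output."""
    sequence = [x]
    for _ in range(steps):
        sequence.append(reconstruct(sequence[-1]))
    return sequence


def cross_step_score(sequence):
    """Per-element sum of absolute changes between consecutive reconstructions.

    Assumption: a simple stand-in for tracking inconsistencies across
    recursion steps; the paper's CRD module is a learned component.
    """
    size = len(sequence[0])
    return [
        sum(abs(sequence[k + 1][i] - sequence[k][i])
            for k in range(len(sequence) - 1))
        for i in range(size)
    ]


def toy_reconstruct(values):
    """Hypothetical reconstruction: halve each deviation from the normal level 1.0."""
    return [1.0 + 0.5 * (v - 1.0) for v in values]


signal = [1.0, 1.0, 5.0]  # the last element plays the role of a defect
steps = recursive_reconstruct(signal, toy_reconstruct, steps=3)
scores = cross_step_score(steps)
```

In this toy, normal elements never change and receive a score of zero, while the defective element keeps being corrected at every step and accumulates a large score.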

A Survey of TinyML Applications in Beekeeping for Hive Monitoring and Management

Sep 10, 2025

Abstract:Honey bee colonies are essential for global food security and ecosystem stability, yet they face escalating threats from pests, diseases, and environmental stressors. Traditional hive inspections are labor-intensive and disruptive, while cloud-based monitoring solutions remain impractical for remote or resource-limited apiaries. Recent advances in Internet of Things (IoT) and Tiny Machine Learning (TinyML) enable low-power, real-time monitoring directly on edge devices, offering scalable and non-invasive alternatives. This survey synthesizes current innovations at the intersection of TinyML and apiculture, organized around four key functional areas: monitoring hive conditions, recognizing bee behaviors, detecting pests and diseases, and forecasting swarming events. We further examine supporting resources, including publicly available datasets, lightweight model architectures optimized for embedded deployment, and benchmarking strategies tailored to field constraints. Critical limitations such as data scarcity, generalization challenges, and deployment barriers in off-grid environments are highlighted, alongside emerging opportunities in ultra-efficient inference pipelines, adaptive edge learning, and dataset standardization. By consolidating research and engineering practices, this work provides a foundation for scalable, AI-driven, and ecologically informed monitoring systems to support sustainable pollinator management.

COME: Dual Structure-Semantic Learning with Collaborative MoE for Universal Lesion Detection Across Heterogeneous Ultrasound Datasets

Aug 13, 2025

Abstract:Conventional single-dataset training often fails with new data distributions, especially in ultrasound (US) image analysis due to limited data, acoustic shadows, and speckle noise. Therefore, constructing a universal framework for multi-heterogeneous US datasets is imperative. However, a key challenge arises: how to effectively mitigate inter-dataset interference while preserving dataset-specific discriminative features for robust downstream tasks? Previous approaches utilize either a single source-specific decoder or a domain adaptation strategy, but these methods suffer a decline in performance when applied to other domains. Considering this, we propose a Universal Collaborative Mixture of Heterogeneous Source-Specific Experts (COME). Specifically, COME establishes dual structure-semantic shared experts that create a universal representation space and then collaborate with source-specific experts to extract discriminative features by providing complementary features. This design enables robust generalization by leveraging cross-dataset experience distributions and providing universal US priors for small-batch or unseen data scenarios. Extensive experiments under three evaluation modes (single-dataset, intra-organ, and inter-organ integration datasets) demonstrate COME's superiority, achieving significant mean AP improvements over state-of-the-art methods. Our project is available at: https://universalcome.github.io/UniversalCOME/.

ABE: A Unified Framework for Robust and Faithful Attribution-Based Explainability

May 03, 2025

Abstract:Attribution algorithms are essential for enhancing the interpretability and trustworthiness of deep learning models by identifying key features driving model decisions. Existing frameworks, such as InterpretDL and OmniXAI, integrate multiple attribution methods but suffer from scalability limitations, high coupling, theoretical constraints, and a lack of user-friendly implementations, hindering neural network transparency and interoperability. To address these challenges, we propose Attribution-Based Explainability (ABE), a unified framework that formalizes Fundamental Attribution Methods and integrates state-of-the-art attribution algorithms while ensuring compliance with attribution axioms. ABE enables researchers to develop novel attribution techniques and enhances interpretability through four customizable modules: Robustness, Interpretability, Validation, and Data & Model. This framework provides a scalable, extensible foundation for advancing attribution-based explainability and fostering transparent AI systems. Our code is available at: https://github.com/LMBTough/ABE-XAI.

MoEDiff-SR: Mixture of Experts-Guided Diffusion Model for Region-Adaptive MRI Super-Resolution

Apr 09, 2025

Abstract:Magnetic Resonance Imaging (MRI) at lower field strengths (e.g., 3T) suffers from limited spatial resolution, making it challenging to capture fine anatomical details essential for clinical diagnosis and neuroimaging research. To overcome this limitation, we propose MoEDiff-SR, a Mixture of Experts (MoE)-guided diffusion model for region-adaptive MRI Super-Resolution (SR). Unlike conventional diffusion-based SR models that apply a uniform denoising process across the entire image, MoEDiff-SR dynamically selects specialized denoising experts at a fine-grained token level, ensuring region-specific adaptation and enhanced SR performance. Specifically, our approach first employs a Transformer-based feature extractor to compute multi-scale patch embeddings, capturing both global structural information and local texture details. The extracted feature embeddings are then fed into an MoE gating network, which assigns adaptive weights to multiple diffusion-based denoisers, each specializing in different brain MRI characteristics, such as centrum semiovale, sulcal and gyral cortex, and grey-white matter junction. The final output is produced by aggregating the denoised results from these specialized experts according to dynamically assigned gating probabilities. Experimental results demonstrate that MoEDiff-SR outperforms existing state-of-the-art methods in terms of quantitative image quality metrics, perceptual fidelity, and computational efficiency. Difference maps from each expert further highlight their distinct specializations, confirming the effective region-specific denoising capability and the interpretability of expert contributions. Additionally, clinical evaluation validates its superior diagnostic capability in identifying subtle pathological features, emphasizing its practical relevance in clinical neuroimaging. Our code is available at https://github.com/ZWang78/MoEDiff-SR.
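
The final aggregation step described in this abstract, combining expert outputs according to gating probabilities, can be sketched as a per-token softmax-weighted sum. This is a minimal illustration under stated assumptions, not the code from the MoEDiff-SR repository: `softmax` and `aggregate_experts` are hypothetical helper names, tokens are plain Python lists, and the expert outputs are fixed numbers rather than results of diffusion-based denoisers.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    return [e / total for e in exps]


def aggregate_experts(expert_outputs, gate_logits):
    """Blend expert outputs token by token.

    expert_outputs[e][t] is the feature vector expert `e` produced for token `t`.
    gate_logits[t] holds one unnormalized gating score per expert for token `t`.
    Returns one blended feature vector per token.
    """
    dim = len(expert_outputs[0][0])
    blended = []
    for t, logits in enumerate(gate_logits):
        weights = softmax(logits)  # gating probabilities for token t
        blended.append([
            sum(w * expert_outputs[e][t][d] for e, w in enumerate(weights))
            for d in range(dim)
        ])
    return blended


# Two hypothetical experts, two tokens, two features per token.
experts = [
    [[0.0, 0.0], [0.0, 0.0]],  # expert 0 outputs zeros
    [[1.0, 1.0], [1.0, 1.0]],  # expert 1 outputs ones
]
gates = [[0.0, 0.0], [-50.0, 50.0]]  # token 0: even split; token 1: expert 1 dominates
result = aggregate_experts(experts, gates)
```

Because the weights are computed per token, different image regions can rely on different experts, which is the region-adaptive behavior the abstract describes.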

SelfMedHPM: Self Pre-training With Hard Patches Mining Masked Autoencoders For Medical Image Segmentation

Apr 03, 2025

Abstract:In recent years, deep learning methods such as convolutional neural networks (CNNs) and transformers have made significant progress in CT multi-organ segmentation. However, CT multi-organ segmentation methods based on masked image modeling (MIM) are very limited. Although some methods already use MAE for the CT multi-organ segmentation task, we believe that they do not identify the areas that are most difficult to reconstruct. To this end, we propose a MIM self-training framework with hard patches mining masked autoencoders for CT multi-organ segmentation tasks (SelfMedHPM). The method performs ViT self-pretraining on the training set of the target data and introduces an auxiliary loss predictor, which first predicts the patch loss and determines the location of the next mask. SelfMedHPM outperforms various competitive methods in abdominal CT multi-organ segmentation and body CT multi-organ segmentation. We have validated the performance of our method on the Multi Atlas Labeling Beyond The Cranial Vault (BTCV) dataset for abdomen multi-organ segmentation and the SinoMed Whole Body (SMWB) dataset for body multi-organ segmentation tasks.
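
One way to picture how predicted patch losses could determine the next mask is to mask the patches with the highest predicted loss. The sketch below assumes that selection rule and a fixed mask ratio; `hard_patch_mask` is a hypothetical name, and the actual SelfMedHPM masking schedule may differ.

```python
def hard_patch_mask(predicted_losses, mask_ratio):
    """Return a boolean mask that hides the patches predicted to be hardest.

    Assumption: "hard" means highest predicted reconstruction loss, and
    exactly int(len(predicted_losses) * mask_ratio) patches are masked.
    """
    num_masked = int(len(predicted_losses) * mask_ratio)
    ranked = sorted(
        range(len(predicted_losses)),
        key=lambda index: predicted_losses[index],
        reverse=True,
    )
    masked = set(ranked[:num_masked])
    return [index in masked for index in range(len(predicted_losses))]


# Four patches with hypothetical predicted losses; half of them get masked.
mask = hard_patch_mask([0.1, 0.9, 0.4, 0.7], mask_ratio=0.5)
```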

Multimodal Machine Learning for Real Estate Appraisal: A Comprehensive Survey

Mar 28, 2025

Abstract:Real estate appraisal has undergone a significant transition from manual to automated valuation and is entering a new phase of evolution. Drawing on a wide range of data sources, a novel approach to automated valuation, multimodal machine learning, has taken shape. This approach integrates multimodal data to deeply explore the diverse factors influencing housing prices. Furthermore, multimodal machine learning significantly outperforms single-modality or fewer-modality approaches in terms of prediction accuracy, with enhanced interpretability. However, systematic and comprehensive survey work on its application in the real estate domain is still lacking. In this survey, we aim to bridge this gap by reviewing the research efforts. We begin by reviewing the background of real estate appraisal and propose two research questions from the perspectives of performance and fusion, aimed at improving the accuracy of appraisal results. Subsequently, we explain the concept of multimodal machine learning and provide, for the first time, a comprehensive classification and definition of the modalities used in real estate appraisal. To ensure clarity, we explore works related to data and techniques, along with their evaluation methods, under the framework of these two research questions. Furthermore, specific application domains are summarized. Finally, we present insights into future research directions including multimodal complementarity, technology and modality contribution.

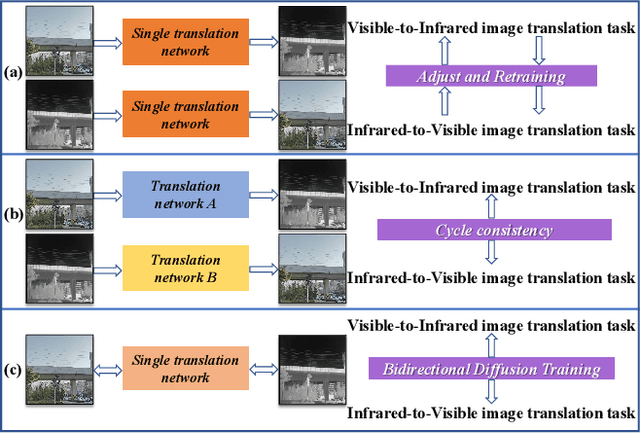

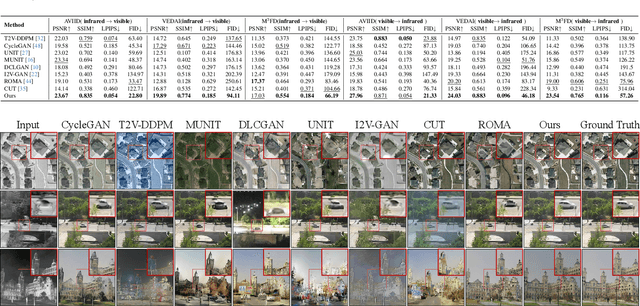

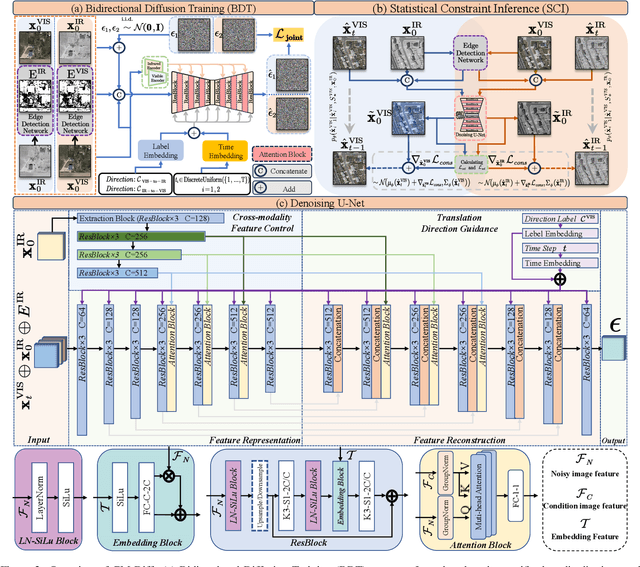

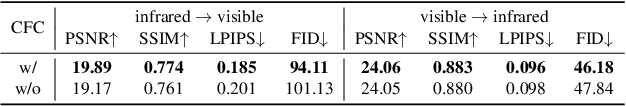

CM-Diff: A Single Generative Network for Bidirectional Cross-Modality Translation Diffusion Model Between Infrared and Visible Images

Mar 12, 2025

Abstract:The image translation method represents a crucial approach for mitigating information deficiencies in the infrared and visible modalities, while also facilitating the enhancement of modality-specific datasets. However, existing methods for infrared and visible image translation either achieve unidirectional modality translation or rely on cycle consistency for bidirectional modality translation, which may result in suboptimal performance. In this work, we present the cross-modality translation diffusion model (CM-Diff) for simultaneously modeling data distributions in both the infrared and visible modalities. We address this challenge by combining translation direction labels for guidance during training with cross-modality feature control. Specifically, we view the establishment of the mapping relationship between the two modalities as the process of learning data distributions and understanding modality differences, achieved through a novel Bidirectional Diffusion Training (BDT) strategy. Additionally, we propose a Statistical Constraint Inference (SCI) strategy to ensure the generated image closely adheres to the data distribution of the target modality. Experimental results demonstrate the superiority of our CM-Diff over state-of-the-art methods, highlighting its potential for generating dual-modality datasets.