ASSIST: Interactive Scene Nodes for Scalable and Realistic Indoor Simulation

Paper and Code

Nov 10, 2023

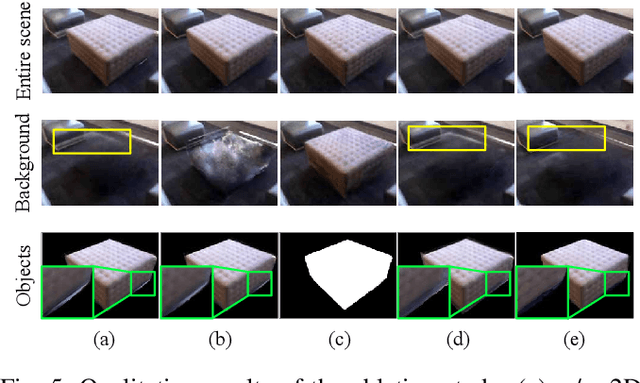

We present ASSIST, an object-wise neural radiance field that serves as a panoptic representation for compositional and realistic simulation. Central to our approach is a novel scene node data structure that stores the information of each object in a unified fashion, allowing online interaction both within a scene and across scenes. Each node combines a differentiable neural network with the object's bounding box and semantic features, so that objects can be edited independently and novel view simulation can be scaled up. Objects in the scene can be queried, added, duplicated, deleted, transformed, or swapped through mouse/keyboard controls or language instructions. Experiments demonstrate the efficacy of the proposed method: realistic simulation at scale is achieved through interactive editing and compositional rendering, with color images, depth images, and panoptic segmentation masks generated in a 3D-consistent manner.
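To make the scene node idea concrete, here is a minimal Python sketch of a per-object node and the editing operations listed above. It is an illustration under stated assumptions, not the authors' implementation: the names `SceneNode`, `Scene`, `field_fn`, and `offset` are hypothetical, the per-object network is replaced by a placeholder function, and the transform is reduced to a translation for brevity.

```python
from __future__ import annotations

import copy
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Vec3 = Tuple[float, float, float]


def _placeholder_field(point: Vec3) -> Tuple[Vec3, float]:
    """Stand-in for a per-object radiance field: returns (rgb, density)."""
    return (0.5, 0.5, 0.5), 1.0


@dataclass
class SceneNode:
    """Hypothetical node: one object with its box, label, and field."""
    node_id: str
    label: str                       # semantic class, e.g. "chair"
    bbox_min: Vec3                   # box corners in the object's own frame
    bbox_max: Vec3
    offset: Vec3 = (0.0, 0.0, 0.0)   # assumption: translation-only placement
    field_fn: Callable[[Vec3], Tuple[Vec3, float]] = _placeholder_field

    def world_bbox(self) -> Tuple[Vec3, Vec3]:
        """Bounding box after applying the node's placement."""
        lo = tuple(a + o for a, o in zip(self.bbox_min, self.offset))
        hi = tuple(a + o for a, o in zip(self.bbox_max, self.offset))
        return lo, hi


class Scene:
    """Hypothetical container holding independent scene nodes."""

    def __init__(self) -> None:
        self.nodes: Dict[str, SceneNode] = {}

    def add(self, node: SceneNode) -> None:
        if node.node_id in self.nodes:
            raise ValueError(f"duplicate node id: {node.node_id}")
        self.nodes[node.node_id] = node

    def delete(self, node_id: str) -> SceneNode:
        return self.nodes.pop(node_id)

    def duplicate(self, node_id: str, new_id: str,
                  offset: Vec3 = (0.0, 0.0, 0.0)) -> SceneNode:
        """Copy a node under a new id, shifted by `offset`."""
        clone = copy.deepcopy(self.nodes[node_id])
        clone.node_id = new_id
        clone.offset = tuple(a + b for a, b in zip(clone.offset, offset))
        self.add(clone)
        return clone

    def translate(self, node_id: str, delta: Vec3) -> None:
        node = self.nodes[node_id]
        node.offset = tuple(a + b for a, b in zip(node.offset, delta))

    def swap(self, id_a: str, id_b: str) -> None:
        """Exchange the placements of two nodes."""
        a, b = self.nodes[id_a], self.nodes[id_b]
        a.offset, b.offset = b.offset, a.offset

    def query(self, label: str) -> List[str]:
        """Return ids of all nodes with the given semantic label."""
        return [n.node_id for n in self.nodes.values() if n.label == label]


scene = Scene()
scene.add(SceneNode("chair_0", "chair", (0, 0, 0), (1, 1, 1)))
scene.add(SceneNode("table_0", "table", (0, 0, 0), (2, 1, 2), offset=(3, 0, 0)))
scene.duplicate("chair_0", "chair_1", offset=(0, 0, 2))
print(scene.query("chair"))
```

Because each node carries its own field, box, and label, an edit only touches that node's entry. In this sketch a cross-scene edit would amount to moving a node from one `Scene` to another with `delete` followed by `add`.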
