Zhaoyang Wang

Thinker: Training LLMs in Hierarchical Thinking for Deep Search via Multi-Turn Interaction

Nov 14, 2025

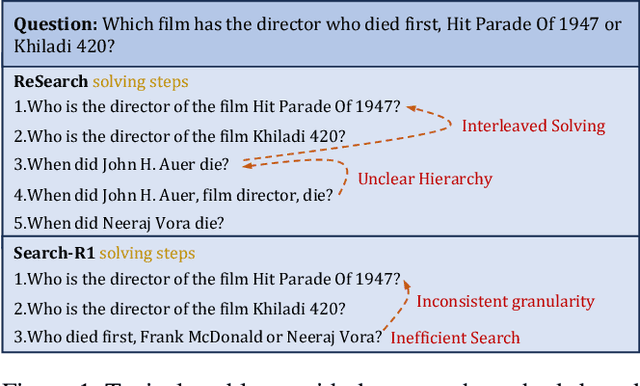

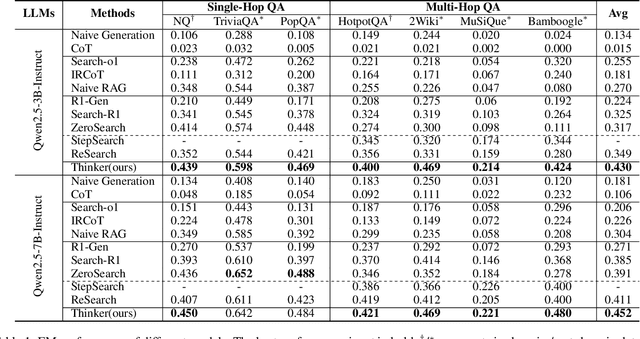

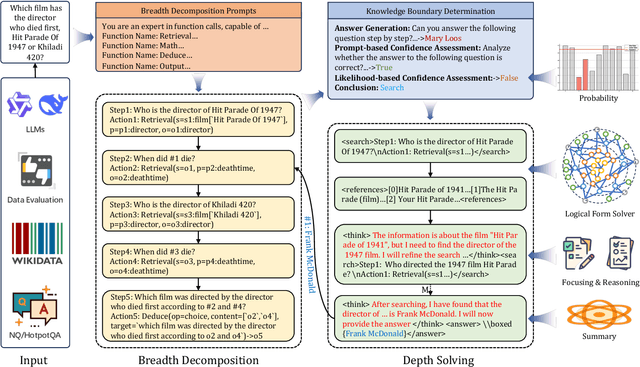

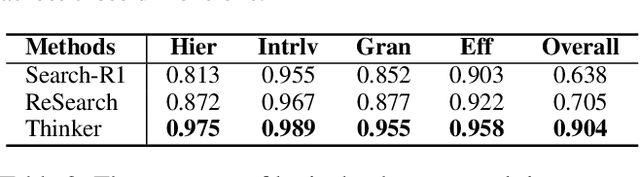

Abstract: Efficient retrieval of external knowledge bases and web pages is crucial for enhancing the reasoning abilities of LLMs. Previous works on training LLMs to leverage external retrievers for solving complex problems have predominantly employed end-to-end reinforcement learning. However, these approaches neglect supervision over the reasoning process, making it difficult to guarantee logical coherence and rigor. To address these limitations, we propose Thinker, a hierarchical thinking model for deep search through multi-turn interaction, making the reasoning process supervisable and verifiable. It decomposes complex problems into independently solvable sub-problems, each dually represented in both natural language and an equivalent logical function to support knowledge base and web searches. Concurrently, dependencies between sub-problems are passed as parameters via these logical functions, enhancing the logical coherence of the problem-solving process. To avoid unnecessary external searches, we perform knowledge boundary determination to check if a sub-problem is within the LLM's intrinsic knowledge, allowing it to answer directly. Experimental results indicate that with as few as several hundred training samples, the performance of Thinker is competitive with established baselines. Furthermore, when scaled to the full training set, Thinker significantly outperforms these methods across various datasets and model sizes. The source code is available at https://github.com/OpenSPG/KAG-Thinker.
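The decomposition described in this abstract can be pictured with a small sketch. This is not the Thinker implementation: the `SubProblem` class, the `solve` function, the `{o1}` placeholder convention, the logical-form strings, and the stub `knows`, `answer_directly`, and `search` callables are all hypothetical names chosen for illustration. The sketch only shows sub-problems carrying both a natural-language and a logical form, dependencies passed as parameters, and a knowledge-boundary check that decides between answering directly and searching.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class SubProblem:
    name: str            # output variable of this step, e.g. "o1" (hypothetical convention)
    question: str        # natural-language form; may reference earlier outputs as "{o1}"
    logical_form: str    # hypothetical logical-function form of the same step
    depends_on: list[str] = field(default_factory=list)


def solve(
    plan: list[SubProblem],
    knows: Callable[[str], bool],            # stand-in for knowledge boundary determination
    answer_directly: Callable[[str], str],   # stand-in for the LLM answering from memory
    search: Callable[[str], str],            # stand-in for knowledge-base or web search
) -> dict[str, str]:
    """Resolve sub-problems in order, passing earlier results as parameters."""
    results: dict[str, str] = {}
    for sp in plan:  # the plan is assumed to be listed in dependency order
        args = {dep: results[dep] for dep in sp.depends_on}
        question = sp.question.format(**args)
        if knows(question):
            results[sp.name] = answer_directly(question)
        else:
            results[sp.name] = search(question)
    return results


# Toy usage with stub callables in place of a model and a retriever.
plan = [
    SubProblem("o1", "Who directed Inception?", "Retrieve(s=Inception, p=director, o=o1)"),
    SubProblem("o2", "Where was {o1} born?", "Retrieve(s=o1, p=birthplace, o=o2)", ["o1"]),
]
results = solve(
    plan,
    knows=lambda q: "directed" in q,
    answer_directly=lambda q: "Christopher Nolan",
    search=lambda q: f"searched: {q}",
)
```

In this toy run the first step is answered directly and its result is substituted into the second step, which falls through to the search stub.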

GABFusion: Rethinking Feature Fusion for Low-Bit Quantization of Multi-Task Networks

Nov 08, 2025

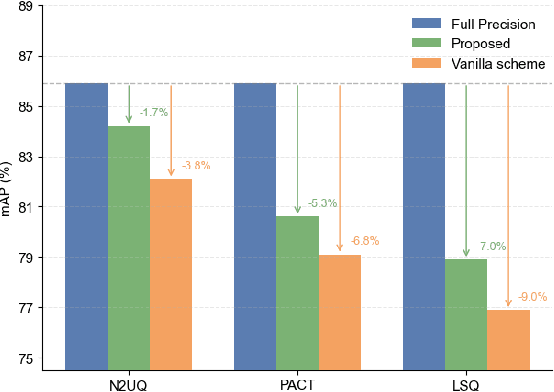

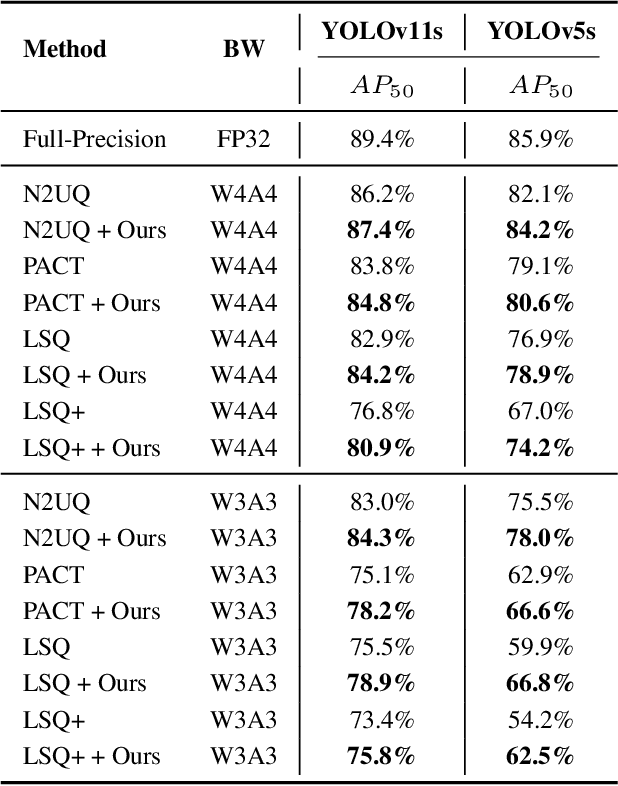

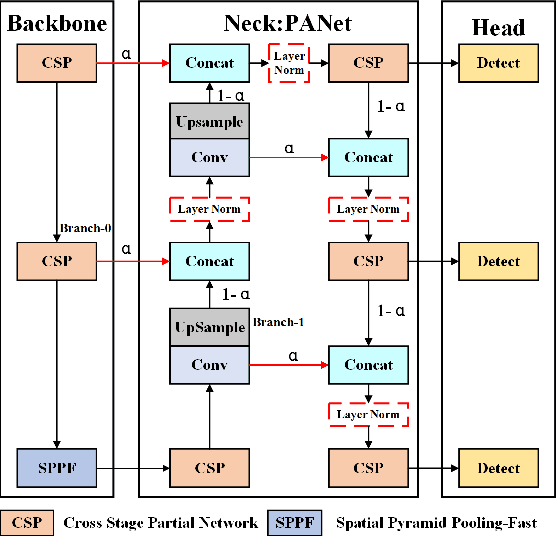

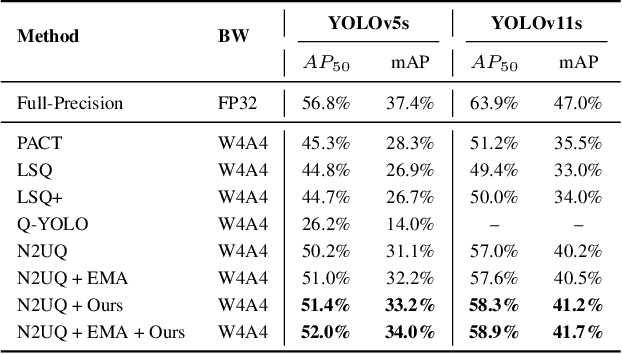

Abstract: Despite the effectiveness of quantization-aware training (QAT) in compressing deep neural networks, its performance on multi-task architectures often degrades significantly due to task-specific feature discrepancies and gradient conflicts. To address these challenges, we propose Gradient-Aware Balanced Feature Fusion (GABFusion), which dynamically balances gradient magnitudes and fuses task-specific features in a quantization-friendly manner. We further introduce Attention Distribution Alignment (ADA), a feature-level distillation strategy tailored for quantized models. Our method demonstrates strong generalization across network architectures and QAT algorithms, with theoretical guarantees on gradient bias reduction. Extensive experiments demonstrate that our strategy consistently enhances a variety of QAT methods across different network architectures and bit-widths. On PASCAL VOC and COCO datasets, the proposed approach achieves average mAP improvements of approximately 3.3% and 1.6%, respectively. When applied to YOLOv5 under 4-bit quantization, our method narrows the accuracy gap with the full-precision model to only 1.7% on VOC, showcasing its effectiveness in preserving performance under low-bit constraints. Notably, the proposed framework is modular, easy to integrate, and compatible with any existing QAT technique, enhancing the performance of quantized models without requiring modifications to the original network architecture.

Adapting Web Agents with Synthetic Supervision

Nov 08, 2025

Abstract: Web agents struggle to adapt to new websites due to the scarcity of environment-specific tasks and demonstrations. Recent works have explored synthetic data generation to address this challenge; however, they suffer from data quality issues: synthesized tasks contain hallucinations that cannot be executed, and collected trajectories are noisy, with redundant or misaligned actions. In this paper, we propose SynthAgent, a fully synthetic supervision framework that aims at improving synthetic data quality via dual refinement of both tasks and trajectories. Our approach begins by synthesizing diverse tasks through categorized exploration of web elements, ensuring efficient coverage of the target environment. During trajectory collection, we refine tasks when conflicts with actual observations are detected, mitigating hallucinations while maintaining task consistency. After collection, we conduct trajectory refinement with a global context to mitigate potential noise or misalignments. Finally, we fine-tune open-source web agents on the refined synthetic data to adapt them to the target environment. Experimental results demonstrate that SynthAgent outperforms existing synthetic data methods, validating the importance of high-quality synthetic supervision. The code will be publicly available at https://github.com/aiming-lab/SynthAgent.

EyeSim-VQA: A Free-Energy-Guided Eye Simulation Framework for Video Quality Assessment

Jun 13, 2025

Abstract: Free-energy-guided self-repair mechanisms have shown promising results in image quality assessment (IQA), but remain under-explored in video quality assessment (VQA), where temporal dynamics and model constraints pose unique challenges. Unlike static images, video content exhibits richer spatiotemporal complexity, making perceptual restoration more difficult. Moreover, VQA systems often rely on pre-trained backbones, which limits the direct integration of enhancement modules without affecting model stability. To address these issues, we propose EyeSimVQA, a novel VQA framework that incorporates free-energy-based self-repair. It adopts a dual-branch architecture, with an aesthetic branch for global perceptual evaluation and a technical branch for fine-grained structural and semantic analysis. Each branch integrates specialized enhancement modules tailored to distinct visual inputs (resized full-frame images and patch-based fragments) to simulate adaptive repair behaviors. We also explore a principled strategy for incorporating high-level visual features without disrupting the original backbone. In addition, we design a biologically inspired prediction head that models sweeping gaze dynamics to better fuse global and local representations for quality prediction. Experiments on five public VQA benchmarks demonstrate that EyeSimVQA achieves competitive or superior performance compared to state-of-the-art methods, while offering improved interpretability through its biologically grounded design.

FCA2: Frame Compression-Aware Autoencoder for Modular and Fast Compressed Video Super-Resolution

Jun 13, 2025

Abstract: State-of-the-art (SOTA) compressed video super-resolution (CVSR) models face persistent challenges, including prolonged inference time, complex training pipelines, and reliance on auxiliary information. As video frame rates continue to increase, the diminishing inter-frame differences further expose the limitations of traditional frame-to-frame information exploitation methods, which are inadequate for addressing current video super-resolution (VSR) demands. To overcome these challenges, we propose an efficient and scalable solution inspired by the structural and statistical similarities between hyperspectral images (HSI) and video data. Our approach introduces a compression-driven dimensionality reduction strategy that reduces computational complexity, accelerates inference, and enhances the extraction of temporal information across frames. The proposed modular architecture is designed for seamless integration with existing VSR frameworks, ensuring strong adaptability and transferability across diverse applications. Experimental results demonstrate that our method achieves performance on par with, or surpassing, the current SOTA models, while significantly reducing inference time. By addressing key bottlenecks in CVSR, our work offers a practical and efficient pathway for advancing VSR technology. Our code will be publicly available at https://github.com/handsomewzy/FCA2.

Efficient Long CoT Reasoning in Small Language Models

May 24, 2025

Abstract: Recent large reasoning models such as DeepSeek-R1 exhibit strong complex problem-solving abilities by generating long chain-of-thought (CoT) reasoning steps. It is challenging to directly train small language models (SLMs) to produce long CoT reasoning. Thus, distillation becomes a practical method to give SLMs this reasoning ability. However, long CoT often contains much redundant content (e.g., overthinking steps), which may make it hard for SLMs to learn given their relatively limited capacity and generalization. To address this issue, we propose a simple yet effective method to prune unnecessary steps in long CoT, and then employ an on-policy method for the SLM itself to curate valid and useful long CoT training data. In this way, SLMs can effectively learn efficient long CoT reasoning and preserve competitive performance at the same time. Experimental results across a series of mathematical reasoning benchmarks demonstrate the effectiveness of the proposed method in distilling long CoT reasoning ability into SLMs, maintaining competitive performance while significantly reducing the generation of redundant reasoning steps.

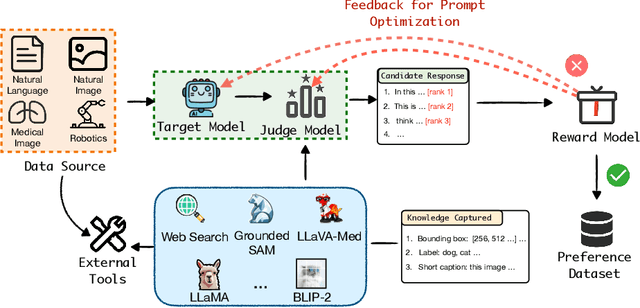

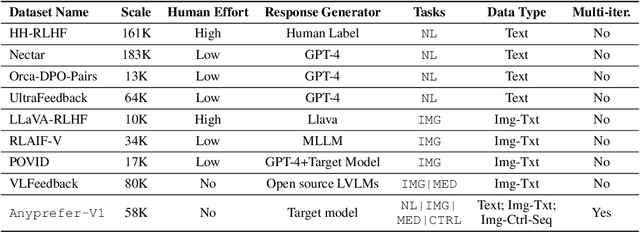

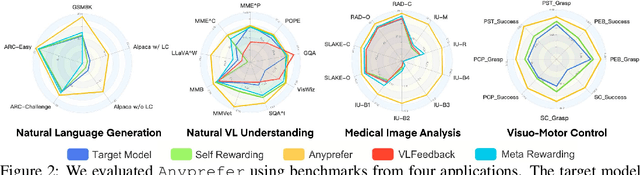

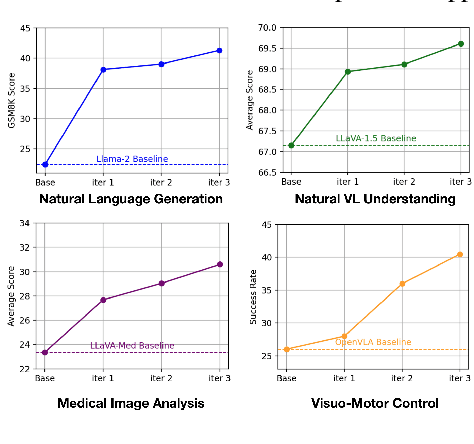

Anyprefer: An Agentic Framework for Preference Data Synthesis

Apr 27, 2025

Abstract: High-quality preference data is essential for aligning foundation models with human values through preference learning. However, manual annotation of such data is often time-consuming and costly. Recent methods often adopt a self-rewarding approach, where the target model generates and annotates its own preference data, but this can lead to inaccuracies since the reward model shares weights with the target model, thereby amplifying inherent biases. To address these issues, we propose Anyprefer, a framework designed to synthesize high-quality preference data for aligning the target model. Anyprefer frames the data synthesis process as a cooperative two-player Markov Game, where the target model and the judge model collaborate. Here, a series of external tools are introduced to assist the judge model in accurately rewarding the target model's responses, mitigating biases in the rewarding process. In addition, a feedback mechanism is introduced to optimize prompts for both models, enhancing collaboration and improving data quality. The synthesized data is compiled into a new preference dataset, Anyprefer-V1, consisting of 58K high-quality preference pairs. Extensive experiments show that Anyprefer significantly improves model alignment performance across four main applications, covering 21 datasets, achieving average improvements of 18.55% in five natural language generation datasets, 3.66% in nine vision-language understanding datasets, 30.05% in three medical image analysis datasets, and 16.00% in four visuo-motor control tasks.

Synergistic Weak-Strong Collaboration by Aligning Preferences

Apr 22, 2025

Abstract: Current Large Language Models (LLMs) excel in general reasoning yet struggle with specialized tasks requiring proprietary or domain-specific knowledge. Fine-tuning large models for every niche application is often infeasible due to black-box constraints and high computational overhead. To address this, we propose a collaborative framework that pairs a specialized weak model with a general strong model. The weak model, tailored to specific domains, produces initial drafts and background information, while the strong model leverages its advanced reasoning to refine these drafts, extending LLMs' capabilities to critical yet specialized tasks. To optimize this collaboration, we introduce collaborative feedback to fine-tune the weak model, which quantifies the influence of the weak model's contributions in the collaboration procedure and establishes preference pairs to guide preference tuning of the weak model. We validate our framework through experiments on three domains. We find that the collaboration significantly outperforms each model alone by leveraging complementary strengths. Moreover, aligning the weak model with the collaborative preference further enhances overall performance.

Verifiable Format Control for Large Language Model Generations

Feb 06, 2025

Abstract: Recent Large Language Models (LLMs) have demonstrated satisfactory general instruction-following ability. However, small LLMs with about 7B parameters still struggle with fine-grained format following (e.g., JSON format), which seriously hinders their application. Most existing methods focus on benchmarking general instruction following while overlooking how to improve the specific format-following ability of small LLMs. Besides, these methods often rely on evaluations based on advanced LLMs (e.g., GPT-4), which can introduce the intrinsic bias of LLMs and be costly due to the API calls. In this paper, we first curate a fully verifiable format-following dataset, VFF. In contrast to existing works, which often adopt external LLMs for instruction-following validation, every sample of VFF can be easily validated with a Python function. Further, we propose to leverage this verifiable feature to synthesize massive data for progressively training small LLMs, in order to improve their format-following abilities. Experimental results highlight the prevalent limitations in the format-following capabilities of 7B-level open-source LLMs and demonstrate the effectiveness of our method in enhancing this essential ability.
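To make concrete what validating a format constraint with a Python function might look like, here is a minimal sketch. It is not taken from the VFF dataset: the function name `follows_json_format` and the `required_keys` parameter are assumptions made for illustration, and the constraint checked (a JSON object containing certain keys) is just one plausible example of a verifiable format.

```python
import json


def follows_json_format(response: str, required_keys: tuple[str, ...] = ()) -> bool:
    """Return True if `response` parses as a JSON object containing every required key.

    Hypothetical verifier for illustration; not the VFF implementation.
    """
    try:
        parsed = json.loads(response)
    except json.JSONDecodeError:
        # Not valid JSON at all, so the format constraint is violated.
        return False
    # Require a top-level object (dict) and the presence of each required key.
    return isinstance(parsed, dict) and all(key in parsed for key in required_keys)


# Example checks on model outputs.
good = follows_json_format('{"name": "Ada", "age": 36}', ("name", "age"))
missing_key = follows_json_format('{"name": "Ada"}', ("name", "age"))
not_json = follows_json_format("name: Ada, age: 36", ("name", "age"))
```

A check of this kind is deterministic and needs no API calls, which is the property the abstract contrasts with LLM-based evaluation.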

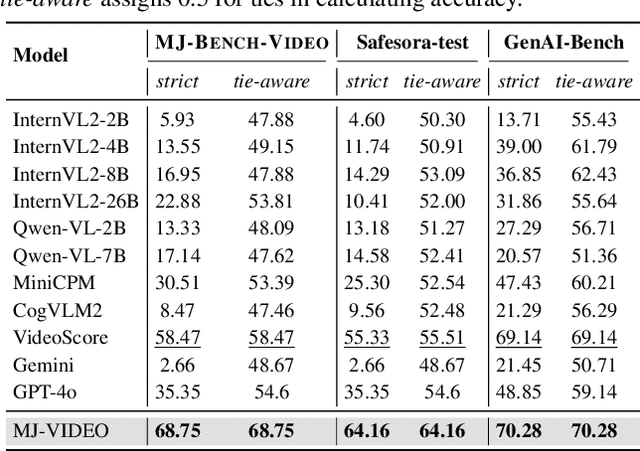

MJ-VIDEO: Fine-Grained Benchmarking and Rewarding Video Preferences in Video Generation

Feb 03, 2025

Abstract: Recent advancements in video generation have significantly improved the ability to synthesize videos from text instructions. However, existing models still struggle with key challenges such as instruction misalignment, content hallucination, safety concerns, and bias. Addressing these limitations, we introduce MJ-BENCH-VIDEO, a large-scale video preference benchmark designed to evaluate video generation across five critical aspects: Alignment, Safety, Fineness, Coherence & Consistency, and Bias & Fairness. This benchmark incorporates 28 fine-grained criteria to provide a comprehensive evaluation of video preference. Building upon this dataset, we propose MJ-VIDEO, a Mixture-of-Experts (MoE)-based video reward model designed to deliver fine-grained reward. MJ-VIDEO can dynamically select relevant experts to accurately judge the preference based on the input text-video pair. This architecture enables more precise and adaptable preference judgments. Through extensive benchmarking on MJ-BENCH-VIDEO, we analyze the limitations of existing video reward models and demonstrate the superior performance of MJ-VIDEO in video preference assessment, achieving 17.58% and 15.87% improvements in overall and fine-grained preference judgments, respectively. Additionally, introducing MJ-VIDEO for preference tuning in video generation enhances the alignment performance.
