Yixuan Zhang

Information-Theoretic Multi-Model Fusion for Target-Oriented Adaptive Sampling in Materials Design

Feb 03, 2026

Abstract:Target-oriented discovery under limited evaluation budgets requires making reliable progress in high-dimensional, heterogeneous design spaces where each new measurement is costly, whether experimental or high-fidelity simulation. We present an information-theoretic framework for target-oriented adaptive sampling that reframes optimization as trajectory discovery: instead of approximating the full response surface, the method maintains and refines a low-entropy information state that concentrates search on target-relevant directions. The approach couples data, model beliefs, and physics/structure priors through dimension-aware information budgeting, adaptive bootstrapped distillation over a heterogeneous surrogate reservoir, and structure-aware candidate manifold analysis with Kalman-inspired multi-model fusion to balance consensus-driven exploitation and disagreement-driven exploration. Evaluated under a single unified protocol without dataset-specific tuning, the framework improves sample efficiency and reliability across 14 single- and multi-objective materials design tasks spanning candidate pools from $600$ to $4 \times 10^6$ and feature dimensions from $10$ to $10^3$, typically reaching top-performing regions within 100 evaluations. Complementary 20-dimensional synthetic benchmarks (Ackley, Rastrigin, Schwefel) further demonstrate robustness to rugged and multimodal landscapes.
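As a rough illustration of what "Kalman-inspired multi-model fusion" could look like, the sketch below combines the predictions of several surrogates by inverse-variance (precision) weighting, which is the scalar form of a Kalman measurement update, and reports the spread between models as a disagreement signal. The abstract does not specify the fusion rule, so the function names `fuse_predictions` and `disagreement` and the independence assumption behind the weighting are hypothetical, not the paper's method.

```python
def fuse_predictions(means, variances):
    """Precision-weighted fusion of per-model predictions for one candidate.

    Assumes (hypothetically) that the model errors are independent, so the
    fused variance is the inverse of the summed precisions.
    """
    if not means or len(means) != len(variances):
        raise ValueError("means and variances must be non-empty and equal length")
    if any(v <= 0 for v in variances):
        raise ValueError("variances must be positive")
    precisions = [1.0 / v for v in variances]
    total_precision = sum(precisions)
    fused_mean = sum(p * m for p, m in zip(precisions, means)) / total_precision
    fused_variance = 1.0 / total_precision
    return fused_mean, fused_variance


def disagreement(means):
    """Population variance of the model means: large values flag candidates
    where the surrogates disagree (an exploration signal in this sketch)."""
    center = sum(means) / len(means)
    return sum((m - center) ** 2 for m in means) / len(means)


# Two equally confident surrogates predicting 1.0 and 3.0.
fused_mean, fused_variance = fuse_predictions([1.0, 3.0], [1.0, 1.0])
spread = disagreement([1.0, 3.0])
```

In this toy setting a low fused variance with low spread would favor exploitation, while a high spread would favor exploration; how the actual framework trades these off is not stated in the abstract.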

Thinker: A vision-language foundation model for embodied intelligence

Jan 29, 2026

Abstract:When large vision-language models are applied to the field of robotics, they encounter problems that are simple for humans yet error-prone for models. Such issues include confusion between third-person and first-person perspectives and a tendency to overlook information in video endings during temporal reasoning. To address these challenges, we propose Thinker, a large vision-language foundation model designed for embodied intelligence. We tackle the aforementioned issues from two perspectives. Firstly, we construct a large-scale dataset tailored for robotic perception and reasoning, encompassing ego-view videos, visual grounding, spatial understanding, and chain-of-thought data. Secondly, we introduce a simple yet effective approach that substantially enhances the model's capacity for video comprehension by jointly incorporating key frames and full video sequences as inputs. Our model achieves state-of-the-art results on two of the most commonly used benchmark datasets in the field of task planning.

Wasserstein-p Central Limit Theorem Rates: From Local Dependence to Markov Chains

Jan 13, 2026

Abstract:Finite-time central limit theorem (CLT) rates play a central role in modern machine learning (ML). In this paper, we study CLT rates for multivariate dependent data in Wasserstein-$p$ ($\mathcal W_p$) distance, for general $p\ge 1$. We focus on two fundamental dependence structures that commonly arise in ML: locally dependent sequences and geometrically ergodic Markov chains. In both settings, we establish the \textit{first optimal} $\mathcal O(n^{-1/2})$ rate in $\mathcal W_1$, as well as the first $\mathcal W_p$ ($p\ge 2$) CLT rates under mild moment assumptions, substantially improving the best previously known bounds in these dependent-data regimes. As an application of our optimal $\mathcal W_1$ rate for locally dependent sequences, we further obtain the first optimal $\mathcal W_1$--CLT rate for multivariate $U$-statistics. On the technical side, we derive a tractable auxiliary bound for $\mathcal W_1$ Gaussian approximation errors that is well suited to studying dependent data. For Markov chains, we further prove that the regeneration time of the split chain associated with a geometrically ergodic chain has a geometric tail without assuming strong aperiodicity or other restrictive conditions. These tools may be of independent interest; they enable our optimal $\mathcal W_1$ rates and underpin our $\mathcal W_p$ ($p\ge 2$) results.

FreqDINO: Frequency-Guided Adaptation for Generalized Boundary-Aware Ultrasound Image Segmentation

Dec 12, 2025

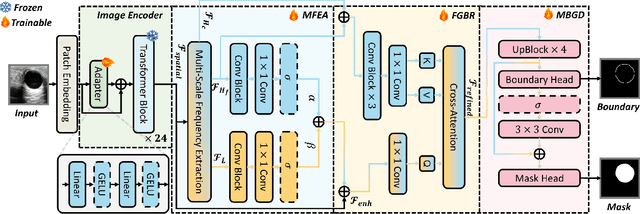

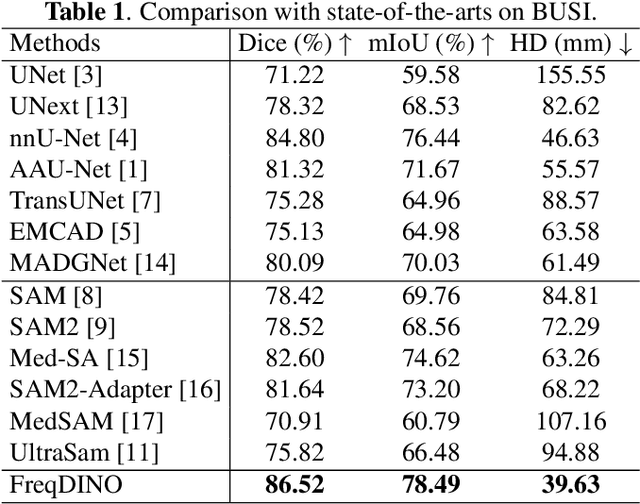

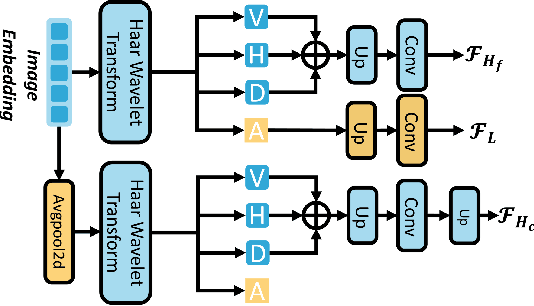

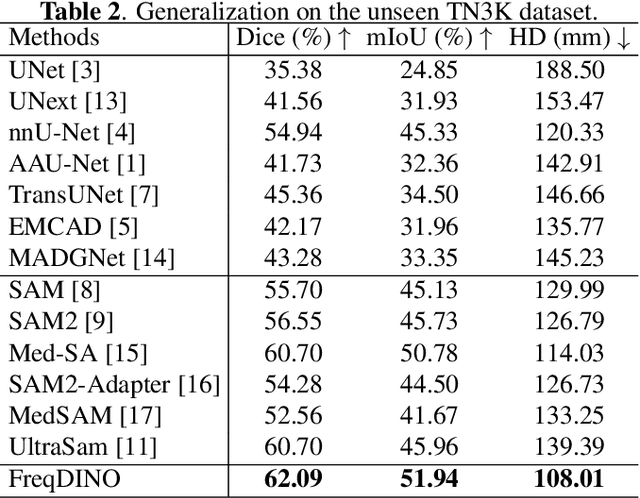

Abstract:Ultrasound image segmentation is pivotal for clinical diagnosis, yet challenged by speckle noise and imaging artifacts. Recently, DINOv3 has shown remarkable promise in medical image segmentation with its powerful representation capabilities. However, DINOv3, pre-trained on natural images, lacks sensitivity to ultrasound-specific boundary degradation. To address this limitation, we propose FreqDINO, a frequency-guided segmentation framework that enhances boundary perception and structural consistency. Specifically, we devise a Multi-scale Frequency Extraction and Alignment (MFEA) strategy to separate low-frequency structures and multi-scale high-frequency boundary details, and align them via learnable attention. We also introduce a Frequency-Guided Boundary Refinement (FGBR) module that extracts boundary prototypes from high-frequency components and refines spatial features. Furthermore, we design a Multi-task Boundary-Guided Decoder (MBGD) to ensure spatial coherence between boundary and semantic predictions. Extensive experiments demonstrate that FreqDINO surpasses state-of-the-art methods and achieves remarkable generalization capability. The code is at https://github.com/MingLang-FD/FreqDINO.

Fair Bayesian Data Selection via Generalized Discrepancy Measures

Nov 10, 2025

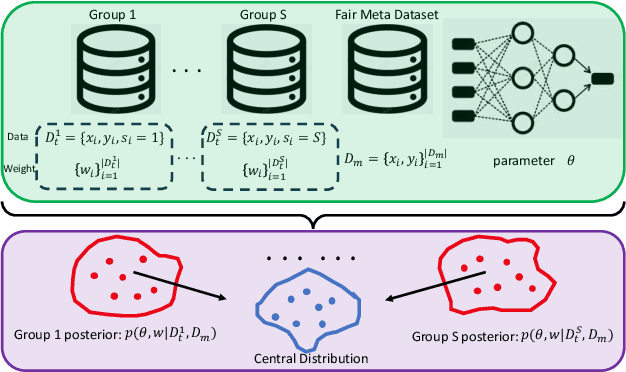

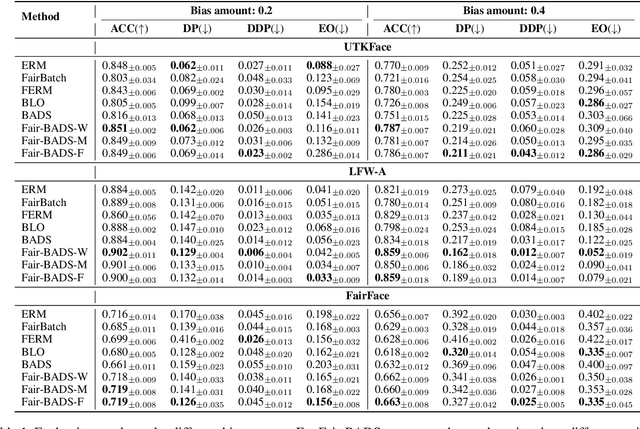

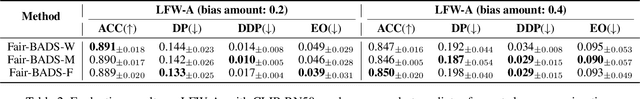

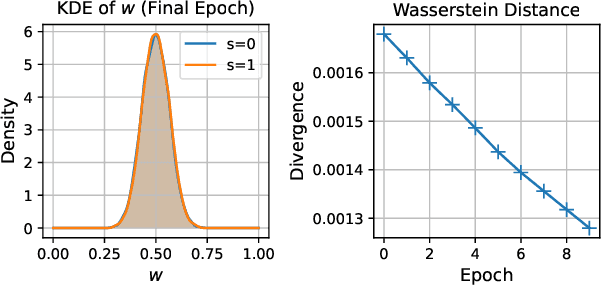

Abstract:Fairness concerns are increasingly critical as machine learning models are deployed in high-stakes applications. While existing fairness-aware methods typically intervene at the model level, they often suffer from high computational costs, limited scalability, and poor generalization. To address these challenges, we propose a Bayesian data selection framework that ensures fairness by aligning group-specific posterior distributions of model parameters and sample weights with a shared central distribution. Our framework supports flexible alignment via various distributional discrepancy measures, including Wasserstein distance, maximum mean discrepancy, and $f$-divergence, allowing geometry-aware control without imposing explicit fairness constraints. This data-centric approach mitigates group-specific biases in training data and improves fairness in downstream tasks, with theoretical guarantees. Experiments on benchmark datasets show that our method consistently outperforms existing data selection and model-based fairness methods in both fairness and accuracy.
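To make one of the discrepancy measures named above concrete, here is a minimal sketch of a (biased) squared maximum mean discrepancy estimate between two sample sets under an RBF kernel. It only illustrates the measure itself; the kernel choice, the `bandwidth` parameter, and the function names are assumptions, and the abstract does not say how the framework applies the discrepancy to posterior distributions.

```python
import math


def rbf_kernel(a, b, bandwidth=1.0):
    """Gaussian (RBF) kernel between two points given as tuples of floats."""
    squared_distance = sum((x - y) ** 2 for x, y in zip(a, b))
    return math.exp(-squared_distance / (2.0 * bandwidth ** 2))


def mmd_squared(xs, ys, bandwidth=1.0):
    """Biased (V-statistic) estimate of squared MMD between samples xs and ys."""
    def mean_kernel(us, vs):
        total = sum(rbf_kernel(u, v, bandwidth) for u in us for v in vs)
        return total / (len(us) * len(vs))

    return mean_kernel(xs, xs) + mean_kernel(ys, ys) - 2.0 * mean_kernel(xs, ys)


# Toy samples standing in for two groups (hypothetical data).
group_a = [(0.0,), (0.1,), (-0.1,)]
group_b = [(2.0,), (2.1,), (1.9,)]
same = mmd_squared(group_a, group_a)
different = mmd_squared(group_a, group_b)
```

The estimate is zero when both sample sets coincide and grows as the groups separate, which is the property an alignment objective would exploit.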

Medverse: A Universal Model for Full-Resolution 3D Medical Image Segmentation, Transformation and Enhancement

Sep 11, 2025

Abstract:In-context learning (ICL) offers a promising paradigm for universal medical image analysis, enabling models to perform diverse image processing tasks without retraining. However, current ICL models for medical imaging remain limited in two critical aspects: they cannot simultaneously achieve high-fidelity predictions and global anatomical understanding, and there is no unified model trained across diverse medical imaging tasks (e.g., segmentation and enhancement) and anatomical regions. As a result, the full potential of ICL in medical imaging remains underexplored. Thus, we present \textbf{Medverse}, a universal ICL model for 3D medical imaging, trained on 22 datasets covering diverse tasks in universal image segmentation, transformation, and enhancement across multiple organs, imaging modalities, and clinical centers. Medverse employs a next-scale autoregressive in-context learning framework that progressively refines predictions from coarse to fine, generating consistent, full-resolution volumetric outputs and enabling multi-scale anatomical awareness. We further propose a blockwise cross-attention module that facilitates long-range interactions between context and target inputs while preserving computational efficiency through spatial sparsity. Medverse is extensively evaluated on a broad collection of held-out datasets covering previously unseen clinical centers, organs, species, and imaging modalities. Results demonstrate that Medverse substantially outperforms existing ICL baselines and establishes a novel paradigm for in-context learning. Code and model weights will be made publicly available. Our model is publicly available at https://github.com/jiesihu/Medverse.

Towards Hallucination-Free Music: A Reinforcement Learning Preference Optimization Framework for Reliable Song Generation

Aug 07, 2025

Abstract:Recent advances in audio-based generative language models have accelerated AI-driven lyric-to-song generation. However, these models frequently suffer from content hallucination, producing outputs misaligned with the input lyrics and undermining musical coherence. Current supervised fine-tuning (SFT) approaches, limited by passive label-fitting, exhibit constrained self-improvement and poor hallucination mitigation. To address this core challenge, we propose a novel reinforcement learning (RL) framework leveraging preference optimization for hallucination control. Our key contributions include: (1) Developing a robust hallucination preference dataset constructed via phoneme error rate (PER) computation and rule-based filtering to capture alignment with human expectations; (2) Implementing and evaluating three distinct preference optimization strategies within the RL framework: Direct Preference Optimization (DPO), Proximal Policy Optimization (PPO), and Group Relative Policy Optimization (GRPO). DPO operates off-policy to enhance positive token likelihood, achieving a significant 7.4% PER reduction. PPO and GRPO employ an on-policy approach, training a PER-based reward model to iteratively optimize sequences via reward maximization and KL-regularization, yielding PER reductions of 4.9% and 4.7%, respectively. Comprehensive objective and subjective evaluations confirm that our methods effectively suppress hallucinations while preserving musical quality. Crucially, this work presents a systematic, RL-based solution to hallucination control in lyric-to-song generation. The framework's transferability also unlocks potential for music style adherence and musicality enhancement, opening new avenues for future generative song research.
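For readers unfamiliar with the metric, phoneme error rate is commonly computed as the Levenshtein edit distance between a reference and a hypothesis phoneme sequence, divided by the reference length. The sketch below follows that common definition; how the paper obtains phonemes from generated audio and how it thresholds PER for preference pairs is not described in the abstract, and the example phoneme strings are made up.

```python
def edit_distance(reference, hypothesis):
    """Levenshtein distance (substitutions, insertions, deletions) between
    two sequences, using a rolling one-row dynamic program."""
    previous = list(range(len(hypothesis) + 1))
    for i, ref_item in enumerate(reference, start=1):
        current = [i]
        for j, hyp_item in enumerate(hypothesis, start=1):
            substitution_cost = 0 if ref_item == hyp_item else 1
            current.append(min(
                previous[j] + 1,                      # deletion
                current[j - 1] + 1,                   # insertion
                previous[j - 1] + substitution_cost,  # match or substitution
            ))
        previous = current
    return previous[-1]


def phoneme_error_rate(reference, hypothesis):
    """PER = edit distance / number of reference phonemes."""
    if not reference:
        raise ValueError("reference must contain at least one phoneme")
    return edit_distance(reference, hypothesis) / len(reference)


# Hypothetical phoneme sequences: one substitution and one deletion.
reference_phonemes = ["HH", "AH", "L", "OW"]
sung_phonemes = ["HH", "EH", "L"]
per = phoneme_error_rate(reference_phonemes, sung_phonemes)
```

Note that PER can exceed 1.0 when the hypothesis contains many insertions, so it is an error ratio and not a bounded probability.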

Negative Binomial Variational Autoencoders for Overdispersed Latent Modeling

Aug 07, 2025

Abstract:Biological neurons communicate through spike trains: discrete, irregular bursts of activity that exhibit variability far beyond the modeling capacity of conventional variational autoencoders (VAEs). Recent work, such as the Poisson-VAE, makes a biologically inspired move by modeling spike counts using the Poisson distribution. However, this choice imposes a rigid constraint, equal mean and variance, which fails to reflect the true stochastic nature of neural activity. In this work, we challenge this constraint and introduce NegBio-VAE, a principled extension of the VAE framework that models spike counts using the negative binomial distribution. This shift grants explicit control over dispersion, unlocking a broader and more accurate family of neural representations. We further develop two ELBO optimization schemes and two differentiable reparameterization strategies tailored to the negative binomial setting. By introducing one additional dispersion parameter, NegBio-VAE generalizes the Poisson latent model to a negative binomial formulation. Empirical results demonstrate that this minor yet impactful change leads to significant gains in reconstruction fidelity, highlighting the importance of explicitly modeling overdispersion in spike-like activations.
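The mean-variance constraint discussed above can be made concrete with a small numerical sketch. Under the common mean-dispersion parameterization of the negative binomial (mean $\mu$, dispersion $r > 0$), the variance is $\mu + \mu^2 / r$, which always exceeds the Poisson variance $\mu$ and approaches it as $r \to \infty$. Whether NegBio-VAE uses this exact parameterization is an assumption; the abstract only states that one extra dispersion parameter is introduced.

```python
def poisson_variance(mean):
    """A Poisson count has variance equal to its mean."""
    return mean


def negative_binomial_variance(mean, dispersion):
    """Variance under the (assumed) mean-dispersion parameterization:
    Var = mean + mean**2 / dispersion, with dispersion > 0."""
    if mean < 0 or dispersion <= 0:
        raise ValueError("mean must be >= 0 and dispersion must be > 0")
    return mean + mean ** 2 / dispersion


mean_count = 4.0
overdispersed = negative_binomial_variance(mean_count, dispersion=2.0)
near_poisson = negative_binomial_variance(mean_count, dispersion=1e9)
```

With a mean of 4 spikes, a dispersion of 2 triples the variance relative to the Poisson case, while a very large dispersion recovers near-Poisson behavior.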

Contextual Online Pricing with (Biased) Offline Data

Jul 03, 2025

Abstract:We study contextual online pricing with biased offline data. For the scalar price elasticity case, we identify the instance-dependent quantity $\delta^2$ that measures how far the offline data lies from the (unknown) online optimum. We show that the time length $T$, bias bound $V$, size $N$ and dispersion $\lambda_{\min}(\hat{\Sigma})$ of the offline data, and $\delta^2$ jointly determine the statistical complexity. An Optimism-in-the-Face-of-Uncertainty (OFU) policy achieves a minimax-optimal, instance-dependent regret bound $\tilde{\mathcal{O}}\big(d\sqrt{T} \wedge (V^2T + \frac{dT}{\lambda_{\min}(\hat{\Sigma}) + (N \wedge T) \delta^2})\big)$. For general price elasticity, we establish a worst-case, minimax-optimal rate $\tilde{\mathcal{O}}\big(d\sqrt{T} \wedge (V^2T + \frac{dT }{\lambda_{\min}(\hat{\Sigma})})\big)$ and provide a generalized OFU algorithm that attains it. When the bias bound $V$ is unknown, we design a robust variant that always guarantees sub-linear regret and strictly improves on purely online methods whenever the exact bias is small. These results deliver the first tight regret guarantees for contextual pricing in the presence of biased offline data. Our techniques also transfer verbatim to stochastic linear bandits with biased offline data, yielding analogous bounds.

LeVo: High-Quality Song Generation with Multi-Preference Alignment

Jun 09, 2025

Abstract:Recent advances in large language models (LLMs) and audio language models have significantly improved music generation, particularly in lyrics-to-song generation. However, existing approaches still struggle with the complex composition of songs and the scarcity of high-quality data, leading to limitations in sound quality, musicality, instruction following, and vocal-instrument harmony. To address these challenges, we introduce LeVo, an LM-based framework consisting of LeLM and a music codec. LeLM models two types of tokens in parallel: mixed tokens, which represent the combined audio of vocals and accompaniment to achieve vocal-instrument harmony, and dual-track tokens, which separately encode vocals and accompaniment for high-quality song generation. It employs two decoder-only transformers and a modular extension training strategy to prevent interference between different token types. To further enhance musicality and instruction following, we introduce a multi-preference alignment method based on Direct Preference Optimization (DPO). This method handles diverse human preferences through a semi-automatic data construction process and DPO post-training. Experimental results demonstrate that LeVo consistently outperforms existing methods on both objective and subjective metrics. Ablation studies further justify the effectiveness of our designs. Audio examples are available at https://levo-demo.github.io/.
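As background for the DPO post-training mentioned above, the sketch below evaluates the standard DPO loss for a single preference pair from sequence log-probabilities under the policy and a frozen reference model. It shows only the generic objective; the value of `beta`, the argument names, and the toy log-probabilities are assumptions, and LeVo's multi-preference data construction is not reproduced here.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Standard DPO loss for one (chosen, rejected) pair:
    -log sigmoid(beta * [(pi_c - ref_c) - (pi_r - ref_r)])."""
    margin = ((policy_chosen_logp - ref_chosen_logp)
              - (policy_rejected_logp - ref_rejected_logp))
    z = beta * margin
    # -log(sigmoid(z)) equals softplus(-z); this form avoids overflow.
    return max(-z, 0.0) + math.log1p(math.exp(-abs(z)))


# Hypothetical log-probabilities: the policy already prefers the chosen sample
# more strongly than the reference does, so the loss falls below log(2).
neutral_loss = dpo_loss(0.0, 0.0, 0.0, 0.0)
aligned_loss = dpo_loss(-1.0, -3.0, -2.0, -2.0, beta=0.5)
```

The loss equals $\log 2$ when the policy and reference agree on the pair and decreases as the policy raises the chosen sample's relative likelihood.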
