Siyu Zhang

Shape of Thought: Progressive Object Assembly via Visual Chain-of-Thought

Jan 28, 2026

Abstract: Multimodal models for text-to-image generation have achieved strong visual fidelity, yet they remain brittle under compositional structural constraints, notably generative numeracy, attribute binding, and part-level relations. To address these challenges, we propose Shape-of-Thought (SoT), a visual CoT framework that enables progressive shape assembly via coherent 2D projections without external engines at inference time. SoT trains a unified multimodal autoregressive model to generate interleaved textual plans and rendered intermediate states, helping the model capture shape-assembly logic without producing explicit geometric representations. To support this paradigm, we introduce SoT-26K, a large-scale dataset of grounded assembly traces derived from part-based CAD hierarchies, and T2S-CompBench, a benchmark for evaluating structural integrity and trace faithfulness. Fine-tuning on SoT-26K achieves 88.4% on component numeracy and 84.8% on structural topology, outperforming text-only baselines by around 20%. SoT establishes a new paradigm for transparent, process-supervised compositional generation. The code is available at https://anonymous.4open.science/r/16FE/. The SoT-26K dataset will be released upon acceptance.

Multimodal Interpretation of Remote Sensing Images: Dynamic Resolution Input Strategy and Multi-scale Vision-Language Alignment Mechanism

Dec 29, 2025

Abstract: Multimodal fusion of remote sensing images serves as a core technology for overcoming the limitations of single-source data and improving the accuracy of surface information extraction, which exhibits significant application value in fields such as environmental monitoring and urban planning. To address the deficiencies of existing methods, including the failure of fixed resolutions to balance efficiency and detail, as well as the lack of semantic hierarchy in single-scale alignment, this study proposes a Vision-language Model (VLM) framework integrated with two key innovations: the Dynamic Resolution Input Strategy (DRIS) and the Multi-scale Vision-language Alignment Mechanism (MS-VLAM). Specifically, the DRIS adopts a coarse-to-fine approach to adaptively allocate computational resources according to the complexity of image content, thereby preserving key fine-grained features while reducing redundant computational overhead. The MS-VLAM constructs a three-tier alignment mechanism covering object, local-region and global levels, which systematically captures cross-modal semantic consistency and alleviates issues of semantic misalignment and granularity imbalance. Experimental results on the RS-GPT4V dataset demonstrate that the proposed framework significantly improves the accuracy of semantic understanding and computational efficiency in tasks including image captioning and cross-modal retrieval. Compared with conventional methods, it achieves superior performance in evaluation metrics such as BLEU-4 and CIDEr for image captioning, as well as R@10 for cross-modal retrieval. This technical framework provides a novel approach for constructing efficient and robust multimodal remote sensing systems, laying a theoretical foundation and offering technical guidance for the engineering application of intelligent remote sensing interpretation.

Toward Faithfulness-guided Ensemble Interpretation of Neural Network

Sep 04, 2025

Abstract: Interpretable and faithful explanations for specific neural inferences are crucial for understanding and evaluating model behavior. Our work introduces Faithfulness-guided Ensemble Interpretation (FEI), an innovative framework that enhances the breadth and effectiveness of faithfulness, advancing interpretability by providing superior visualization. Through an analysis of existing evaluation benchmarks, FEI employs a smooth approximation to elevate quantitative faithfulness scores. Diverse variations of FEI target enhanced faithfulness in hidden layer encodings, expanding interpretability. Additionally, we propose a novel qualitative metric that assesses hidden layer faithfulness. In extensive experiments, FEI surpasses existing methods, demonstrating substantial advances in qualitative visualization and quantitative faithfulness scores. Our research establishes a comprehensive framework for elevating faithfulness in neural network explanations, emphasizing both breadth and precision.

Decoupling Continual Semantic Segmentation

Aug 07, 2025

Abstract: Continual Semantic Segmentation (CSS) requires learning new classes without forgetting previously acquired knowledge, addressing the fundamental challenge of catastrophic forgetting in dense prediction tasks. However, existing CSS methods typically employ single-stage encoder-decoder architectures where segmentation masks and class labels are tightly coupled, leading to interference between old and new class learning and suboptimal retention-plasticity balance. We introduce DecoupleCSS, a novel two-stage framework for CSS. By decoupling class-aware detection from class-agnostic segmentation, DecoupleCSS enables more effective continual learning, preserving past knowledge while learning new classes. The first stage leverages pre-trained text and image encoders, adapted using LoRA, to encode class-specific information and generate location-aware prompts. In the second stage, the Segment Anything Model (SAM) is employed to produce precise segmentation masks, ensuring that segmentation knowledge is shared across both new and previous classes. This approach improves the balance between retention and adaptability in CSS, achieving state-of-the-art performance across a variety of challenging tasks. Our code is publicly available at: https://github.com/euyis1019/Decoupling-Continual-Semantic-Segmentation.

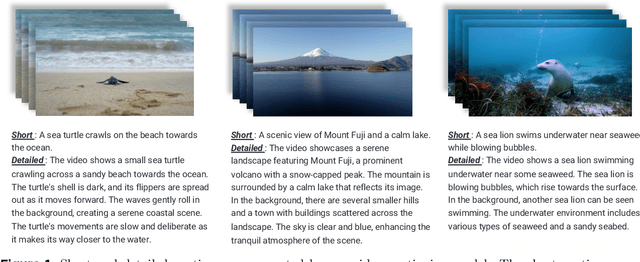

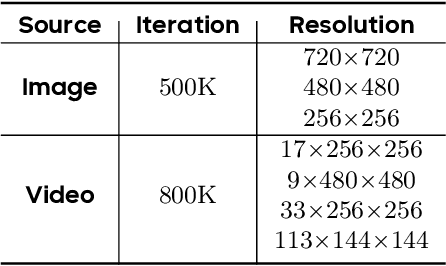

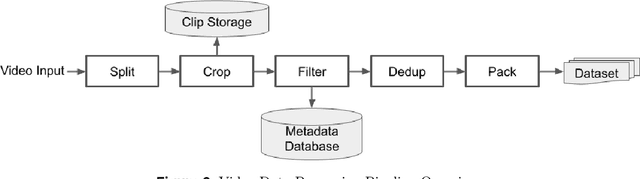

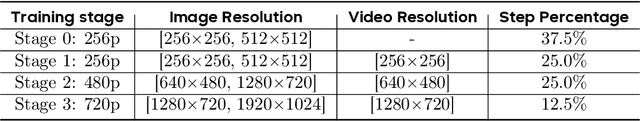

Seaweed-7B: Cost-Effective Training of Video Generation Foundation Model

Apr 11, 2025

Abstract: This technical report presents a cost-efficient strategy for training a video generation foundation model. We present a mid-sized research model with approximately 7 billion parameters (7B) called Seaweed-7B, trained from scratch using 665,000 H100 GPU hours. Despite being trained with moderate computational resources, Seaweed-7B demonstrates highly competitive performance compared to contemporary video generation models of much larger size. Design choices are especially crucial in a resource-constrained setting. This technical report highlights the key design decisions that enhance the performance of the medium-sized diffusion model. Empirically, we make two observations: (1) Seaweed-7B matches or even surpasses larger models trained on substantially greater GPU resources, and (2) our model, which exhibits strong generalization ability, can be effectively adapted across a wide range of downstream applications by either lightweight fine-tuning or continued training. See the project page at https://seaweed.video/

Improving vision-language alignment with graph spiking hybrid Networks

Jan 31, 2025

Abstract: To bridge the semantic gap between vision and language (VL), it is necessary to develop a good alignment strategy, which includes handling semantic diversity, abstract representation of visual information, and generalization ability of models. Recent works use detector-based bounding boxes or patches with regular partitions to represent visual semantics. While current paradigms have made strides, they are still insufficient for fully capturing the nuanced contextual relations among various objects. This paper proposes a comprehensive visual semantic representation module that uses panoptic segmentation to generate coherent fine-grained semantic features. Furthermore, we propose a novel Graph Spiking Hybrid Network (GSHN) that integrates the complementary advantages of Spiking Neural Networks (SNNs) and Graph Attention Networks (GATs) to encode visual semantic information. Intriguingly, the model not only encodes the discrete and continuous latent variables of instances but also captures both local and global contextual features, thereby significantly enhancing the richness and diversity of semantic representations. Leveraging the spatiotemporal properties inherent in SNNs, we employ contrastive learning (CL) to enhance the similarity-based representation of embeddings. This strategy alleviates the computational overhead of the model and enriches meaningful visual representations by constructing positive and negative sample pairs. We design an innovative pre-training method, Spiked Text Learning (STL), which uses text features to improve the encoding ability of discrete semantics. Experiments show that the proposed GSHN exhibits promising results on multiple VL downstream tasks.

RP-SLAM: Real-time Photorealistic SLAM with Efficient 3D Gaussian Splatting

Dec 13, 2024

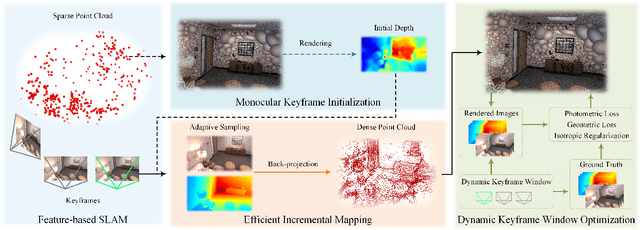

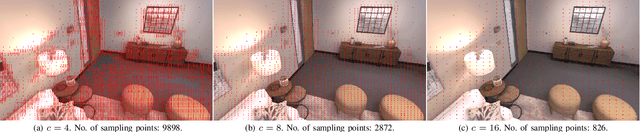

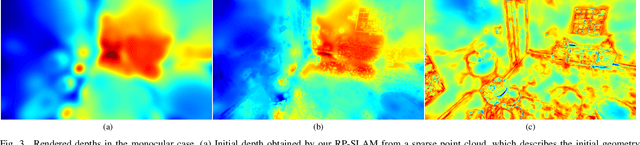

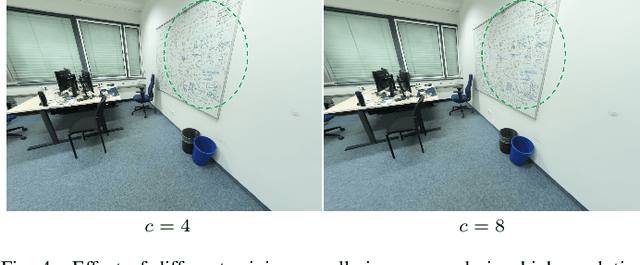

Abstract: 3D Gaussian Splatting (3DGS) has emerged as a promising technique for high-quality 3D rendering, leading to increasing interest in integrating 3DGS into photorealistic SLAM systems. However, existing methods face challenges such as redundancy among Gaussian primitives, forgetting during continuous optimization, and difficulty in initializing primitives in the monocular case due to the lack of depth information. To achieve efficient and photorealistic mapping, we propose RP-SLAM, a 3D Gaussian splatting-based visual SLAM method for monocular and RGB-D cameras. RP-SLAM decouples camera pose estimation from Gaussian primitive optimization and consists of three key components. First, we propose an efficient incremental mapping approach that achieves a compact and accurate representation of the scene through adaptive sampling and Gaussian primitive filtering. Second, a dynamic window optimization method is proposed to mitigate the forgetting problem and improve map consistency. Finally, for the monocular case, a keyframe initialization method based on a sparse point cloud is proposed to improve the initialization accuracy of Gaussian primitives, which provides a geometric basis for subsequent optimization. Extensive experiments demonstrate that RP-SLAM achieves state-of-the-art map rendering accuracy while ensuring real-time performance and model compactness.

StreamMOTP: Streaming and Unified Framework for Joint 3D Multi-Object Tracking and Trajectory Prediction

Jun 28, 2024

Abstract: 3D multi-object tracking and trajectory prediction are two crucial modules in autonomous driving systems. Traditional paradigms generally handle the two tasks separately, and only a few recent methods have started to model them jointly. However, these approaches suffer from the limitations of single-frame training and inconsistent coordinate representations between tracking and prediction tasks. In this paper, we propose a streaming and unified framework for joint 3D Multi-Object Tracking and trajectory Prediction (StreamMOTP) to address the above challenges. Firstly, we construct the model in a streaming manner and exploit a memory bank to preserve and leverage the long-term latent features for tracked objects more effectively. Secondly, a relative spatio-temporal positional encoding strategy is introduced to bridge the gap of coordinate representations between the two tasks and maintain the pose-invariance for trajectory prediction. Thirdly, we further improve the quality and consistency of predicted trajectories with a dual-stream predictor. We conduct extensive experiments on the popular nuScenes dataset, and the experimental results demonstrate the effectiveness and superiority of StreamMOTP, which outperforms previous methods significantly on both tasks. Furthermore, we also demonstrate that the proposed framework has great potential and advantages in actual applications of autonomous driving.

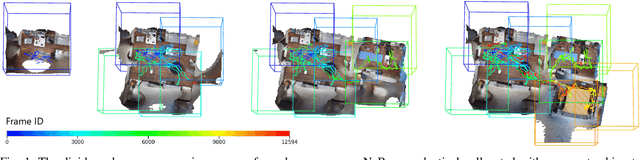

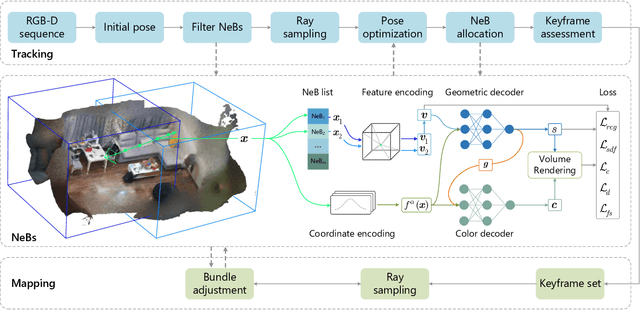

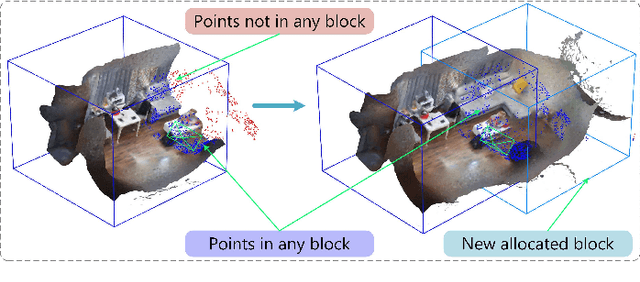

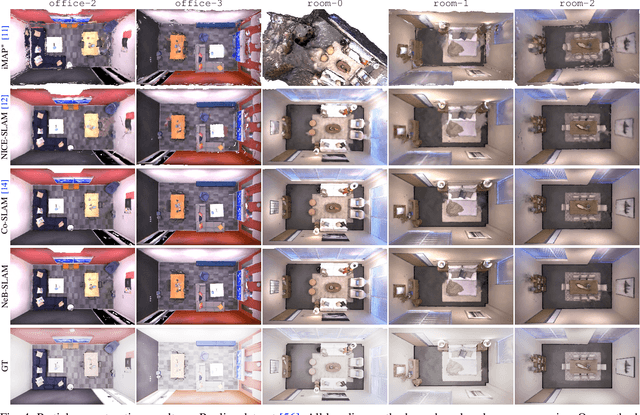

NeB-SLAM: Neural Blocks-based Scalable RGB-D SLAM for Unknown Scenes

May 24, 2024

Abstract: Neural implicit representations have recently demonstrated considerable potential in the field of visual simultaneous localization and mapping (SLAM). This is due to their inherent advantages, including low storage overhead and representation continuity. However, these methods require the size of the scene as input, which is impractical for unknown scenes. Consequently, we propose NeB-SLAM, a neural block-based scalable RGB-D SLAM for unknown scenes. Specifically, we first propose a divide-and-conquer mapping strategy that represents the entire unknown scene as a set of sub-maps. These sub-maps are a set of neural blocks of fixed size. Then, we introduce an adaptive map growth strategy that allocates neural blocks adaptively during camera tracking and gradually covers the whole unknown scene. Finally, extensive evaluations on various datasets demonstrate that our method is competitive in both mapping and tracking when targeting unknown environments.

SparseAD: Sparse Query-Centric Paradigm for Efficient End-to-End Autonomous Driving

Apr 10, 2024

Abstract: End-to-end paradigms use a unified framework to handle multiple tasks in an autonomous driving system. Despite their simplicity and clarity, the performance of end-to-end autonomous driving methods on sub-tasks is still far behind that of single-task methods. Meanwhile, the dense BEV features widely used in previous end-to-end methods make it costly to extend to more modalities or tasks. In this paper, we propose a Sparse query-centric paradigm for end-to-end Autonomous Driving (SparseAD), where the sparse queries completely represent the whole driving scenario across space, time and tasks without any dense BEV representation. Concretely, we design a unified sparse architecture for perception tasks including detection, tracking, and online mapping. Moreover, we revisit motion prediction and planning, and devise a more justifiable motion planner framework. On the challenging nuScenes dataset, SparseAD achieves SOTA full-task performance among end-to-end methods and significantly narrows the performance gap between end-to-end paradigms and single-task methods. Code will be released soon.
