Wendong Mao

CDM-QTA: Quantized Training Acceleration for Efficient LoRA Fine-Tuning of Diffusion Model

Apr 08, 2025

Abstract:Fine-tuning large diffusion models for custom applications demands substantial power and time, which poses significant challenges for efficient implementation on mobile devices. In this paper, we develop a novel training accelerator specifically for Low-Rank Adaptation (LoRA) of diffusion models, aiming to streamline the process and reduce computational complexity. By leveraging a fully quantized training scheme for LoRA fine-tuning, we achieve substantial reductions in memory usage and power consumption while maintaining high model fidelity. The proposed accelerator features flexible dataflow, enabling high utilization for irregular and variable tensor shapes during the LoRA process. Experimental results show up to 1.81x training speedup and 5.50x energy efficiency improvements compared to the baseline, with minimal impact on image generation quality.

P$^2$-ViT: Power-of-Two Post-Training Quantization and Acceleration for Fully Quantized Vision Transformer

May 30, 2024

Abstract:Vision Transformers (ViTs) have excelled in computer vision tasks but are memory-consuming and computation-intensive, challenging their deployment on resource-constrained devices. To tackle this limitation, prior works have explored ViT-tailored quantization algorithms but retained floating-point scaling factors, which yield non-negligible re-quantization overhead, limiting ViTs' hardware efficiency and motivating more hardware-friendly solutions. To this end, we propose P$^2$-ViT, the first Power-of-Two (PoT) post-training quantization and acceleration framework to accelerate fully quantized ViTs. Specifically, as for quantization, we explore a dedicated quantization scheme to effectively quantize ViTs with PoT scaling factors, thus minimizing the re-quantization overhead. Furthermore, we propose coarse-to-fine automatic mixed-precision quantization to enable better accuracy-efficiency trade-offs. In terms of hardware, we develop a dedicated chunk-based accelerator featuring multiple tailored sub-processors to individually handle ViTs' different types of operations, alleviating reconfigurable overhead. Additionally, we design a tailored row-stationary dataflow to seize the pipeline processing opportunity introduced by our PoT scaling factors, thereby enhancing throughput. Extensive experiments consistently validate P$^2$-ViT's effectiveness. Particularly, we offer comparable or even superior quantization performance with PoT scaling factors when compared to the counterpart with floating-point scaling factors. Besides, we achieve up to 10.1$\times$ speedup and 36.8$\times$ energy saving over GPU's Turing Tensor Cores, and up to 1.84$\times$ higher computation utilization efficiency against SOTA quantization-based ViT accelerators. Codes are available at https://github.com/shihuihong214/P2-ViT.
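
The abstract's point about re-quantization overhead can be illustrated with a small sketch. It is not taken from the P$^2$-ViT code base; the function names `requantize_pot` and `requantize_float`, the 8-bit output width, and the round-half-up rule are all assumptions chosen for illustration. The idea shown is only that, when a scaling factor is a power of two, rescaling an integer accumulator reduces to an add and a bit shift instead of a floating-point multiply.

```python
import math


def requantize_pot(acc: int, shift: int, bits: int = 8) -> int:
    """Rescale an integer accumulator by 2**-shift using only integer ops.

    Hypothetical helper: rounds half up, then clamps to a signed `bits`-wide range.
    """
    if shift > 0:
        # Adding half of the divisor before shifting gives round-half-up;
        # Python's >> on ints is an arithmetic (floor) shift.
        acc = (acc + (1 << (shift - 1))) >> shift
    lo, hi = -(1 << (bits - 1)), (1 << (bits - 1)) - 1
    return max(lo, min(hi, acc))


def requantize_float(acc: int, scale: float, bits: int = 8) -> int:
    """Reference path with a floating-point scale (same rounding and clamping)."""
    q = math.floor(acc * scale + 0.5)
    lo, hi = -(1 << (bits - 1)), (1 << (bits - 1)) - 1
    return max(lo, min(hi, q))


if __name__ == "__main__":
    # With scale = 2**-4 both paths agree, but the PoT path needs no multiplier.
    print(requantize_pot(1000, 4), requantize_float(1000, 2.0 ** -4))
```

Under these assumptions the two functions return the same value whenever the floating-point scale is exactly `2**-shift`, which is why a PoT scale lets hardware replace the multiplier with a shifter.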

Trio-ViT: Post-Training Quantization and Acceleration for Softmax-Free Efficient Vision Transformer

May 06, 2024

Abstract:Motivated by the huge success of Transformers in the field of natural language processing (NLP), Vision Transformers (ViTs) have been rapidly developed and achieved remarkable performance in various computer vision tasks. However, their huge model sizes and intensive computations hinder ViTs' deployment on embedded devices, calling for effective model compression methods, such as quantization. Unfortunately, due to the existence of hardware-unfriendly and quantization-sensitive non-linear operations, particularly Softmax, it is non-trivial to completely quantize all operations in ViTs, yielding either significant accuracy drops or non-negligible hardware costs. In response to challenges associated with standard ViTs, we focus our attention towards the quantization and acceleration for efficient ViTs, which not only eliminate the troublesome Softmax but also integrate linear attention with low computational complexity, and propose Trio-ViT accordingly. Specifically, at the algorithm level, we develop a tailored post-training quantization engine taking the unique activation distributions of Softmax-free efficient ViTs into full consideration, aiming to boost quantization accuracy. Furthermore, at the hardware level, we build an accelerator dedicated to the specific Convolution-Transformer hybrid architecture of efficient ViTs, thereby enhancing hardware efficiency. Extensive experimental results consistently prove the effectiveness of our Trio-ViT framework. Particularly, we can gain up to 7.2$\times$ and 14.6$\times$ higher FPS under comparable accuracy over state-of-the-art ViT accelerators, as well as 5.9$\times$ and 2.0$\times$ higher DSP efficiency. Codes will be released publicly upon acceptance.

An FPGA-Based Reconfigurable Accelerator for Convolution-Transformer Hybrid EfficientViT

Mar 29, 2024

Abstract:Vision Transformers (ViTs) have achieved significant success in computer vision. However, their intensive computations and massive memory footprint challenge ViTs' deployment on embedded devices, calling for efficient ViTs. Among them, EfficientViT, the state-of-the-art one, features a Convolution-Transformer hybrid architecture, enhancing both accuracy and hardware efficiency. Unfortunately, existing accelerators cannot fully exploit the hardware benefits of EfficientViT due to its unique architecture. In this paper, we propose an FPGA-based accelerator for EfficientViT to advance the hardware efficiency frontier of ViTs. Specifically, we design a reconfigurable architecture to efficiently support various operation types, including lightweight convolutions and attention, boosting hardware utilization. Additionally, we present a time-multiplexed and pipelined dataflow to facilitate both intra- and inter-layer fusions, reducing off-chip data access costs. Experimental results show that our accelerator achieves up to 780.2 GOPS in throughput and 105.1 GOPS/W in energy efficiency at 200MHz on the Xilinx ZCU102 FPGA, which significantly outperforms prior works.

A Computationally Efficient Neural Video Compression Accelerator Based on a Sparse CNN-Transformer Hybrid Network

Dec 19, 2023

Abstract:Video compression is widely used in digital television, surveillance systems, and virtual reality. Real-time video decoding is crucial in practical scenarios. Recently, neural video compression (NVC) combines traditional coding with deep learning, achieving impressive compression efficiency. Nevertheless, the NVC models involve high computational costs and complex memory access patterns, challenging real-time hardware implementations. To relieve this burden, we propose an algorithm and hardware co-design framework named NVCA for video decoding on resource-limited devices. Firstly, a CNN-Transformer hybrid network is developed to improve compression performance by capturing multi-scale non-local features. In addition, we propose a fast algorithm-based sparse strategy that leverages the dual advantages of pruning and fast algorithms, sufficiently reducing computational complexity while maintaining video compression efficiency. Secondly, a reconfigurable sparse computing core is designed to flexibly support sparse convolutions and deconvolutions based on the fast algorithm-based sparse strategy. Furthermore, a novel heterogeneous layer chaining dataflow is incorporated to reduce off-chip memory traffic stemming from extensive inter-frame motion and residual information. Thirdly, the overall architecture of NVCA is designed and synthesized in TSMC 28nm CMOS technology. Extensive experiments demonstrate that our design provides superior coding quality and up to 22.7x decoding speed improvements over other video compression designs. Meanwhile, our design achieves up to 2.2x improvements in energy efficiency compared to prior accelerators.

S2R: Exploring a Double-Win Transformer-Based Framework for Ideal and Blind Super-Resolution

Aug 16, 2023

Abstract:Nowadays, deep learning based methods have demonstrated impressive performance on ideal super-resolution (SR) datasets, but most of these methods incur dramatic performance drops when directly applied to real-world SR reconstruction tasks with unpredictable blur kernels. To tackle this issue, blind SR methods have been proposed to improve the visual results on random blur kernels, but these in turn yield unsatisfactory reconstruction results on ideal low-resolution images. In this paper, we propose a double-win framework for ideal and blind SR tasks, named S2R, including a light-weight transformer-based SR model (S2R transformer) and a novel coarse-to-fine training strategy, which can achieve excellent visual results under both ideal and random fuzzy conditions. At the algorithm level, the S2R transformer smartly combines some efficient and light-weight blocks to enhance the representation ability of extracted features with a relatively low number of parameters. For the training strategy, a coarse-level learning process is first performed to improve the generalization of the network with the help of a large-scale external dataset, and then a fast fine-tuning process is developed to transfer the pre-trained model to real-world SR tasks by mining the internal features of the image. Experimental results show that the proposed S2R outperforms other single-image SR models in the ideal SR condition with only 578K parameters. Meanwhile, it can achieve better visual results than regular blind SR models in blind fuzzy conditions with only 10 gradient updates, which improves convergence speed by 300 times, significantly accelerating the transfer-learning process in real-world situations.

An Efficient FPGA-based Accelerator for Deep Forest

Nov 04, 2022

Abstract:Deep Forest is a prominent machine learning algorithm known for its high accuracy in forecasting. Compared with deep neural networks, Deep Forest has almost no multiplication operations and has better performance on small datasets. However, due to the deep structure and large forest quantity, it suffers from large amounts of calculation and memory consumption. In this paper, an efficient hardware accelerator is proposed for deep forest models, which is also the first work to implement Deep Forest on FPGA. Firstly, a delicate node computing unit (NCU) is designed to improve inference speed. Secondly, based on NCU, an efficient architecture and an adaptive dataflow are proposed, in order to alleviate the problem of node computing imbalance in the classification process. Moreover, an optimized storage scheme in this design also improves hardware utilization and power efficiency. The proposed design is implemented on an Intel Stratix V FPGA board and evaluated on two typical datasets, ADULT and Face Mask Detection. The experimental results show that the proposed design can achieve around 40x speedup compared to a 40-core high-performance x86 CPU.

Accelerate Three-Dimensional Generative Adversarial Networks Using Fast Algorithm

Oct 18, 2022

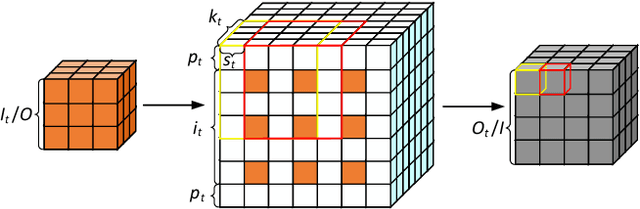

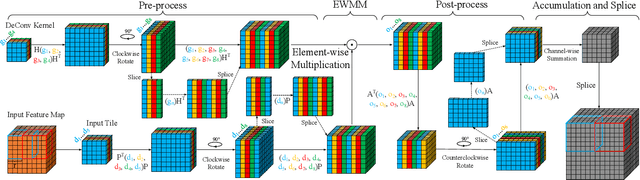

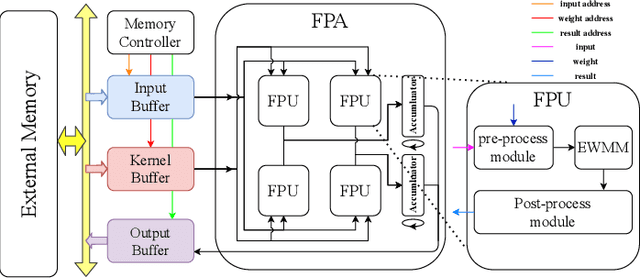

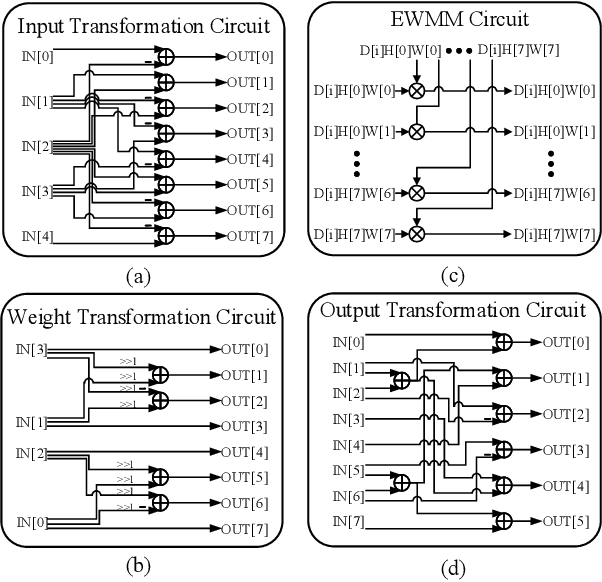

Abstract:Three-dimensional generative adversarial networks (3D-GAN) have attracted widespread attention in three-dimensional (3D) visual tasks. 3D deconvolution (DeConv), as an important computation of 3D-GAN, significantly increases computational complexity compared with 2D DeConv. 3D DeConv has become a bottleneck for the acceleration of 3D-GAN. Previous accelerators suffer from several problems, such as large memory requirements and resource underutilization. To handle the above issues, a fast algorithm for 3D DeConv (F3DC) is proposed in this paper. F3DC applies a fast algorithm to reduce the number of multiplications and achieves a significant algorithmic strength reduction. Besides, F3DC removes the extra memory requirement for overlapped partial sums and avoids computational imbalance to fully utilize resources. Moreover, we design an F3DC-based hardware architecture, which consists of four fast processing units (FPUs). Each FPU includes a pre-process module, an EWMM module and a post-process module for F3DC transformation. By implementing our design on the Xilinx VC709 platform for 3D-GAN, we achieve a throughput of up to 1700 GOPS and a 4$\times$ computational efficiency improvement compared with prior works.

An Efficient FPGA Accelerator for Point Cloud

Oct 14, 2022

Abstract:Deep learning-based point cloud processing plays an important role in various vision tasks, such as autonomous driving, virtual reality (VR), and augmented reality (AR). The submanifold sparse convolutional network (SSCN) has been widely used for the point cloud due to its unique advantages in terms of visual results. However, existing convolutional neural network accelerators suffer from non-trivial performance degradation when employed to accelerate SSCN because of the extreme and unstructured sparsity, and the complex computational dependency between the sparsity of the central activation and the neighborhood ones. In this paper, we propose a high performance FPGA-based accelerator for SSCN. Firstly, we develop a zero removing strategy to remove the coarse-grained redundant regions, thus significantly improving computational efficiency. Secondly, we propose a concise encoding scheme to obtain the matching information for efficient point-wise multiplications. Thirdly, we develop a sparse data matching unit and a computing core based on the proposed encoding scheme, which can convert the irregular sparse operations into regular multiply-accumulate operations. Finally, an efficient hardware architecture for the submanifold sparse convolutional layer is developed and implemented on the Xilinx ZCU102 field-programmable gate array board, where the 3D submanifold sparse U-Net is taken as the benchmark. The experimental results demonstrate that our design drastically improves computational efficiency, and can dramatically improve the power efficiency by 51 times compared to GPU.

Multi-scale Convolution Aggregation and Stochastic Feature Reuse for DenseNets

Oct 02, 2018

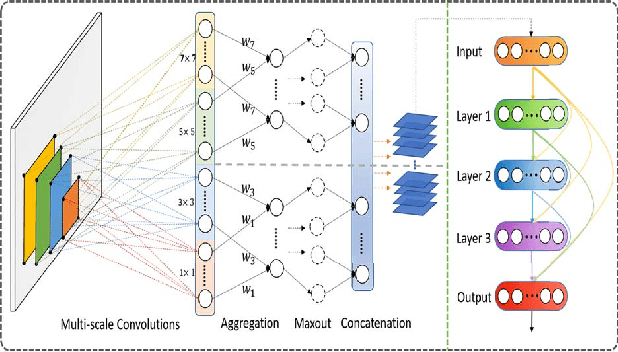

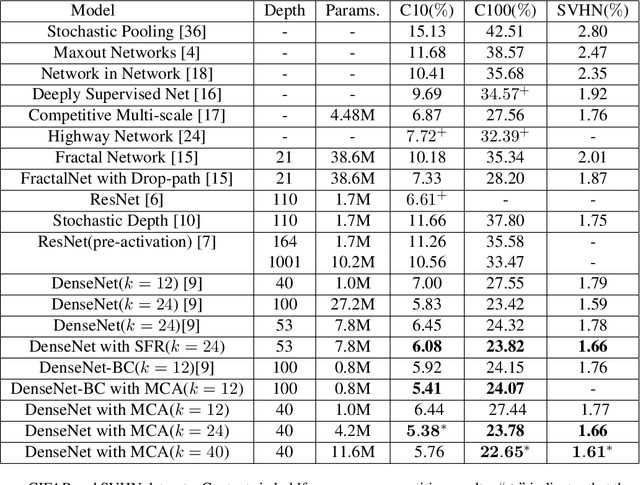

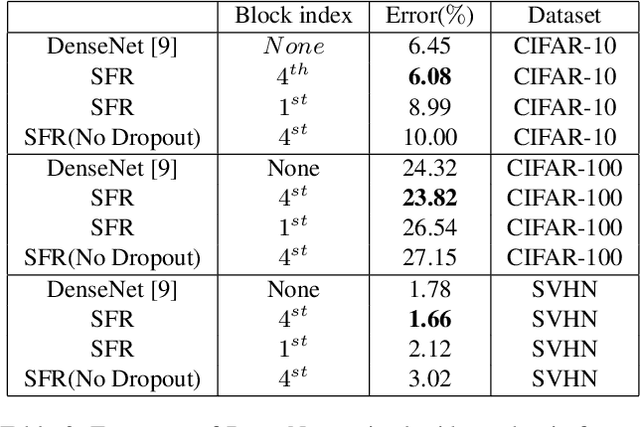

Abstract:Recently, Convolutional Neural Networks (CNNs) have achieved huge success in numerous vision tasks. In particular, DenseNets have demonstrated that feature reuse via dense skip connections can effectively alleviate the difficulty of training very deep networks and that reusing features generated by the initial layers in all subsequent layers has a strong impact on performance. To feed even richer information into the network, a novel adaptive Multi-scale Convolution Aggregation module is presented in this paper. Composed of layers for multi-scale convolutions, trainable cross-scale aggregation, maxout, and concatenation, this module is highly non-linear and can boost the accuracy of DenseNet while using far fewer parameters. In addition, due to high model complexity, the network with extremely dense feature reuse is prone to overfitting. To address this problem, a regularization method named Stochastic Feature Reuse is also presented. By randomly dropping a set of the feature maps to be reused for each mini-batch during the training phase, this regularization method reduces training costs and prevents co-adaptation. Experimental results on the CIFAR-10, CIFAR-100 and SVHN benchmarks demonstrate the effectiveness of the proposed methods.
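
The regularizer described in the abstract, randomly dropping some of the feature maps that a dense layer would reuse, can be sketched as follows. This is only an illustration under stated assumptions, not the paper's implementation: the function name `stochastic_feature_reuse`, the per-feature keep probability `keep_prob`, and the choice to always keep the most recent feature map are hypothetical, and feature maps are stood in for by arbitrary Python objects.

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def stochastic_feature_reuse(
    features: Sequence[T], keep_prob: float, rng: random.Random
) -> List[T]:
    """Pick which earlier feature maps a dense layer reuses for one mini-batch.

    Assumption: each earlier feature map is kept independently with
    probability `keep_prob`; the most recent one is always kept so the
    layer never receives an empty input.
    """
    if not features:
        return []
    *earlier, latest = features
    kept = [f for f in earlier if rng.random() < keep_prob]
    kept.append(latest)
    return kept


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so a run is repeatable
    maps = ["x0", "x1", "x2", "x3"]  # placeholders for real feature tensors
    # One draw per mini-batch during training; at inference all maps are reused.
    print(stochastic_feature_reuse(maps, keep_prob=0.5, rng=rng))
```

In a real network the kept maps would then be concatenated along the channel axis before the next convolution; fewer reused maps per mini-batch means fewer input channels, which is one way such a scheme could reduce training cost.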
