Sergios Gatidis

Towards a Unified Theoretical Framework for Self-Supervised MRI Reconstruction

Jan 08, 2026

Abstract:The demand for high-resolution, non-invasive imaging continues to drive innovation in magnetic resonance imaging (MRI), yet prolonged acquisition times hinder accessibility and real-time applications. While deep learning-based reconstruction methods have accelerated MRI, their predominant supervised paradigm depends on fully-sampled reference data that are challenging to acquire. Recently, self-supervised learning (SSL) approaches have emerged as promising alternatives, but most are empirically designed and fragmented. Therefore, we introduce UNITS (Unified Theory for Self-supervision), a general framework for self-supervised MRI reconstruction. UNITS unifies prior SSL strategies within a common formalism, enabling consistent interpretation and systematic benchmarking. We prove that SSL can achieve the same expected performance as supervised learning. Under this theoretical guarantee, we introduce sampling stochasticity and flexible data utilization, which improve network generalization under out-of-domain distributions and stabilize training. Together, these contributions establish UNITS as a theoretical foundation and a practical paradigm for interpretable, generalizable, and clinically applicable self-supervised MRI reconstruction.

Retrospective motion correction in MRI using disentangled embeddings

Nov 11, 2025

Abstract:Physiological motion can affect the diagnostic quality of magnetic resonance imaging (MRI). While various retrospective motion correction methods exist, many struggle to generalize across different motion types and body regions. In particular, machine learning (ML)-based corrections are often tailored to specific applications and datasets. We hypothesize that motion artifacts, though diverse, share underlying patterns that can be disentangled and exploited. To address this, we propose a hierarchical vector-quantized (VQ) variational auto-encoder that learns a disentangled embedding of motion-to-clean image features. A codebook is deployed to capture a finite collection of motion patterns at multiple resolutions, enabling coarse-to-fine correction. An auto-regressive model is trained to learn the prior distribution of motion-free images and is used at inference to guide the correction process. Unlike conventional approaches, our method does not require artifact-specific training and can generalize to unseen motion patterns. We demonstrate the approach on simulated whole-body motion artifacts and observe robust correction across varying motion severity. Our results suggest that the model effectively disentangles the physical motion in the simulated motion-affected scans, thereby improving the generalizability of ML-based MRI motion correction. Our work on disentangling motion features sheds light on its potential application across anatomical regions and motion types.

Adaptable Cardiovascular Disease Risk Prediction from Heterogeneous Data using Large Language Models

May 30, 2025

Abstract:Cardiovascular disease (CVD) risk prediction models are essential for identifying high-risk individuals and guiding preventive actions. However, existing models struggle with the challenges of real-world clinical practice as they oversimplify patient profiles, rely on rigid input schemas, and are sensitive to distribution shifts. We developed AdaCVD, an adaptable CVD risk prediction framework built on large language models extensively fine-tuned on over half a million participants from the UK Biobank. In benchmark comparisons, AdaCVD surpasses established risk scores and standard machine learning approaches, achieving state-of-the-art performance. Crucially, for the first time, it addresses key clinical challenges across three dimensions: it flexibly incorporates comprehensive yet variable patient information; it seamlessly integrates both structured data and unstructured text; and it rapidly adapts to new patient populations using minimal additional data. In stratified analyses, it demonstrates robust performance across demographic, socioeconomic, and clinical subgroups, including underrepresented cohorts. AdaCVD offers a promising path toward more flexible, AI-driven clinical decision support tools suited to the realities of heterogeneous and dynamic healthcare environments.

Self-supervised feature learning for cardiac Cine MR image reconstruction

May 29, 2025

Abstract:We propose a self-supervised feature learning assisted reconstruction (SSFL-Recon) framework for MRI reconstruction to address the limitations of existing supervised learning methods. Although recent deep learning-based methods have shown promising performance in MRI reconstruction, most require fully-sampled images for supervised learning, which is challenging in practice considering long acquisition times under respiratory or organ motion. Moreover, nearly all fully-sampled datasets are obtained from conventional reconstruction of mildly accelerated datasets, thus potentially biasing the achievable performance. Hence, the numerous undersampled datasets with different accelerations in clinical practice remain underutilized. To address these issues, we first train a self-supervised feature extractor on undersampled images to learn sampling-insensitive features. The pre-learned features are subsequently embedded in the self-supervised reconstruction network to assist in removing artifacts. Experiments were conducted retrospectively on an in-house 2D cardiac Cine dataset, including 91 cardiovascular patients and 38 healthy subjects. The results demonstrate that the proposed SSFL-Recon framework outperforms existing self-supervised MRI reconstruction methods and even exhibits performance comparable to or better than supervised learning up to $16\times$ retrospective undersampling. The feature learning strategy can effectively extract global representations, which have proven beneficial in removing artifacts and increasing generalization ability during reconstruction.

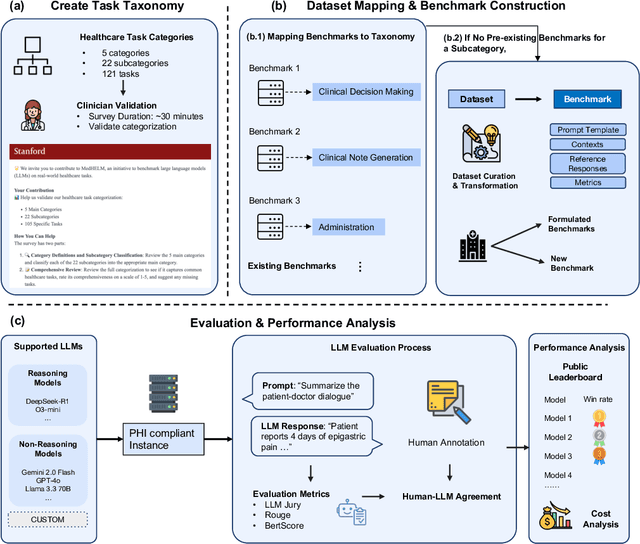

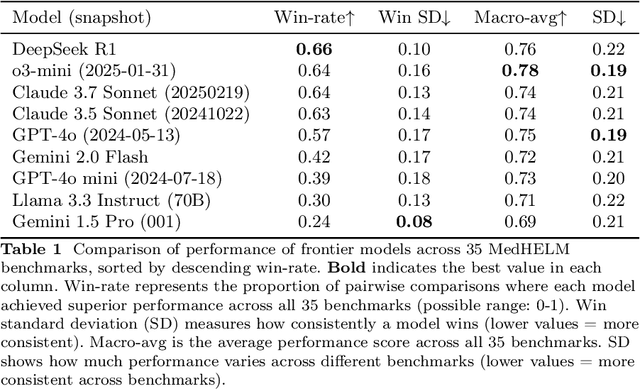

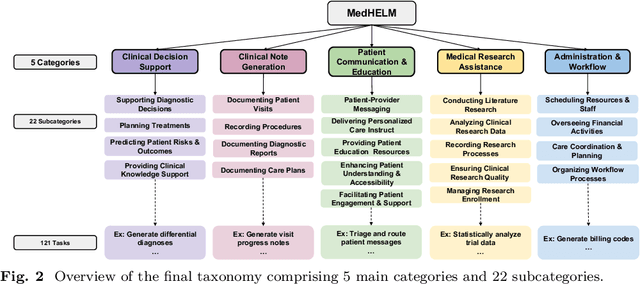

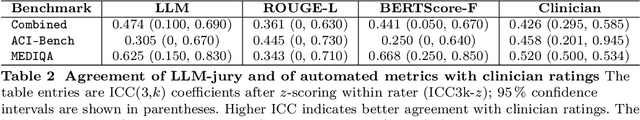

MedHELM: Holistic Evaluation of Large Language Models for Medical Tasks

May 26, 2025

Abstract:While large language models (LLMs) achieve near-perfect scores on medical licensing exams, these evaluations inadequately reflect the complexity and diversity of real-world clinical practice. We introduce MedHELM, an extensible evaluation framework for assessing LLM performance for medical tasks with three key contributions. First, a clinician-validated taxonomy spanning 5 categories, 22 subcategories, and 121 tasks developed with 29 clinicians. Second, a comprehensive benchmark suite comprising 35 benchmarks (17 existing, 18 newly formulated) providing complete coverage of all categories and subcategories in the taxonomy. Third, a systematic comparison of LLMs with improved evaluation methods (using an LLM-jury) and a cost-performance analysis. Evaluation of 9 frontier LLMs, using the 35 benchmarks, revealed significant performance variation. Advanced reasoning models (DeepSeek R1: 66% win-rate; o3-mini: 64% win-rate) demonstrated superior performance, though Claude 3.5 Sonnet achieved comparable results at 40% lower estimated computational cost. On a normalized accuracy scale (0-1), most models performed strongly in Clinical Note Generation (0.73-0.85) and Patient Communication & Education (0.78-0.83), moderately in Medical Research Assistance (0.65-0.75), and generally lower in Clinical Decision Support (0.56-0.72) and Administration & Workflow (0.53-0.63). Our LLM-jury evaluation method achieved good agreement with clinician ratings (ICC = 0.47), surpassing both average clinician-clinician agreement (ICC = 0.43) and automated baselines including ROUGE-L (0.36) and BERTScore-F1 (0.44). These findings highlight the importance of real-world, task-specific evaluation for medical use of LLMs and provide an open source framework to enable this.

Best Practices for Large Language Models in Radiology

Dec 02, 2024

Abstract:At the heart of radiological practice is the challenge of integrating complex imaging data with clinical information to produce actionable insights. Nuanced application of language is key for various activities, including managing requests, describing and interpreting imaging findings in the context of clinical data, and concisely documenting and communicating the outcomes. The emergence of large language models (LLMs) offers an opportunity to improve the management and interpretation of the vast data in radiology. Despite being primarily general-purpose, these advanced computational models demonstrate impressive capabilities in specialized language-related tasks, even without specific training. Unlocking the potential of LLMs for radiology requires basic understanding of their foundations and a strategic approach to navigate their idiosyncrasies. This review, drawing from practical radiology and machine learning expertise and recent literature, provides readers insight into the potential of LLMs in radiology. It examines best practices that have so far stood the test of time in the rapidly evolving landscape of LLMs. This includes practical advice for optimizing LLM characteristics for radiology practices along with limitations, effective prompting, and fine-tuning strategies.

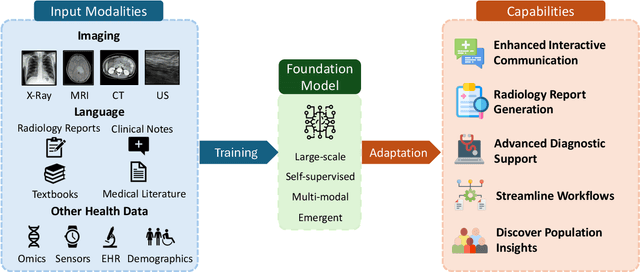

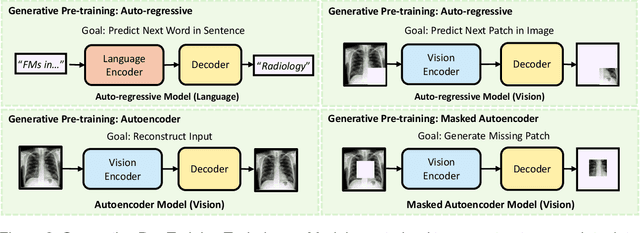

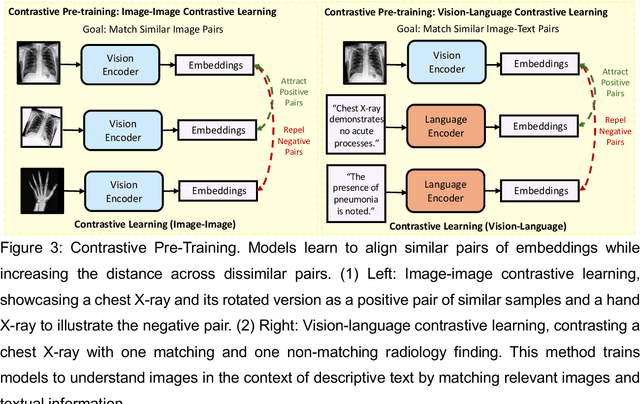

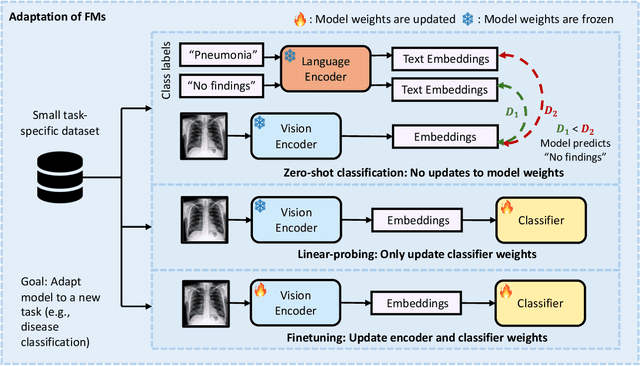

Foundation Models in Radiology: What, How, When, Why and Why Not

Nov 27, 2024

Abstract:Recent advances in artificial intelligence have witnessed the emergence of large-scale deep learning models capable of interpreting and generating both textual and imaging data. Such models, typically referred to as foundation models, are trained on extensive corpora of unlabeled data and demonstrate high performance across various tasks. Foundation models have recently received extensive attention from academic, industry, and regulatory bodies. Given the potentially transformative impact that foundation models can have on the field of radiology, this review aims to establish a standardized terminology concerning foundation models, with a specific focus on the requirements of training data, model training paradigms, model capabilities, and evaluation strategies. We further outline potential pathways to facilitate the training of radiology-specific foundation models, with a critical emphasis on elucidating both the benefits and challenges associated with such models. Overall, we envision that this review can unify technical advances and clinical needs in the training of foundation models for radiology in a safe and responsible manner, for ultimately benefiting patients, providers, and radiologists.

Evaluating and Improving the Effectiveness of Synthetic Chest X-Rays for Medical Image Analysis

Nov 27, 2024

Abstract:Purpose: To explore best-practice approaches for generating synthetic chest X-ray images and augmenting medical imaging datasets to optimize the performance of deep learning models in downstream tasks like classification and segmentation. Materials and Methods: We utilized a latent diffusion model to condition the generation of synthetic chest X-rays on text prompts and/or segmentation masks. We explored methods like using a proxy model and using radiologist feedback to improve the quality of synthetic data. These synthetic images were then generated from relevant disease information or geometrically transformed segmentation masks and added to ground truth training set images from the CheXpert, CANDID-PTX, SIIM, and RSNA Pneumonia datasets to measure improvements in classification and segmentation model performance on the test sets. F1 and Dice scores were used to evaluate classification and segmentation respectively. One-tailed t-tests with Bonferroni correction assessed the statistical significance of performance improvements with synthetic data. Results: Across all experiments, the synthetic data we generated resulted in a maximum mean classification F1 score improvement of 0.150453 (CI: 0.099108-0.201798; P=0.0031) compared to using only real data. For segmentation, the maximum Dice score improvement was 0.14575 (CI: 0.108267-0.183233; P=0.0064). Conclusion: Best practices for generating synthetic chest X-ray images for downstream tasks include conditioning on single-disease labels or geometrically transformed segmentation masks, as well as potentially using proxy modeling for fine-tuning such generations.
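For readers unfamiliar with the two metrics named in this abstract, the following is a minimal sketch of how F1 and Dice scores are commonly computed for binary labels and binary masks. It is not the authors' evaluation code: the function names, the flat-list mask representation, and the convention of returning 1.0 when both inputs are empty are assumptions made for illustration.

```python
def dice_score(pred_mask, true_mask):
    """Dice = 2 * |A ∩ B| / (|A| + |B|) for two flat binary masks."""
    if len(pred_mask) != len(true_mask):
        raise ValueError("masks must have the same length")
    intersection = sum(1 for p, t in zip(pred_mask, true_mask) if p and t)
    total = sum(1 for p in pred_mask if p) + sum(1 for t in true_mask if t)
    # Assumed convention: two empty masks count as a perfect match.
    return 1.0 if total == 0 else 2.0 * intersection / total


def f1_score(pred_labels, true_labels):
    """F1 = 2*TP / (2*TP + FP + FN) for binary class labels."""
    if len(pred_labels) != len(true_labels):
        raise ValueError("label lists must have the same length")
    pairs = list(zip(pred_labels, true_labels))
    tp = sum(1 for p, t in pairs if p and t)
    fp = sum(1 for p, t in pairs if p and not t)
    fn = sum(1 for p, t in pairs if not p and t)
    denom = 2 * tp + fp + fn
    # Assumed convention: no positives anywhere counts as a perfect score.
    return 1.0 if denom == 0 else 2.0 * tp / denom


# Hypothetical toy inputs, flattened to 1D lists.
print(dice_score([1, 1, 0, 0], [1, 0, 0, 0]))
print(f1_score([1, 1, 0, 0], [1, 0, 1, 0]))
```

For binary inputs the two formulas coincide algebraically; they are kept separate here only because the abstract applies them to different tasks (per-image labels versus per-pixel masks).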

Highly efficient non-rigid registration in k-space with application to cardiac Magnetic Resonance Imaging

Oct 24, 2024

Abstract:In Magnetic Resonance Imaging (MRI), motion estimates with high temporal resolution can be useful for image acquisition and reconstruction, MR-guided radiotherapy, dynamic contrast-enhancement, flow and perfusion imaging, and functional assessment of motion patterns in cardiovascular, abdominal, peristaltic, fetal, or musculoskeletal imaging. Conventionally, these motion estimates are derived through image-based registration, a particularly challenging task for complex motion patterns and high dynamic resolution. The accelerated scans in such applications result in imaging artifacts that compromise the motion estimation. In this work, we propose a novel self-supervised deep learning-based framework, dubbed the Local-All Pass Attention Network (LAPANet), for non-rigid motion estimation directly from the acquired accelerated Fourier space, i.e. k-space. The proposed approach models non-rigid motion as the cumulative sum of local translational displacements, following the Local All-Pass (LAP) registration technique. LAPANet was evaluated on cardiac motion estimation across various sampling trajectories and acceleration rates. Our results demonstrate superior accuracy compared to prior conventional and deep learning-based registration methods, accommodating as few as 2 lines/frame in a Cartesian trajectory and 3 spokes/frame in a non-Cartesian trajectory. The achieved high temporal resolution (less than 5 ms) for non-rigid motion opens new avenues for motion detection, tracking and correction in dynamic and real-time MRI applications.
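The link between translational displacement and k-space rests on the Fourier shift theorem: translating a signal multiplies its Fourier coefficients by a linear phase ramp, which is an all-pass operation because the magnitudes are unchanged. The sketch below illustrates only this textbook property on a hypothetical 1D signal with a single global circular shift. It is not LAPANet or the LAP algorithm, and the helper names `dft` and `shift_in_kspace` are assumptions for illustration.

```python
import cmath


def dft(signal):
    """Naive discrete Fourier transform of a 1D sequence."""
    n = len(signal)
    return [
        sum(signal[m] * cmath.exp(-2j * cmath.pi * k * m / n) for m in range(n))
        for k in range(n)
    ]


def shift_in_kspace(kspace, shift):
    """Apply a circular shift by `shift` samples as a phase ramp in k-space."""
    n = len(kspace)
    return [
        kspace[k] * cmath.exp(-2j * cmath.pi * k * shift / n) for k in range(n)
    ]


# Hypothetical 1D "image" and a copy circularly shifted right by 2 samples.
signal = [0, 1, 3, 1, 0, 0, 0, 0]
shifted = signal[-2:] + signal[:-2]

# The DFT of the shifted signal should match the phase-modulated DFT.
direct = dft(shifted)
via_phase = shift_in_kspace(dft(signal), 2)
max_error = max(abs(a - b) for a, b in zip(direct, via_phase))
print(max_error)
```

Non-rigid motion has no such single global phase ramp, which is presumably why the abstract describes it as a sum of local translational displacements.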

Benchmarking Dependence Measures to Prevent Shortcut Learning in Medical Imaging

Jul 29, 2024

Abstract:Medical imaging cohorts are often confounded by factors such as acquisition devices, hospital sites, patient backgrounds, and many more. As a result, deep learning models tend to learn spurious correlations instead of causally related features, limiting their generalizability to new and unseen data. This problem can be addressed by minimizing dependence measures between intermediate representations of task-related and non-task-related variables. These measures include mutual information, distance correlation, and the performance of adversarial classifiers. Here, we benchmark such dependence measures for the task of preventing shortcut learning. We study a simplified setting using Morpho-MNIST and a medical imaging task with CheXpert chest radiographs. Our results provide insights into how to mitigate confounding factors in medical imaging.
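Of the dependence measures listed in this abstract, distance correlation is compact enough to show directly. The following is a minimal sketch of the standard sample distance correlation for two scalar variables, using double-centered pairwise distance matrices. It is not the benchmark's implementation, which operates on intermediate network representations; the function names and toy inputs are assumptions for illustration.

```python
import math


def _centered_distances(values):
    """Pairwise |a - b| distances, double-centered (rows, columns, grand mean)."""
    n = len(values)
    d = [[abs(a - b) for b in values] for a in values]
    row_means = [sum(row) / n for row in d]
    grand_mean = sum(row_means) / n
    # d is symmetric, so column means equal row means.
    return [
        [d[i][j] - row_means[i] - row_means[j] + grand_mean for j in range(n)]
        for i in range(n)
    ]


def distance_correlation(x, y):
    """Sample distance correlation between two equal-length scalar sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    n = len(x)
    a = _centered_distances(x)
    b = _centered_distances(y)
    idx = [(i, j) for i in range(n) for j in range(n)]
    dcov2 = sum(a[i][j] * b[i][j] for i, j in idx) / n**2
    dvar_x = sum(a[i][j] ** 2 for i, j in idx) / n**2
    dvar_y = sum(b[i][j] ** 2 for i, j in idx) / n**2
    denom = math.sqrt(dvar_x * dvar_y)
    if denom == 0:
        return 0.0  # a constant variable carries no dependence
    # Guard against tiny negative values caused by floating-point rounding.
    return math.sqrt(max(dcov2, 0.0) / denom)


# A quadratic relationship: Pearson correlation is zero here,
# but distance correlation still detects the dependence.
print(distance_correlation([-2, -1, 0, 1, 2], [4, 1, 0, 1, 4]))
```

Unlike Pearson correlation, this measure responds to nonlinear dependence, which is one reason it is a candidate penalty when trying to keep task-related features free of information about a confounder.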
