Roman Vaculin

AssetOpsBench: Benchmarking AI Agents for Task Automation in Industrial Asset Operations and Maintenance

Jun 04, 2025

Abstract:AI for Industrial Asset Lifecycle Management aims to automate complex operational workflows -- such as condition monitoring, maintenance planning, and intervention scheduling -- to reduce human workload and minimize system downtime. Traditional AI/ML approaches have primarily tackled these problems in isolation, solving narrow tasks within the broader operational pipeline. In contrast, the emergence of AI agents and large language models (LLMs) introduces a next-generation opportunity: enabling end-to-end automation across the entire asset lifecycle. This paper envisions a future where AI agents autonomously manage tasks that previously required distinct expertise and manual coordination. To this end, we introduce AssetOpsBench -- a unified framework and environment designed to guide the development, orchestration, and evaluation of domain-specific agents tailored for Industry 4.0 applications. We outline the key requirements for such holistic systems and provide actionable insights into building agents that integrate perception, reasoning, and control for real-world industrial operations. The software is available at https://github.com/IBM/AssetOpsBench.

A Supervised Contrastive Learning Pretrain-Finetune Approach for Time Series

Nov 21, 2023

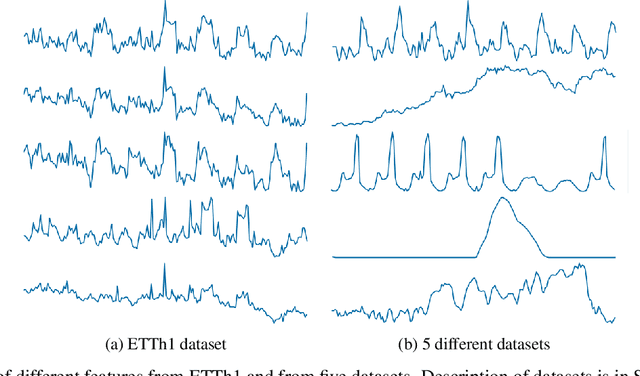

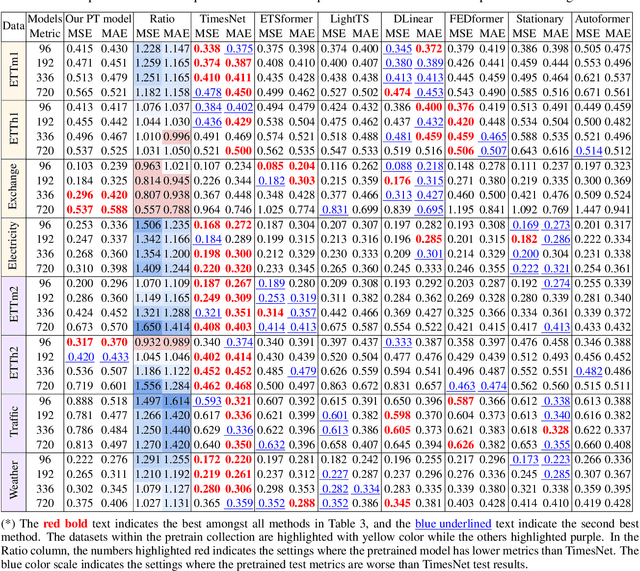

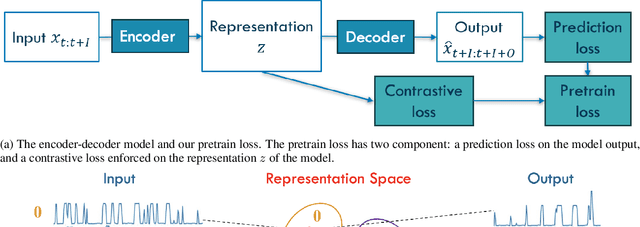

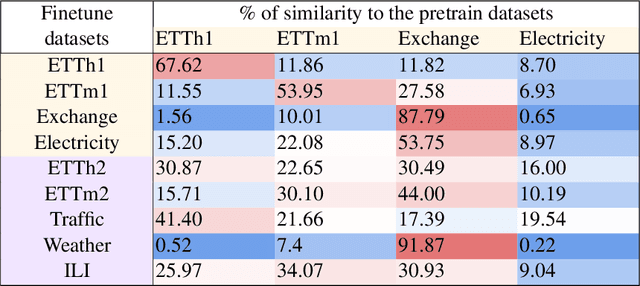

Abstract:Foundation models have recently gained attention within the field of machine learning thanks to their efficiency in broad data processing. While researchers have attempted to extend this success to time series models, the main challenge is effectively extracting representations and transferring knowledge from pretraining datasets to the target finetuning dataset. To tackle this issue, we introduce a novel pretraining procedure that leverages supervised contrastive learning to distinguish features within each pretraining dataset. This pretraining phase enables a probabilistic similarity metric, which assesses the likelihood of a univariate sample being closely related to one of the pretraining datasets. Subsequently, using this similarity metric as a guide, we propose a fine-tuning procedure designed to enhance the accurate prediction of the target data by aligning it more closely with the learned dynamics of the pretraining datasets. Our experiments have shown promising results which demonstrate the efficacy of our approach.
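As a rough illustration of the supervised contrastive objective mentioned in this abstract, the sketch below computes a standard supervised contrastive loss over a handful of embedding vectors. It is not the authors' pretraining code: the function name `supcon_loss`, the temperature value, and the toy embeddings and labels are all assumptions made for the example.

```python
import math


def supcon_loss(embeddings, labels, temperature=0.1):
    """Supervised contrastive loss, averaged over anchors that have a positive.

    For each anchor, samples with the same label are positives and every
    other sample (positive or negative) appears in the softmax denominator.
    """
    # L2-normalise so that dot products are cosine similarities.
    normed = []
    for vec in embeddings:
        norm = math.sqrt(sum(x * x for x in vec))
        normed.append([x / norm for x in vec])

    total, anchors = 0.0, 0
    n = len(normed)
    for i in range(n):
        # Scaled similarity of anchor i to every other sample.
        sims = {
            j: sum(a * b for a, b in zip(normed[i], normed[j])) / temperature
            for j in range(n)
            if j != i
        }
        positives = [j for j in sims if labels[j] == labels[i]]
        if not positives:
            continue  # an anchor without positives contributes nothing
        log_denom = math.log(sum(math.exp(s) for s in sims.values()))
        total += -sum(sims[p] - log_denom for p in positives) / len(positives)
        anchors += 1
    if anchors == 0:
        raise ValueError("no anchor has a positive sample")
    return total / anchors


# Toy embeddings (hypothetical): two clusters in the plane.
toy = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
loss_matching = supcon_loss(toy, [0, 0, 1, 1])    # labels agree with clusters
loss_mismatched = supcon_loss(toy, [0, 1, 0, 1])  # labels cut across clusters
```

The loss is lower when samples sharing a label are also close in embedding space, which is the property a contrastive pretraining phase tries to induce.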

An End-to-End Time Series Model for Simultaneous Imputation and Forecast

Jun 01, 2023

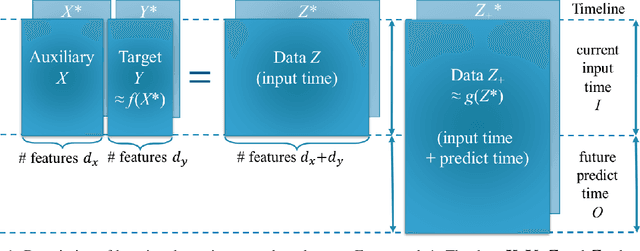

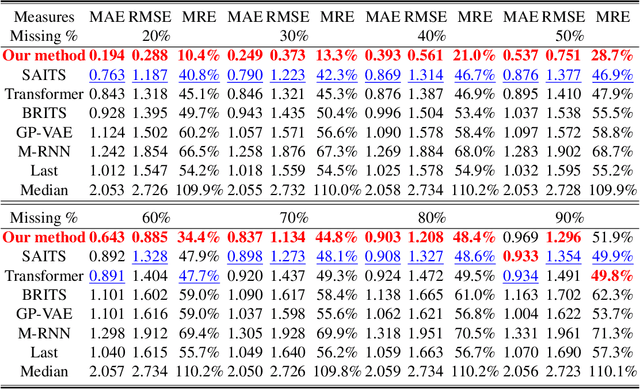

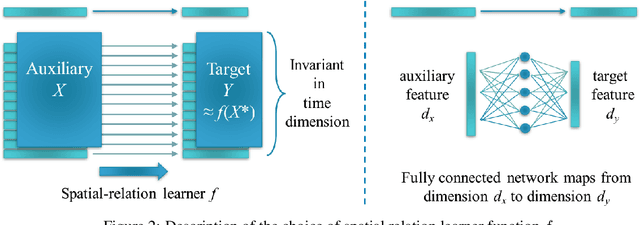

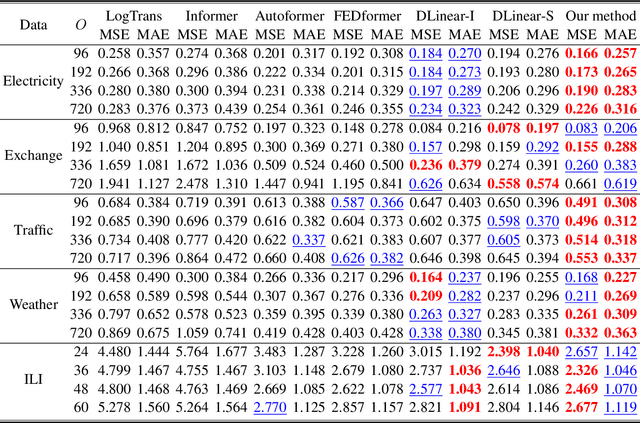

Abstract:Time series forecasting using historical data has been an interesting and challenging topic, especially when the data is corrupted by missing values. In many industrial problems, it is important to learn the inference function between the auxiliary observations and target variables as it provides additional knowledge when the data is not fully observed. We develop an end-to-end time series model that aims to learn such an inference relation and make a multiple-step ahead forecast. Our framework jointly trains two neural networks, one to learn the feature-wise correlations and the other for the modeling of temporal behaviors. Our model is capable of simultaneously imputing the missing entries and making a multiple-step ahead prediction. The experiments show good overall performance of our framework over existing methods in both imputation and forecasting tasks.

TsSHAP: Robust model agnostic feature-based explainability for time series forecasting

Mar 22, 2023

Abstract:A trustworthy machine learning model should be accurate as well as explainable. Understanding why a model makes a certain decision defines the notion of explainability. While various flavors of explainability have been well-studied in supervised learning paradigms like classification and regression, literature on explainability for time series forecasting is relatively scarce. In this paper, we propose a feature-based explainability algorithm, TsSHAP, that can explain the forecast of any black-box forecasting model. The method is agnostic of the forecasting model and can provide explanations for a forecast in terms of interpretable features defined by the user a priori. The explanations are in terms of the SHAP values obtained by applying the TreeSHAP algorithm on a surrogate model that learns a mapping between the interpretable feature space and the forecast of the black-box model. Moreover, we formalize the notion of local, semi-local, and global explanations in the context of time series forecasting, which can be useful in several scenarios. We validate the efficacy and robustness of TsSHAP through extensive experiments on multiple datasets.
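To make the idea of attributing a forecast to interpretable features concrete, the sketch below computes exact Shapley values for a tiny surrogate by enumerating feature coalitions. This is a deliberate substitution: the abstract uses TreeSHAP on a learned surrogate, whereas here the surrogate is a hand-written linear function and the enumeration is brute force. The feature names, weights, and baseline are hypothetical.

```python
from itertools import combinations
from math import factorial


def exact_shapley(predict, x, baseline):
    """Exact Shapley values of predict at x relative to a baseline point.

    Features outside a coalition are replaced by their baseline value.
    Cost grows exponentially with the number of features, so this is only
    meant for a handful of interpretable features.
    """
    n = len(x)

    def value(coalition):
        mixed = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(mixed)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        contribution = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                contribution += weight * (value(s | {i}) - value(s))
        phi.append(contribution)
    return phi


def surrogate(features):
    """Hypothetical surrogate: forecast from [last value, weekly mean, holiday flag]."""
    last_value, weekly_mean, is_holiday = features
    return 0.5 * last_value + 0.3 * weekly_mean + 2.0 * is_holiday


x = [10.0, 8.0, 1.0]         # features of the forecast being explained
baseline = [6.0, 6.0, 0.0]   # reference point, e.g. typical feature values
phi = exact_shapley(surrogate, x, baseline)
```

For a linear surrogate each value reduces to the weight times the feature's deviation from the baseline, and the values sum to `surrogate(x) - surrogate(baseline)`, which gives a quick sanity check on the enumeration.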

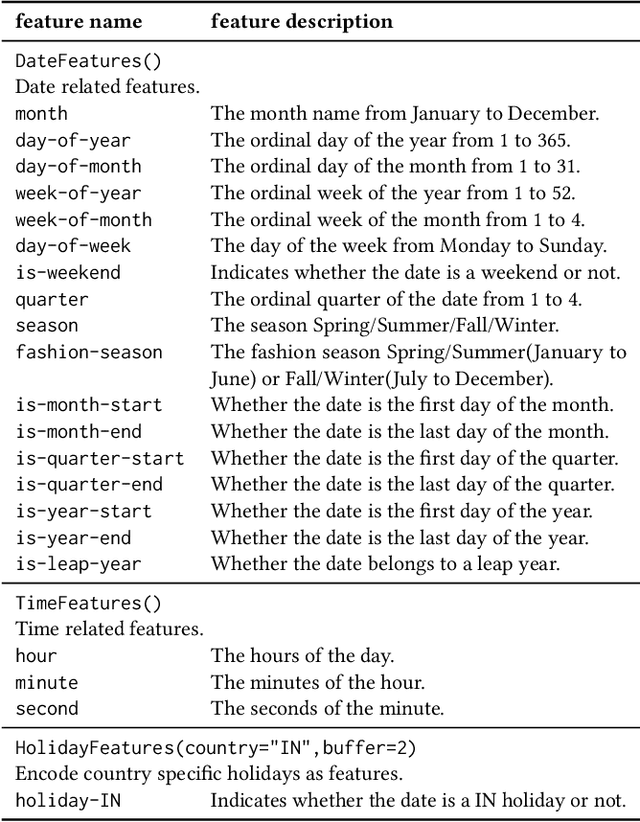

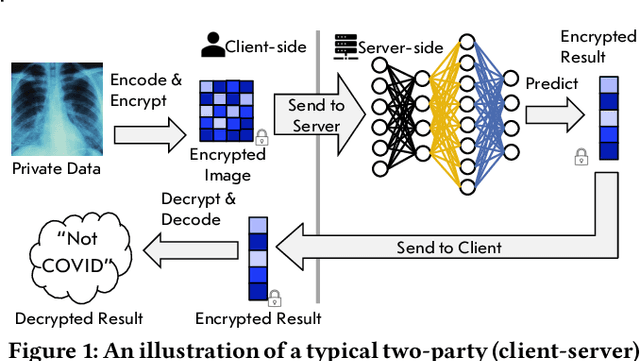

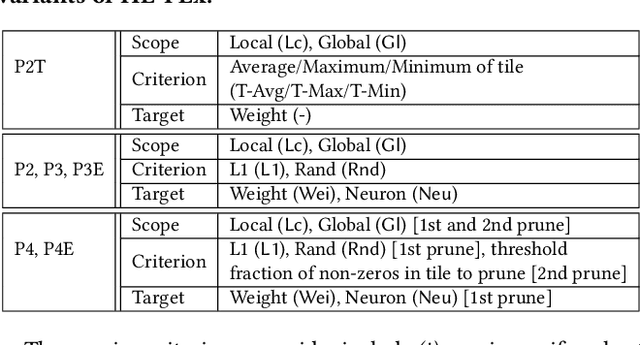

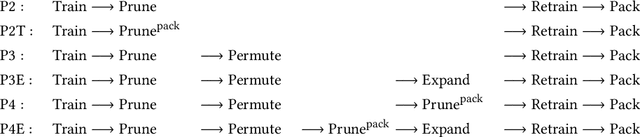

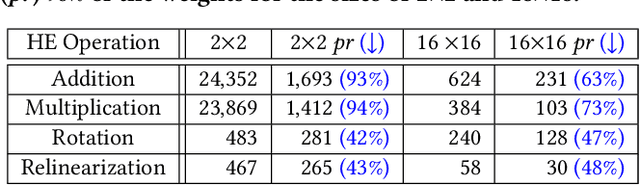

HE-PEx: Efficient Machine Learning under Homomorphic Encryption using Pruning, Permutation and Expansion

Jul 07, 2022

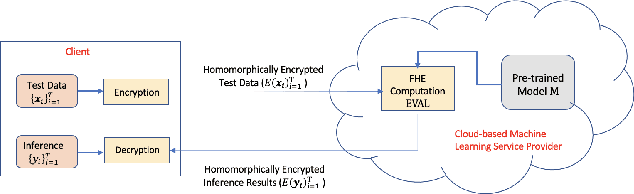

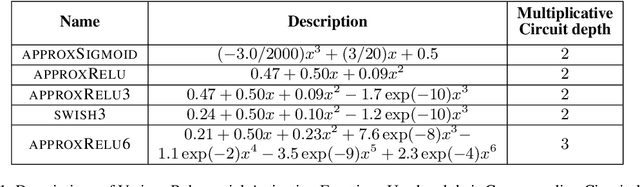

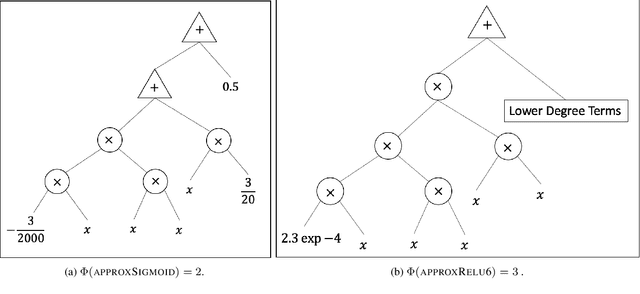

Abstract:Privacy-preserving neural network (NN) inference solutions have recently gained significant traction with several solutions that provide different latency-bandwidth trade-offs. Of these, many rely on homomorphic encryption (HE), a method of performing computations over encrypted data. However, HE operations even with state-of-the-art schemes are still considerably slow compared to their plaintext counterparts. Pruning the parameters of a NN model is a well-known approach to improving inference latency. However, pruning methods that are useful in the plaintext context may lend nearly negligible improvement in the HE case, as has also been demonstrated in recent work. In this work, we propose a novel set of pruning methods that reduce the latency and memory requirement, thus bringing the effectiveness of plaintext pruning methods to HE. Crucially, our proposal employs two key techniques, viz. permutation and expansion of the packed model weights, that enable pruning significantly more ciphertexts and recuperating most of the accuracy loss, respectively. We demonstrate the advantage of our method on fully connected layers where the weights are packed using a recently proposed packing technique called tile tensors, which allows executing deep NN inference in a non-interactive mode. We evaluate our methods on various autoencoder architectures and demonstrate that for a small mean-square reconstruction loss of 1.5*10^{-5} on MNIST, we reduce the memory requirement and latency of HE-enabled inference by 60%.
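The claim that permuting packed weights lets more ciphertexts be pruned can be illustrated with a toy sketch. It assumes that each 2x2 tile of an already-pruned weight matrix stands for one ciphertext, and that a ciphertext can be dropped only when every entry in its tile is zero. The matrix, the tile size, and the hand-picked row order are illustrative; the paper's procedure for finding a good permutation and its expansion step are not reproduced.

```python
def count_zero_tiles(matrix, tile):
    """Count tile x tile blocks whose entries are all zero."""
    rows, cols = len(matrix), len(matrix[0])
    zero_tiles = 0
    for r in range(0, rows, tile):
        for c in range(0, cols, tile):
            block = (
                matrix[i][j]
                for i in range(r, min(r + tile, rows))
                for j in range(c, min(c + tile, cols))
            )
            if all(v == 0 for v in block):
                zero_tiles += 1
    return zero_tiles


def permute_rows(matrix, order):
    """Return a copy of the matrix with rows rearranged in the given order."""
    return [list(matrix[i]) for i in order]


# Pruned weights (0 marks a pruned entry). Half the entries are zero,
# yet no 2x2 tile is entirely zero, so no tile can be skipped as packed.
pruned = [
    [1, 1, 0, 0],
    [0, 0, 1, 1],
    [1, 1, 0, 0],
    [0, 0, 1, 1],
]
before = count_zero_tiles(pruned, 2)

# Grouping rows with the same sparsity pattern lines the zeros up with tiles.
after = count_zero_tiles(permute_rows(pruned, [0, 2, 1, 3]), 2)
```

In a real network, permuting the rows of one layer's weight matrix reorders that layer's outputs, so the adjacent layer has to be permuted consistently for the model to compute the same function.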

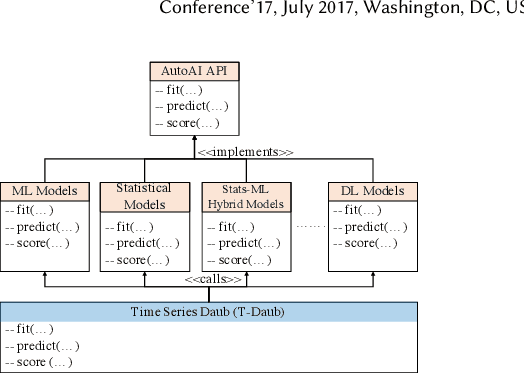

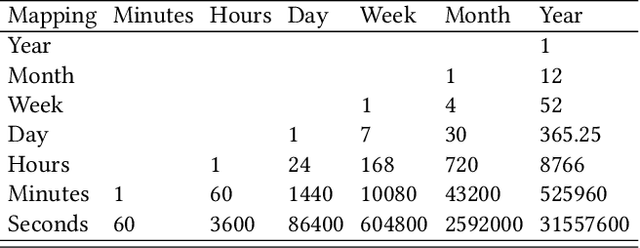

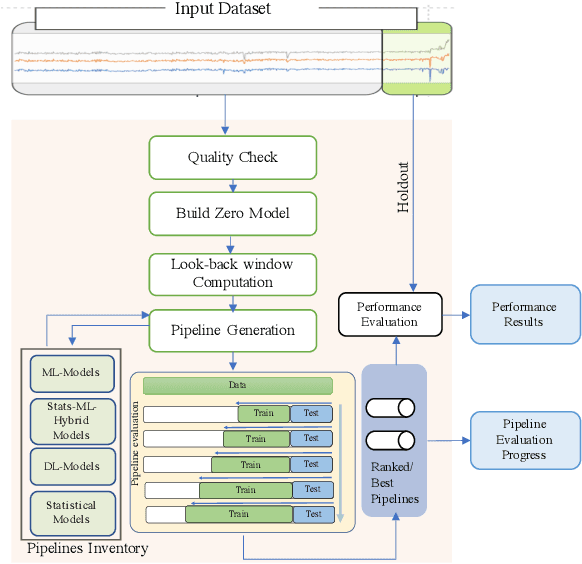

AutoAI-TS: AutoAI for Time Series Forecasting

Mar 08, 2021

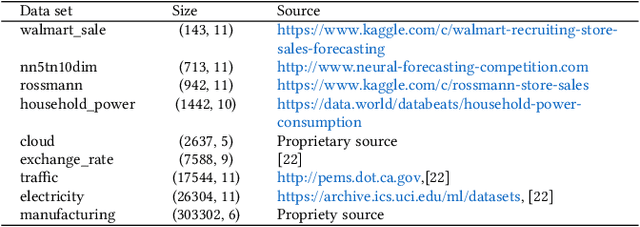

Abstract:A large number of time series forecasting models including traditional statistical models, machine learning models and more recently deep learning have been proposed in the literature. However, choosing the right model along with good parameter values that perform well on a given dataset is still challenging. Automatically providing a good set of models to users for a given dataset saves both time and effort from using trial-and-error approaches with a wide variety of available models along with parameter optimization. We present AutoAI for Time Series Forecasting (AutoAI-TS) that provides users with a zero configuration (zero-conf) system to efficiently train, optimize and choose the best forecasting model among various classes of models for the given dataset. With its flexible zero-conf design, AutoAI-TS automatically performs all the data preparation, model creation, parameter optimization, training and model selection for users and provides a trained model that is ready to use. For given data, AutoAI-TS utilizes a wide variety of models including classical statistical models, Machine Learning (ML) models, statistical-ML hybrid models and deep learning models along with various transformations to create forecasting pipelines. It then evaluates and ranks pipelines using the proposed T-Daub mechanism to choose the best pipeline. The paper describes in detail all the technical aspects of AutoAI-TS along with extensive benchmarking on a variety of real world data sets for various use-cases. Benchmark results show that AutoAI-TS, with no manual configuration from the user, automatically trains and selects pipelines that on average outperform existing state-of-the-art time series forecasting toolkits.

Efficient Encrypted Inference on Ensembles of Decision Trees

Mar 05, 2021

Abstract:Data privacy concerns often prevent the use of cloud-based machine learning services for sensitive personal data. While homomorphic encryption (HE) offers a potential solution by enabling computations on encrypted data, the challenge is to obtain accurate machine learning models that work within the multiplicative depth constraints of a leveled HE scheme. Existing approaches for encrypted inference either make ad-hoc simplifications to a pre-trained model (e.g., replace hard comparisons in a decision tree with soft comparators) at the cost of accuracy or directly train a new depth-constrained model using the original training set. In this work, we propose a framework to transfer knowledge extracted by complex decision tree ensembles to shallow neural networks (referred to as DTNets) that are highly conducive to encrypted inference. Our approach minimizes the accuracy loss by searching for the best DTNet architecture that operates within the given depth constraints and training this DTNet using only synthetic data sampled from the training data distribution. Extensive experiments on real-world datasets demonstrate that these characteristics are critical in ensuring that DTNet accuracy approaches that of the original tree ensemble. Our system is highly scalable and can perform efficient inference on batched encrypted (134 bits of security) data with amortized time in milliseconds. This is approximately three orders of magnitude faster than the standard approach of applying soft comparison at the internal nodes of the ensemble trees.

Differentially Private Distributed Data Summarization under Covariate Shift

Oct 28, 2019

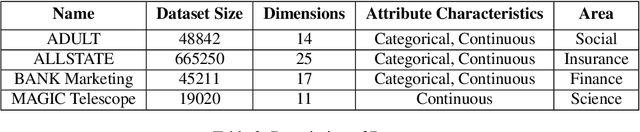

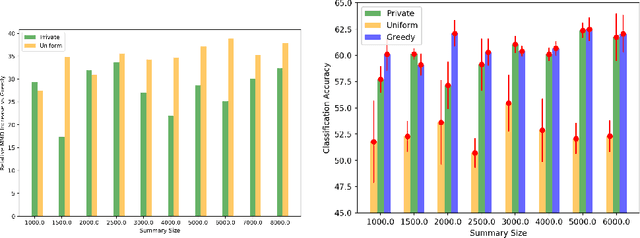

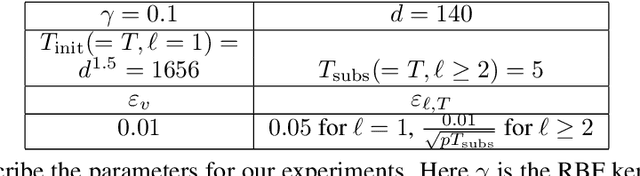

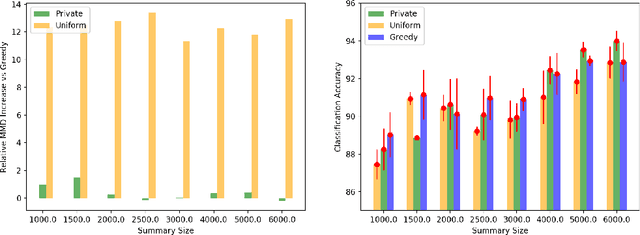

Abstract:We envision AI marketplaces to be platforms where consumers, with very little data for a target task, can obtain a relevant model by accessing many private data sources with a vast number of data samples. One of the key challenges is to construct a training dataset that matches a target task without compromising the privacy of the data sources. To this end, we consider the following distributed data summarization problem. Given K private source datasets denoted by $[D_i]_{i\in [K]}$ and a small target validation set $D_v$, which may involve a considerable covariate shift with respect to the sources, compute a summary dataset $D_s\subseteq \bigcup_{i\in [K]} D_i$ such that its statistical distance from the validation dataset $D_v$ is minimized. We use the popular Maximum Mean Discrepancy as the measure of statistical distance. The non-private problem has received considerable attention in prior art, for example in prototype selection (Kim et al., NIPS 2016). Our work is the first to obtain strong differential privacy guarantees while ensuring the quality guarantees of the non-private version. We study this problem in a Parsimonious Curator Privacy Model, where a trusted curator coordinates the summarization process while minimizing the amount of private information accessed. Our central result is a novel protocol that (a) ensures the curator accesses at most $O(K^{\frac{1}{3}}|D_s| + |D_v|)$ points (b) has formal privacy guarantees on the leakage of information between the data owners and (c) closely matches the best known non-private greedy algorithm. Our protocol uses two hash functions, one inspired by the Rahimi-Recht random features method and the second leverages state of the art differential privacy mechanisms. We introduce a novel "noiseless" differentially private auctioning protocol for winner notification and demonstrate the efficacy of our protocol using real-world datasets.
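The objective in this abstract, choosing a summary $D_s$ whose Maximum Mean Discrepancy to $D_v$ is small, can be sketched in its simplest non-private form. The code below uses a Gaussian (RBF) kernel, the biased MMD estimate, and a plain greedy selection over a pooled dataset. The kernel choice, the `gamma` value, and the toy data are assumptions, and none of the paper's privacy mechanisms or curator protocol appear here.

```python
import math


def rbf(a, b, gamma=1.0):
    """Gaussian kernel between two points given as tuples of floats."""
    return math.exp(-gamma * sum((p - q) ** 2 for p, q in zip(a, b)))


def mmd2(xs, ys, gamma=1.0):
    """Biased estimate of squared MMD between two samples."""
    kxx = sum(rbf(a, b, gamma) for a in xs for b in xs) / len(xs) ** 2
    kyy = sum(rbf(a, b, gamma) for a in ys for b in ys) / len(ys) ** 2
    kxy = sum(rbf(a, b, gamma) for a in xs for b in ys) / (len(xs) * len(ys))
    return kxx + kyy - 2.0 * kxy


def greedy_summary(pool, validation, size, gamma=1.0):
    """Greedily pick points from the pool that most reduce MMD to validation."""
    summary, remaining = [], list(pool)
    for _ in range(size):
        best = min(remaining, key=lambda p: mmd2(summary + [p], validation, gamma))
        summary.append(best)
        remaining.remove(best)
    return summary


# Toy 1-D pool (union of the sources) and a small validation set near 5.
pool = [(0.0,), (0.1,), (5.0,), (5.2,), (9.0,)]
validation = [(5.1,), (4.9,)]
summary = greedy_summary(pool, validation, size=2)
```

With this toy data the greedy step keeps the two pool points closest to the validation set, which is the behaviour expected when the sources are shifted relative to the target task.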

Trusted Multi-Party Computation and Verifiable Simulations: A Scalable Blockchain Approach

Sep 22, 2018

Abstract:Large-scale computational experiments, often running over weeks and over large datasets, are used extensively in fields such as epidemiology, meteorology, computational biology, and healthcare to understand phenomena, and design high-stakes policies affecting everyday health and economy. For instance, the OpenMalaria framework is a computationally-intensive simulation used by various non-governmental and governmental agencies to understand malarial disease spread and effectiveness of intervention strategies, and subsequently design healthcare policies. Given that such shared results form the basis of inferences drawn, technological solutions designed, and day-to-day policies drafted, it is essential that the computations are validated and trusted. In particular, in a multi-agent environment involving several independent computing agents, a notion of trust in results generated by peers is critical in facilitating transparency, accountability, and collaboration. Using a novel combination of distributed validation of atomic computation blocks and a blockchain-based immutable audit mechanism, this work proposes a universal framework for distributed trust in computations. In particular, we address the scalability problem by reducing the storage and communication costs using a lossy compression scheme. This framework guarantees not only verifiability of final results, but also the validity of local computations, and its cost-benefit tradeoffs are studied using a synthetic example of training a neural network.
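The notion of an immutable audit trail over atomic computation blocks can be sketched with a simple hash chain, in which each record commits to the previous one so that later tampering is detectable. This is only the chaining idea: the record layout, function names, and payloads below are hypothetical, and the distributed validation, consensus, and lossy compression described in the abstract are not modelled.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash preceding the first block


def block_hash(prev_hash, payload):
    """SHA-256 over the previous hash and a canonical JSON form of the payload."""
    body = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_block(chain, payload):
    """Append a record of one computation step to the audit chain."""
    prev = chain[-1]["hash"] if chain else GENESIS
    chain.append({"prev": prev, "payload": payload, "hash": block_hash(prev, payload)})


def verify_chain(chain):
    """Recompute every hash and check that each block links to its predecessor."""
    prev = GENESIS
    for block in chain:
        if block["prev"] != prev:
            return False
        if block["hash"] != block_hash(prev, block["payload"]):
            return False
        prev = block["hash"]
    return True


# Record three steps of a hypothetical iterative computation.
audit = []
for step, state in enumerate([0.91, 0.74, 0.63]):
    append_block(audit, {"step": step, "loss": state})

intact = verify_chain(audit)      # chain as written
audit[1]["payload"]["loss"] = 0.1  # a peer alters an intermediate result
tampered = verify_chain(audit)    # the stored hash no longer matches
```

Because each hash covers the previous hash, changing any recorded step invalidates that block and every block after it unless all of them are recomputed.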

Similarity Preserving Representation Learning for Time Series Analysis

Mar 09, 2017

Abstract:A considerable number of machine learning algorithms take instance-feature matrices as their inputs. As such, they cannot directly analyze time series data due to its temporal nature, usually unequal lengths, and complex properties. This is a great pity since many of these algorithms are effective, robust, efficient, and easy to use. In this paper, we bridge this gap by proposing an efficient representation learning framework that is able to convert a set of time series with equal or unequal lengths to a matrix format. In particular, we guarantee that the pairwise similarities between time series are well preserved after the transformation. The learned feature representation is particularly suitable to the class of learning problems that are sensitive to data similarities. Given a set of $n$ time series, we first construct an $n\times n$ partially observed similarity matrix by randomly sampling $O(n \log n)$ pairs of time series and computing their pairwise similarities. We then propose an extremely efficient algorithm that solves a highly non-convex and NP-hard problem to learn new features based on the partially observed similarity matrix. We use the learned features to conduct experiments on both data classification and clustering tasks. Our extensive experimental results demonstrate that the proposed framework is both effective and efficient.
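The first step described in this abstract, sampling on the order of $n \log n$ pairs and filling in a partially observed similarity matrix, can be sketched as follows. The similarity measure is an assumption: the example uses `exp(-DTW distance)` because dynamic time warping handles unequal lengths, but the abstract does not say which measure is used. The constant `c`, the function names, and the toy series are likewise illustrative, and the feature-learning step that follows is not shown.

```python
import math
import random


def dtw_distance(a, b):
    """Dynamic time warping distance with absolute-difference cost."""
    inf = float("inf")
    prev = [0.0] + [inf] * len(b)
    for x in a:
        cur = [inf]
        for j, y in enumerate(b, start=1):
            cost = abs(x - y)
            cur.append(cost + min(prev[j], prev[j - 1], cur[j - 1]))
        prev = cur
    return prev[-1]


def sample_similarities(series, c=1.0, seed=0):
    """Observe about c * n * log(n) random pairs of an n x n similarity matrix.

    Returns a dict mapping (i, j) with i < j to a similarity in (0, 1].
    Unsampled entries are simply absent, i.e. unobserved.
    """
    n = len(series)
    if n < 2:
        return {}
    total_pairs = n * (n - 1) // 2
    budget = min(total_pairs, math.ceil(c * n * math.log(n)))
    rng = random.Random(seed)
    observed = {}
    while len(observed) < budget:
        i, j = rng.sample(range(n), 2)
        key = (min(i, j), max(i, j))
        if key not in observed:
            observed[key] = math.exp(-dtw_distance(series[i], series[j]))
    return observed


# Six toy series of unequal length.
series = [
    [1.0, 2.0, 3.0],
    [1.0, 2.0, 2.0, 3.0],
    [3.0, 2.0, 1.0],
    [0.0, 0.0, 0.0, 0.0, 0.0],
    [1.0, 1.5, 2.0, 2.5, 3.0],
    [5.0, 5.0],
]
observed = sample_similarities(series)
```

Only the sampled pairs require a similarity computation, which is where the saving over filling in all $n(n-1)/2$ entries comes from when $n$ is large.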
