Nupur Aggarwal

TsSHAP: Robust model agnostic feature-based explainability for time series forecasting

Mar 22, 2023

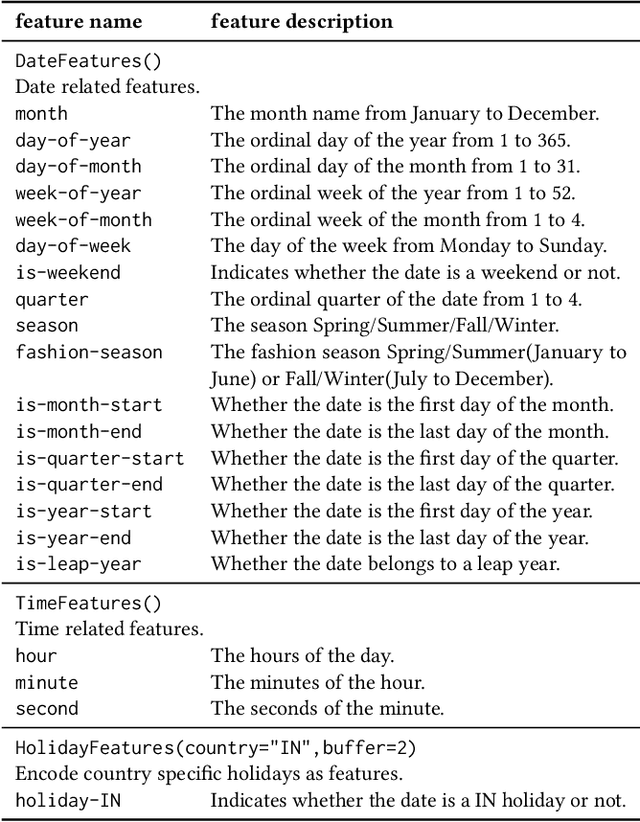

Abstract: A trustworthy machine learning model should be accurate as well as explainable. Understanding why a model makes a certain decision defines the notion of explainability. While various flavors of explainability have been well-studied in supervised learning paradigms like classification and regression, literature on explainability for time series forecasting is relatively scarce. In this paper, we propose a feature-based explainability algorithm, TsSHAP, that can explain the forecast of any black-box forecasting model. The method is agnostic to the forecasting model and can provide explanations for a forecast in terms of interpretable features defined by the user a priori. The explanations are in terms of the SHAP values obtained by applying the TreeSHAP algorithm on a surrogate model that learns a mapping between the interpretable feature space and the forecast of the black-box model. Moreover, we formalize the notion of local, semi-local, and global explanations in the context of time series forecasting, which can be useful in several scenarios. We validate the efficacy and robustness of TsSHAP through extensive experiments on multiple datasets.
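
To make the attribution step concrete, here is a minimal sketch of what SHAP values over a surrogate look like. It is not the paper's implementation: TreeSHAP computes these values efficiently for tree ensembles, whereas this sketch swaps in brute-force enumeration of exact Shapley values, which is only practical for a handful of features. The `surrogate` function, its coefficients, the feature names (`lag_1`, `lag_7`, `rolling_mean`), and the baseline are all hypothetical stand-ins for a model fitted to a black-box forecaster's outputs.

```python
from itertools import combinations
from math import factorial


def shapley_values(f, x, baseline):
    """Exact Shapley values of f at x, with absent features set to baseline."""
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for k in range(n):  # k = size of the coalition that excludes feature i
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                with_i = [x[j] if (j in subset or j == i) else baseline[j]
                          for j in range(n)]
                without_i = [x[j] if j in subset else baseline[j]
                             for j in range(n)]
                phi[i] += weight * (f(with_i) - f(without_i))
    return phi


def surrogate(features):
    # Hypothetical surrogate: in practice this would be a model trained to
    # mimic the black-box forecast from user-defined interpretable features.
    lag_1, lag_7, rolling_mean = features
    return 0.5 * lag_1 + 0.3 * lag_7 + 0.2 * rolling_mean + 0.1 * lag_1 * lag_7


x = [10.0, 8.0, 9.0]         # feature values for the forecast being explained
baseline = [6.0, 6.0, 6.0]   # assumed reference point (e.g. feature averages)
phi = shapley_values(surrogate, x, baseline)

for name, value in zip(["lag_1", "lag_7", "rolling_mean"], phi):
    print(f"{name}: {value:+.3f}")
```

The attributions sum to the difference between the surrogate's output at `x` and at the baseline, which is the property that lets each value be read as that feature's share of the forecast.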

Hyper-local sustainable assortment planning

Jul 27, 2020

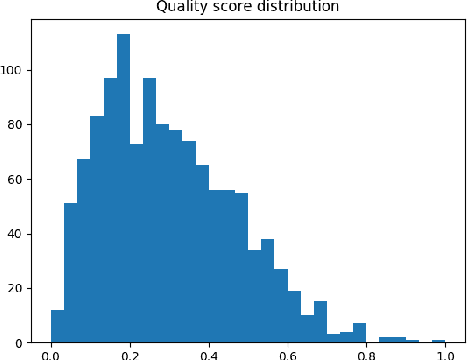

Abstract: Assortment planning, an important seasonal activity for any retailer, involves choosing the right subset of products to stock in each store. While existing approaches only maximize the expected revenue, we propose including the environmental impact too, through the Higg Material Sustainability Index. The trade-off between revenue and environmental impact is balanced through a multi-objective optimization approach that yields a Pareto front of optimal assortments for merchandisers to choose from. Using the proposed approach on a few product categories of a leading fashion retailer shows that it is possible to choose assortments with lower environmental impact at minimal cost to revenue.
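
The idea of a Pareto front can be illustrated with a small sketch. It only filters a given list of candidates down to the non-dominated ones; it does not reproduce the paper's optimization procedure. The candidate assortments and their numbers are invented for illustration, and the code assumes that a lower impact score is better.

```python
def pareto_front(candidates):
    """Keep candidates not dominated by any other.

    Each candidate is (name, revenue, impact); revenue is maximized and
    impact is minimized. One candidate dominates another if it is at least
    as good on both objectives and strictly better on one.
    """
    front = []
    for name, revenue, impact in candidates:
        dominated = any(
            (r2 >= revenue and i2 <= impact) and (r2 > revenue or i2 < impact)
            for _, r2, i2 in candidates
        )
        if not dominated:
            front.append((name, revenue, impact))
    return front


# Hypothetical assortments: (name, expected revenue, environmental impact)
assortments = [
    ("A", 100, 50),
    ("B", 95, 30),
    ("C", 90, 45),
    ("D", 80, 20),
    ("E", 100, 60),
]

front = pareto_front(assortments)
print([name for name, _, _ in front])
```

In this toy data, moving from A to B gives up a little revenue for a large drop in impact, which is the kind of trade-off a merchandiser would weigh when picking from the front.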
