Syed Yousaf Shah

AutoAI-TS: AutoAI for Time Series Forecasting

Mar 08, 2021

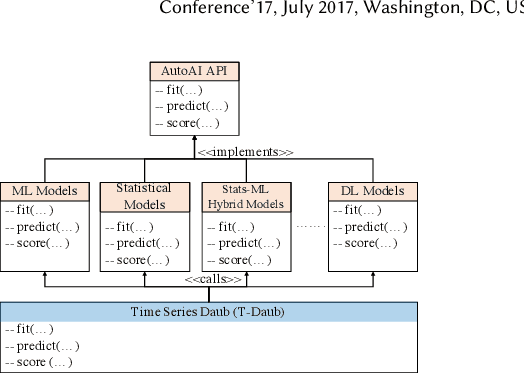

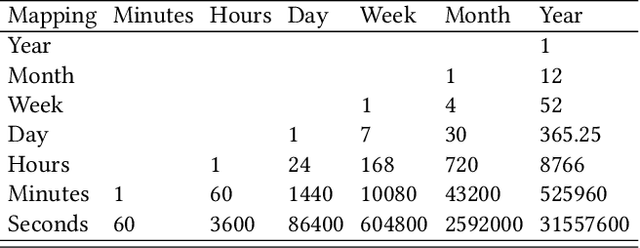

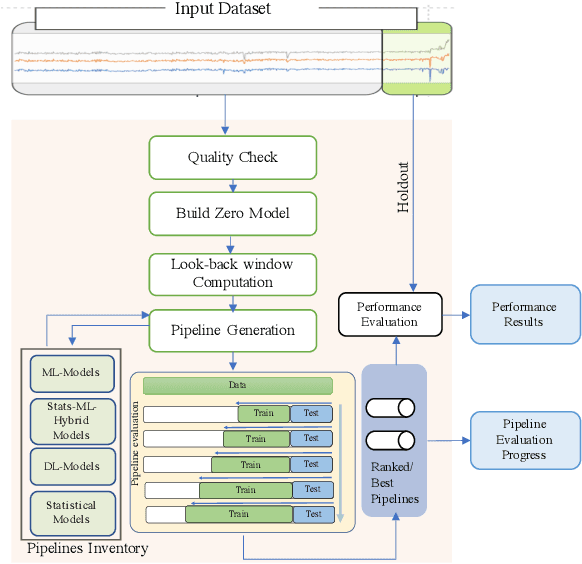

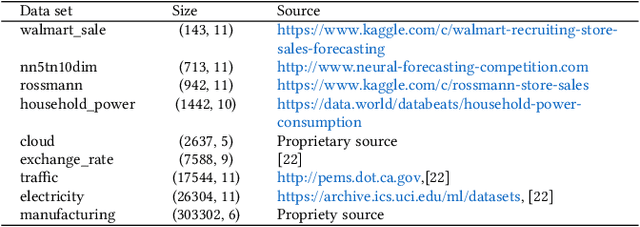

Abstract: A large number of time series forecasting models, including traditional statistical models, machine learning models, and more recently deep learning models, have been proposed in the literature. However, choosing the right model, along with good parameter values, that performs well on a given dataset is still challenging. Automatically providing a good set of models to users for a given dataset saves the time and effort of trial-and-error with a wide variety of available models and their parameter optimization. We present AutoAI for Time Series Forecasting (AutoAI-TS), which provides users with a zero-configuration (zero-conf) system to efficiently train, optimize, and choose the best forecasting model among various classes of models for the given dataset. With its flexible zero-conf design, AutoAI-TS automatically performs all the data preparation, model creation, parameter optimization, training, and model selection for users and provides a trained model that is ready to use. For given data, AutoAI-TS utilizes a wide variety of models, including classical statistical models, Machine Learning (ML) models, statistical-ML hybrid models, and deep learning models, along with various transformations to create forecasting pipelines. It then evaluates and ranks pipelines using the proposed T-Daub mechanism to choose the best pipeline. This paper describes in detail all the technical aspects of AutoAI-TS, along with extensive benchmarking on a variety of real-world datasets for various use cases. Benchmark results show that AutoAI-TS, with no manual configuration from the user, automatically trains and selects pipelines that on average outperform existing state-of-the-art time series forecasting toolkits.
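The "evaluate and rank pipelines" step in the abstract above can be illustrated with a minimal sketch. This is not T-Daub, whose details the abstract does not give; it is a plain holdout ranking by mean absolute error. The three toy forecasters and every function name here are assumptions made for illustration.

```python
from statistics import mean


def naive_last(train, horizon):
    """Repeat the last observed value over the forecast horizon."""
    return [train[-1]] * horizon


def mean_forecast(train, horizon):
    """Repeat the mean of the training data over the forecast horizon."""
    return [mean(train)] * horizon


def drift(train, horizon):
    """Extend the straight line through the first and last training points."""
    slope = (train[-1] - train[0]) / (len(train) - 1)
    return [train[-1] + slope * (h + 1) for h in range(horizon)]


def mae(actual, predicted):
    """Mean absolute error between two equally long sequences."""
    return mean(abs(a - p) for a, p in zip(actual, predicted))


def rank_pipelines(series, pipelines, holdout):
    """Score each pipeline on the last `holdout` points; best (lowest MAE) first."""
    train, test = series[:-holdout], series[-holdout:]
    scores = {name: mae(test, fn(train, holdout)) for name, fn in pipelines.items()}
    return sorted(scores.items(), key=lambda item: item[1])


# Hypothetical candidate set; a real system would include far richer models.
PIPELINES = {"naive_last": naive_last, "mean": mean_forecast, "drift": drift}

series = [float(2 * t + 1) for t in range(20)]  # synthetic linear trend
ranking = rank_pipelines(series, PIPELINES, holdout=5)
for name, score in ranking:
    print(f"{name}: MAE={score:.3f}")
```

On this synthetic linear trend the drift forecaster ranks first, since it extrapolates the trend exactly. A single holdout split is the simplest possible evaluation; it says nothing about how the paper allocates data or compute across pipelines.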

"The Squawk Bot": Joint Learning of Time Series and Text Data Modalities for Automated Financial Information Filtering

Dec 20, 2019

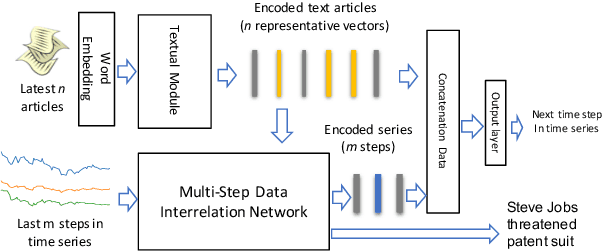

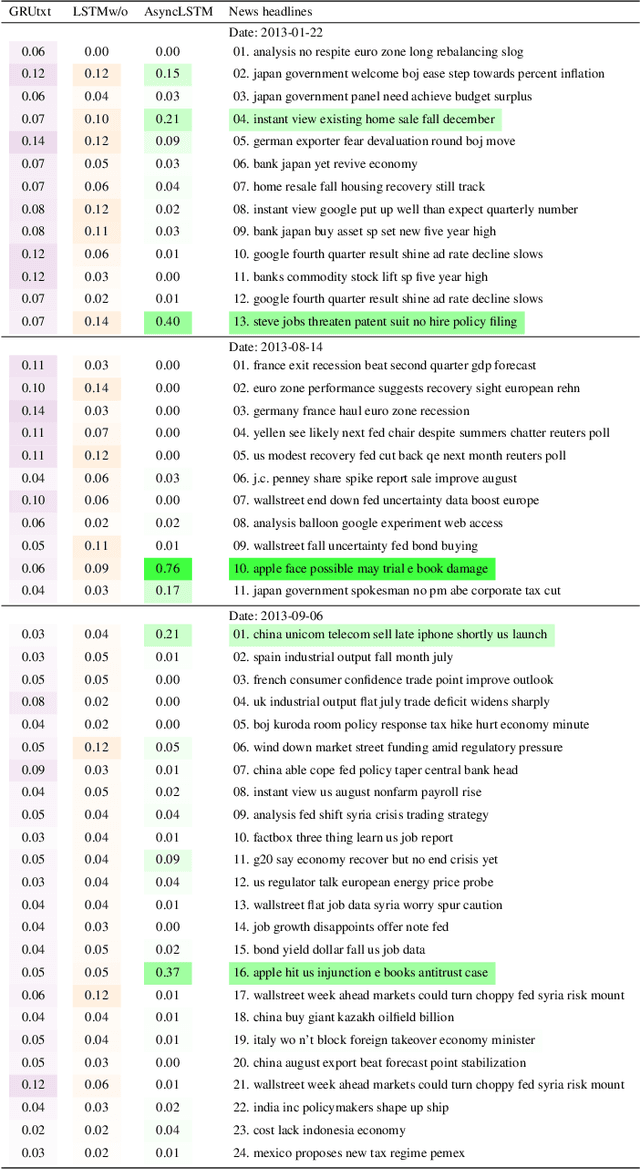

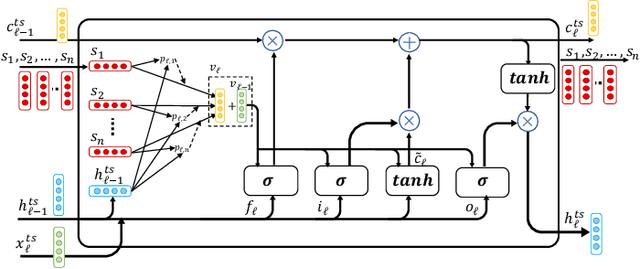

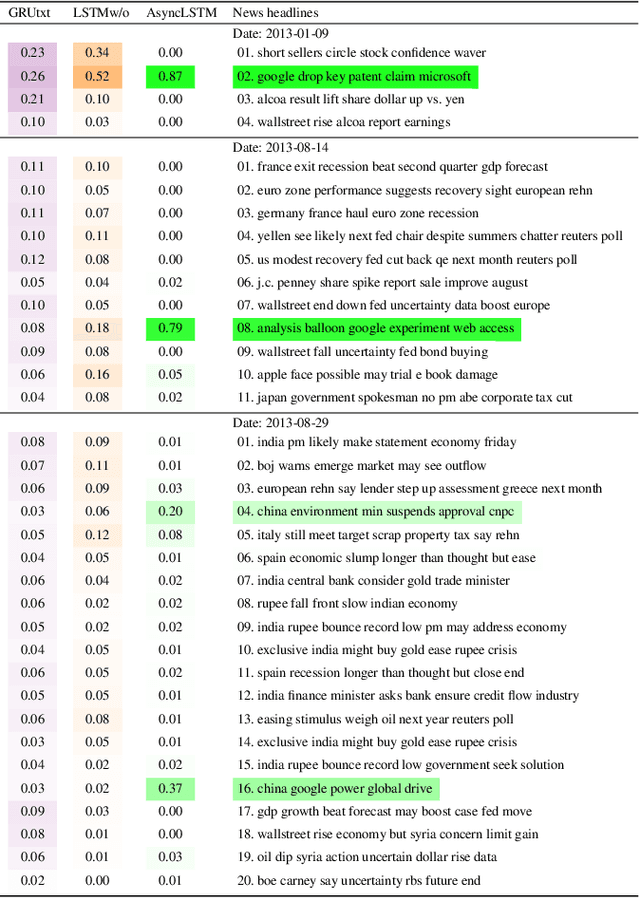

Abstract: Multimodal analysis that uses numerical time series and textual corpora as input data sources is becoming a promising approach, especially in the financial industry. However, the main focus of such analysis has been on achieving high prediction accuracy, while little effort has been spent on the important task of understanding the association between the two data modalities. Performance on the time series hence receives little explanation, even though human-understandable textual information is available. In this work, we address the following problem: given a numerical time series and a general corpus of textual stories collected over the same period, discover in a timely manner a succinct set of textual stories associated with that time series. Towards this goal, we propose a novel multi-modal neural model called MSIN that jointly learns both numerical time series and categorical text articles in order to unearth the association between them. Through multiple steps of data interrelation between the two data modalities, MSIN learns to focus on a small subset of text articles that best align with the performance in the time series. This succinct set is discovered in a timely manner and presented as recommended documents, acting as automated information filtering for the given time series. We empirically evaluate the performance of our model on discovering relevant news articles for two stock time series, those of Apple and Google, using daily news articles collected from Thomson Reuters over a period of seven consecutive years. The experimental results demonstrate that MSIN achieves recall of up to 84.9% and 87.2% on the ground-truth articles for the two examined time series, respectively, far superior to state-of-the-art algorithms that rely on conventional attention mechanisms in deep learning.
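The recall figures quoted above measure how many ground-truth articles appear among the recommended ones. A minimal sketch of that metric follows, assuming articles are identified by hashable IDs; the function name and the sample IDs are hypothetical and not taken from the paper.

```python
def recall(recommended, relevant):
    """Fraction of ground-truth (relevant) items that appear in the recommendations."""
    relevant = set(relevant)
    if not relevant:
        raise ValueError("recall is undefined when there are no relevant items")
    return len(set(recommended) & relevant) / len(relevant)


# Hypothetical article IDs for one time series.
recommended_ids = ["a", "b", "c"]
ground_truth_ids = ["a", "c", "d", "e"]
score = recall(recommended_ids, ground_truth_ids)
print(f"recall = {score:.2f}")
```

Recall alone does not penalize recommending many irrelevant articles, which is why it is usually read together with the size of the recommended set.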

seq2graph: Discovering Dynamic Dependencies from Multivariate Time Series with Multi-level Attention

Dec 07, 2018

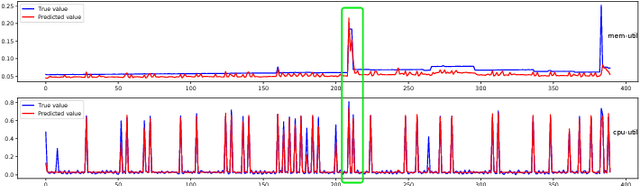

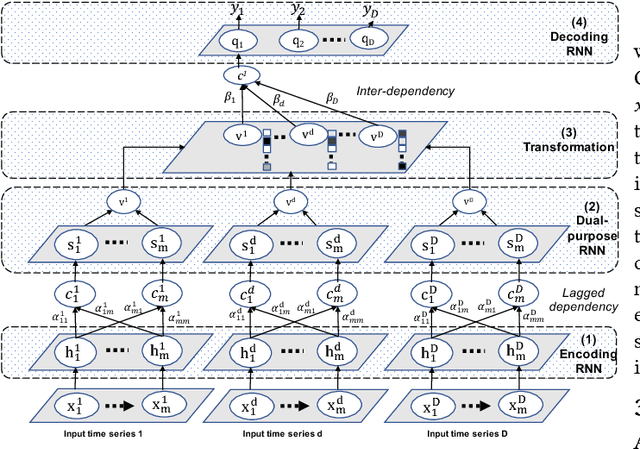

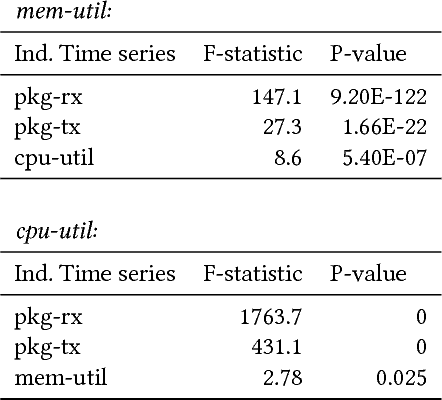

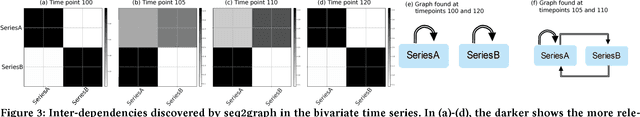

Abstract: Discovering temporally lagged dependencies and inter-dependencies in multivariate time series data is an important task. However, in many real-world applications, such as commercial cloud management, manufacturing predictive maintenance, and portfolio performance analysis, such dependencies can be non-linear and time-variant, which makes them more challenging to extract through traditional methods such as Granger causality or clustering. In this work, we present a novel deep learning model that uses multiple layers of customized gated recurrent units (GRUs) to discover both time-lagged behaviors and inter-timeseries dependencies in the form of directed weighted graphs. We introduce a key component, a dual-purpose recurrent neural network, that decodes information in the temporal domain to discover lagged dependencies within each time series and encodes them into a set of vectors which, collected from all component time series, form the informative inputs for discovering inter-dependencies. Though the discovery of the two types of dependencies is separated at different hierarchical levels, they are tightly connected and jointly trained in an end-to-end manner. With this joint training, learning of one type of dependency immediately impacts the learning of the other, leading to accurate overall dependency discovery. We empirically test our model on synthetic time series data in which the exact form of the (non-linear) dependencies is known. We also evaluate its performance on two real-world applications: (i) performance monitoring data from a commercial cloud provider, which exhibit highly dynamic, non-linear, and volatile behavior, and (ii) sensor data from a manufacturing plant. We further show how our approach is able to capture these dependency behaviors via intuitive and interpretable dependency graphs and use them to generate highly accurate forecasts.
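To make "time-lagged dependency" concrete, here is a minimal sketch of a traditional linear baseline: scanning lagged Pearson correlations between two series. It is not the GRU-based model described above, and it only captures linear, time-invariant relationships, which is the limitation the abstract points to. All names and the synthetic data are assumptions for illustration.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation of two equally long, non-constant sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / sqrt(vx * vy)


def best_lag(source, target, max_lag):
    """Return (lag, corr) where corr(source[t - lag], target[t]) is largest in magnitude."""
    scores = {}
    for lag in range(max_lag + 1):
        x = source[: len(source) - lag]  # source values at time t - lag
        y = target[lag:]                 # target values at time t
        scores[lag] = pearson(x, y)
    lag = max(scores, key=lambda k: abs(scores[k]))
    return lag, scores[lag]


# Synthetic example: the target copies the source with a delay of 3 steps.
source = [float((7 * t * t + 3 * t) % 11) for t in range(40)]
target = [0.0, 0.0, 0.0] + source[:-3]
lag, corr = best_lag(source, target, max_lag=5)
print(f"strongest dependency at lag {lag} (corr={corr:.3f})")
```

A scan like this yields one weight per pair of series and lag; repeating it over all pairs gives a crude directed weighted graph, though without the non-linear or time-varying structure the paper targets.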
