Qi Luo

Multi-hop Reasoning via Early Knowledge Alignment

Dec 23, 2025

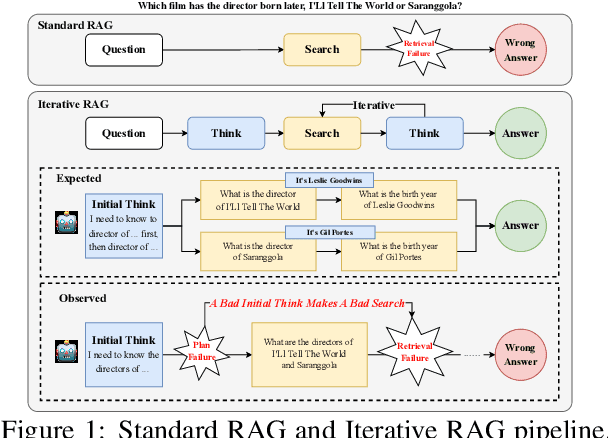

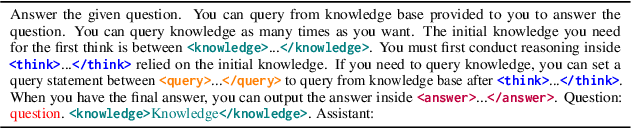

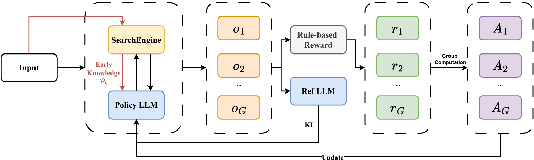

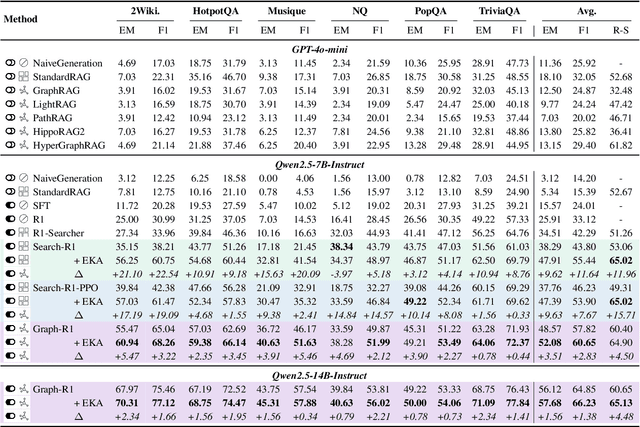

Abstract: Retrieval-Augmented Generation (RAG) has emerged as a powerful paradigm for Large Language Models (LLMs) to address knowledge-intensive queries requiring domain-specific or up-to-date information. To handle complex multi-hop questions that are challenging for single-step retrieval, iterative RAG approaches incorporating reinforcement learning have been proposed. However, existing iterative RAG systems typically plan to decompose questions without leveraging information about the available retrieval corpus, leading to inefficient retrieval and reasoning chains that cascade into suboptimal performance. In this paper, we introduce Early Knowledge Alignment (EKA), a simple but effective module that aligns LLMs with the retrieval set before planning in iterative RAG systems, using contextually relevant retrieved knowledge. Extensive experiments on six standard RAG datasets demonstrate that by establishing a stronger reasoning foundation, EKA significantly improves retrieval precision, reduces cascading errors, and enhances both performance and efficiency. Our analysis from an entropy perspective demonstrates that incorporating early knowledge reduces unnecessary exploration during the reasoning process, enabling the model to focus more effectively on relevant information subsets. Moreover, EKA proves effective as a versatile, training-free inference strategy that scales seamlessly to large models. Generalization tests across diverse datasets and retrieval corpora confirm the robustness of our approach. Overall, EKA advances the state-of-the-art in iterative RAG systems while illuminating the critical interplay between structured reasoning and efficient exploration in reinforcement learning-augmented frameworks. The code is released at https://github.com/yxzwang/EarlyKnowledgeAlignment.

Terahertz Signal Coverage Enhancement in Hall Scenarios Based on Single-Hop and Dual-Hop Reconfigurable Intelligent Surfaces

Dec 16, 2025

Abstract: Terahertz (THz) communication offers ultra-high data rates and has emerged as a promising technology for future wireless networks. However, the inherently high free-space path loss of THz waves significantly limits the coverage range of THz communication systems. Therefore, extending the effective coverage area is a key challenge for the practical deployment of THz networks. Reconfigurable intelligent surfaces (RIS), which can dynamically manipulate electromagnetic wave propagation, provide a solution to enhance THz coverage. To investigate multi-RIS deployment scenarios, this work integrates an antenna array-based RIS model into the ray-tracing simulation platform. Using an indoor hall as a representative case study, the enhancement effects of single-hop and dual-hop RIS configurations on indoor signal coverage are evaluated under various deployment schemes. The developed framework offers valuable insights and design references for optimizing RIS-assisted indoor THz communication and coverage estimation.
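
To make the path-loss claim in the abstract above concrete, here is a minimal sketch of the standard free-space path loss formula, FSPL(dB) = 20 log10(4πdf/c). The function name, the 10 m distance, and the two example frequencies are illustrative assumptions, not values from the paper, and the sketch ignores the molecular absorption and RIS effects that a ray-tracing platform would model.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def free_space_path_loss_db(distance_m: float, frequency_hz: float) -> float:
    """Free-space path loss in dB between isotropic antennas (Friis model)."""
    if distance_m <= 0 or frequency_hz <= 0:
        raise ValueError("distance and frequency must be positive")
    ratio = 4.0 * math.pi * distance_m * frequency_hz / SPEED_OF_LIGHT_M_S
    return 20.0 * math.log10(ratio)


# Illustrative comparison at the same 10 m distance (assumed values).
loss_3ghz = free_space_path_loss_db(10.0, 3e9)
loss_300ghz = free_space_path_loss_db(10.0, 300e9)

# A 100x increase in frequency adds 20*log10(100) = 40 dB of loss.
extra_loss_db = loss_300ghz - loss_3ghz
print(f"3 GHz: {loss_3ghz:.1f} dB, 300 GHz: {loss_300ghz:.1f} dB, "
      f"difference: {extra_loss_db:.1f} dB")
```

Because the loss grows with the logarithm of frequency, moving from the low-GHz bands to the THz range costs tens of dB at a fixed distance, which is the coverage gap that RIS deployments aim to narrow.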

GUIDE: Gaussian Unified Instance Detection for Enhanced Obstacle Perception in Autonomous Driving

Nov 17, 2025

Abstract: In the realm of autonomous driving, accurately detecting surrounding obstacles is crucial for effective decision-making. Traditional methods primarily rely on 3D bounding boxes to represent these obstacles, which often fail to capture the complexity of irregularly shaped, real-world objects. To overcome these limitations, we present GUIDE, a novel framework that utilizes 3D Gaussians for instance detection and occupancy prediction. Unlike conventional occupancy prediction methods, GUIDE also offers robust tracking capabilities. Our framework employs a sparse representation strategy, using Gaussian-to-Voxel Splatting to provide fine-grained, instance-level occupancy data without the computational demands associated with dense voxel grids. Experimental validation on the nuScenes dataset demonstrates GUIDE's performance, with an instance occupancy mAP of 21.61, marking a 50% improvement over existing methods, alongside competitive tracking capabilities. GUIDE establishes a new benchmark in autonomous perception systems, effectively combining precision with computational efficiency to better address the complexities of real-world driving environments.

MARAG-R1: Beyond Single Retriever via Reinforcement-Learned Multi-Tool Agentic Retrieval

Oct 31, 2025

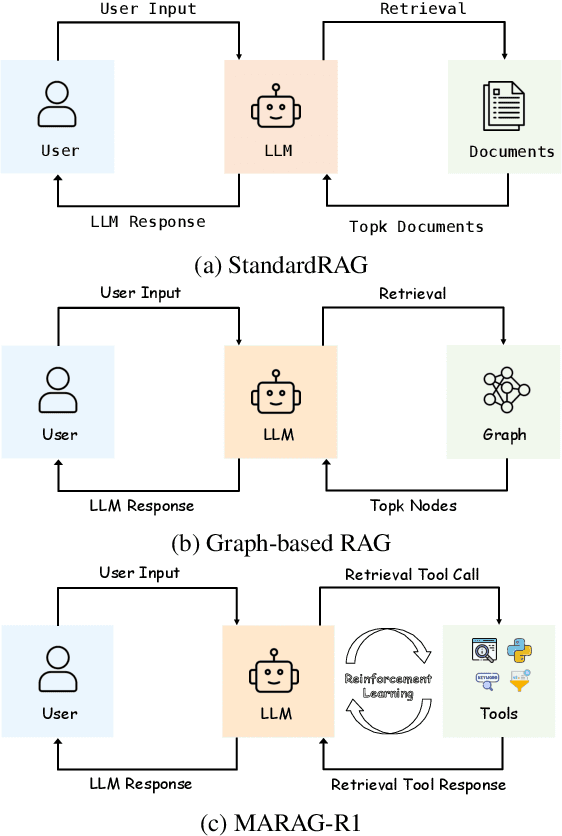

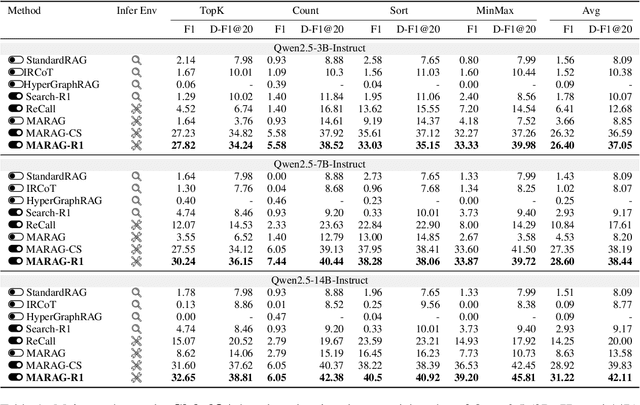

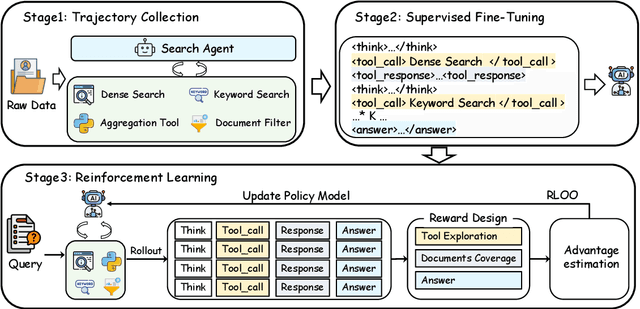

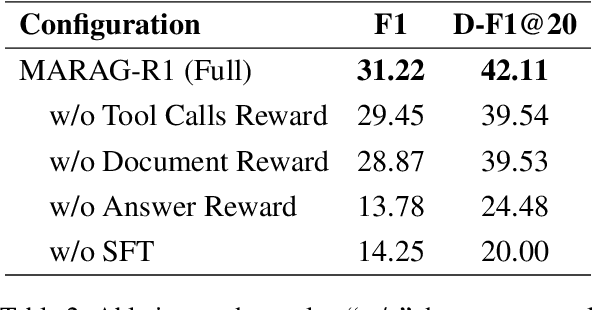

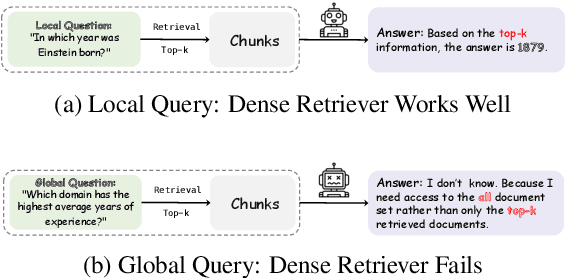

Abstract: Large Language Models (LLMs) excel at reasoning and generation but are inherently limited by static pretraining data, resulting in factual inaccuracies and weak adaptability to new information. Retrieval-Augmented Generation (RAG) addresses this issue by grounding LLMs in external knowledge; however, the effectiveness of RAG critically depends on whether the model can adequately access relevant information. Existing RAG systems rely on a single retriever with fixed top-k selection, restricting access to a narrow and static subset of the corpus. As a result, this single-retriever paradigm has become the primary bottleneck for comprehensive external information acquisition, especially in tasks requiring corpus-level reasoning. To overcome this limitation, we propose MARAG-R1, a reinforcement-learned multi-tool RAG framework that enables LLMs to dynamically coordinate multiple retrieval mechanisms for broader and more precise information access. MARAG-R1 equips the model with four retrieval tools -- semantic search, keyword search, filtering, and aggregation -- and learns both how and when to use them through a two-stage training process: supervised fine-tuning followed by reinforcement learning. This design allows the model to interleave reasoning and retrieval, progressively gathering sufficient evidence for corpus-level synthesis. Experiments on GlobalQA, HotpotQA, and 2WikiMultiHopQA demonstrate that MARAG-R1 substantially outperforms strong baselines and achieves new state-of-the-art results in corpus-level reasoning tasks.

Towards Global Retrieval Augmented Generation: A Benchmark for Corpus-Level Reasoning

Oct 30, 2025

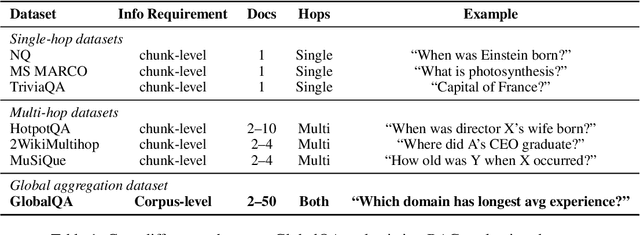

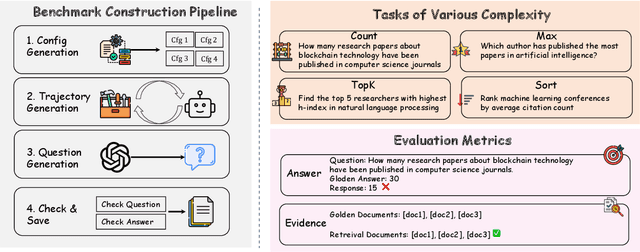

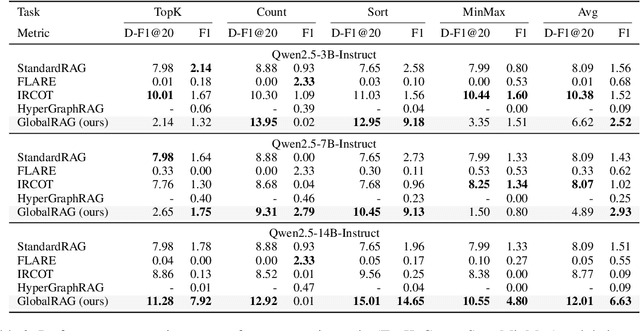

Abstract: Retrieval-augmented generation (RAG) has emerged as a leading approach to reducing hallucinations in large language models (LLMs). Current RAG evaluation benchmarks primarily focus on what we call local RAG: retrieving relevant chunks from a small subset of documents to answer queries that require only localized understanding within specific text chunks. However, many real-world applications require a fundamentally different capability -- global RAG -- which involves aggregating and analyzing information across entire document collections to derive corpus-level insights (for example, "What are the top 10 most cited papers in 2023?"). In this paper, we introduce GlobalQA -- the first benchmark specifically designed to evaluate global RAG capabilities, covering four core task types: counting, extremum queries, sorting, and top-k extraction. Through systematic evaluation across different models and baselines, we find that existing RAG methods perform poorly on global tasks, with the strongest baseline achieving an F1 score of only 1.51. To address these challenges, we propose GlobalRAG, a multi-tool collaborative framework that preserves structural coherence through chunk-level retrieval, incorporates LLM-driven intelligent filters to eliminate noisy documents, and integrates aggregation modules for precise symbolic computation. On the Qwen2.5-14B model, GlobalRAG achieves 6.63 F1 compared to the strongest baseline's 1.51 F1, validating the effectiveness of our method.
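
The four task types named in the abstract above (counting, extremum queries, sorting, and top-k extraction) can be illustrated with a small sketch. Everything here is a hypothetical toy: the `Paper` record, the four-entry corpus, and the helper names are assumptions made for illustration and are not taken from GlobalQA or GlobalRAG. The point is only that each answer depends on every record in the collection, which is why retrieving a handful of chunks is not enough.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Paper:
    title: str
    year: int
    citations: int


# Hypothetical toy corpus, invented for illustration only.
CORPUS = [
    Paper("A", 2023, 120),
    Paper("B", 2022, 300),
    Paper("C", 2023, 45),
    Paper("D", 2023, 210),
]


def count_in_year(corpus, year):
    """Counting: how many papers were published in the given year?"""
    return sum(1 for paper in corpus if paper.year == year)


def most_cited(corpus, year):
    """Extremum: which paper from the given year has the most citations?"""
    return max((p for p in corpus if p.year == year), key=lambda p: p.citations)


def sorted_by_citations(corpus):
    """Sorting: order the whole corpus by citation count, highest first."""
    return sorted(corpus, key=lambda p: p.citations, reverse=True)


def top_k_cited(corpus, year, k):
    """Top-k: the k most cited papers from the given year."""
    return sorted_by_citations([p for p in corpus if p.year == year])[:k]


print(count_in_year(CORPUS, 2023))
print(most_cited(CORPUS, 2023).title)
print([p.title for p in sorted_by_citations(CORPUS)])
print([p.title for p in top_k_cited(CORPUS, 2023, 2)])
```

Omitting any single record can change all four answers, so these operations are better handled by exact symbolic aggregation over filtered documents than by generation over a few retrieved chunks.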

R3-RAG: Learning Step-by-Step Reasoning and Retrieval for LLMs via Reinforcement Learning

May 26, 2025

Abstract: Retrieval-Augmented Generation (RAG) integrates external knowledge with Large Language Models (LLMs) to enhance factual correctness and mitigate hallucination. However, dense retrievers often become the bottleneck of RAG systems due to their limited parameters compared to LLMs and their inability to perform step-by-step reasoning. While prompt-based iterative RAG attempts to address these limitations, it is constrained by human-designed workflows. To address these limitations, we propose R3-RAG, which uses Reinforcement learning to make the LLM learn how to Reason and Retrieve step by step, thus retrieving comprehensive external knowledge and leading to correct answers. R3-RAG is divided into two stages. We first use cold start to make the model learn the manner of iteratively interleaving reasoning and retrieval. Then we use reinforcement learning to further harness its ability to better explore the external retrieval environment. Specifically, we propose two rewards for R3-RAG: 1) answer correctness for outcome reward, which judges whether the trajectory leads to a correct answer; 2) relevance-based document verification for process reward, encouraging the model to retrieve documents that are relevant to the user question, through which we can let the model learn how to iteratively reason and retrieve relevant documents to get the correct answer. Experimental results show that R3-RAG significantly outperforms baselines and can transfer well to different retrievers. We release R3-RAG at https://github.com/Yuan-Li-FNLP/R3-RAG.

Evaluation of Switching Technologies for Reflective and Transmissive RISs at Sub-THz Frequencies

Apr 28, 2025

Abstract: For the upcoming 6G wireless networks, reconfigurable intelligent surfaces (RISs) are an essential technology, enabling dynamic beamforming and signal manipulation in both reflective and transmissive modes. These networks are expected to use frequency bands in the millimeter-wave and THz ranges, which presents unique opportunities but also significant challenges. The selection of switching technologies that can support high-frequency operation with minimal loss and high efficiency is particularly complex. In this work, we demonstrate the potential of advanced components such as Schottky diodes, memristor switches, liquid metal-based switches, phase change materials, and RF-SOI technology in RIS designs as an alternative to overcome limitations inherent in traditional technologies in the D-band (110-170 GHz).

Grammar-Based Code Representation: Is It a Worthy Pursuit for LLMs?

Mar 07, 2025

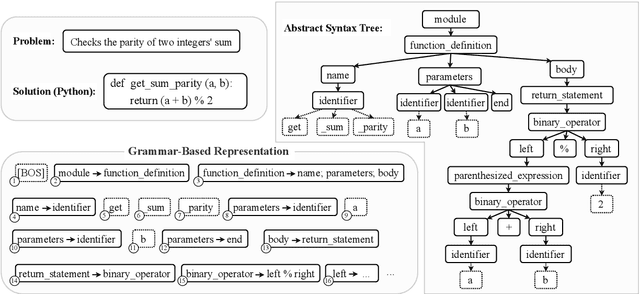

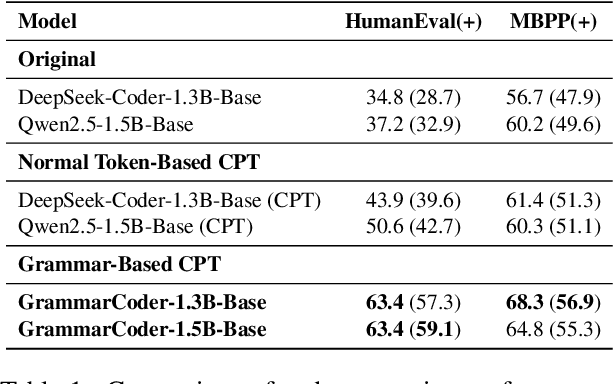

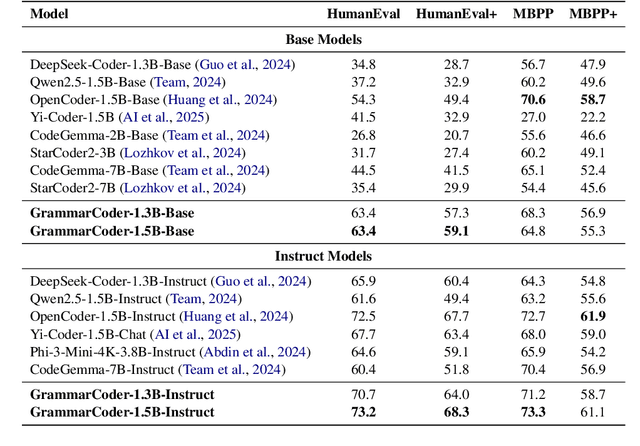

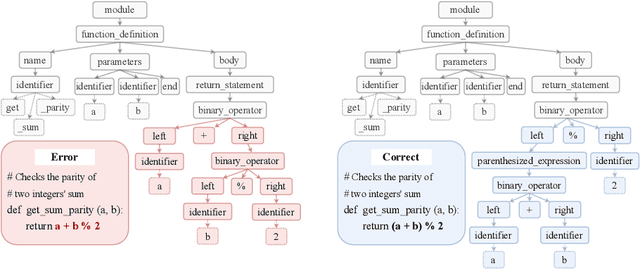

Abstract: Grammar serves as a cornerstone in programming languages and software engineering, providing frameworks to define the syntactic space and program structure. Existing research demonstrates the effectiveness of grammar-based code representations in small-scale models, showing their ability to reduce syntax errors and enhance performance. However, as language models scale to the billion level or beyond, syntax-level errors become rare, making it unclear whether grammar information still provides performance benefits. To explore this, we develop a series of billion-scale GrammarCoder models, incorporating grammar rules in the code generation process. Experiments on HumanEval (+) and MBPP (+) demonstrate a notable improvement in code generation accuracy. Further analysis shows that grammar-based representations enhance LLMs' ability to discern subtle code differences, reducing semantic errors caused by minor variations. These findings suggest that grammar-based code representations remain valuable even in billion-scale models, not only by maintaining syntax correctness but also by improving semantic differentiation.

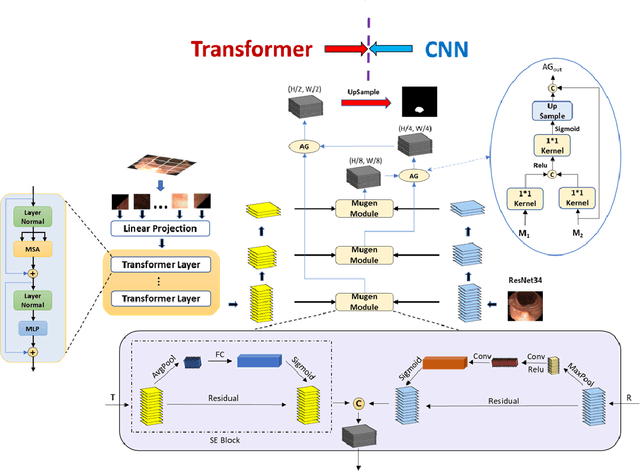

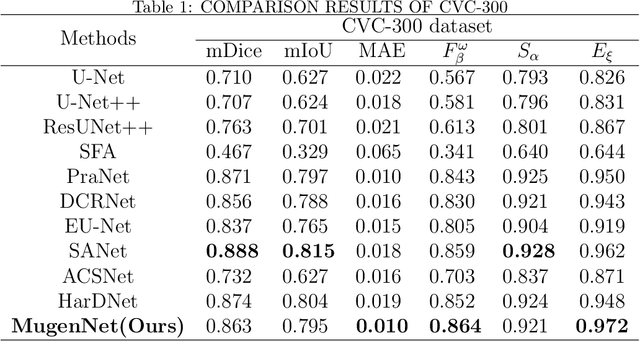

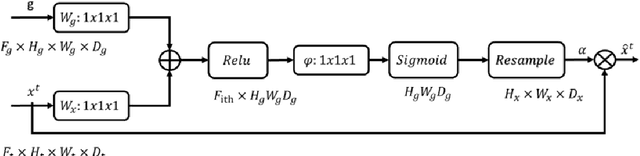

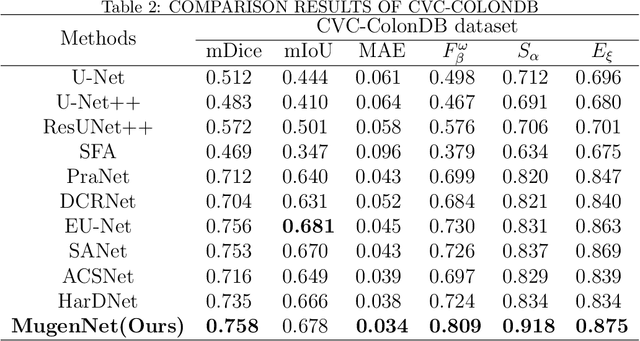

MugenNet: A Novel Combined Convolution Neural Network and Transformer Network with its Application for Colonic Polyp Image Segmentation

Mar 31, 2024

Abstract: Biomedical image segmentation is an important part of disease diagnosis. The term "colonic polyps" refers to polypoid lesions that occur on the surface of the colonic mucosa within the intestinal lumen. In clinical practice, early detection of polyps is conducted through colonoscopy examinations and biomedical image processing. Therefore, accurate polyp image segmentation is of great significance in colonoscopy examinations. The Convolutional Neural Network (CNN) is a common automatic segmentation method, but its main disadvantage is the long training time. The Transformer utilizes a self-attention mechanism, which essentially assigns different importance weights to each piece of information, thus achieving high computational efficiency during segmentation. However, a potential drawback is the risk of information loss. In the study reported in this paper, based on the well-known hybridization principle, we proposed a method to combine CNN and Transformer to retain the strengths of both, and we applied this method to build a system called MugenNet for colonic polyp image segmentation. We conducted a comprehensive experiment to compare MugenNet with other CNN models on five publicly available datasets. An ablation experiment on MugenNet was conducted as well. The experimental results show that MugenNet achieves significantly higher processing speed and accuracy compared with CNN alone. The broader implication of our work is a method to optimally combine two complementary machine learning methods.

PyroTrack: Belief-Based Deep Reinforcement Learning Path Planning for Aerial Wildfire Monitoring in Partially Observable Environments

Mar 17, 2024

Abstract: Motivated by the agility, 3D mobility, and low-risk operation of autonomous unmanned aerial vehicles (UAVs) compared to human-operated management systems, this work studies UAV-based active wildfire monitoring where a UAV detects fire incidents in remote areas and tracks the fire frontline. A UAV path planning solution is proposed considering realistic wildfire management missions, where a single low-altitude drone with limited power and flight time is available. Noting the limited field of view of commercial low-altitude UAVs, the problem is formulated as a partially observable Markov decision process (POMDP), in which wildfire progression outside the field of view causes inaccurate state representation that prevents the UAV from finding the optimal path to track the fire front in limited time. Common deep reinforcement learning (DRL)-based trajectory planning solutions require diverse drone-recorded wildfire data to generalize pre-trained models to real-time systems, which is not currently available at a diverse and standard scale. To narrow down the gap caused by partial observability in the space of possible policies, a belief-based state representation with broad, extensive simulated data is proposed where the beliefs (i.e., ignition probabilities of different grid areas) are updated using a Bayesian framework for the cells within the field of view. The performance of the proposed solution in terms of the ratio of detected fire cells and monitored ignited area (MIA) is evaluated in a complex fire scenario with multiple rapidly growing fire batches, indicating that the belief state representation outperforms the observation state representation both in fire coverage and the distance to fire frontline.
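
The belief update described in the abstract above can be sketched with a per-cell application of Bayes' rule. This is a minimal illustration under stated assumptions, not the paper's model: the binary fire/no-fire observation, the detection rate `p_detect`, the false-alarm rate `p_false_alarm`, and the function names are all hypothetical. Cells outside the field of view simply keep their prior belief.

```python
def bayes_update(prior, saw_fire, p_detect=0.9, p_false_alarm=0.1):
    """Posterior ignition probability for one cell after one observation.

    p_detect and p_false_alarm are assumed sensor rates, not paper values.
    """
    # Likelihood of this observation if the cell is burning / not burning.
    like_fire = p_detect if saw_fire else 1.0 - p_detect
    like_clear = p_false_alarm if saw_fire else 1.0 - p_false_alarm
    evidence = like_fire * prior + like_clear * (1.0 - prior)
    return like_fire * prior / evidence


def update_belief_grid(beliefs, observations, in_view):
    """Update only the cells inside the field of view; others keep their prior."""
    return [
        [
            bayes_update(belief, saw_fire) if visible else belief
            for belief, saw_fire, visible in zip(belief_row, obs_row, view_row)
        ]
        for belief_row, obs_row, view_row in zip(beliefs, observations, in_view)
    ]


# Toy 2x2 grid: the top row is in view, the bottom row is not.
beliefs = [[0.5, 0.5], [0.5, 0.2]]
observations = [[True, False], [True, False]]
in_view = [[True, True], [False, False]]

updated = update_belief_grid(beliefs, observations, in_view)
print(updated)
```

In this toy grid the visible cell with a fire observation moves from 0.5 toward 0.9 and the visible cell without one moves toward 0.1, while the unobserved bottom row is unchanged. A fuller model would also propagate fire spread into unobserved cells between steps.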
