Paarth Shah

A Systematic Study of Data Modalities and Strategies for Co-training Large Behavior Models for Robot Manipulation

Feb 01, 2026

Abstract:Large behavior models have shown strong dexterous manipulation capabilities by extending imitation learning to large-scale training on multi-task robot data, yet their generalization remains limited by insufficient robot data coverage. To expand this coverage without costly additional data collection, recent work relies on co-training: jointly learning from target robot data and heterogeneous data modalities. However, how different co-training data modalities and strategies affect policy performance remains poorly understood. We present a large-scale empirical study examining five co-training data modalities: standard vision-language data, dense language annotations for robot trajectories, cross-embodiment robot data, human videos, and discrete robot action tokens across single- and multi-phase training strategies. Our study leverages 4,000 hours of robot and human manipulation data and 50M vision-language samples to train vision-language-action policies. We evaluate 89 policies over 58,000 simulation rollouts and 2,835 real-world rollouts. Our results show that co-training with forms of vision-language and cross-embodiment robot data substantially improves generalization to distribution shifts, unseen tasks, and language following, while discrete action token variants yield no significant benefits. Combining effective modalities produces cumulative gains and enables rapid adaptation to unseen long-horizon dexterous tasks via fine-tuning. Training exclusively on robot data degrades the visiolinguistic understanding of the vision-language model backbone, while co-training with effective modalities restores these capabilities. Explicitly conditioning action generation on chain-of-thought traces learned from co-training data does not improve performance in our simulation benchmark. Together, these results provide practical guidance for building scalable generalist robot policies.

Video Generators are Robot Policies

Aug 01, 2025

Abstract:Despite tremendous progress in dexterous manipulation, current visuomotor policies remain fundamentally limited by two challenges: they struggle to generalize under perceptual or behavioral distribution shifts, and their performance is constrained by the size of human demonstration data. In this paper, we use video generation as a proxy for robot policy learning to address both limitations simultaneously. We propose Video Policy, a modular framework that combines video and action generation that can be trained end-to-end. Our results demonstrate that learning to generate videos of robot behavior allows for the extraction of policies with minimal demonstration data, significantly improving robustness and sample efficiency. Our method shows strong generalization to unseen objects, backgrounds, and tasks, both in simulation and the real world. We further highlight that task success is closely tied to the generated video, with action-free video data providing critical benefits for generalizing to novel tasks. By leveraging large-scale video generative models, we achieve superior performance compared to traditional behavior cloning, paving the way for more scalable and data-efficient robot policy learning.

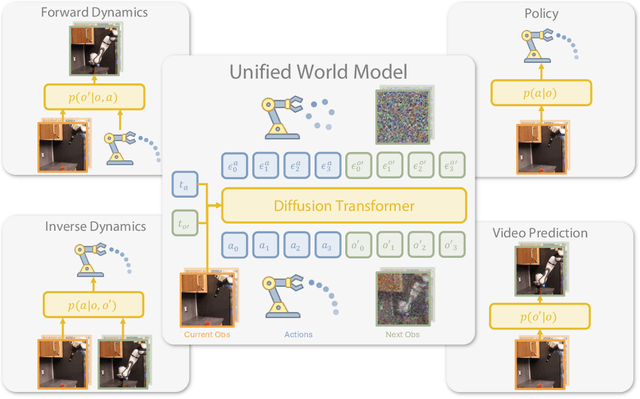

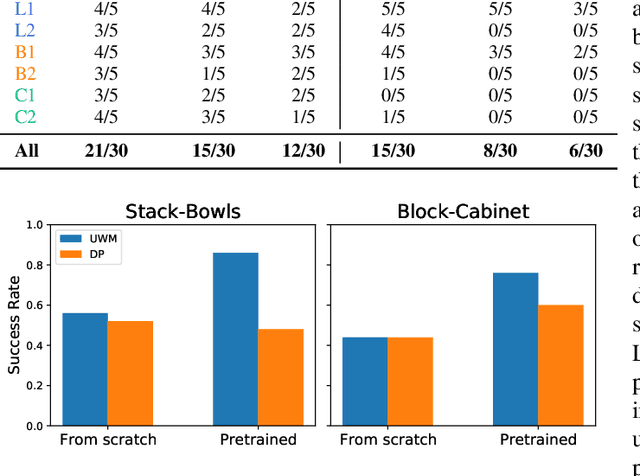

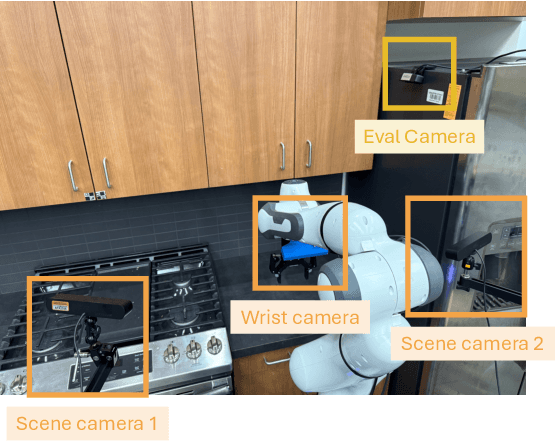

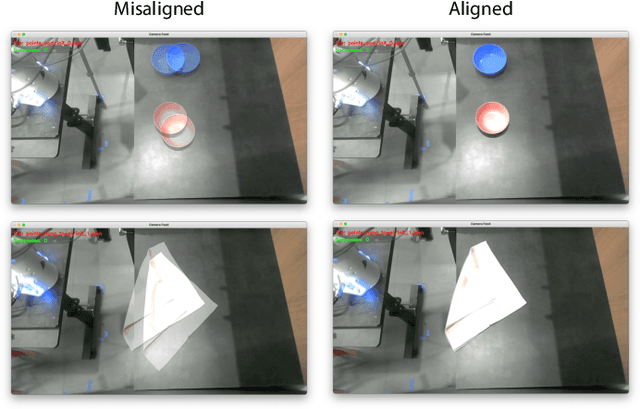

Unified World Models: Coupling Video and Action Diffusion for Pretraining on Large Robotic Datasets

Apr 03, 2025

Abstract:Imitation learning has emerged as a promising approach towards building generalist robots. However, scaling imitation learning for large robot foundation models remains challenging due to its reliance on high-quality expert demonstrations. Meanwhile, large amounts of video data depicting a wide range of environments and diverse behaviors are readily available. This data provides a rich source of information about real-world dynamics and agent-environment interactions. Leveraging this data directly for imitation learning, however, has proven difficult due to the lack of action annotation required for most contemporary methods. In this work, we present Unified World Models (UWM), a framework that allows for leveraging both video and action data for policy learning. Specifically, a UWM integrates an action diffusion process and a video diffusion process within a unified transformer architecture, where independent diffusion timesteps govern each modality. We show that by simply controlling each diffusion timestep, UWM can flexibly represent a policy, a forward dynamics, an inverse dynamics, and a video generator. Through simulated and real-world experiments, we show that: (1) UWM enables effective pretraining on large-scale multitask robot datasets with both dynamics and action predictions, resulting in more generalizable and robust policies than imitation learning, (2) UWM naturally facilitates learning from action-free video data through independent control of modality-specific diffusion timesteps, further improving the performance of finetuned policies. Our results suggest that UWM offers a promising step toward harnessing large, heterogeneous datasets for scalable robot learning, and provides a simple unification between the often disparate paradigms of imitation learning and world modeling. Videos and code are available at https://weirdlabuw.github.io/uwm/.

Can We Detect Failures Without Failure Data? Uncertainty-Aware Runtime Failure Detection for Imitation Learning Policies

Mar 11, 2025

Abstract:Recent years have witnessed impressive robotic manipulation systems driven by advances in imitation learning and generative modeling, such as diffusion- and flow-based approaches. As robot policy performance increases, so does the complexity and time horizon of achievable tasks, inducing unexpected and diverse failure modes that are difficult to predict a priori. To enable trustworthy policy deployment in safety-critical human environments, reliable runtime failure detection becomes important during policy inference. However, most existing failure detection approaches rely on prior knowledge of failure modes and require failure data during training, which imposes a significant challenge in practicality and scalability. In response to these limitations, we present FAIL-Detect, a modular two-stage approach for failure detection in imitation learning-based robotic manipulation. To accurately identify failures from successful training data alone, we frame the problem as sequential out-of-distribution (OOD) detection. We first distill policy inputs and outputs into scalar signals that correlate with policy failures and capture epistemic uncertainty. FAIL-Detect then employs conformal prediction (CP) as a versatile framework for uncertainty quantification with statistical guarantees. Empirically, we thoroughly investigate both learned and post-hoc scalar signal candidates on diverse robotic manipulation tasks. Our experiments show learned signals to be mostly consistently effective, particularly when using our novel flow-based density estimator. Furthermore, our method detects failures more accurately and faster than state-of-the-art (SOTA) failure detection baselines. These results highlight the potential of FAIL-Detect to enhance the safety and reliability of imitation learning-based robotic systems as they progress toward real-world deployment.
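
The second stage described above, thresholding a scalar signal with conformal prediction, can be illustrated with plain split conformal prediction. This is only a minimal sketch under stated assumptions: it uses a single fixed threshold rather than the sequential, time-indexed treatment the abstract refers to, and the names `conformal_threshold` and `is_flagged` are hypothetical, not taken from FAIL-Detect. The calibration scores are assumed to come from successful rollouts only.

```python
import math


def conformal_threshold(calibration_scores, alpha=0.05):
    """Split-conformal threshold for scalar scores (hypothetical helper).

    If a new score is exchangeable with the calibration scores, it exceeds
    the returned threshold with probability at most alpha.
    """
    n = len(calibration_scores)
    if n == 0:
        raise ValueError("need at least one calibration score")
    # Rank of the conformal quantile among the sorted calibration scores.
    rank = math.ceil((n + 1) * (1 - alpha))
    if rank > n:
        # Too few calibration points for this alpha: no finite threshold exists.
        return math.inf
    return sorted(calibration_scores)[rank - 1]


def is_flagged(score, threshold):
    """Flag a runtime score as a potential failure (out of distribution)."""
    return score > threshold
```

With 20 calibration scores and `alpha=0.1`, the rank is `ceil(21 * 0.9) = 19`, so the threshold is the 19th smallest calibration score.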

Robot Learning as an Empirical Science: Best Practices for Policy Evaluation

Sep 14, 2024

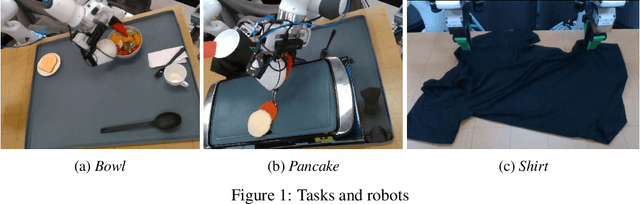

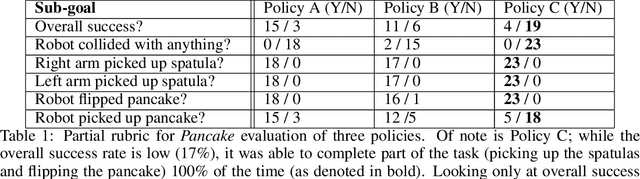

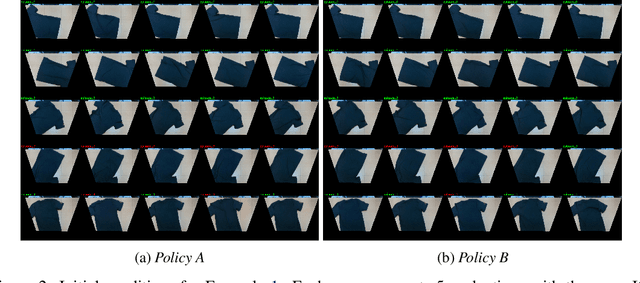

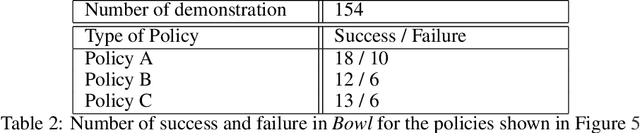

Abstract:The robot learning community has made great strides in recent years, proposing new architectures and showcasing impressive new capabilities; however, the dominant metric used in the literature, especially for physical experiments, is "success rate", i.e. the percentage of runs that were successful. Furthermore, it is common for papers to report this number with little to no information regarding the number of runs, the initial conditions, and the success criteria, little to no narrative description of the behaviors and failures observed, and little to no statistical analysis of the findings. In this paper we argue that to move the field forward, researchers should provide a nuanced evaluation of their methods, especially when evaluating and comparing learned policies on physical robots. To do so, we propose best practices for future evaluations: explicitly reporting the experimental conditions, evaluating several metrics designed to complement success rate, conducting statistical analysis, and adding a qualitative description of failure modes. We illustrate these through an evaluation on physical robots of several learned policies for manipulation tasks.
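
As one illustration of what reporting a success rate together with the number of runs and a statistical analysis might look like, the sketch below computes a Wilson score interval for a binomial proportion. The Wilson interval is a standard textbook choice assumed here for illustration; the abstract does not say which analysis the paper recommends, and `wilson_interval` is a hypothetical helper name.

```python
import math


def wilson_interval(successes, trials, z=1.96):
    """Wilson score interval for a success rate (z=1.96 gives roughly 95%)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    if not 0 <= successes <= trials:
        raise ValueError("successes must lie between 0 and trials")
    p_hat = successes / trials
    denom = 1 + z * z / trials
    center = (p_hat + z * z / (2 * trials)) / denom
    half_width = (
        z
        * math.sqrt(p_hat * (1 - p_hat) / trials + z * z / (4 * trials * trials))
        / denom
    )
    # Clamp to [0, 1] to guard against floating-point overshoot.
    return max(0.0, center - half_width), min(1.0, center + half_width)


low, high = wilson_interval(8, 10)
print(f"8/10 successes: 95% interval = [{low:.2f}, {high:.2f}]")
```

For 8 successes in 10 runs the interval is roughly 0.49 to 0.94, which shows how little a bare "80% success rate" pins down when the number of runs is small.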

How Generalizable Is My Behavior Cloning Policy? A Statistical Approach to Trustworthy Performance Evaluation

May 08, 2024

Abstract:With the rise of stochastic generative models in robot policy learning, end-to-end visuomotor policies are increasingly successful at solving complex tasks by learning from human demonstrations. Nevertheless, since real-world evaluation costs afford users only a small number of policy rollouts, it remains a challenge to accurately gauge the performance of such policies. This is exacerbated by distribution shifts causing unpredictable changes in performance during deployment. To rigorously evaluate behavior cloning policies, we present a framework that provides a tight lower-bound on robot performance in an arbitrary environment, using a minimal number of experimental policy rollouts. Notably, by applying the standard stochastic ordering to robot performance distributions, we provide a worst-case bound on the entire distribution of performance (via bounds on the cumulative distribution function) for a given task. We build upon established statistical results to ensure that the bounds hold with a user-specified confidence level and tightness, and are constructed from as few policy rollouts as possible. In experiments we evaluate policies for visuomotor manipulation in both simulation and hardware. Specifically, we (i) empirically validate the guarantees of the bounds in simulated manipulation settings, (ii) find the degree to which a learned policy deployed on hardware generalizes to new real-world environments, and (iii) rigorously compare two policies tested in out-of-distribution settings. Our experimental data, code, and implementation of confidence bounds are open-source.
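
To make the idea of a worst-case bound on the cumulative distribution function concrete, the sketch below uses the two-sided Dvoretzky-Kiefer-Wolfowitz (DKW) inequality, a standard result that bounds the true CDF from i.i.d. samples. It is an assumption made for illustration, not necessarily the construction used in the paper, and the two-sided form is slightly conservative when only an upper bound is needed. The function names and the convention that higher reward means better performance are also assumptions.

```python
import math
from bisect import bisect_right


def dkw_epsilon(n, confidence=0.95):
    """Half-width of the two-sided DKW band for n i.i.d. samples."""
    if n <= 0:
        raise ValueError("n must be positive")
    alpha = 1 - confidence
    return math.sqrt(math.log(2 / alpha) / (2 * n))


def worst_case_cdf(rewards, confidence=0.95):
    """Upper bound on the true reward CDF at each distinct observed reward.

    A larger CDF puts more probability on low rewards, so this upper bound
    is a pessimistic (stochastically smaller) view of policy performance.
    """
    xs = sorted(rewards)
    n = len(xs)
    eps = dkw_epsilon(n, confidence)
    # Empirical CDF at x is the fraction of samples <= x.
    return [(x, min(1.0, bisect_right(xs, x) / n + eps)) for x in sorted(set(xs))]
```

With 50 rollouts at 95% confidence the band half-width is about 0.19, so even a moderate number of rollouts leaves a wide margin around the empirical CDF.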

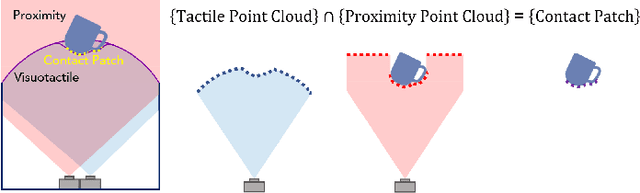

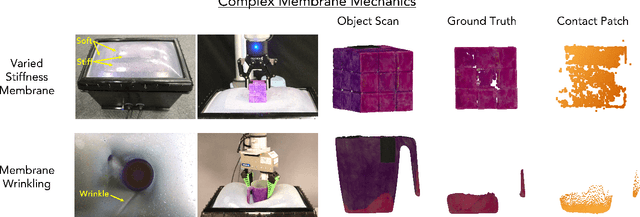

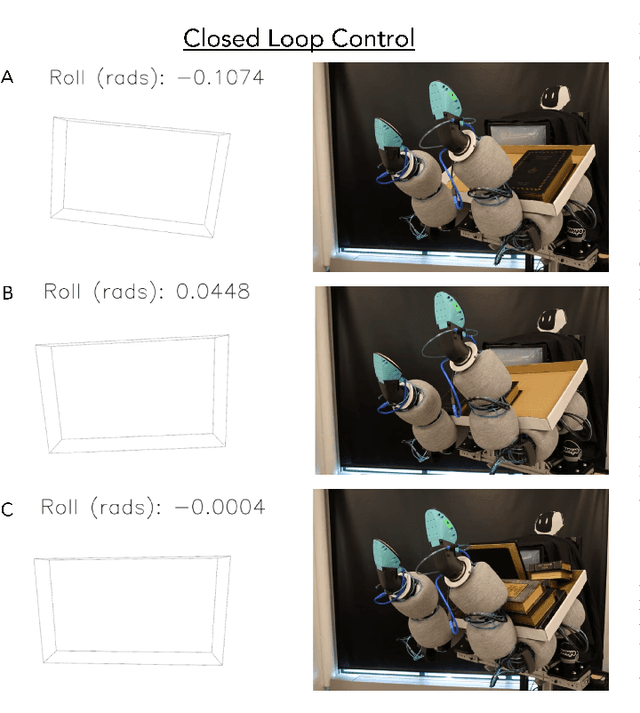

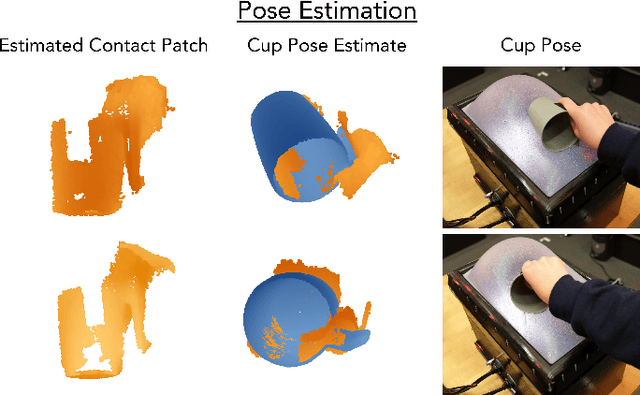

Proximity and Visuotactile Point Cloud Fusion for Contact Patches in Extreme Deformation

Jul 07, 2023

Abstract:Equipping robots with the sense of touch is critical to emulating the capabilities of humans in real-world manipulation tasks. Visuotactile sensors are a popular tactile sensing strategy due to data output compatible with computer vision algorithms and accurate, high-resolution estimates of local object geometry. However, these sensors struggle to accommodate high deformations of the sensing surface during object interactions, hindering more informative contact with cm-scale objects frequently encountered in the real world. The soft interfaces of visuotactile sensors are often made of hyperelastic elastomers, which are difficult to simulate quickly and accurately when extremely deformed for tactile information. Additionally, many visuotactile sensors that rely on strict internal light conditions or pattern tracking will fail if the surface is highly deformed. In this work, we propose an algorithm that fuses proximity and visuotactile point clouds for contact patch segmentation that is entirely independent from membrane mechanics. This algorithm exploits the synchronous, high-resolution proximity and visuotactile modalities enabled by an extremely deformable, selectively transmissive soft membrane, which uses visible light for visuotactile sensing and infrared light for proximity depth. We present the hardware design, membrane fabrication, and evaluation of our contact patch algorithm in low (10%), medium (60%), and high (100%+) membrane strain states. We compare our algorithm against three baselines: proximity-only, tactile-only, and a membrane mechanics model. Our proposed algorithm outperforms all baselines with an average RMSE under 2.8 mm of the contact patch geometry across all strain ranges. We demonstrate our contact patch algorithm in four applications: varied stiffness membranes, torque and shear-induced wrinkling, closed-loop control for whole-body manipulation, and pose estimation.

Visual-Inertial and Leg Odometry Fusion for Dynamic Locomotion

Oct 10, 2022

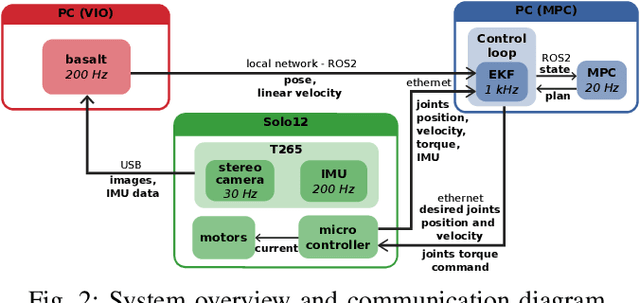

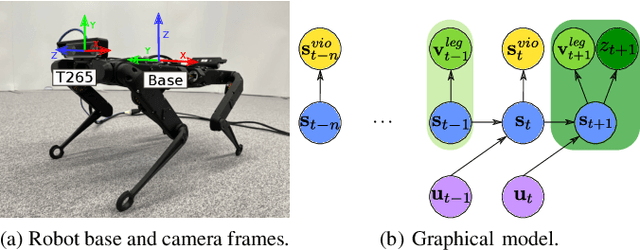

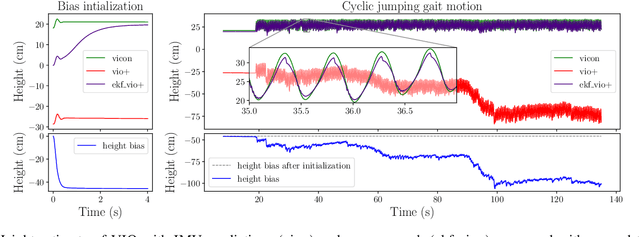

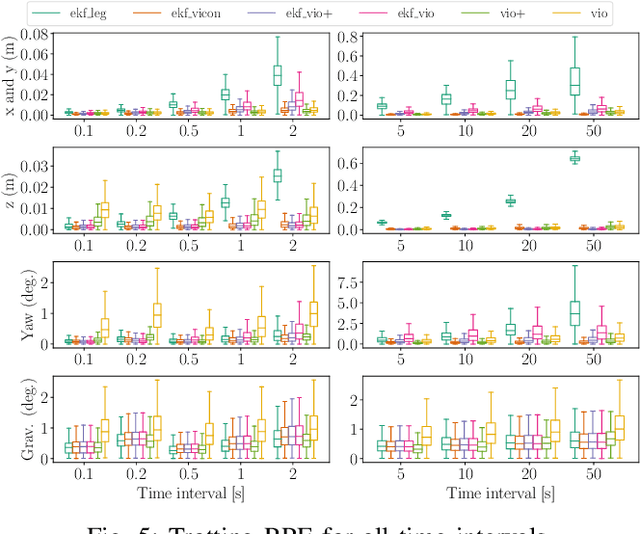

Abstract:Implementing dynamic locomotion behaviors on legged robots requires a high-quality state estimation module. Especially when the motion includes flight phases, state-of-the-art approaches fail to produce reliable estimation of the robot posture, in particular base height. In this paper, we propose a novel approach for combining visual-inertial odometry (VIO) with leg odometry in an extended Kalman filter (EKF) based state estimator. The VIO module uses a stereo camera and IMU to yield low-drift 3D position and yaw orientation and drift-free pitch and roll orientation of the robot base link in the inertial frame. However, these values have considerable latency due to image processing and optimization, and the update rate is too low for low-level control. To reduce the latency, we predict the VIO state estimate at the rate of the IMU measurements of the VIO sensor. The EKF module uses the base pose and linear velocity predicted by VIO, fuses them further with a second high-rate IMU and leg odometry measurements, and produces robot state estimates with a high frequency and small latency suitable for control. We integrate this lightweight estimation framework with a nonlinear model predictive controller and show successful implementation of a set of agile locomotion behaviors, including trotting and jumping at varying horizontal speeds, on a torque-controlled quadruped robot.

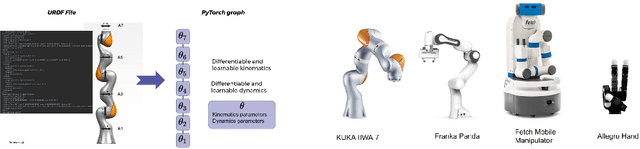

Differentiable and Learnable Robot Models

Feb 22, 2022

Abstract:Building differentiable simulations of physical processes has recently received an increasing amount of attention. Specifically, some efforts develop differentiable robotic physics engines motivated by the computational benefits of merging rigid body simulations with modern differentiable machine learning libraries. Here, we present a library that focuses on the ability to combine data-driven methods with analytical rigid body computations. More concretely, our library Differentiable Robot Models implements both differentiable and learnable models of the kinematics and dynamics of robots in PyTorch. The source code is available at https://github.com/facebookresearch/differentiable-robot-model

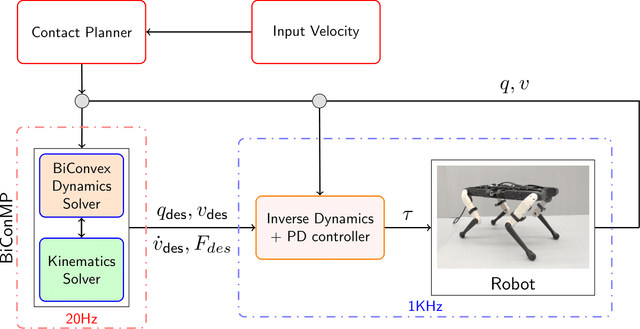

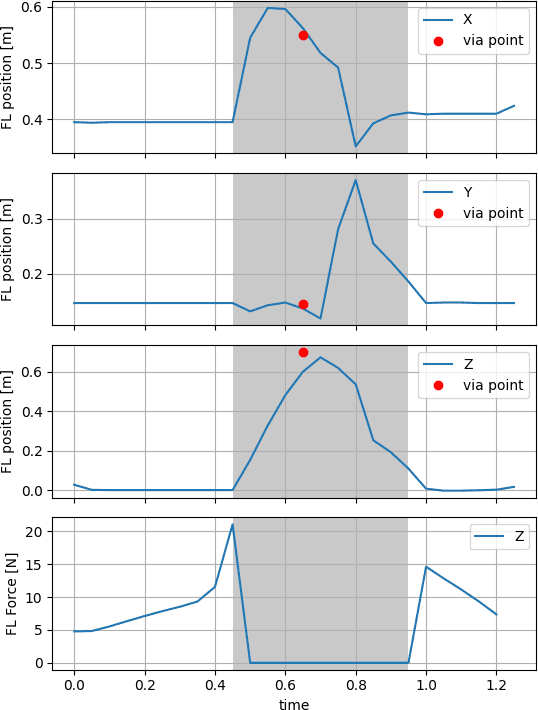

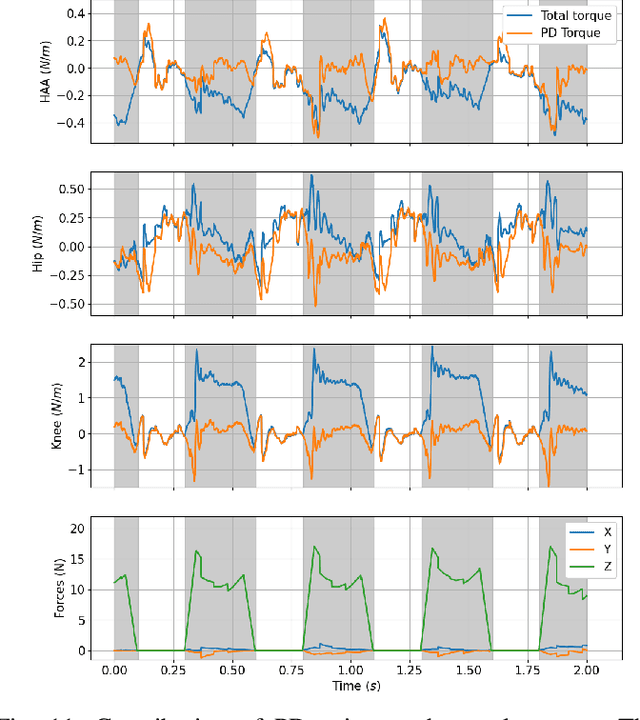

BiConMP: A Nonlinear Model Predictive Control Framework for Whole Body Motion Planning

Jan 19, 2022

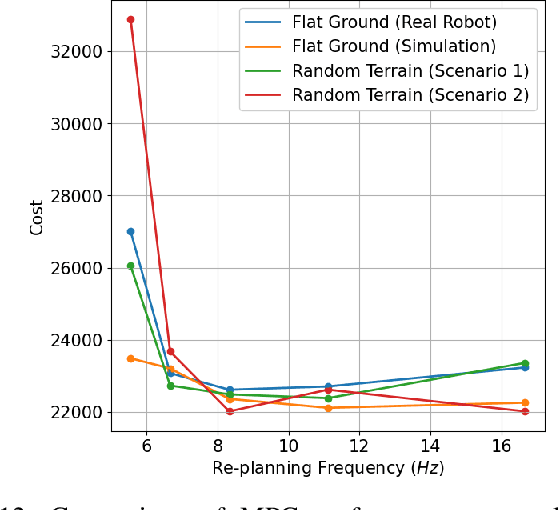

Abstract:Online planning of whole-body motions for legged robots is challenging due to the inherent nonlinearity in the robot dynamics. In this work, we propose a nonlinear MPC framework, BiConMP, which can generate whole-body trajectories online by efficiently exploiting the structure of the robot dynamics. BiConMP is used to generate various cyclic gaits on a real quadruped robot, and its performance is evaluated on different terrain, countering unforeseen pushes and transitioning online between different gaits. Further, the ability of BiConMP to generate non-trivial acyclic whole-body dynamic motions on the robot is presented. Finally, an extensive empirical analysis of the effects of planning horizon and frequency on the nonlinear MPC framework is reported and discussed.