Giovanni Sutanto

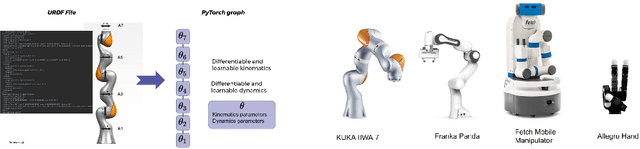

Differentiable and Learnable Robot Models

Feb 22, 2022

Abstract:Building differentiable simulations of physical processes has recently received an increasing amount of attention. Specifically, some efforts develop differentiable robotic physics engines motivated by the computational benefits of merging rigid body simulations with modern differentiable machine learning libraries. Here, we present a library that focuses on the ability to combine data-driven methods with analytical rigid body computations. More concretely, our library Differentiable Robot Models implements both differentiable and learnable models of the kinematics and dynamics of robots in PyTorch. The source code is available at https://github.com/facebookresearch/differentiable-robot-model

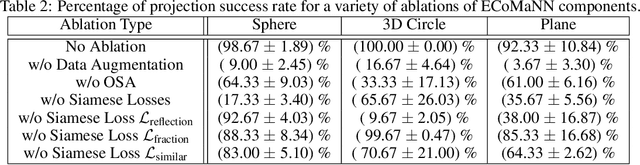

Learning Equality Constraints for Motion Planning on Manifolds

Sep 24, 2020

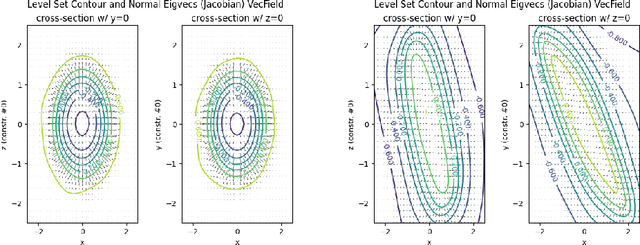

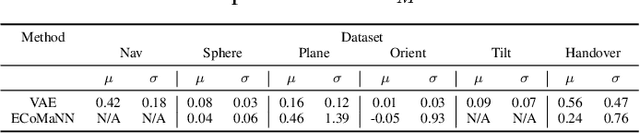

Abstract:Constrained robot motion planning is a widely used technique to solve complex robot tasks. We consider the problem of learning representations of constraints from demonstrations with a deep neural network, which we call Equality Constraint Manifold Neural Network (ECoMaNN). The key idea is to learn a level-set function of the constraint suitable for integration into a constrained sampling-based motion planner. Learning proceeds by aligning subspaces in the network with subspaces of the data. We combine both learned constraints and analytically described constraints into the planner and use a projection-based strategy to find valid points. We evaluate ECoMaNN on its representation capabilities of constraint manifolds, the impact of its individual loss terms, and the motions produced when incorporated into a planner.
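The projection-based strategy mentioned in the abstract can be illustrated with a minimal sketch. It assumes a hand-written level-set function `h` (zero exactly on the unit circle) as a stand-in for a learned network; `h`, `grad_h`, and `project` are hypothetical names for illustration, not part of ECoMaNN or any planner API. Each iteration takes a Gauss-Newton step along the gradient until the point lies on the zero level set.

```python
import math


def h(q):
    """Hypothetical level-set function: zero exactly on the unit circle."""
    x, y = q
    return x * x + y * y - 1.0


def grad_h(q):
    """Analytical gradient of h; a learned model would use autodiff instead."""
    x, y = q
    return (2.0 * x, 2.0 * y)


def project(q, tol=1e-10, max_iter=50):
    """Move q onto the set h(q) = 0 with Gauss-Newton steps."""
    for _ in range(max_iter):
        value = h(q)
        if abs(value) < tol:
            break
        gx, gy = grad_h(q)
        sq_norm = gx * gx + gy * gy
        if sq_norm == 0.0:
            raise ValueError("gradient vanished; cannot project from this point")
        # For a scalar constraint the pseudo-inverse step is h * grad / |grad|^2.
        step = value / sq_norm
        q = (q[0] - step * gx, q[1] - step * gy)
    return q


projected = project((2.0, 1.0))
```

In a sampling-based planner, a projection of this kind would typically be applied to each sampled or interpolated configuration before it is accepted as a valid point.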

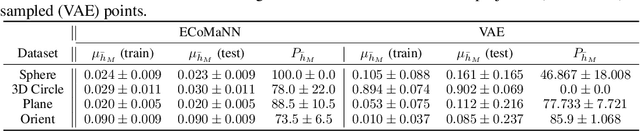

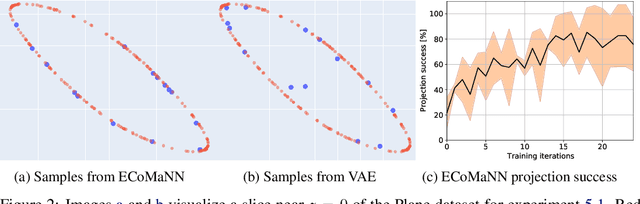

Learning Manifolds for Sequential Motion Planning

Jul 04, 2020

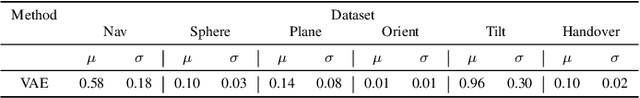

Abstract:Motion planning with constraints is an important part of many real-world robotic systems. In this work, we study manifold learning methods to learn such constraints from data. We explore two methods for learning implicit constraint manifolds from data: Variational Autoencoders (VAE), and a new method, Equality Constraint Manifold Neural Network (ECoMaNN). With the aim of incorporating learned constraints into a sampling-based motion planning framework, we evaluate the approaches on their ability to learn representations of constraints from various datasets and on the quality of paths produced during planning.

Supervised Learning and Reinforcement Learning of Feedback Models for Reactive Behaviors: Tactile Feedback Testbed

Jun 29, 2020

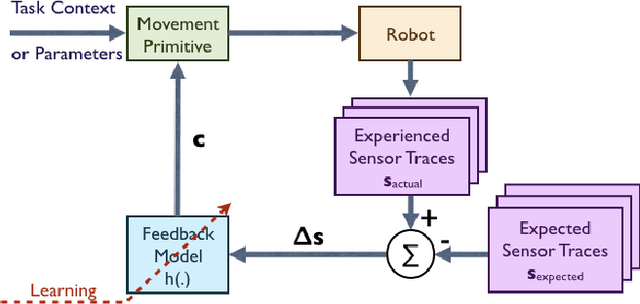

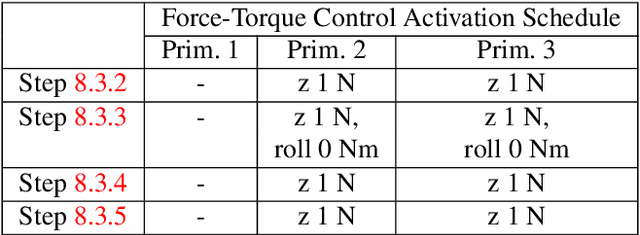

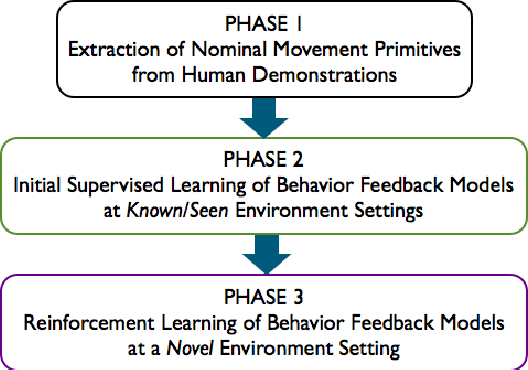

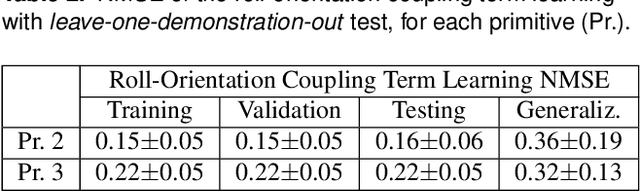

Abstract:Robots need to be able to adapt to unexpected changes in the environment such that they can autonomously succeed in their tasks. However, hand-designing feedback models for adaptation is tedious, if at all possible, making data-driven methods a promising alternative. In this paper we introduce a full framework for learning feedback models for reactive motion planning. Our pipeline starts by segmenting demonstrations of a complete task into motion primitives via a semi-automated segmentation algorithm. Then, given additional demonstrations of successful adaptation behaviors, we learn initial feedback models through learning from demonstrations. In the final phase, a sample-efficient reinforcement learning algorithm fine-tunes these feedback models for novel task settings through few real system interactions. We evaluate our approach on a real anthropomorphic robot in learning a tactile feedback task.

Encoding Physical Constraints in Differentiable Newton-Euler Algorithm

Feb 19, 2020

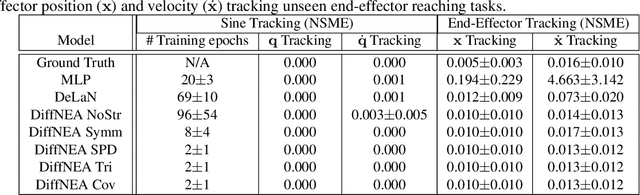

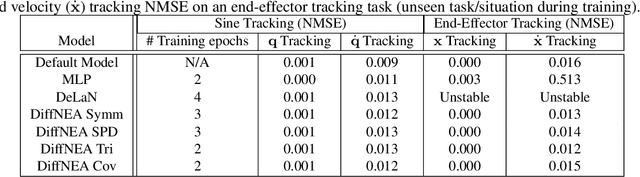

Abstract:The recursive Newton-Euler Algorithm (RNEA) is a popular technique in robotics for computing the dynamics of robots. The computed dynamics can then be used for torque control with inverse dynamics, or for forward dynamics computations. RNEA can be framed as a differentiable computational graph, enabling the dynamics parameters of the robot to be learned from data via modern auto-differentiation toolboxes. However, the dynamics parameters learned in this manner can be physically implausible. In this work, we incorporate physical constraints in the learning by adding structure to the learned parameters. This results in a framework that can learn physically plausible dynamics via gradient descent, improving the training speed as well as generalization of the learned dynamics models. We evaluate our method on real-time inverse dynamics predictions of a 7 degree of freedom robot arm, both in simulation and on the real robot. Our experiments study a spectrum of structure added to learned dynamics, and compare their performance and generalization.
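One common way to add structure to learned dynamics parameters is to optimize unconstrained values and map them to valid physical quantities. The sketch below is an assumption for illustration, not necessarily the parameterization used in the paper: mass is passed through a softplus so it stays positive, and the rotational inertia matrix is built from a lower-triangular factor with a positive diagonal, which makes it symmetric positive definite. The function names are hypothetical.

```python
import math


def softplus(z):
    """Numerically stable log(1 + exp(z))."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def mass_from_raw(raw):
    """Map an unconstrained scalar to a positive mass (assumed parameterization)."""
    return softplus(raw)


def inertia_from_raw(raw):
    """Map six unconstrained scalars to a symmetric positive definite 3x3 matrix.

    The first three entries become the (positive) diagonal of a lower-triangular
    factor L via exp; the last three fill the entries below the diagonal.
    The returned matrix is L @ L^T.
    """
    d0, d1, d2, l10, l20, l21 = raw
    factor = [
        [math.exp(d0), 0.0, 0.0],
        [l10, math.exp(d1), 0.0],
        [l20, l21, math.exp(d2)],
    ]
    return [
        [sum(factor[i][k] * factor[j][k] for k in range(3)) for j in range(3)]
        for i in range(3)
    ]


mass = mass_from_raw(-3.0)
inertia = inertia_from_raw((-1.0, 0.5, 0.0, 2.0, -3.0, 0.7))
```

Because any real-valued input maps to a valid output, gradient descent can run on the raw values without explicit constraints. Positive definiteness alone does not guarantee a physically realizable inertia matrix, since the principal moments must also satisfy the triangle inequalities; this sketch does not enforce that.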

Learning Latent Space Dynamics for Tactile Servoing

Apr 15, 2019

Abstract:To achieve dexterous robotic manipulation, we need to endow our robot with tactile feedback capability, i.e. the ability to drive action based on tactile sensing. In this paper, we specifically address the challenge of tactile servoing: given the current tactile sensing and a target tactile sensing, memorized from a successful task execution in the past, what action will bring the current tactile sensing closer to the target at the next time step? We develop a data-driven approach to acquire a dynamics model for tactile servoing by learning from demonstration. Moreover, our method represents the tactile sensing information as lying on a surface (a 2D manifold) and performs manifold learning, making it applicable to any tactile skin geometry. We evaluate our method on a contact point tracking task using a robot equipped with a tactile finger. A video demonstrating our approach can be seen at https://youtu.be/0QK0-Vx7WkI

Learning Sensor Feedback Models from Demonstrations via Phase-Modulated Neural Networks

Mar 15, 2018

Abstract:In order to robustly execute a task under environmental uncertainty, a robot needs to be able to reactively adapt to changes arising in its environment. These changes are usually reflected in deviations from expected sensory traces. Such deviations can be used to drive motion adaptation, and for this purpose a feedback model is required. The feedback model maps the deviations in sensory traces to the motion plan adaptation. In this paper, we develop a general data-driven framework for learning a feedback model from demonstrations. We utilize a variant of a radial basis function network structure, with movement phases as kernel centers, which can generally be applied to represent any feedback model for movement primitives. To demonstrate the effectiveness of our framework, we test it on the task of scraping on a tilt board. In this task, we learn a reactive policy in the form of orientation adaptation, based on deviations of tactile sensor traces. As a proof of concept of our method, we provide evaluations on an anthropomorphic robot. A video demonstrating our approach and its results can be seen at https://youtu.be/7Dx5imy1Kcw
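The idea of a radial basis function network with movement phases as kernel centers can be sketched roughly as follows. This is an assumed, simplified structure for illustration only, not the paper's exact architecture: normalized Gaussian kernels over the phase gate one hidden feature each, and the features depend only on the sensor deviation. All names and numeric values are hypothetical.

```python
import math


def phase_kernels(phase, centers, width):
    """Normalized Gaussian kernels centered at fixed movement phases."""
    raw = [math.exp(-width * (phase - c) ** 2) for c in centers]
    total = sum(raw)
    return [r / total for r in raw]


def feedback(phase, deviation, centers, width, hidden_weights, output_weights):
    """Map a sensor-trace deviation to a scalar adaptation, modulated by phase.

    Each hidden unit is tanh of a linear map of the deviation (no bias), so a
    zero deviation always yields a zero adaptation regardless of the phase.
    """
    kernels = phase_kernels(phase, centers, width)
    hidden = [
        math.tanh(sum(w * d for w, d in zip(row, deviation)))
        for row in hidden_weights
    ]
    return sum(o * k * f for o, k, f in zip(output_weights, kernels, hidden))


# Hypothetical parameters: three kernels spread over the phase interval [0, 1].
centers = [0.0, 0.5, 1.0]
hidden_weights = [[0.4, -0.2], [0.1, 0.3], [-0.5, 0.2]]
output_weights = [1.0, -2.0, 0.5]

early = feedback(0.1, [0.3, -0.1], centers, 25.0, hidden_weights, output_weights)
late = feedback(0.9, [0.3, -0.1], centers, 25.0, hidden_weights, output_weights)
idle = feedback(0.5, [0.0, 0.0], centers, 25.0, hidden_weights, output_weights)
```

With this structure, the same sensor deviation produces different adaptations early and late in the movement, while a trace that matches expectation leaves the nominal plan unchanged.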

Learning Feedback Terms for Reactive Planning and Control

Mar 03, 2017

Abstract:With the advancement of robotics, machine learning, and machine perception, an increasing number of robots will enter human environments to assist with daily tasks. However, dynamically changing human environments require reactive motion plans. Reactivity can be accomplished through replanning, e.g. model-predictive control, or through a reactive feedback policy that modifies ongoing behavior in response to sensory events. In this paper, we investigate how to use machine learning to add reactivity to a previously learned nominal skilled behavior. We approach this by learning a reactive modification term for movement plans represented by nonlinear differential equations. In particular, we use dynamic movement primitives (DMPs) to represent a skill and a neural network to learn a reactive policy from human demonstrations. We use the well-explored domain of obstacle avoidance for robot manipulation as a test bed. Our approach demonstrates how a neural network can be combined with physical insights to ensure robust behavior across different obstacle settings and movement durations. Evaluations on an anthropomorphic robotic system demonstrate the effectiveness of our work.
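A reactive modification term for a movement plan expressed as a differential equation can be sketched with a one-dimensional DMP transformation system. This is a minimal illustration under stated assumptions: the learned forcing term is omitted, and `bump_coupling` is a hand-written, hypothetical stand-in for the neural network that would produce the coupling term from sensory input. Gains and step sizes are illustrative values.

```python
def rollout(goal, coupling, y0=0.0, tau=1.0, dt=0.001, duration=2.0,
            alpha_z=25.0, beta_z=6.25):
    """Integrate tau*dz = alpha_z*(beta_z*(goal - y) - z) + c, tau*dy = z.

    `coupling(t, y)` returns the reactive term c; explicit Euler integration.
    """
    y, z = y0, 0.0
    trajectory = [y]
    steps = int(round(duration / dt))
    for i in range(steps):
        t = i * dt
        c = coupling(t, y)
        dz = (alpha_z * (beta_z * (goal - y) - z) + c) / tau
        dy = z / tau
        z += dz * dt
        y += dy * dt
        trajectory.append(y)
    return trajectory


def no_coupling(t, y):
    """Nominal behavior: no reactive modification."""
    return 0.0


def bump_coupling(t, y):
    """Hypothetical stand-in for a learned policy: pushes between 0.2 s and 0.4 s."""
    return 50.0 if 0.2 <= t < 0.4 else 0.0


nominal = rollout(1.0, no_coupling)
reactive = rollout(1.0, bump_coupling)
max_deviation = max(abs(r - n) for r, n in zip(reactive, nominal))
```

The coupling term bends the trajectory while it is active, and the attractor dynamics pull the motion back to the goal once it vanishes, which is one reason such terms combine well with a nominal primitive.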
