Avinash Uttamchandani

Proximity and Visuotactile Point Cloud Fusion for Contact Patches in Extreme Deformation

Jul 07, 2023

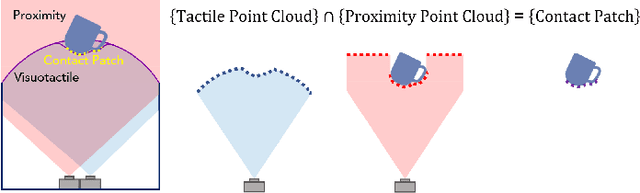

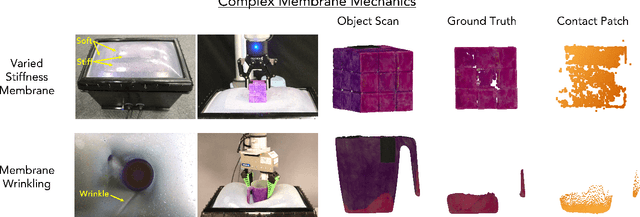

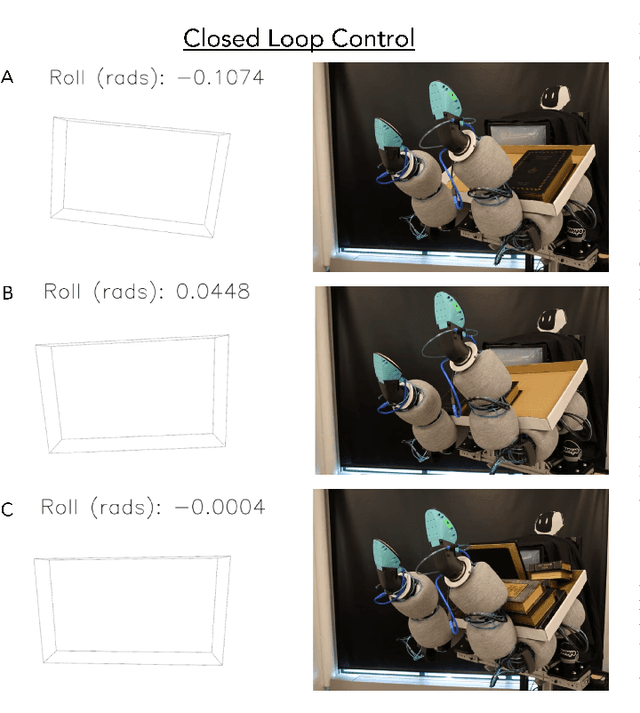

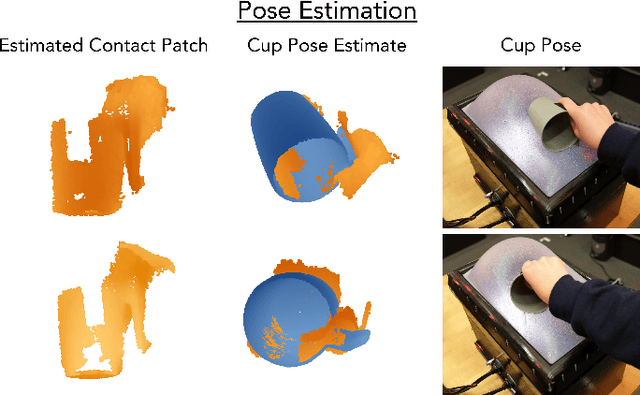

Abstract: Equipping robots with the sense of touch is critical to emulating the capabilities of humans in real-world manipulation tasks. Visuotactile sensors are a popular tactile sensing strategy because their data output is compatible with computer vision algorithms and they provide accurate, high-resolution estimates of local object geometry. However, these sensors struggle to accommodate high deformations of the sensing surface during object interactions, hindering more informative contact with cm-scale objects frequently encountered in the real world. The soft interfaces of visuotactile sensors are often made of hyperelastic elastomers, which are difficult to simulate quickly and accurately for tactile information when extremely deformed. Additionally, many visuotactile sensors that rely on strict internal light conditions or pattern tracking will fail if the surface is highly deformed. In this work, we propose an algorithm that fuses proximity and visuotactile point clouds for contact patch segmentation and is entirely independent of membrane mechanics. This algorithm exploits the synchronous, high-resolution proximity and visuotactile modalities enabled by an extremely deformable, selectively transmissive soft membrane, which uses visible light for visuotactile sensing and infrared light for proximity depth. We present the hardware design, membrane fabrication, and evaluation of our contact patch algorithm in low (10%), medium (60%), and high (100%+) membrane strain states. We compare our algorithm against three baselines: proximity-only, tactile-only, and a membrane mechanics model. Our proposed algorithm outperforms all baselines, with an average RMSE of the contact patch geometry under 2.8 mm across all strain ranges. We demonstrate our contact patch algorithm in four applications: varied stiffness membranes, torque and shear-induced wrinkling, closed-loop control for whole-body manipulation, and pose estimation.

Punyo-1: Soft tactile-sensing upper-body robot for large object manipulation and physical human interaction

Nov 17, 2021

Abstract: The manipulation of large objects and the ability to safely operate in the vicinity of humans are key capabilities of a general-purpose domestic robotic assistant. We present the design of a soft, tactile-sensing humanoid upper-body robot and demonstrate whole-body rich-contact manipulation strategies for handling large objects. We demonstrate our hardware design philosophy for outfitting off-the-shelf hard robot arms and other upper-body components with soft tactile-sensing modules, including: (i) low-cost, cut-resistant, contact pressure localizing coverings for the arms, (ii) paws based on TRI's Soft-bubble sensors for the end effectors, and (iii) compliant force/geometry sensors for the coarse geometry-sensing surface/chest. We leverage the mechanical intelligence and tactile sensing of these modules to develop and demonstrate motion primitives for whole-body grasping control. We evaluate the hardware's effectiveness in achieving grasps of varying strengths over a variety of large domestic objects. Our results demonstrate the importance of exploiting softness and tactile sensing in contact-rich manipulation strategies, as well as a path forward for whole-body force-controlled interactions with the world.

Soft-Bubble grippers for robust and perceptive manipulation

Apr 28, 2020

Abstract: Manipulation in cluttered environments like homes requires stable grasps, precise placement, and robustness against external contact. We present the Soft-Bubble gripper system with a highly compliant gripping surface and dense-geometry visuotactile sensing, capable of multiple kinds of tactile perception. We first present various mechanical design advances and a fabrication technique to deposit custom patterns onto the internal surface of the sensor that enable tracking of shear-induced displacement of the manipuland. The depth maps output by the internal imaging sensor are used in an in-hand proximity pose estimation framework; the method better captures distances to corners or edges on the manipuland geometry. We also extend our previous work on tactile classification and integrate the system within a robust manipulation pipeline for cluttered home environments. The capabilities of the proposed system are demonstrated through robust execution of multiple real-world manipulation tasks. A video of the system in action can be found here: [https://youtu.be/G_wBsbQyBfc].
