Naveen Kuppuswamy

PolyTouch: A Robust Multi-Modal Tactile Sensor for Contact-rich Manipulation Using Tactile-Diffusion Policies

Apr 27, 2025

Abstract:Achieving robust dexterous manipulation in unstructured domestic environments remains a significant challenge in robotics. Even with state-of-the-art robot learning methods, haptic-oblivious control strategies (i.e. those relying only on external vision and/or proprioception) often fall short due to occlusions, visual complexities, and the need for precise contact interaction control. To address these limitations, we introduce PolyTouch, a novel robot finger that integrates camera-based tactile sensing, acoustic sensing, and peripheral visual sensing into a single design that is compact and durable. PolyTouch provides high-resolution tactile feedback across multiple temporal scales, which is essential for efficiently learning complex manipulation tasks. Experiments demonstrate an at least 20-fold increase in lifespan over commercial tactile sensors, with a design that is both easy to manufacture and scalable. We then use this multi-modal tactile feedback along with visuo-proprioceptive observations to synthesize a tactile-diffusion policy from human demonstrations; the resulting contact-aware control policy significantly outperforms haptic-oblivious policies in multiple contact-aware manipulation tasks. This paper highlights how effectively integrating multi-modal contact sensing can hasten the development of effective contact-aware manipulation policies, paving the way for more reliable and versatile domestic robots. More information can be found at https://polytouch.alanz.info/

Adaptive Compliance Policy: Learning Approximate Compliance for Diffusion Guided Control

Oct 12, 2024

Abstract:Compliance plays a crucial role in manipulation, as it balances between the concurrent control of position and force under uncertainties. Yet compliance is often overlooked by today's visuomotor policies that solely focus on position control. This paper introduces Adaptive Compliance Policy (ACP), a novel framework that learns to dynamically adjust system compliance both spatially and temporally for given manipulation tasks from human demonstrations, improving upon previous approaches that rely on pre-selected compliance parameters or assume uniform constant stiffness. However, computing full compliance parameters from human demonstrations is an ill-defined problem. Instead, we estimate an approximate compliance profile with two useful properties: avoiding large contact forces and encouraging accurate tracking. Our approach enables robots to handle complex contact-rich manipulation tasks and achieves over 50% performance improvement compared to state-of-the-art visuomotor policy methods. For result videos, see https://adaptive-compliance.github.io/

Robot Learning as an Empirical Science: Best Practices for Policy Evaluation

Sep 14, 2024

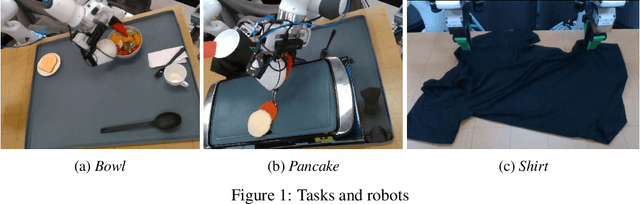

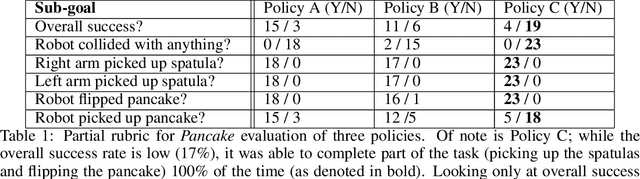

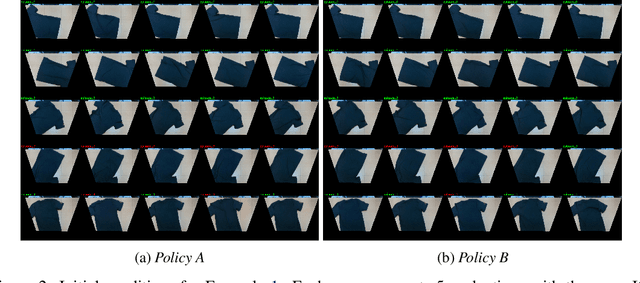

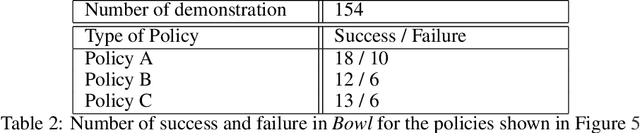

Abstract:The robot learning community has made great strides in recent years, proposing new architectures and showcasing impressive new capabilities; however, the dominant metric used in the literature, especially for physical experiments, is "success rate", i.e. the percentage of runs that were successful. Furthermore, it is common for papers to report this number with little to no information regarding the number of runs, the initial conditions, and the success criteria, little to no narrative description of the behaviors and failures observed, and little to no statistical analysis of the findings. In this paper we argue that to move the field forward, researchers should provide a nuanced evaluation of their methods, especially when evaluating and comparing learned policies on physical robots. To do so, we propose best practices for future evaluations: explicitly reporting the experimental conditions, evaluating several metrics designed to complement success rate, conducting statistical analysis, and adding a qualitative description of failure modes. We illustrate these through an evaluation on physical robots of several learned policies for manipulation tasks.
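To illustrate why a bare success rate is hard to interpret without the number of runs, here is a minimal sketch that attaches a confidence interval to a success rate. The Wilson score interval is used as one common choice for binomial proportions; it is an assumption made for illustration, not necessarily the statistical analysis the paper recommends, and the function name `wilson_interval` is hypothetical.

```python
import math


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial success rate.

    z = 1.96 corresponds to an approximate 95% confidence level.
    This is an illustrative choice, not a method taken from the paper.
    """
    if trials <= 0:
        raise ValueError("trials must be positive")
    if not 0 <= successes <= trials:
        raise ValueError("successes must lie between 0 and trials")

    p_hat = successes / trials
    z2 = z * z
    denom = 1.0 + z2 / trials
    center = (p_hat + z2 / (2.0 * trials)) / denom
    half_width = z * math.sqrt(
        p_hat * (1.0 - p_hat) / trials + z2 / (4.0 * trials * trials)
    ) / denom

    # Clamp to [0, 1] to guard against floating-point drift at the extremes.
    return max(0.0, center - half_width), min(1.0, center + half_width)


if __name__ == "__main__":
    # The same 80% success rate is far less certain with 10 runs than with 100.
    for successes, trials in [(8, 10), (80, 100)]:
        low, high = wilson_interval(successes, trials)
        print(f"{successes}/{trials}: [{low:.3f}, {high:.3f}]")
```

With only 10 runs, the interval around an 80% success rate spans roughly half the unit range, which is the kind of uncertainty that a reported run count and statistical analysis make visible.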

Vegetable Peeling: A Case Study in Constrained Dexterous Manipulation

Jul 10, 2024

Abstract:Recent studies have made significant progress in addressing dexterous manipulation problems, particularly in in-hand object reorientation. However, there are few existing works that explore the potential utilization of developed dexterous manipulation controllers for downstream tasks. In this study, we focus on constrained dexterous manipulation for food peeling. Food peeling presents various constraints on the reorientation controller, such as the requirement for the hand to securely hold the object after reorientation for peeling. We propose a simple system for learning a reorientation controller that facilitates the subsequent peeling task. Videos are available at: https://taochenshh.github.io/projects/veg-peeling.

ManiWAV: Learning Robot Manipulation from In-the-Wild Audio-Visual Data

Jun 27, 2024

Abstract:Audio signals provide rich information about robot interaction and object properties through contact. This information can surprisingly ease the learning of contact-rich robot manipulation skills, especially when the visual information alone is ambiguous or incomplete. However, the usage of audio data in robot manipulation has been constrained to teleoperated demonstrations collected by either attaching a microphone to the robot or object, which significantly limits its usage in robot learning pipelines. In this work, we introduce ManiWAV: an 'ear-in-hand' data collection device to collect in-the-wild human demonstrations with synchronous audio and visual feedback, and a corresponding policy interface to learn a robot manipulation policy directly from the demonstrations. We demonstrate the capabilities of our system through four contact-rich manipulation tasks that require either passively sensing the contact events and modes, or actively sensing the object surface materials and states. In addition, we show that our system can generalize to unseen in-the-wild environments, by learning from diverse in-the-wild human demonstrations. Project website: https://mani-wav.github.io/

Stretch with Stretch: Physical Therapy Exercise Games Led by a Mobile Manipulator

Dec 21, 2023

Abstract:Physical therapy (PT) is a key component of many rehabilitation regimens, such as treatments for Parkinson's disease (PD). However, there are shortages of physical therapists and adherence to self-guided PT is low. Robots have the potential to support physical therapists and increase adherence to self-guided PT, but prior robotic systems have been large and immobile, which can be a barrier to use in homes and clinics. We present Stretch with Stretch (SWS), a novel robotic system for leading stretching exercise games for older adults with PD. SWS consists of a compact and lightweight mobile manipulator (Hello Robot Stretch RE1) that visually and verbally guides users through PT exercises. The robot's soft end effector serves as a target that users repetitively reach towards and press with a hand, foot, or knee. For each exercise, target locations are customized for the individual via a visually estimated kinematic model, a haptically estimated range of motion, and the person's exercise performance. The system includes sound effects and verbal feedback from the robot to keep users engaged throughout a session and augment physical exercise with cognitive exercise. We conducted a user study for which people with PD (n=10) performed 6 exercises with the system. Participants perceived the SWS to be useful and easy to use. They also reported mild to moderate perceived exertion (RPE).

Proximity and Visuotactile Point Cloud Fusion for Contact Patches in Extreme Deformation

Jul 07, 2023

Abstract:Equipping robots with the sense of touch is critical to emulating the capabilities of humans in real world manipulation tasks. Visuotactile sensors are a popular tactile sensing strategy due to data output compatible with computer vision algorithms and accurate, high resolution estimates of local object geometry. However, these sensors struggle to accommodate high deformations of the sensing surface during object interactions, hindering more informative contact with cm-scale objects frequently encountered in the real world. The soft interfaces of visuotactile sensors are often made of hyperelastic elastomers, which are difficult to simulate quickly and accurately when extremely deformed for tactile information. Additionally, many visuotactile sensors that rely on strict internal light conditions or pattern tracking will fail if the surface is highly deformed. In this work, we propose an algorithm that fuses proximity and visuotactile point clouds for contact patch segmentation that is entirely independent from membrane mechanics. This algorithm exploits the synchronous, high-res proximity and visuotactile modalities enabled by an extremely deformable, selectively transmissive soft membrane, which uses visible light for visuotactile sensing and infrared light for proximity depth. We present the hardware design, membrane fabrication, and evaluation of our contact patch algorithm in low (10%), medium (60%), and high (100%+) membrane strain states. We compare our algorithm against three baselines: proximity-only, tactile-only, and a membrane mechanics model. Our proposed algorithm outperforms all baselines with an average RMSE under 2.8mm of the contact patch geometry across all strain ranges. We demonstrate our contact patch algorithm in four applications: varied stiffness membranes, torque and shear-induced wrinkling, closed loop control for whole body manipulation, and pose estimation.

Punyo-1: Soft tactile-sensing upper-body robot for large object manipulation and physical human interaction

Nov 17, 2021

Abstract:The manipulation of large objects and the ability to safely operate in the vicinity of humans are key capabilities of a general purpose domestic robotic assistant. We present the design of a soft, tactile-sensing humanoid upper-body robot and demonstrate whole-body rich-contact manipulation strategies for handling large objects. We demonstrate our hardware design philosophy for outfitting off-the-shelf hard robot arms and other upper-body components with soft tactile-sensing modules, including: (i) low-cost, cut-resistant, contact pressure localizing coverings for the arms, (ii) paws based on TRI's Soft-bubble sensors for the end effectors, and (iii) compliant force/geometry sensors for the coarse geometry-sensing surface/chest. We leverage the mechanical intelligence and tactile sensing of these modules to develop and demonstrate motion primitives for whole-body grasping control. We evaluate the hardware's effectiveness in achieving grasps of varying strengths over a variety of large domestic objects. Our results demonstrate the importance of exploiting softness and tactile sensing in contact-rich manipulation strategies, as well as a path forward for whole-body force-controlled interactions with the world.

SEED: Series Elastic End Effectors in 6D for Visuotactile Tool Use

Nov 02, 2021

Abstract:We propose the framework of Series Elastic End Effectors in 6D (SEED), which combines a spatially compliant element with visuotactile sensing to grasp and manipulate tools in the wild. Our framework generalizes the benefits of series elasticity to 6-dof, while providing an abstraction of control using visuotactile sensing. We propose an algorithm for relative pose estimation from visuotactile sensing, and a spatial hybrid force-position controller capable of achieving stable force interaction with the environment. We demonstrate the effectiveness of our framework on tools that require regulation of spatial forces. Video link: https://youtu.be/2-YuIfspDrk

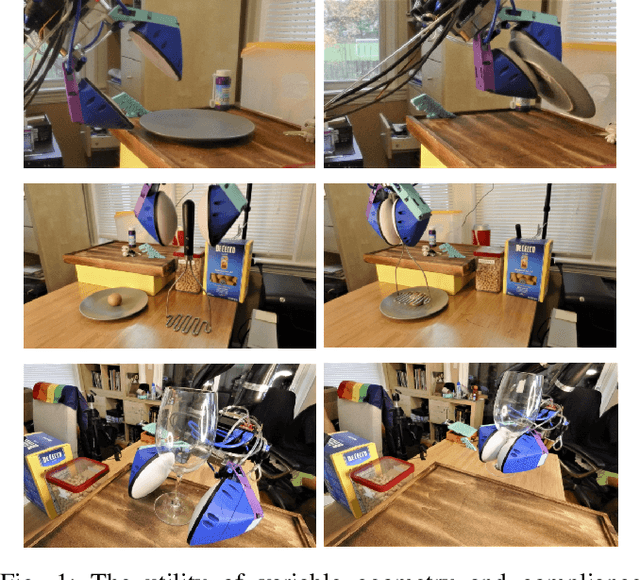

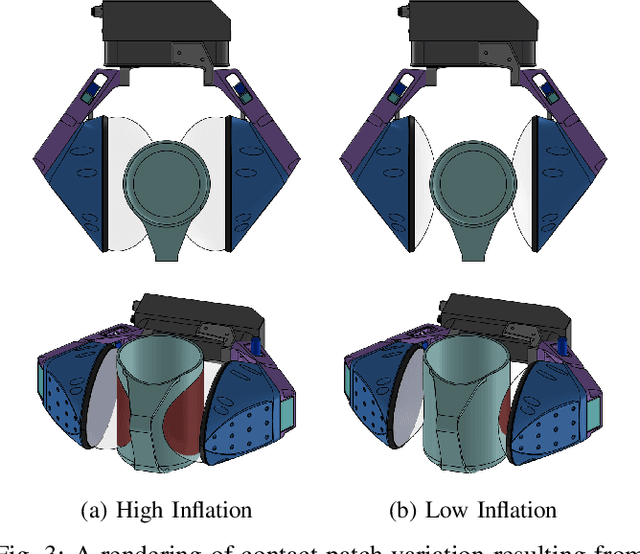

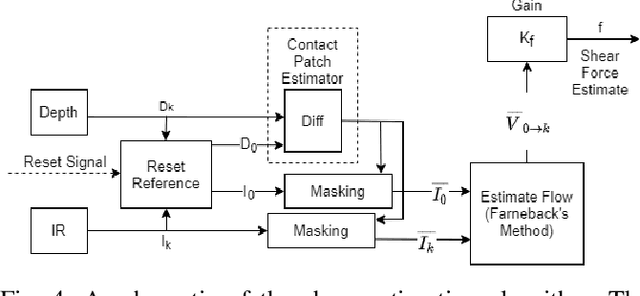

Variable compliance and geometry regulation of Soft-Bubble grippers with active pressure control

Mar 15, 2021

Abstract:While compliant grippers have become increasingly commonplace in robot manipulation, finding the right stiffness and geometry for grasping the widest variety of objects remains a key challenge. Adjusting both stiffness and gripper geometry on the fly may provide the versatility needed to manipulate the large range of objects found in domestic environments. We present a system for actively controlling the geometry (inflation level) and compliance of Soft-bubble grippers - air filled, highly compliant parallel gripper fingers incorporating visuotactile sensing. The proposed system enables large, controlled changes in gripper finger geometry and grasp stiffness, as well as simple in-hand manipulation. We also demonstrate, despite these changes, the continued viability of advanced perception capabilities such as dense geometry and shear force measurement - we present a straightforward extension of our previously presented approach for measuring shear induced displacements using the internal imaging sensor and taking into account pressure and geometry changes. We quantify the controlled variation of grasp-free geometry, grasp stiffness and contact patch geometry resulting from pressure regulation and we demonstrate new capabilities for the gripper in the home by grasping in constrained spaces, manipulating tools requiring lower and higher stiffness grasps, as well as contact patch modulation.
