James Pikul

Active Learning Design: Modeling Force Output for Axisymmetric Soft Pneumatic Actuators

Apr 01, 2025

Abstract: Soft pneumatic actuators (SPAs) made from elastomeric materials can provide large strain and large force. The behavior of locally strain-restricted hyperelastic materials under inflation has been investigated thoroughly for shape reconfiguration, but trajectories involving external forces require further investigation. In this work, we model force-pressure-height relationships for a class of concentrically strain-limited soft pneumatic actuators and demonstrate the use of this model to design SPA response for object lifting. We predict these relationships under different loadings by solving energy minimization equations and verify this theory with an automated test rig that collects rich data for n=22 Ecoflex 00-30 membranes. We collect these data using an active learning pipeline to efficiently model the design space. We show that the learned material model outperforms both the theory-based model and naive curve-fitting approaches. We use our model to optimize membrane design for different lift tasks and compare its performance to that of other designs. These contributions represent a step towards understanding the natural response of this class of actuator and embodying intelligent lifts in a single-pressure-input actuator system.
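
The abstract does not state which acquisition rule the active learning pipeline uses. As a rough illustration only, the sketch below shows one common strategy, bootstrap-ensemble uncertainty sampling (query-by-committee): fit several least-squares models of membrane height versus (pressure, load) on resampled data, then test next wherever the models disagree most. The quadratic model form, the normalized inputs, the synthetic data, and the names design_matrix, fit_ensemble, and next_query are all hypothetical assumptions, not the authors' method.

```python
import numpy as np

def design_matrix(X):
    # Hypothetical quadratic model of membrane height in (pressure, load); an assumption.
    p, f = X[:, 0], X[:, 1]
    return np.column_stack([np.ones_like(p), p, f, p * f, p**2, f**2])

def fit_ensemble(X, y, n_models=10, seed=0):
    # Least-squares fits on bootstrap resamples of the measured data.
    rng = np.random.default_rng(seed)
    coefs = []
    for _ in range(n_models):
        idx = rng.integers(0, len(X), size=len(X))
        coef, *_ = np.linalg.lstsq(design_matrix(X[idx]), y[idx], rcond=None)
        coefs.append(coef)
    return np.array(coefs)  # shape (n_models, n_features)

def next_query(coefs, candidates):
    # Pick the untested point where the ensemble's predictions disagree most.
    preds = design_matrix(candidates) @ coefs.T  # shape (n_candidates, n_models)
    return candidates[np.argmax(preds.std(axis=1))]

# Synthetic example: 12 "measured" points, inputs normalized to [0, 1].
rng = np.random.default_rng(1)
X = rng.uniform(0.0, 1.0, size=(12, 2))
y = 2.0 * X[:, 0] - 0.5 * X[:, 1] + 0.05 * rng.standard_normal(12)
grid = np.array([[p, f] for p in np.linspace(0, 1, 11) for f in np.linspace(0, 1, 11)])
q = next_query(fit_ensemble(X, y), grid)
print(q)  # next (pressure, load) setting to test on the rig
```

In a loop of this kind, the rig would measure height at q, the new sample would be appended to X and y, and the ensemble would be refit before choosing the next point.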

Learning In-Hand Translation Using Tactile Skin With Shear and Normal Force Sensing

Jul 10, 2024

Abstract: Recent progress in reinforcement learning (RL) and tactile sensing has significantly advanced dexterous manipulation. However, these methods often use simplified tactile signals because of the gap between tactile simulation and the real world. We introduce a sensor model for tactile skin that enables zero-shot sim-to-real transfer of ternary shear and binary normal forces. Using this model, we develop an RL policy that leverages sliding contact for dexterous in-hand translation. We conduct extensive real-world experiments to assess how tactile sensing facilitates policy adaptation to various unseen object properties and robot hand orientations. We demonstrate that our 3-axis tactile policies consistently outperform baselines that use only shear forces, only normal forces, or only proprioception. Website: https://jessicayin.github.io/tactile-skin-rl/
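
To make "ternary shear and binary normal forces" concrete, the sketch below discretizes raw 3-axis taxel readings into a sign-like shear signal (-1, 0, +1) per shear axis and an on/off normal-contact signal. It is only an illustration of that signal representation under stated assumptions: the column layout (shear x, shear y, normal), the threshold values, and the function name discretize_tactile are hypothetical, and the paper's actual sensor model may differ.

```python
import numpy as np

def discretize_tactile(forces, shear_thresh=0.1, normal_thresh=0.2):
    """Map raw (fx, fy, fz) taxel readings to ternary shear and binary normal signals.

    forces: array of shape (n_taxels, 3); columns assumed to be shear x, shear y, normal.
    Thresholds (newtons) are illustrative placeholders, not calibrated values.
    """
    forces = np.asarray(forces, dtype=float)
    shear = forces[:, :2]
    # Ternary shear: sign of the shear component once its magnitude exceeds the threshold, else 0.
    shear_ternary = np.where(np.abs(shear) > shear_thresh, np.sign(shear), 0).astype(int)
    # Binary normal: 1 if the normal force exceeds the threshold (contact), else 0.
    normal_binary = (forces[:, 2] > normal_thresh).astype(int)
    return shear_ternary, normal_binary

readings = np.array([[0.30, -0.05, 0.50],
                     [-0.25, 0.15, 0.05],
                     [0.02, 0.00, 0.00]])
s, n = discretize_tactile(readings)
print(s.tolist(), n.tolist())  # [[1, 0], [-1, 1], [0, 0]] [1, 0, 0]
```

Coarse signals like these are easier to reproduce in simulation than continuous force values, which is one plausible reason such a representation can narrow the sim-to-real gap.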

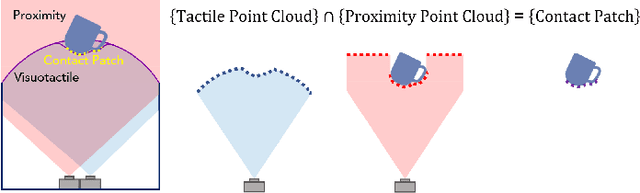

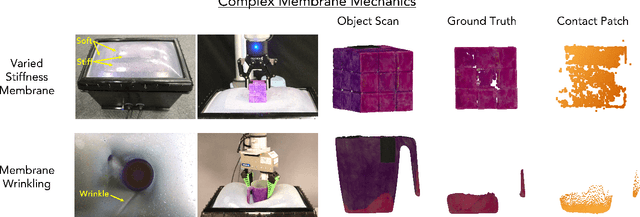

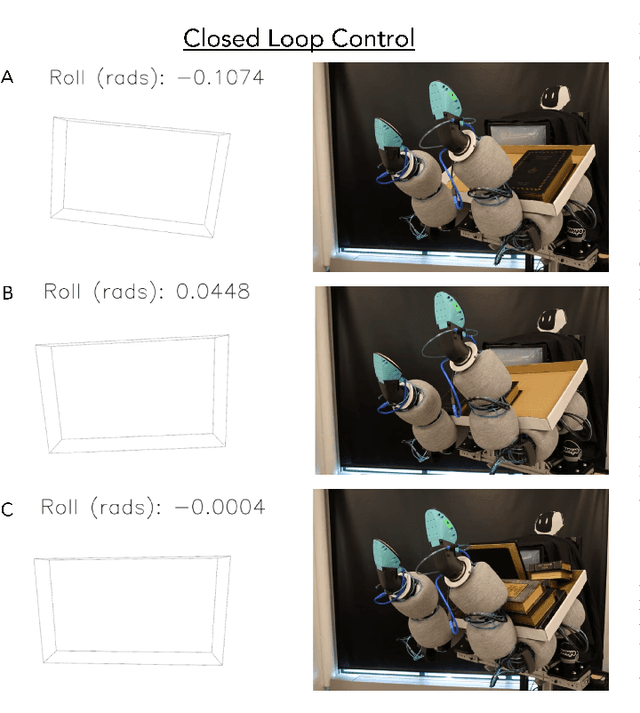

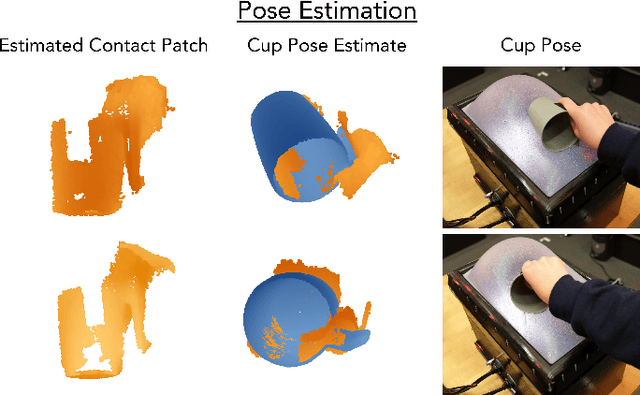

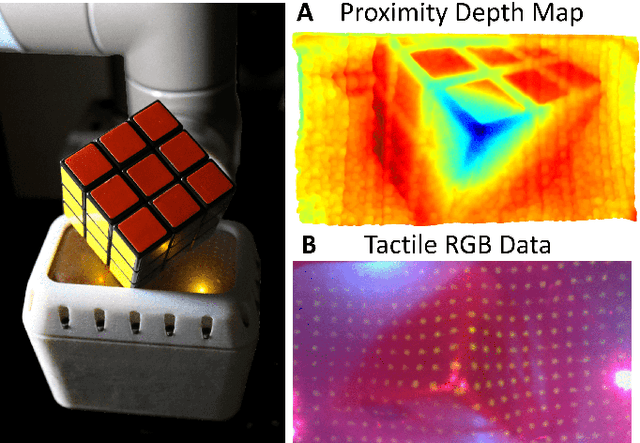

Proximity and Visuotactile Point Cloud Fusion for Contact Patches in Extreme Deformation

Jul 07, 2023

Abstract: Equipping robots with the sense of touch is critical to emulating human capabilities in real-world manipulation tasks. Visuotactile sensors are a popular tactile sensing strategy because their data output is compatible with computer vision algorithms and they provide accurate, high-resolution estimates of local object geometry. However, these sensors struggle to accommodate large deformations of the sensing surface during object interactions, which hinders more informative contact with the cm-scale objects frequently encountered in the real world. The soft interfaces of visuotactile sensors are often made of hyperelastic elastomers, which are difficult to simulate quickly and accurately enough to extract tactile information when they are extremely deformed. Additionally, many visuotactile sensors that rely on strict internal lighting conditions or pattern tracking will fail if the surface is highly deformed. In this work, we propose an algorithm that fuses proximity and visuotactile point clouds for contact patch segmentation and is entirely independent of membrane mechanics. This algorithm exploits the synchronous, high-resolution proximity and visuotactile modalities enabled by an extremely deformable, selectively transmissive soft membrane, which uses visible light for visuotactile sensing and infrared light for proximity depth. We present the hardware design, membrane fabrication, and evaluation of our contact patch algorithm in low (10%), medium (60%), and high (100%+) membrane strain states. We compare our algorithm against three baselines: proximity-only, tactile-only, and a membrane mechanics model. Our proposed algorithm outperforms all baselines, with an average RMSE of the contact patch geometry under 2.8 mm across all strain ranges. We demonstrate our contact patch algorithm in four applications: varied-stiffness membranes, torque- and shear-induced wrinkling, closed-loop control for whole-body manipulation, and pose estimation.
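
The abstract does not describe the fusion rule itself. As a hedged illustration of the general idea of segmenting a contact patch from two point clouds without any membrane model, the sketch below keeps visuotactile membrane points that lie within a distance tolerance of the proximity-sensed object surface, and scores an estimate with the RMSE of nearest-neighbor distances to a reference patch. The tolerance, the brute-force nearest-neighbor search, the toy data, and the names nearest_distances, segment_contact_patch, and patch_rmse are assumptions, not the authors' algorithm or evaluation protocol.

```python
import numpy as np

def nearest_distances(src, dst):
    # Euclidean distance from each point in src to its nearest neighbor in dst (brute force).
    diff = src[:, None, :] - dst[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1)).min(axis=1)

def segment_contact_patch(tactile_pts, proximity_pts, tol_mm=1.0):
    # Keep membrane points that lie within tol_mm of the proximity-sensed object surface.
    d = nearest_distances(tactile_pts, proximity_pts)
    return tactile_pts[d <= tol_mm]

def patch_rmse(estimate, reference):
    # RMSE (mm) of nearest-neighbor distances from estimated patch points to a reference patch.
    d = nearest_distances(estimate, reference)
    return float(np.sqrt(np.mean(d ** 2)))

# Toy data in mm: a flat membrane strip and an object surface seen 0.5 mm above part of it.
tactile = np.array([[x, 0.0, 0.0] for x in range(10)], dtype=float)
proximity = np.array([[x, 0.0, 0.5] for x in range(4)], dtype=float)
patch = segment_contact_patch(tactile, proximity)
print(len(patch), patch_rmse(patch, proximity))  # 4 0.5
```

For dense clouds, a spatial index such as a k-d tree would replace the O(N·M) brute-force search used here for brevity.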

Electroadhesive Clutches for Programmable Shape Morphing of Soft Actuators

Nov 14, 2022

Abstract: Soft robotic actuators are safe and adaptable devices with inherent compliance, which makes them attractive for manipulating delicate and complex objects. Researchers have integrated stiff materials into soft actuators to increase their force capacity and direct their deformation. However, these embedded materials have largely been pre-prescribed and static, which constrains the actuators to a predetermined range of motion. In this work, electroadhesive (EA) clutches integrated on a single-chamber soft pneumatic actuator (SPA) provide local, programmable stiffness modulation to control the actuator's deformation. We show that activating different clutch patterns inflates a silicone membrane into pyramidal, round, and plateau shapes. Curvatures from these shapes are combined during actuation to apply forces on both a 3.7 g and an 820 g object along five different degrees of freedom (DoF). The actuator workspace is up to 12 mm for light objects. Clutch deactivation, which results in local elastomeric expansion, rapidly applies forces of up to 3.2 N to an object resting on the surface and launches a 3.7 g object in controlled directions. The actuator also rotates the heavier 820 g object by 5 degrees and rapidly restores it to horizontal alignment after clutch deactivation. This actuator is fully powered by a 5 V battery, AA battery, DC-DC transformer, and 4.5 V (63 g) DC air pump. These results demonstrate a first step towards realizing a soft actuator with high-DoF shape change that preserves the inherent benefits of pneumatic actuation while gaining the electrical controllability and strength of EA clutches. We envision such a system supplying human contact forces in the form of a low-profile sit-to-stand assistance device, bedridden patient manipulator, or other ergonomic mechanism. This technology was also demonstrated at ICRA 2022: https://www.youtube.com/watch?v=6Y6-iHWNi6s

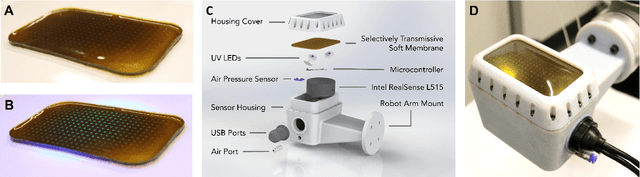

Multimodal Proximity and Visuotactile Sensing With a Selectively Transmissive Soft Membrane

Apr 18, 2022

Abstract: The most common sensing modalities in a robot perception system are vision and touch, which together can provide global and highly localized data for manipulation. However, these modalities often fail to adequately capture the behavior of target objects during the critical moments when they transition out of static, controlled contact with an end-effector into dynamic, uncontrolled motion. In this work, we present a novel multimodal visuotactile sensor that provides simultaneous visuotactile and proximity depth data. The sensor combines an RGB camera and an air pressure sensor, which sense touch, with an infrared time-of-flight (ToF) camera, which senses proximity; a selectively transmissive soft membrane enables both sensing modalities. We present the mechanical design, fabrication techniques, algorithm implementations, and evaluation of the sensor's tactile and proximity modalities. The sensor is demonstrated in three open-loop robotic tasks: approaching and contacting an object, catching, and throwing. The fusion of tactile and proximity data could be used to capture key information about a target object's transition behavior for sensor-based control in dynamic manipulation.
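
Proximity data from a ToF camera usually arrives as a depth image that must be converted into a 3D point cloud before it can be fused with tactile data. The sketch below shows the standard pinhole back-projection, x = (u - cx)·z / fx and y = (v - cy)·z / fy. The intrinsics (fx, fy, cx, cy), the use of meters, the treatment of zero depth as invalid, and the function name depth_to_points are illustrative assumptions; the abstract does not describe the sensor's actual calibration or processing pipeline.

```python
import numpy as np

def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth image (meters) into an (N, 3) point cloud with a pinhole model.

    Pixels with depth <= 0 are treated as invalid and dropped (an assumed convention).
    """
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # u: column index, v: row index
    z = depth
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    pts = np.stack([x, y, z], axis=-1).reshape(-1, 3)
    return pts[pts[:, 2] > 0]

depth = np.array([[0.10, 0.10],
                  [0.00, 0.20]])  # one invalid (zero) pixel
cloud = depth_to_points(depth, fx=100.0, fy=100.0, cx=0.5, cy=0.5)
print(cloud.shape)  # (3, 3)
```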
