Jie Mei

Sherman

A Cascaded Information Interaction Network for Precise Image Segmentation

Jan 02, 2026

Abstract:Visual perception plays a pivotal role in enabling autonomous behavior, offering a cost-effective and efficient alternative to complex multi-sensor systems. However, robust segmentation remains a challenge in complex scenarios. To address this, this paper proposes a cascaded convolutional neural network integrated with a novel Global Information Guidance Module. This module is designed to effectively fuse low-level texture details with high-level semantic features across multiple layers, thereby overcoming the inherent limitations of single-scale feature extraction. This architectural innovation significantly enhances segmentation accuracy, particularly in visually cluttered or blurred environments where traditional methods often fail. Experimental evaluations on benchmark image segmentation datasets demonstrate that the proposed framework achieves superior precision, outperforming existing state-of-the-art methods. The results highlight the effectiveness of the approach and its promising potential for deployment in practical robotic applications.

ISS Policy : Scalable Diffusion Policy with Implicit Scene Supervision

Dec 17, 2025

Abstract:Vision-based imitation learning has enabled impressive robotic manipulation skills, but its reliance on object appearance while ignoring the underlying 3D scene structure leads to low training efficiency and poor generalization. To address these challenges, we introduce Implicit Scene Supervision (ISS) Policy, a 3D visuomotor DiT-based diffusion policy that predicts sequences of continuous actions from point cloud observations. We extend DiT with a novel implicit scene supervision module that encourages the model to produce outputs consistent with the scene's geometric evolution, thereby improving the performance and robustness of the policy. Notably, ISS Policy achieves state-of-the-art performance on both single-arm manipulation tasks (MetaWorld) and dexterous hand manipulation (Adroit). In real-world experiments, it also demonstrates strong generalization and robustness. Additional ablation studies show that our method scales effectively with both data and parameters. Code and videos will be released.

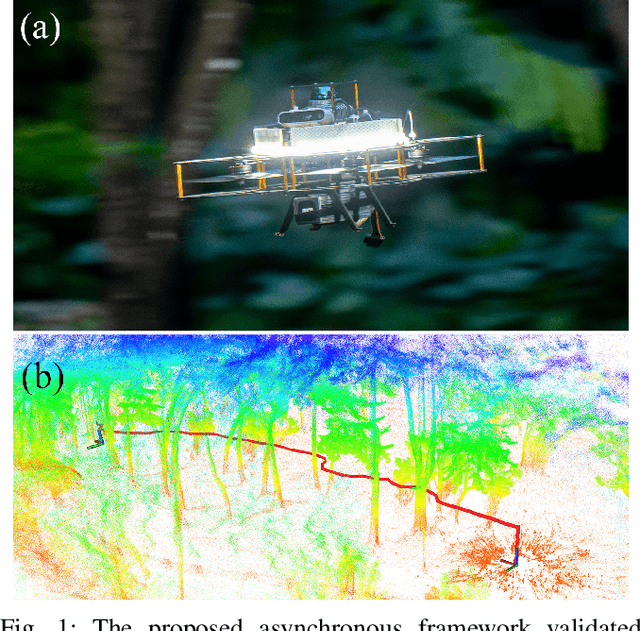

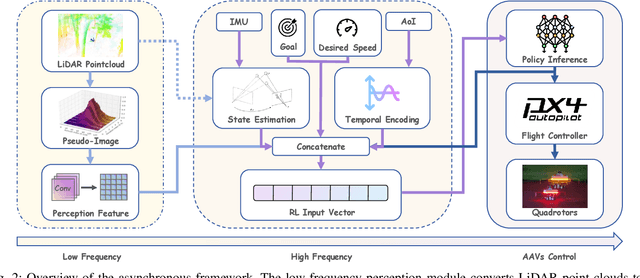

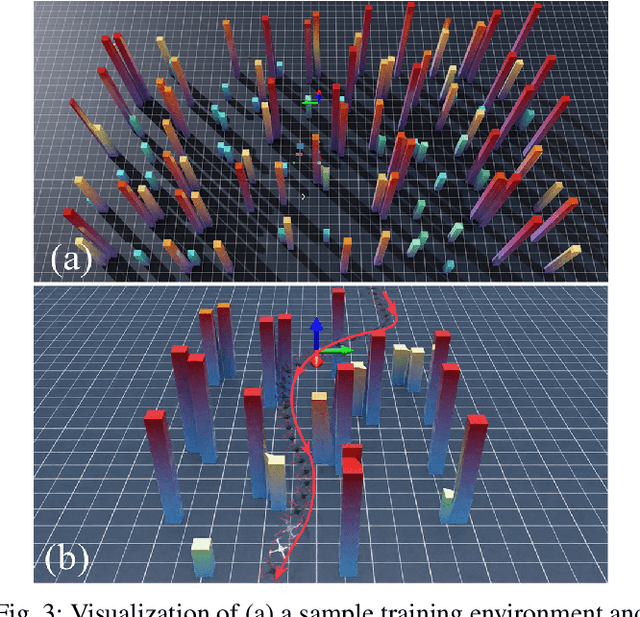

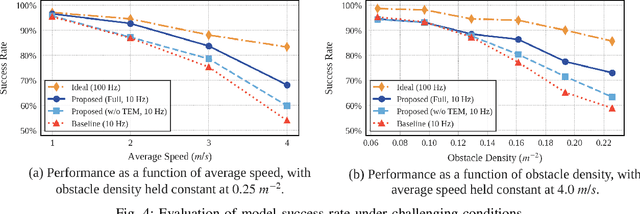

Agile in the Face of Delay: Asynchronous End-to-End Learning for Real-World Aerial Navigation

Sep 17, 2025

Abstract:Robust autonomous navigation for Autonomous Aerial Vehicles (AAVs) in complex environments is a critical capability. However, modern end-to-end navigation faces a key challenge: the high-frequency control loop needed for agile flight conflicts with low-frequency perception streams, which are limited by sensor update rates and significant computational cost. This mismatch forces conventional synchronous models into undesirably low control rates. To resolve this, we propose an asynchronous reinforcement learning framework that decouples perception and control, enabling a high-frequency policy to act on the latest IMU state for immediate reactivity, while incorporating perception features asynchronously. To manage the resulting data staleness, we introduce a theoretically grounded Temporal Encoding Module (TEM) that explicitly conditions the policy on perception delays, a strategy complemented by a two-stage curriculum to ensure stable and efficient training. Validated in extensive simulations, our method was successfully deployed in zero-shot sim-to-real transfer on an onboard NUC, where it sustains a 100 Hz control rate and demonstrates robust, agile navigation in cluttered real-world environments. Our source code will be released for community reference.

Kimi K2: Open Agentic Intelligence

Jul 28, 2025

Abstract:We introduce Kimi K2, a Mixture-of-Experts (MoE) large language model with 32 billion activated parameters and 1 trillion total parameters. We propose the MuonClip optimizer, which improves upon Muon with a novel QK-clip technique to address training instability while retaining the advanced token efficiency of Muon. Based on MuonClip, K2 was pre-trained on 15.5 trillion tokens with zero loss spikes. K2 then undergoes a multi-stage post-training process, highlighted by a large-scale agentic data synthesis pipeline and a joint reinforcement learning (RL) stage, where the model improves its capabilities through interactions with real and synthetic environments. Kimi K2 achieves state-of-the-art performance among open-source non-thinking models, with strengths in agentic capabilities. Notably, K2 obtains 66.1 on Tau2-Bench, 76.5 on ACEBench (En), 65.8 on SWE-Bench Verified, and 47.3 on SWE-Bench Multilingual, surpassing most open and closed-source baselines in non-thinking settings. It also exhibits strong capabilities in coding, mathematics, and reasoning tasks, scoring 53.7 on LiveCodeBench v6, 49.5 on AIME 2025, 75.1 on GPQA-Diamond, and 27.1 on OJBench, all without extended thinking. These results position Kimi K2 as one of the most capable open-source large language models to date, particularly in software engineering and agentic tasks. We release our base and post-trained model checkpoints to facilitate future research and applications of agentic intelligence.

VoI-Driven Joint Optimization of Control and Communication in Vehicular Digital Twin Network

May 12, 2025

Abstract:The vision of sixth-generation (6G) wireless networks paves the way for the seamless integration of digital twins into vehicular networks, giving rise to a Vehicular Digital Twin Network (VDTN). The abundant computing resources and massive spatial-temporal data in the Digital Twin (DT) domain can be utilized to enhance the communication and control performance of Internet of Vehicles (IoV) systems. In this article, we first propose the architecture of VDTN, emphasizing key modules that center on functions related to the joint optimization of control and communication. We then examine the multi-timescale decision process inherent in joint optimization in VDTN, specifically investigating the dynamic interplay between control and communication. To facilitate the joint optimization, we define two Value of Information (VoI) concepts rooted in control performance. Subsequently, using VoI as a bridge between control and communication, we introduce a novel joint optimization framework, which iterates between two Deep Reinforcement Learning (DRL) modules, one for control and one for communication, to derive the optimal policy. Finally, we conduct simulations of the proposed framework applied to a platoon scenario to demonstrate its effectiveness in ensu

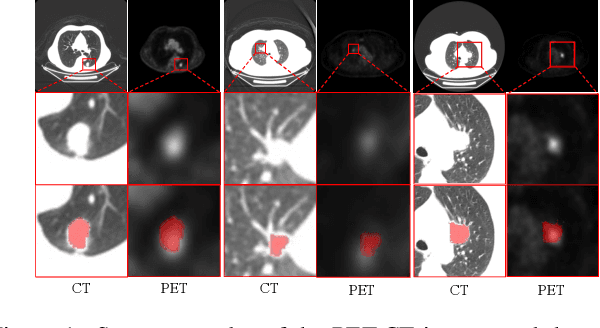

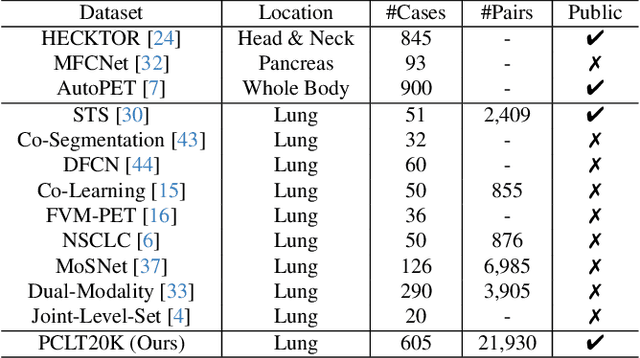

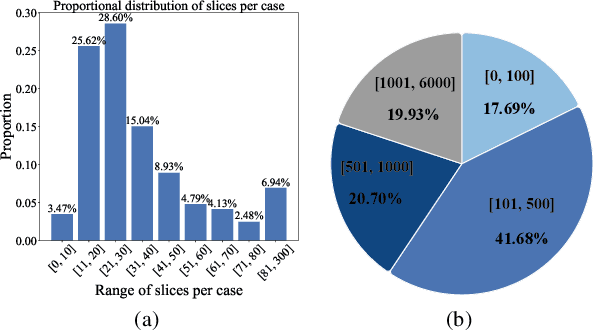

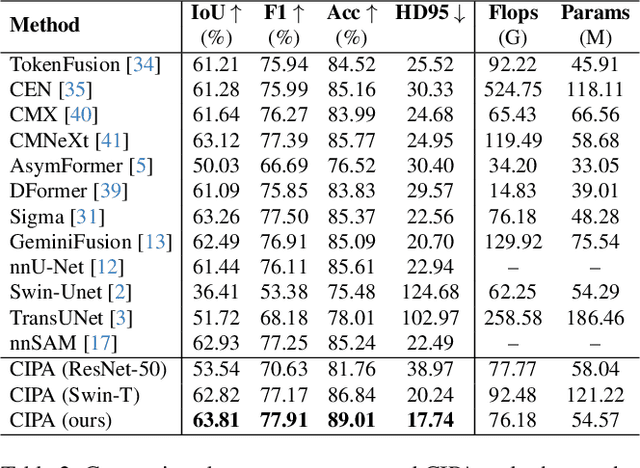

Cross-Modal Interactive Perception Network with Mamba for Lung Tumor Segmentation in PET-CT Images

Mar 21, 2025

Abstract:Lung cancer is a leading cause of cancer-related deaths globally. PET-CT is crucial for imaging lung tumors, providing essential metabolic and anatomical information, but it faces challenges such as poor image quality, motion artifacts, and complex tumor morphology. Deep learning-based models are expected to address these problems; however, existing small-scale and private datasets limit significant performance improvements for these methods. Hence, we introduce a large-scale PET-CT lung tumor segmentation dataset, termed PCLT20K, which comprises 21,930 pairs of PET-CT images from 605 patients. Furthermore, we propose a cross-modal interactive perception network with Mamba (CIPA) for lung tumor segmentation in PET-CT images. Specifically, we design a channel-wise rectification module (CRM) that implements a channel state space block across multi-modal features to learn correlated representations and help filter out modality-specific noise. A dynamic cross-modality interaction module (DCIM) is designed to effectively integrate position and context information; it employs PET images to learn regional position information and serves as a bridge to assist in modeling the relationships between local features of CT images. Extensive experiments on a comprehensive benchmark demonstrate the effectiveness of our CIPA compared to the current state-of-the-art segmentation methods. We hope our research can provide more exploration opportunities for medical image segmentation. The dataset and code are available at https://github.com/mj129/CIPA.

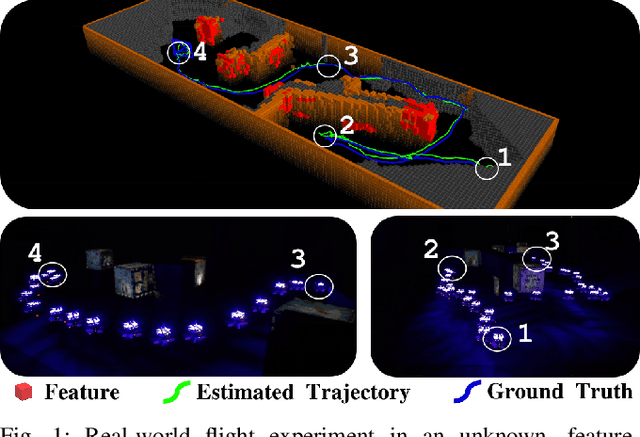

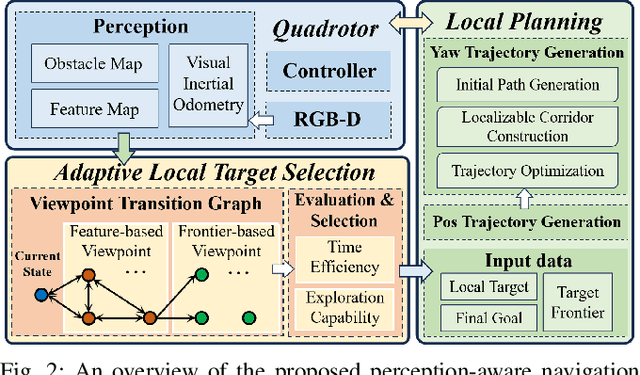

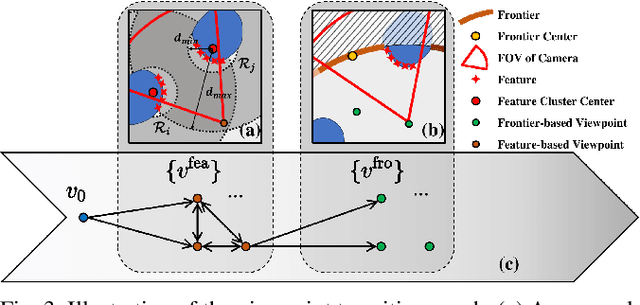

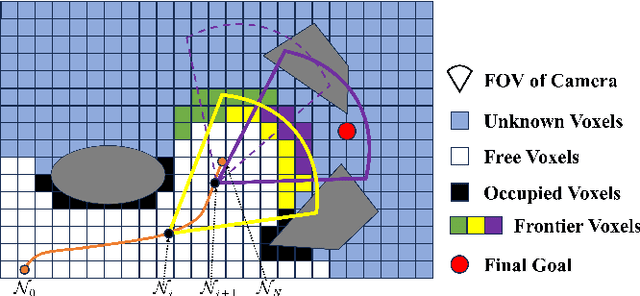

Perception-aware Planning for Quadrotor Flight in Unknown and Feature-limited Environments

Mar 19, 2025

Abstract:Various perception-aware planning methods have been proposed to enhance the state estimation accuracy of quadrotors in visually degraded environments. However, many existing methods rely heavily on prior environmental knowledge and face significant limitations in previously unknown environments with sparse localization features, which greatly limits their practical application. In this paper, we present a perception-aware planning method for quadrotor flight in unknown and feature-limited environments that properly allocates perception resources among environmental information during navigation. We introduce a viewpoint transition graph that allows for the adaptive selection of local target viewpoints, which guide the quadrotor to efficiently navigate to the goal while maintaining sufficient localizability and without being trapped in feature-limited regions. For local planning, we present a novel yaw trajectory generation method that simultaneously considers exploration capability and localizability. It constructs a localizable corridor via feature co-visibility evaluation to ensure localization robustness in a computationally efficient way. Through validations conducted in both simulation and real-world experiments, we demonstrate the feasibility and real-time performance of the proposed method. The source code will be released to benefit the community.

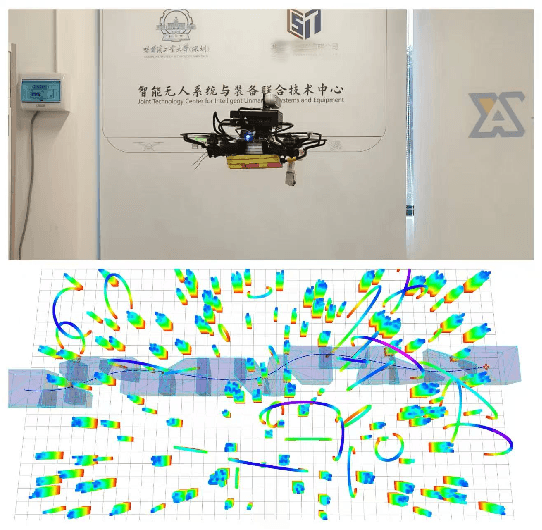

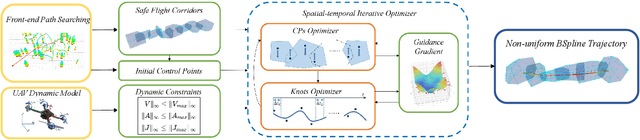

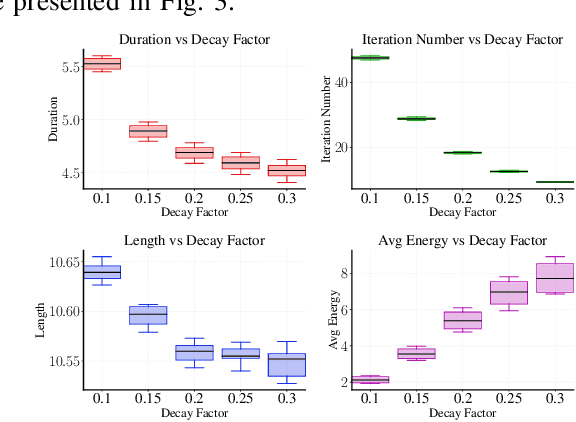

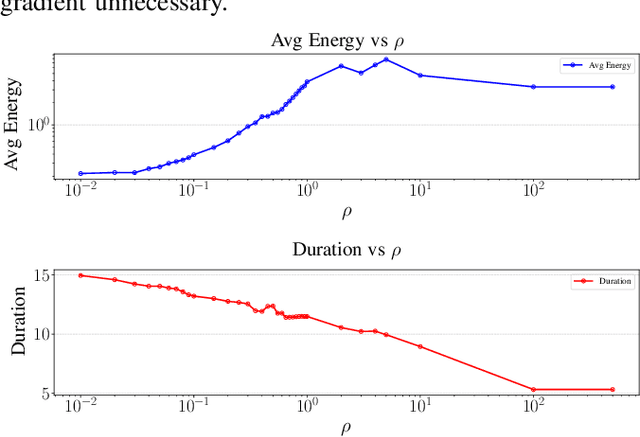

STORM: Spatial-Temporal Iterative Optimization for Reliable Multicopter Trajectory Generation

Mar 05, 2025

Abstract:Efficient and safe trajectory planning plays a critical role in the application of quadrotor unmanned aerial vehicles (UAVs). Currently, the inherent trade-off between constraint compliance and computational efficiency in UAV trajectory optimization has not been sufficiently addressed. To enhance the performance of UAV trajectory optimization, we propose a spatial-temporal iterative optimization framework. Firstly, B-splines are utilized to represent UAV trajectories, with rigorous safety assurance achieved through strict enforcement of constraints on control points. Subsequently, a set of QP-LP subproblems is derived via spatial-temporal decoupling and constraint linearization. Finally, an iterative optimization strategy incorporating guidance gradients is employed to obtain high-performance UAV trajectories in different scenarios. Both simulation and real-world experimental results validate the efficiency and high performance of the proposed optimization framework in generating safe and fast trajectories. Our source code will be released for community reference at https://hitsz-mas.github.io/STORM

Improving the adaptive and continuous learning capabilities of artificial neural networks: Lessons from multi-neuromodulatory dynamics

Jan 12, 2025

Abstract:Continuous, adaptive learning, the ability to adapt to the environment and improve performance, is a hallmark of both natural and artificial intelligence. Biological organisms excel in acquiring, transferring, and retaining knowledge while adapting to dynamic environments, making them a rich source of inspiration for artificial neural networks (ANNs). This study explores how neuromodulation, a fundamental feature of biological learning systems, can help address challenges such as catastrophic forgetting and enhance the robustness of ANNs in continuous learning scenarios. Driven by neuromodulators including dopamine (DA), acetylcholine (ACh), serotonin (5-HT) and noradrenaline (NA), neuromodulatory processes in the brain operate at multiple scales, facilitating dynamic responses to environmental changes through mechanisms ranging from local synaptic plasticity to global network-wide adaptability. Importantly, the relationships among neuromodulators, and their interplay in the modulation of sensory and cognitive processes, are more complex than expected, demonstrating a "many-to-one" neuromodulator-to-task mapping. To inspire the design of novel neuromodulation-aware learning rules, we highlight (i) how multi-neuromodulatory interactions enrich single-neuromodulator-driven learning, (ii) the impact of neuromodulators at multiple spatial and temporal scales, and correspondingly, (iii) strategies to integrate neuromodulated learning into ANNs or approximate it in them. To illustrate these principles, we present a case study demonstrating how neuromodulation-inspired mechanisms, such as DA-driven reward processing and NA-based cognitive flexibility, can enhance ANN performance in a Go/No-Go task. By integrating multi-scale neuromodulation, we aim to bridge the gap between biological learning and artificial systems, paving the way for ANNs with greater flexibility, robustness, and adaptability.

Developing a Reliable, General-Purpose Hallucination Detection and Mitigation Service: Insights and Lessons Learned

Jul 22, 2024

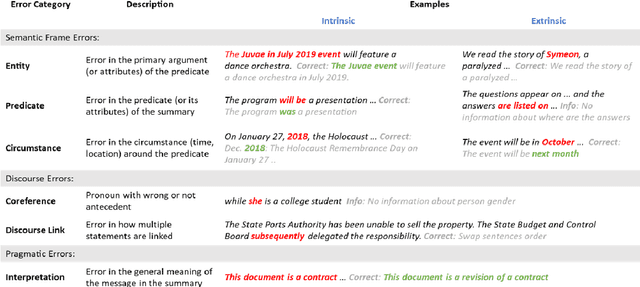

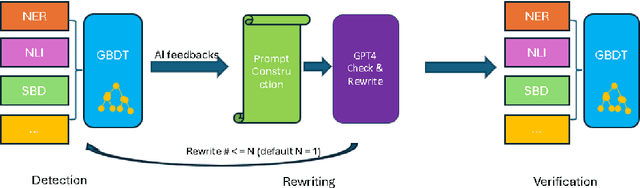

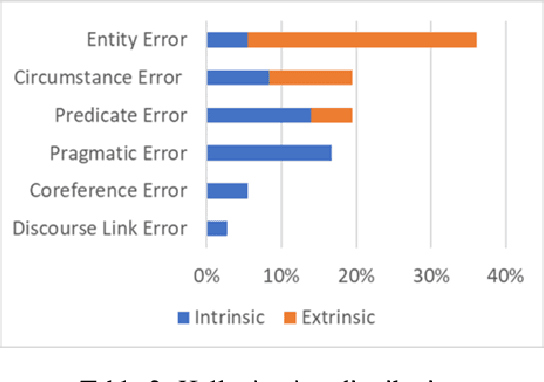

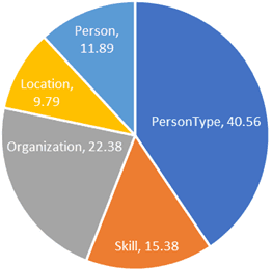

Abstract:Hallucination, a phenomenon where large language models (LLMs) produce output that is factually incorrect or unrelated to the input, is a major challenge for LLM applications that require accuracy and dependability. In this paper, we introduce a reliable and high-speed production system aimed at detecting and rectifying the hallucination issue within LLMs. Our system encompasses named entity recognition (NER), natural language inference (NLI), span-based detection (SBD), and an intricate decision tree-based process to reliably detect a wide range of hallucinations in LLM responses. Furthermore, our team has crafted a rewriting mechanism that maintains an optimal mix of precision, response time, and cost-effectiveness. We detail the core elements of our framework and underscore the paramount challenges tied to response time, availability, and performance metrics, which are crucial for real-world deployment of these technologies. Our extensive evaluation, utilizing offline data and live production traffic, confirms the efficacy of our proposed framework and service.
