Weiren Wu

A Cascaded Information Interaction Network for Precise Image Segmentation

Jan 02, 2026

Abstract: Visual perception plays a pivotal role in enabling autonomous behavior, offering a cost-effective and efficient alternative to complex multi-sensor systems. However, robust segmentation remains a challenge in complex scenarios. To address this, this paper proposes a cascaded convolutional neural network integrated with a novel Global Information Guidance Module. This module is designed to effectively fuse low-level texture details with high-level semantic features across multiple layers, thereby overcoming the inherent limitations of single-scale feature extraction. This architectural innovation significantly enhances segmentation accuracy, particularly in visually cluttered or blurred environments where traditional methods often fail. Experimental evaluations on benchmark image segmentation datasets demonstrate that the proposed framework achieves superior precision, outperforming existing state-of-the-art methods. The results highlight the effectiveness of the approach and its promising potential for deployment in practical robotic applications.

Cascaded Interaction with Eroded Deep Supervision for Salient Object Detection

Nov 30, 2023

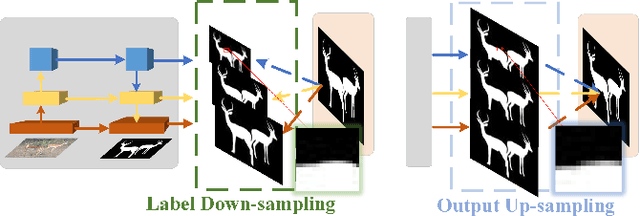

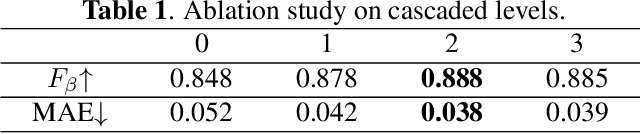

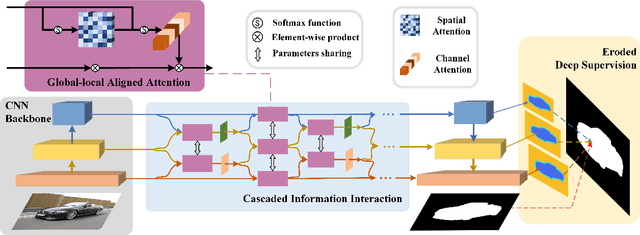

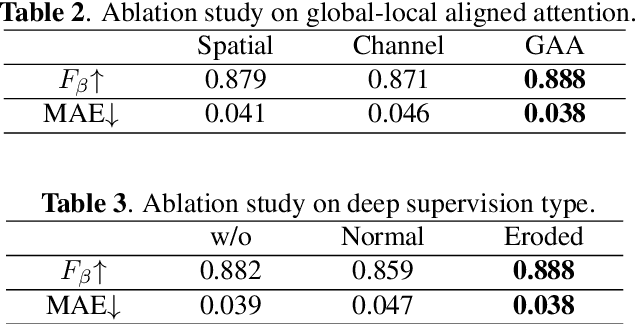

Abstract: Deep convolutional neural networks have been widely applied in salient object detection and have achieved remarkable results in this field. However, existing models suffer from information distortion caused by interpolation during up-sampling and down-sampling. In response to this drawback, this article starts from two directions in the network: feature and label. On the one hand, a novel cascaded interaction network with a guidance module named global-local aligned attention (GAA) is designed to reduce the negative impact of interpolation on the feature side. On the other hand, a deep supervision strategy based on edge erosion is proposed to reduce the negative guidance of label interpolation on lateral output. Extensive experiments on five popular datasets demonstrate the superiority of our method.
