Martin Messmer

Lightweight Multi-Frame Integration for Robust YOLO Object Detection in Videos

Jun 25, 2025

Abstract:Modern image-based object detection models, such as YOLOv7, primarily process individual frames independently, thus ignoring valuable temporal context naturally present in videos. Meanwhile, existing video-based detection methods often introduce complex temporal modules, significantly increasing model size and computational complexity. In practical applications such as surveillance and autonomous driving, transient challenges including motion blur, occlusions, and abrupt appearance changes can severely degrade single-frame detection performance. To address these issues, we propose a straightforward yet highly effective strategy: stacking multiple consecutive frames as input to a YOLO-based detector while supervising only the output corresponding to a single target frame. This approach leverages temporal information with minimal modifications to existing architectures, preserving simplicity, computational efficiency, and real-time inference capability. Extensive experiments on the challenging MOT20Det and our BOAT360 datasets demonstrate that our method improves detection robustness, especially for lightweight models, effectively narrowing the gap between compact and heavy detection networks. Additionally, we contribute the BOAT360 benchmark dataset, comprising annotated fisheye video sequences captured from a boat, to support future research in multi-frame video object detection in challenging real-world scenarios.
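The frame-stacking idea in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `stack_frames`, the choice of the last frame in the window as the target, and the clamping of indices at the start of the video are all assumptions made for illustration. Frames are plain nested lists (channels x height x width) so the example needs no third-party libraries.

```python
from typing import List, Sequence

Frame = List[List[List[float]]]  # channels x height x width


def stack_frames(video: Sequence[Frame], target: int, window: int = 3) -> Frame:
    """Concatenate `window` consecutive frames along the channel axis.

    Assumptions (not stated in the abstract): the window ends at the target
    frame, and indices before the start of the video are clamped to frame 0.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    if not 0 <= target < len(video):
        raise IndexError("target frame index out of range")
    stacked: Frame = []
    for offset in range(window - 1, -1, -1):
        index = max(target - offset, 0)  # clamp at the start of the sequence
        stacked.extend(video[index])     # append this frame's channel planes
    return stacked


# Three tiny single-channel 1x2 "frames" filled with their own frame index.
video = [[[[float(t), float(t)]]] for t in range(3)]
stacked = stack_frames(video, target=2, window=3)
# `stacked` now has 3 channels; in training, only the labels of frame 2
# (the target frame) would be used to supervise the detector output.
```

With RGB input and a window of three frames, the detector's first convolution would receive 9 input channels instead of 3, which is the only architectural change this sketch implies.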

Learning-Based Distance Estimation for 360° Single-Sensor Setups

Jun 25, 2025

Abstract:Accurate distance estimation is a fundamental challenge in robotic perception, particularly in omnidirectional imaging, where traditional geometric methods struggle with lens distortions and environmental variability. In this work, we propose a neural network-based approach for monocular distance estimation using a single 360° fisheye lens camera. Unlike classical trigonometric techniques that rely on precise lens calibration, our method directly learns and infers the distance of objects from raw omnidirectional inputs, offering greater robustness and adaptability across diverse conditions. We evaluate our approach on three 360° datasets (LOAF, ULM360, and a newly captured dataset Boat360), each representing distinct environmental and sensor setups. Our experimental results demonstrate that the proposed learning-based model outperforms traditional geometry-based methods and other learning baselines in both accuracy and robustness. These findings highlight the potential of deep learning for real-time omnidirectional distance estimation, making our approach particularly well-suited for low-cost applications in robotics, autonomous navigation, and surveillance.

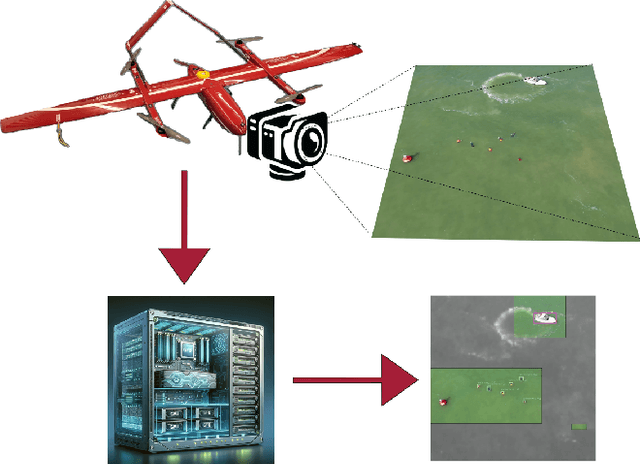

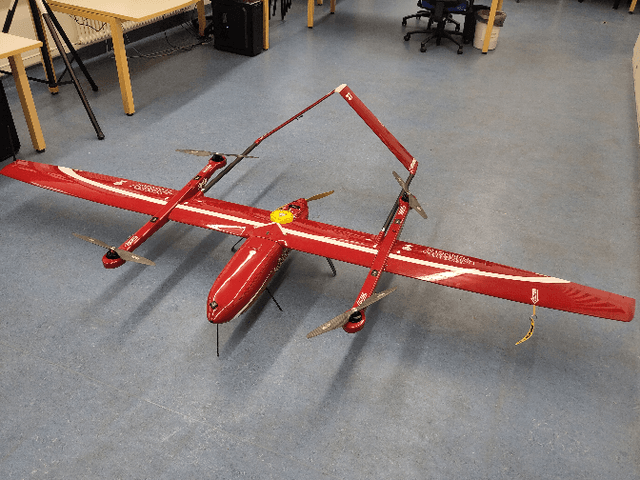

UAV-Assisted Maritime Search and Rescue: A Holistic Approach

Mar 21, 2024

Abstract:In this paper, we explore the application of Unmanned Aerial Vehicles (UAVs) in maritime search and rescue (mSAR) missions, focusing on medium-sized fixed-wing drones and quadcopters. We address the challenges and limitations inherent in operating some of the different classes of UAVs, particularly in search operations. Our research includes the development of a comprehensive software framework designed to enhance the efficiency and efficacy of SAR operations. This framework combines preliminary detection onboard UAVs with advanced object detection at ground stations, aiming to reduce visual strain and improve decision-making for operators. It will be made publicly available upon publication. We conduct experiments to evaluate various Region of Interest (RoI) proposal methods, especially by imposing simulated limited bandwidth on them, an important consideration when flying remote or offshore operations. This forces the algorithm to prioritize some predictions over others.
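To make the bandwidth constraint concrete, the following sketch shows one simple way a limited budget can force prioritization among RoI proposals. The abstract does not describe the selection rule used in the framework, so the greedy score-ordered strategy, the `RoI` class, the `select_rois` function, and the cost model of 3 bytes per pixel are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class RoI:
    """Hypothetical region-of-interest proposal with a confidence score."""
    x: int
    y: int
    width: int
    height: int
    score: float

    def cost_bytes(self, bytes_per_pixel: int = 3) -> int:
        # Assumed cost model: uncompressed crop, 3 bytes per pixel.
        return self.width * self.height * bytes_per_pixel


def select_rois(rois: Sequence[RoI], budget_bytes: int) -> List[RoI]:
    """Greedily keep the highest-scoring RoIs that still fit in the budget."""
    selected: List[RoI] = []
    remaining = budget_bytes
    for roi in sorted(rois, key=lambda r: r.score, reverse=True):
        cost = roi.cost_bytes()
        if cost <= remaining:
            selected.append(roi)
            remaining -= cost
    return selected


rois = [
    RoI(0, 0, 10, 10, score=0.9),    # 300 bytes
    RoI(5, 5, 20, 20, score=0.8),    # 1200 bytes, too large for the budget
    RoI(50, 50, 10, 10, score=0.4),  # 300 bytes
]
kept = select_rois(rois, budget_bytes=700)
```

Here the second proposal is dropped despite its high score because its crop alone exceeds the remaining budget, while the lower-scoring third proposal still fits.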

Evaluating UAV Path Planning Algorithms for Realistic Maritime Search and Rescue Missions

Feb 02, 2024

Abstract:Unmanned Aerial Vehicles (UAVs) are emerging as very important tools in search and rescue (SAR) missions at sea, enabling swift and efficient deployment for locating individuals or vessels in distress. The successful execution of these critical missions heavily relies on effective path planning algorithms that navigate UAVs through complex maritime environments while considering dynamic factors such as water currents and wind flow. Furthermore, they need to account for the uncertainty in search target locations. However, existing path planning methods often fail to address the inherent uncertainty associated with the precise location of search targets and the uncertainty of oceanic forces. In this paper, we present a framework for developing and investigating trajectory planning algorithms for maritime SAR scenarios employing UAVs. We adopt it to compare multiple planning strategies, some of them used in practical applications by the United States Coast Guard. Furthermore, we propose a novel planner that aims at bridging the gap between computation-heavy, precise algorithms and lightweight strategies applicable to real-world scenarios.

The 2nd Workshop on Maritime Computer Vision 2024

Nov 23, 2023

Abstract:The 2nd Workshop on Maritime Computer Vision (MaCVi) 2024 addresses maritime computer vision for Unmanned Aerial Vehicles (UAV) and Unmanned Surface Vehicles (USV). Three challenge categories are considered: (i) UAV-based Maritime Object Tracking with Re-identification, (ii) USV-based Maritime Obstacle Segmentation and Detection, (iii) USV-based Maritime Boat Tracking. The USV-based Maritime Obstacle Segmentation and Detection features three sub-challenges, including a new embedded challenge addressing efficient inference on real-world embedded devices. This report offers a comprehensive overview of the findings from the challenges. We provide both statistical and qualitative analyses, evaluating trends from over 195 submissions. All datasets, evaluation code, and the leaderboard are available to the public at https://macvi.org/workshop/macvi24.

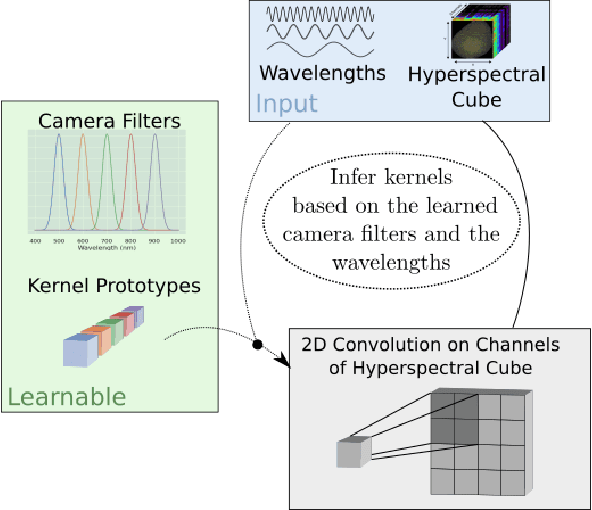

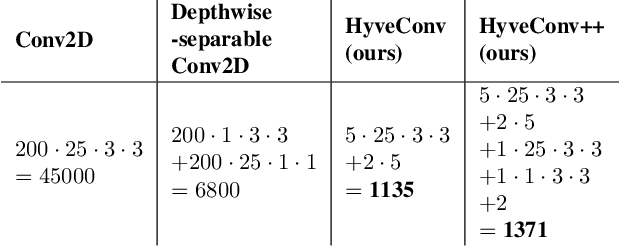

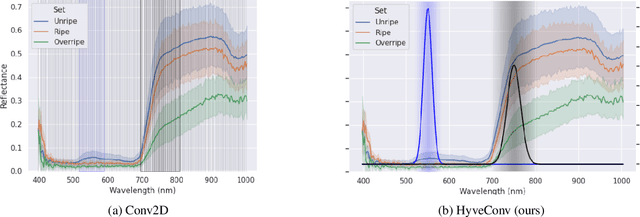

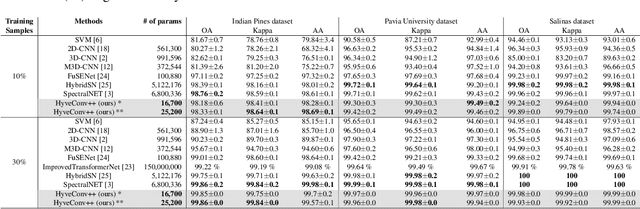

Wavelength-aware 2D Convolutions for Hyperspectral Imaging

Sep 05, 2022

Abstract:Deep Learning could drastically boost the classification accuracy for Hyperspectral Imaging (HSI). Still, the training on the mostly small hyperspectral data sets is not trivial. Two key challenges are the large channel dimension of the recordings and the incompatibility between cameras of different manufacturers. By introducing a suitable model bias and continuously defining the channel dimension, we propose a 2D convolution optimized for these challenges of Hyperspectral Imaging. We evaluate the method based on two different hyperspectral applications (inline inspection and remote sensing). Besides the shown superiority of the model, the modification adds additional explanatory power. In addition, the model learns the necessary camera filters in a data-driven manner. Based on these camera filters, an optimal camera can be designed.

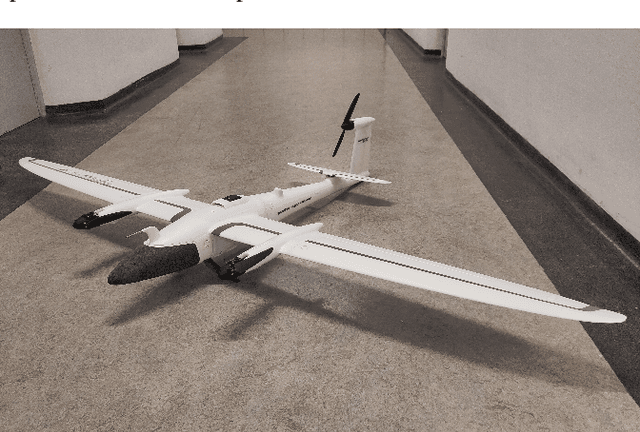

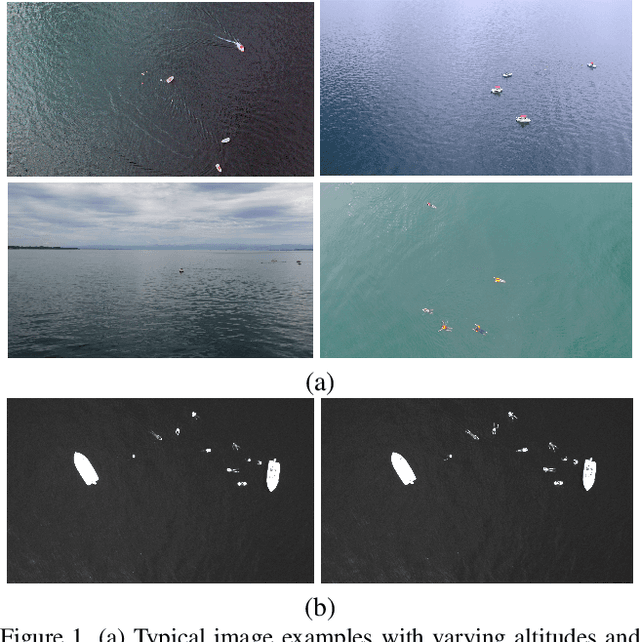

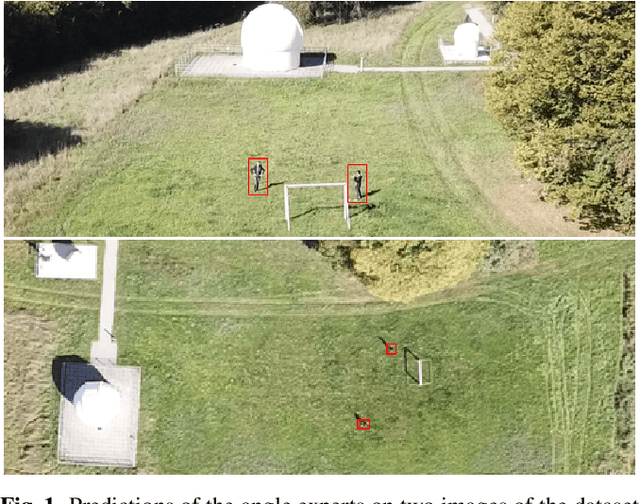

SeaDronesSee: A Maritime Benchmark for Detecting Humans in Open Water

May 05, 2021

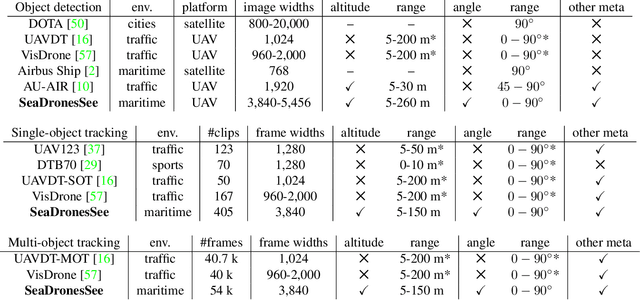

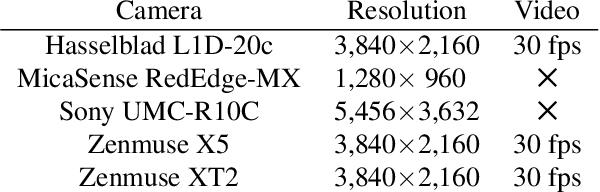

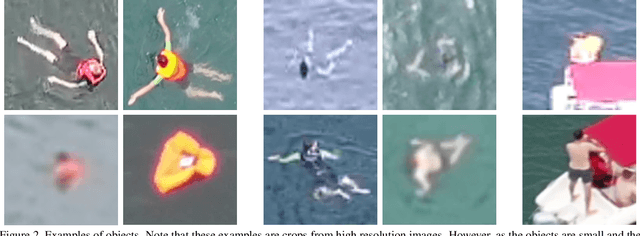

Abstract:Unmanned Aerial Vehicles (UAVs) are of crucial importance in search and rescue missions in maritime environments due to their flexible and fast operation capabilities. Modern computer vision algorithms are of great interest in aiding such missions. However, they are dependent on large amounts of real-case training data from UAVs, which is only available for traffic scenarios on land. Moreover, current object detection and tracking data sets only provide limited environmental information or none at all, neglecting a valuable source of information. Therefore, this paper introduces a large-scale visual object detection and tracking benchmark (SeaDronesSee) aiming to bridge the gap from land-based vision systems to sea-based ones. We collect and annotate over 54,000 frames with 400,000 instances captured from various altitudes and viewing angles ranging from 5 to 260 meters and 0 to 90 degrees while providing the respective meta information for altitude, viewing angle and other meta data. We evaluate multiple state-of-the-art computer vision algorithms on this newly established benchmark serving as baselines. We provide an evaluation server where researchers can upload their predictions and compare their results on a central leaderboard.

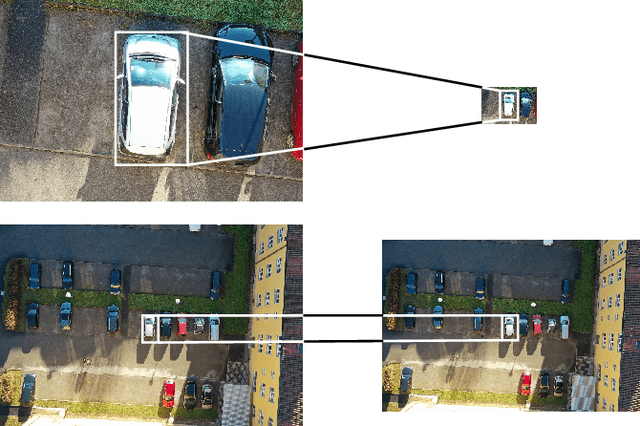

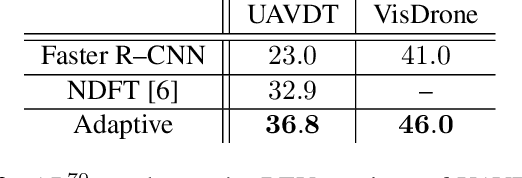

Gaining Scale Invariance in UAV Bird's Eye View Object Detection by Adaptive Resizing

Jan 29, 2021

Abstract:In this work, we introduce a new preprocessing step applicable to UAV bird's eye view imagery, which we call Adaptive Resizing. It is constructed to adjust for the vast variances in objects' scales, which are naturally inherent to UAV data sets. Furthermore, it improves inference speed by four to five times on average. We test this extensively on UAVDT, VisDrone, and on a new data set we captured ourselves. On UAVDT, we achieve more than 100 % relative improvement in AP50. Moreover, we show how this method can be applied to a general UAV object detection task. Additionally, we successfully test our method on a domain transfer task where we train on some interval of altitudes and test on a different one. Code will be made available at our website.

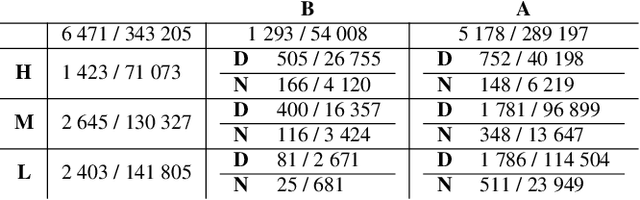

Leveraging domain labels for object detection from UAVs

Jan 29, 2021

Abstract:Object detection from Unmanned Aerial Vehicles (UAVs) is of great importance in many aerial vision-based applications. Despite the great success of generic object detection methods, a large performance drop is observed when applied to images captured by UAVs. This is due to large variations in imaging conditions, such as varying altitudes, dynamically changing viewing angles, and different capture times. We demonstrate that domain knowledge is a valuable source of information and thus propose domain-aware object detectors by using freely accessible sensor data. By splitting the model into cross-domain and domain-specific parts, substantial performance improvements are achieved on multiple datasets across multiple models and metrics. In particular, we achieve a new state-of-the-art performance on UAVDT for real-time detectors. Furthermore, we create a new airborne image dataset by annotating 13 713 objects in 2 900 images featuring precise altitude and viewing angle annotations.
