Janez Perš

Grading Handwritten Engineering Exams with Multimodal Large Language Models

Jan 02, 2026

Abstract:Handwritten STEM exams capture open-ended reasoning and diagrams, but manual grading is slow and difficult to scale. We present an end-to-end workflow for grading scanned handwritten engineering quizzes with multimodal large language models (LLMs) that preserves the standard exam process (A4 paper, unconstrained student handwriting). The lecturer provides only a handwritten reference solution (100%) and a short set of grading rules; the reference is converted into a text-only summary that conditions grading without exposing the reference scan. Reliability is achieved through a multi-stage design with a format/presence check to prevent grading blank answers, an ensemble of independent graders, supervisor aggregation, and rigid templates with deterministic validation to produce auditable, machine-parseable reports. We evaluate the frozen pipeline in a clean-room protocol on a held-out real course quiz in Slovenian, including hand-drawn circuit schematics. With state-of-the-art backends (GPT-5.2 and Gemini-3 Pro), the full pipeline achieves $\approx$8-point mean absolute difference to lecturer grades with low bias and an estimated manual-review trigger rate of $\approx$17% at $D_{\max}=40$. Ablations show that trivial prompting and removing the reference solution substantially degrade accuracy and introduce systematic over-grading, confirming that structured prompting and reference grounding are essential.
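
The evaluation quantities named in this abstract (mean absolute difference, bias, and a manual-review trigger rate) can be illustrated with a minimal sketch. The abstract does not define $D_{\max}$; the code below assumes it is a threshold on the spread between the highest and lowest ensemble grade for one answer, which may differ from the paper's definition. The function names and the sample data are hypothetical.

```python
from statistics import mean


def grading_metrics(lecturer, pipeline):
    """Return (mean absolute difference, bias) of pipeline grades vs. lecturer grades."""
    diffs = [p - l for p, l in zip(pipeline, lecturer)]
    mad = mean(abs(d) for d in diffs)  # average size of the disagreement
    bias = mean(diffs)                 # positive values indicate over-grading
    return mad, bias


def review_trigger_rate(ensemble_scores, d_max=40):
    """Fraction of answers whose grader spread exceeds d_max.

    ASSUMPTION: d_max is read as a max-min disagreement threshold
    across the independent graders; the abstract does not specify this.
    """
    flagged = sum(1 for scores in ensemble_scores if max(scores) - min(scores) > d_max)
    return flagged / len(ensemble_scores)


# Hypothetical grades on a 0-100 scale, for illustration only.
lecturer = [80, 60, 100]
pipeline = [90, 50, 100]
mad, bias = grading_metrics(lecturer, pipeline)
rate = review_trigger_rate([[80, 85, 90], [20, 70, 60], [100, 100, 100]])
```

Reporting bias alongside the mean absolute difference matters because the two can diverge: errors that cancel on average give a low bias while individual grades are still off.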

MULTIAQUA: A multimodal maritime dataset and robust training strategies for multimodal semantic segmentation

Dec 19, 2025

Abstract:Unmanned surface vehicles can encounter a number of varied visual circumstances during operation, some of which can be very difficult to interpret. While most cases can be solved using only color camera images, some weather and lighting conditions require additional information. To expand the available maritime data, we present a novel multimodal maritime dataset MULTIAQUA (Multimodal Aquatic Dataset). Our dataset contains synchronized, calibrated and annotated data captured by sensors of different modalities, such as RGB, thermal, IR, LIDAR, etc. The dataset is aimed at developing supervised methods that can extract useful information from these modalities in order to provide a high quality of scene interpretation regardless of potentially poor visibility conditions. To illustrate the benefits of the proposed dataset, we evaluate several multimodal methods on our difficult nighttime test set. We present training approaches that enable multimodal methods to be trained in a more robust way, thus enabling them to retain reliable performance even in near-complete darkness. Our approach allows for training a robust deep neural network using only daytime images, thus significantly simplifying data acquisition, annotation, and the training process.

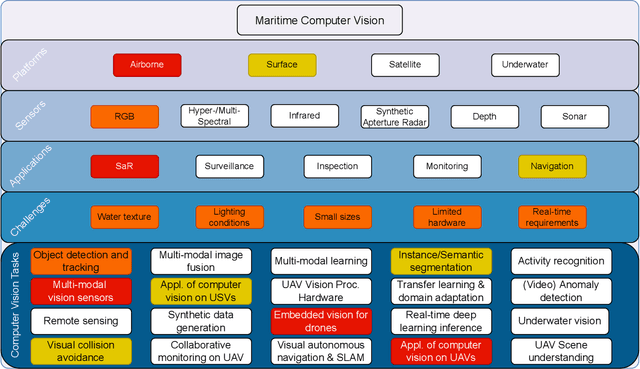

3rd Workshop on Maritime Computer Vision (MaCVi) 2025: Challenge Results

Jan 17, 2025

Abstract:The 3rd Workshop on Maritime Computer Vision (MaCVi) 2025 addresses maritime computer vision for Unmanned Surface Vehicles (USV) and underwater. This report offers a comprehensive overview of the findings from the challenges. We provide both statistical and qualitative analyses, evaluating trends from over 700 submissions. All datasets, evaluation code, and the leaderboard are available to the public at https://macvi.org/workshop/macvi25.

The 2nd Workshop on Maritime Computer Vision 2024

Nov 23, 2023

Abstract:The 2nd Workshop on Maritime Computer Vision (MaCVi) 2024 addresses maritime computer vision for Unmanned Aerial Vehicles (UAV) and Unmanned Surface Vehicles (USV). Three challenge categories are considered: (i) UAV-based Maritime Object Tracking with Re-identification, (ii) USV-based Maritime Obstacle Segmentation and Detection, (iii) USV-based Maritime Boat Tracking. The USV-based Maritime Obstacle Segmentation and Detection category features three sub-challenges, including a new embedded challenge addressing efficient inference on real-world embedded devices. This report offers a comprehensive overview of the findings from the challenges. We provide both statistical and qualitative analyses, evaluating trends from over 195 submissions. All datasets, evaluation code, and the leaderboard are available to the public at https://macvi.org/workshop/macvi24.

LaRS: A Diverse Panoptic Maritime Obstacle Detection Dataset and Benchmark

Aug 18, 2023

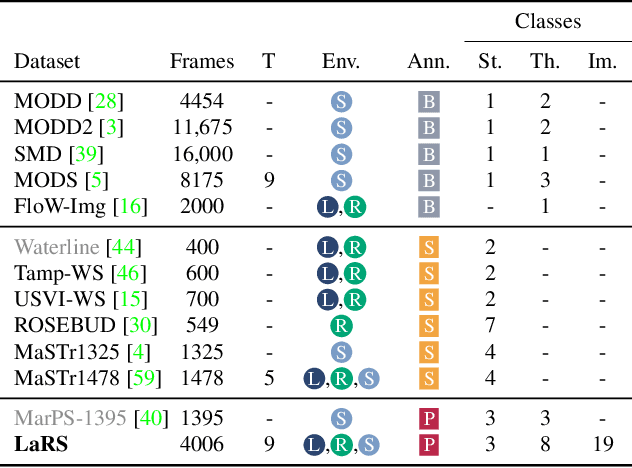

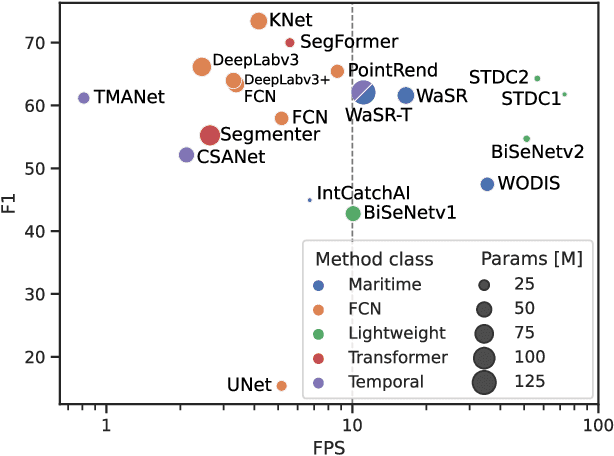

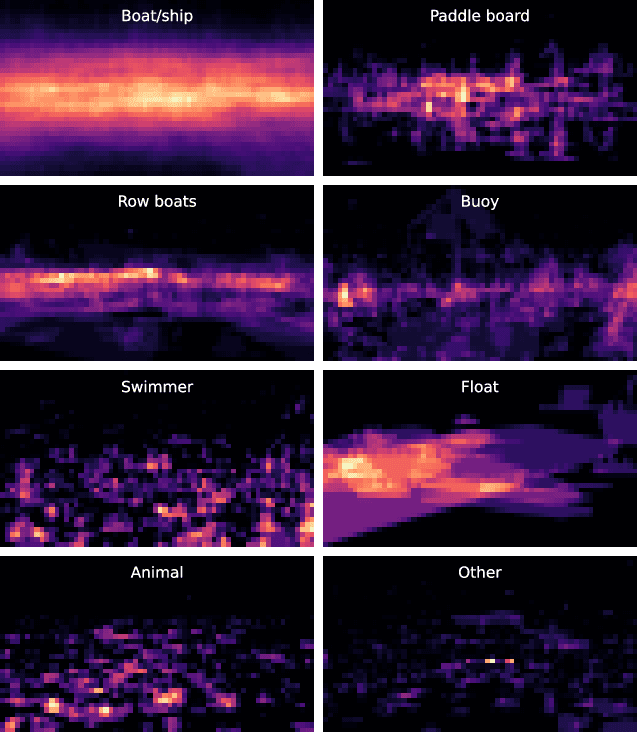

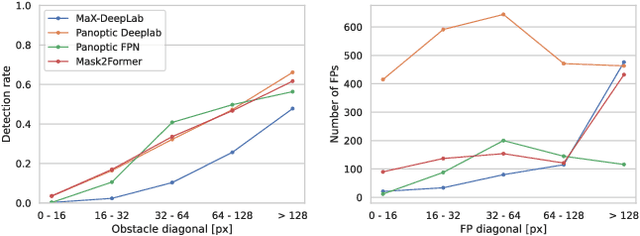

Abstract:The progress in maritime obstacle detection is hindered by the lack of a diverse dataset that adequately captures the complexity of general maritime environments. We present the first maritime panoptic obstacle detection benchmark LaRS, featuring scenes from Lakes, Rivers and Seas. Our major contribution is the new dataset, which boasts the largest diversity in recording locations, scene types, obstacle classes, and acquisition conditions among the related datasets. LaRS is composed of over 4000 per-pixel labeled key frames with nine preceding frames to allow utilization of the temporal texture, amounting to over 40k frames. Each key frame is annotated with 8 thing, 3 stuff classes and 19 global scene attributes. We report the results of 27 semantic and panoptic segmentation methods, along with several performance insights and future research directions. To enable objective evaluation, we have implemented an online evaluation server. The LaRS dataset, evaluation toolkit and benchmark are publicly available at: https://lojzezust.github.io/lars-dataset

1st Workshop on Maritime Computer Vision 2023: Challenge Results

Nov 28, 2022

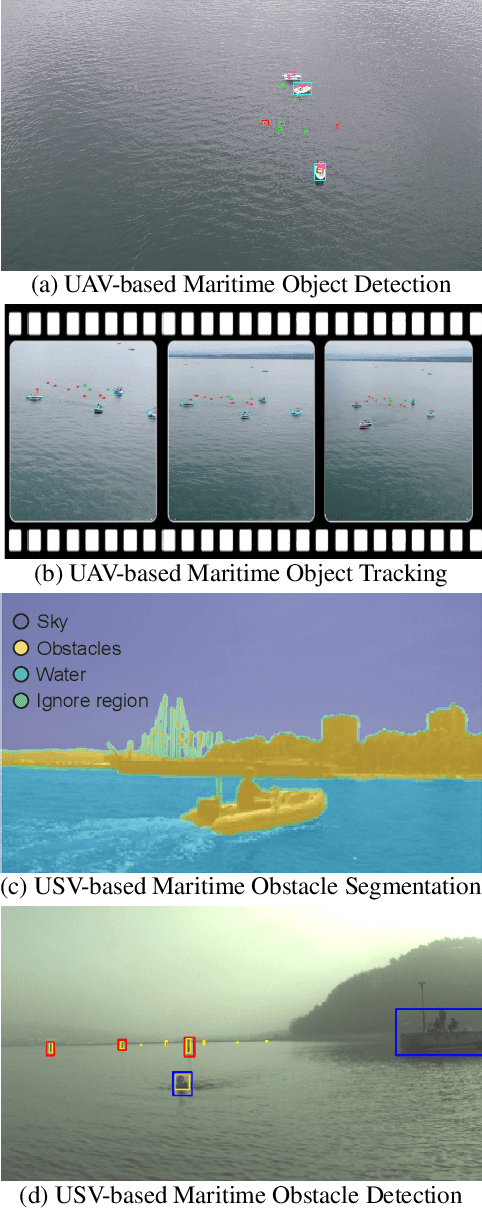

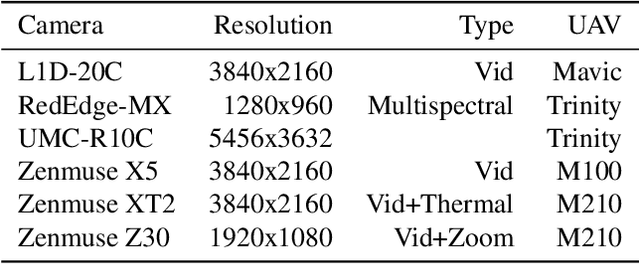

Abstract:The 1$^{\text{st}}$ Workshop on Maritime Computer Vision (MaCVi) 2023 focused on maritime computer vision for Unmanned Aerial Vehicles (UAV) and Unmanned Surface Vehicles (USV), and organized several subchallenges in this domain: (i) UAV-based Maritime Object Detection, (ii) UAV-based Maritime Object Tracking, (iii) USV-based Maritime Obstacle Segmentation and (iv) USV-based Maritime Obstacle Detection. The subchallenges were based on the SeaDronesSee and MODS benchmarks. This report summarizes the main findings of the individual subchallenges and introduces a new benchmark, called SeaDronesSee Object Detection v2, which extends the previous benchmark by including more classes and footage. We provide statistical and qualitative analyses, and assess trends in the best-performing methodologies of over 130 submissions. The methods are summarized in the appendix. The datasets, evaluation code and the leaderboard are publicly available at https://seadronessee.cs.uni-tuebingen.de/macvi.

MODS -- A USV-oriented object detection and obstacle segmentation benchmark

May 05, 2021

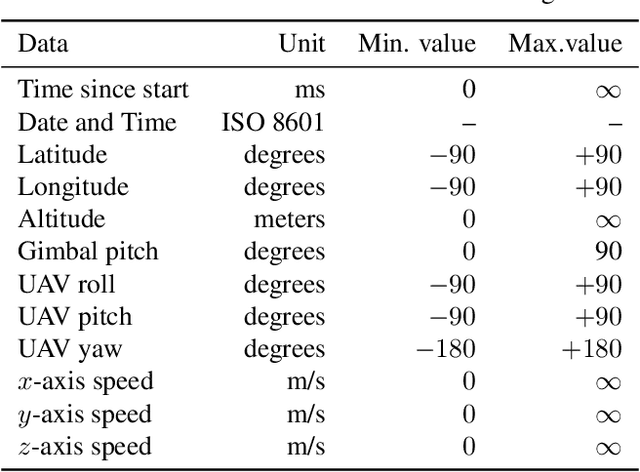

Abstract:Small-sized unmanned surface vehicles (USV) are coastal water devices with a broad range of applications such as environmental control and surveillance. A crucial capability for autonomous operation is obstacle detection for timely reaction and collision avoidance, which has been recently explored in the context of camera-based visual scene interpretation. Owing to curated datasets, substantial advances in scene interpretation have been made in the related field of unmanned ground vehicles. However, the current maritime datasets do not adequately capture the complexity of real-world USV scenes and the evaluation protocols are not standardised, which makes cross-paper comparison of different methods difficult and hinders progress. To address these issues, we introduce a new obstacle detection benchmark MODS, which considers two major perception tasks: maritime object detection and the more general maritime obstacle segmentation. We present a new diverse maritime evaluation dataset containing approximately 81k stereo images synchronized with an on-board IMU, with over 60k objects annotated. We propose a new obstacle segmentation performance evaluation protocol that reflects the detection accuracy in a way meaningful for practical USV navigation. Seventeen recent state-of-the-art object detection and obstacle segmentation methods are evaluated using the proposed protocol, creating a benchmark to facilitate development of the field.

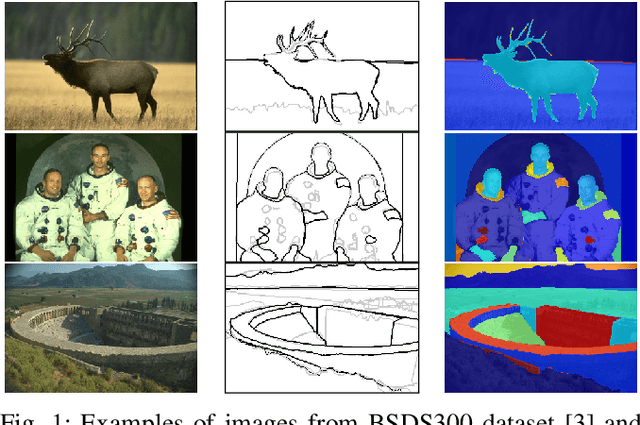

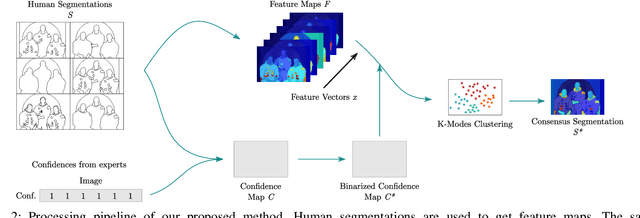

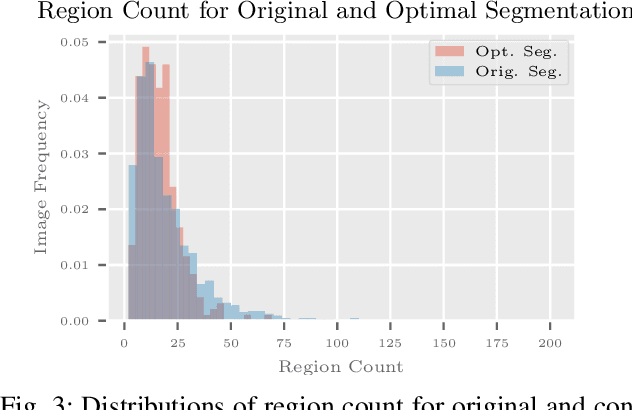

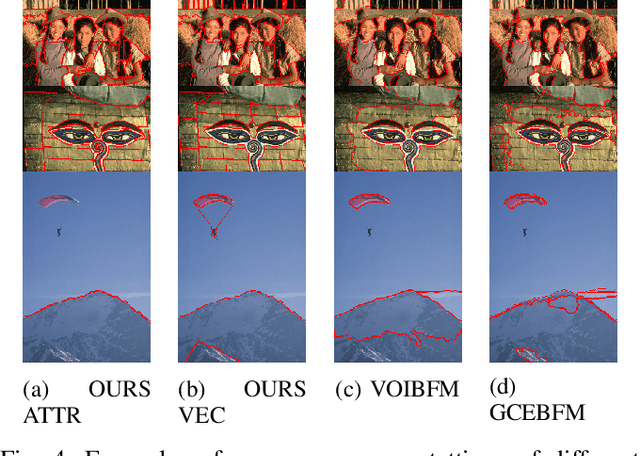

Human-Centered Unsupervised Segmentation Fusion

Jul 22, 2020

Abstract:Segmentation is generally an ill-posed problem since it admits multiple solutions, which makes it hard to define ground truth data for evaluating algorithms. The problem can be naively circumvented by using only one annotator per image, but such an acquisition doesn't represent the cognitive perception of an image by the majority of people. Nowadays, it is not difficult to obtain multiple segmentations with crowdsourcing, so the only problem that remains is how to get one ground truth segmentation per image. There already exist numerous algorithmic solutions, but most methods are supervised or don't consider confidence per human segmentation. In this paper, we introduce a new segmentation fusion model that is based on K-Modes clustering. Results obtained from publicly available datasets with human ground truth segmentations clearly show that our model outperforms the state-of-the-art on human segmentations.

Correcting Decalibration of Stereo Cameras in Self-Driving Vehicles

Jan 15, 2020

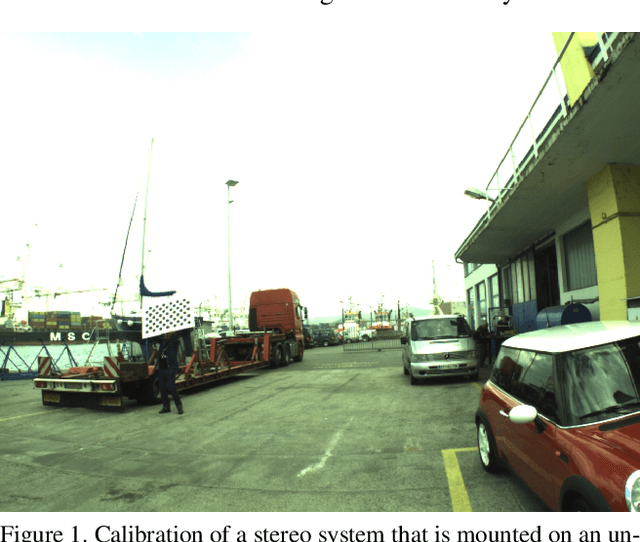

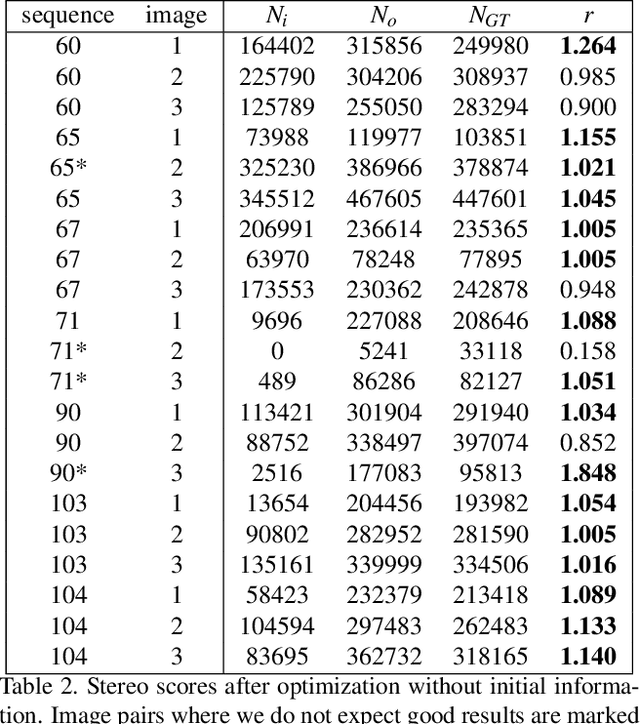

Abstract:We address the problem of optical decalibration in mobile stereo camera setups, especially in the context of autonomous vehicles. In real-world conditions, an optical system is subject to various sources of anticipated and unanticipated mechanical stress (vibration, rough handling, collisions). Mechanical stress changes the geometry between the cameras that make up the stereo pair, and as a consequence, the pre-calculated epipolar geometry is no longer valid. Our method is based on optimization of camera geometry parameters and plugs directly into the output of the stereo matching algorithm. Therefore, it is able to recover calibration parameters on image pairs obtained from a decalibrated stereo system with minimal use of additional computing resources. The number of successfully recovered depth pixels is used as an objective function, which we aim to maximize. Our simulation confirms that the method can run constantly in parallel to stereo estimation and thus help keep the system calibrated in real time. Results confirm that the method is able to recalibrate all the parameters except for the baseline distance, which scales the absolute depth readings. However, that scaling factor could be uniquely determined using any kind of absolute range-finding method (e.g. a single beam time-of-flight sensor).
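
The idea of maximizing a count of valid depth pixels over camera geometry parameters can be sketched with a generic derivative-free search. The abstract does not name the optimizer, so the coordinate search below is a stand-in chosen for illustration, not the authors' method. The `objective` callback is hypothetical: in a real setting it would rectify the image pair with the candidate parameters, run stereo matching, and return the number of recovered depth pixels. Here a smooth toy function takes its place.

```python
def coordinate_search(objective, params, step=1.0, min_step=1e-3):
    """Maximize a black-box objective by perturbing one parameter at a time.

    ASSUMPTION: a simple coordinate search stands in for whatever
    optimizer the paper actually uses.
    """
    best = list(params)
    best_val = objective(best)
    while step > min_step:
        improved = False
        for i in range(len(best)):
            for delta in (step, -step):
                cand = list(best)
                cand[i] += delta
                val = objective(cand)
                if val > best_val:  # accept only strict improvements
                    best, best_val, improved = cand, val, True
        if not improved:
            step /= 2  # refine the search once no neighbour is better
    return best, best_val


# Toy stand-in for "number of valid depth pixels", peaking at (0.5, -0.25).
def toy_objective(p):
    return -((p[0] - 0.5) ** 2 + (p[1] + 0.25) ** 2)


best_params, best_value = coordinate_search(toy_objective, [0.0, 0.0])
```

A derivative-free search fits this kind of objective because a pixel count is piecewise constant in the parameters, so gradients carry little information.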

Stereo obstacle detection for unmanned surface vehicles by IMU-assisted semantic segmentation

Feb 22, 2018

Abstract:A new obstacle detection algorithm for unmanned surface vehicles (USVs) is presented. A state-of-the-art graphical model for semantic segmentation is extended to incorporate boat pitch and roll measurements from the on-board inertial measurement unit (IMU), and a stereo verification algorithm that consolidates tentative detections obtained from the segmentation is proposed. The IMU readings are used to estimate the location of the horizon line in the image, which automatically adjusts the priors in the probabilistic semantic segmentation model. We derive the equations for projecting the horizon into images, propose an efficient optimization algorithm for the extended graphical model, and offer a practical IMU-camera-USV calibration procedure. Using a USV equipped with multiple synchronized sensors, we captured a new challenging multi-modal dataset, and annotated its images with water edge and obstacles. Experimental results show that the proposed algorithm significantly outperforms the state of the art, with nearly 30% improvement in water-edge detection accuracy, an over 21% reduction of false positive rate, an almost 60% reduction of false negative rate, and an over 65% increase of true positive rate, while its Matlab implementation runs in real-time.
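
How pitch and roll readings place the horizon in an image can be illustrated with a simplified pinhole-camera sketch. This is not the derivation from the paper: it assumes an ideal, distortion-free camera aligned with the IMU, a horizon at infinity, image rows increasing downward, and one particular sign convention for pitch and roll. The function name and all parameters are hypothetical.

```python
import math


def horizon_y(x, pitch, roll, f, cx, cy):
    """Image row (pixels) of the horizon at image column x.

    ASSUMPTIONS: pinhole camera with focal length f in pixels and
    principal point (cx, cy); pitch > 0 means the camera looks upward
    (horizon moves down in the image); roll rotates the line about
    the principal point. Angles are in radians.
    """
    offset = f * math.tan(pitch)  # distance of the line from the principal point
    return cy + (offset + (x - cx) * math.sin(roll)) / math.cos(roll)


# Level camera: the horizon passes through the principal point.
y_level = horizon_y(320, 0.0, 0.0, f=1000, cx=320, cy=240)
# Camera pitched up so that tan(pitch) = 0.1: horizon shifts by 0.1 * f rows.
y_pitched = horizon_y(320, math.atan(0.1), 0.0, f=1000, cx=320, cy=240)
```

With zero pitch and a 45-degree roll, the line has unit slope, so a point 100 columns right of the principal point lies 100 rows below it under this convention.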
