Fabio Poiesi

OpenHype: Hyperbolic Embeddings for Hierarchical Open-Vocabulary Radiance Fields

Oct 24, 2025

Abstract: Modeling the inherent hierarchical structure of 3D objects and 3D scenes is highly desirable, as it enables a more holistic understanding of environments for autonomous agents. Accomplishing this with implicit representations, such as Neural Radiance Fields, remains an unexplored challenge. Existing methods that explicitly model hierarchical structures often face significant limitations: they either require multiple rendering passes to capture embeddings at different levels of granularity, significantly increasing inference time, or rely on predefined, closed-set discrete hierarchies that generalize poorly to the diverse and nuanced structures encountered by agents in the real world. To address these challenges, we propose OpenHype, a novel approach that represents scene hierarchies using a continuous hyperbolic latent space. By leveraging the properties of hyperbolic geometry, OpenHype naturally encodes multi-scale relationships and enables smooth traversal of hierarchies through geodesic paths in latent space. Our method outperforms state-of-the-art approaches on standard benchmarks, demonstrating superior efficiency and adaptability in 3D scene understanding.
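To make the hyperbolic-geometry intuition concrete, the sketch below computes geodesic distance in the Poincaré ball, one common model of hyperbolic space. The abstract does not say which model OpenHype uses, so the choice of the Poincaré ball and the function name `poincare_distance` are assumptions made purely for illustration.

```python
import math

def poincare_distance(u, v):
    """Geodesic distance between two points strictly inside the unit Poincare ball.

    Illustrative only: the Poincare ball is an assumed model, not necessarily
    the one used by OpenHype.
    """
    sq_u = sum(x * x for x in u)
    sq_v = sum(x * x for x in v)
    if sq_u >= 1.0 or sq_v >= 1.0:
        raise ValueError("points must lie strictly inside the unit ball")
    sq_diff = sum((a - b) ** 2 for a, b in zip(u, v))
    # d(u, v) = arcosh(1 + 2 * |u - v|^2 / ((1 - |u|^2) * (1 - |v|^2)))
    arg = 1.0 + 2.0 * sq_diff / ((1.0 - sq_u) * (1.0 - sq_v))
    return math.acosh(arg)

# The same Euclidean gap (0.1) is a much longer geodesic near the boundary
# than near the origin, which leaves room for fine-grained levels of a hierarchy.
near_origin = poincare_distance([0.0, 0.0], [0.1, 0.0])
near_boundary = poincare_distance([0.8, 0.0], [0.9, 0.0])
```

In this model, distances grow rapidly towards the boundary of the ball, which is why tree-like structures are often embedded with coarse concepts near the origin and fine-grained ones further out.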

GRASPLAT: Enabling dexterous grasping through novel view synthesis

Oct 22, 2025

Abstract: Achieving dexterous robotic grasping with multi-fingered hands remains a significant challenge. While existing methods rely on complete 3D scans to predict grasp poses, these approaches face limitations due to the difficulty of acquiring high-quality 3D data in real-world scenarios. In this paper, we introduce GRASPLAT, a novel grasping framework that leverages consistent 3D information while being trained solely on RGB images. Our key insight is that by synthesizing physically plausible images of a hand grasping an object, we can regress the corresponding hand joints for a successful grasp. To achieve this, we utilize 3D Gaussian Splatting to generate high-fidelity novel views of real hand-object interactions, enabling end-to-end training with RGB data. Unlike prior methods, our approach incorporates a photometric loss that refines grasp predictions by minimizing discrepancies between rendered and real images. We conduct extensive experiments on both synthetic and real-world grasping datasets, demonstrating that GRASPLAT improves grasp success rates by up to 36.9% over existing image-based methods. Project page: https://mbortolon97.github.io/grasplat/

Masked Clustering Prediction for Unsupervised Point Cloud Pre-training

Aug 12, 2025

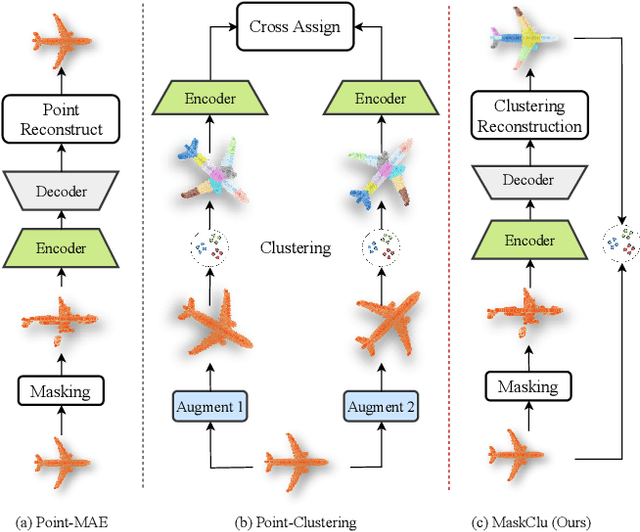

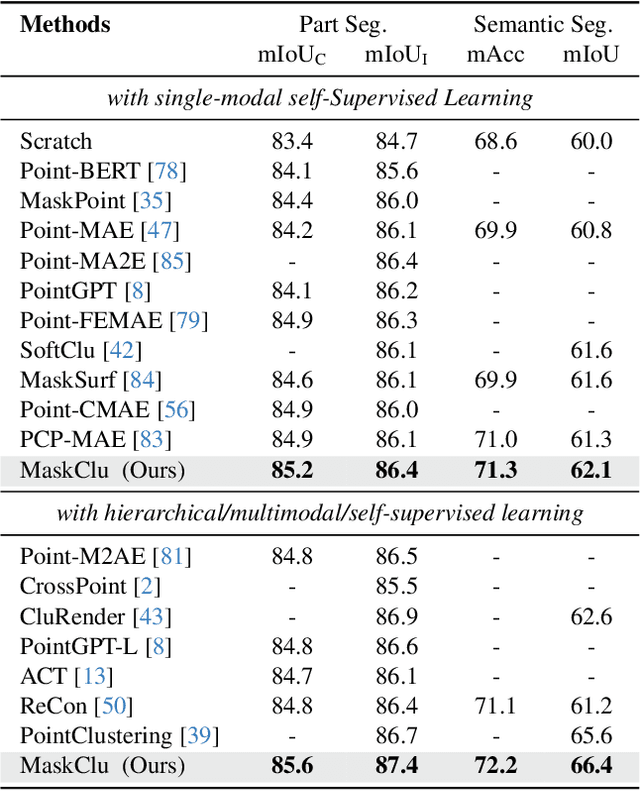

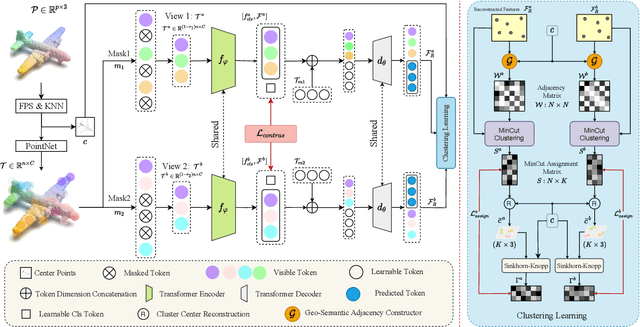

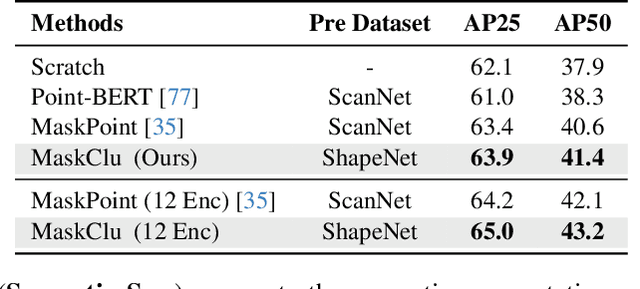

Abstract: Vision transformers (ViTs) have recently been widely applied to 3D point cloud understanding, with masked autoencoding as the predominant pre-training paradigm. However, the challenge of learning dense and informative semantic features from point clouds via standard ViTs remains underexplored. We propose MaskClu, a novel unsupervised pre-training method for ViTs on 3D point clouds that integrates masked point modeling with clustering-based learning. MaskClu is designed to reconstruct both cluster assignments and cluster centers from masked point clouds, thus encouraging the model to capture dense semantic information. Additionally, we introduce a global contrastive learning mechanism that enhances instance-level feature learning by contrasting different masked views of the same point cloud. By jointly optimizing these complementary objectives, i.e., dense semantic reconstruction and instance-level contrastive learning, MaskClu enables ViTs to learn richer and more semantically meaningful representations from 3D point clouds. We validate the effectiveness of our method via multiple 3D tasks, including part segmentation, semantic segmentation, object detection, and classification, where MaskClu sets new competitive results. The code and models will be released at: https://github.com/Amazingren/maskclu.

AI-driven visual monitoring of industrial assembly tasks

Jun 18, 2025

Abstract: Visual monitoring of industrial assembly tasks is critical for preventing equipment damage due to procedural errors and ensuring worker safety. Although commercial solutions exist, they typically require rigid workspace setups or the application of visual markers to simplify the problem. We introduce ViMAT, a novel AI-driven system for real-time visual monitoring of assembly tasks that operates without these constraints. ViMAT combines a perception module that extracts visual observations from multi-view video streams with a reasoning module that infers the most likely action being performed based on the observed assembly state and prior task knowledge. We validate ViMAT on two assembly tasks, involving the replacement of LEGO components and the reconfiguration of hydraulic press molds, demonstrating its effectiveness through quantitative and qualitative analysis in challenging real-world scenarios characterized by partial and uncertain visual observations. Project page: https://tev-fbk.github.io/ViMAT

Accurate and efficient zero-shot 6D pose estimation with frozen foundation models

Jun 11, 2025

Abstract: Estimating the 6D pose of objects from RGBD data is a fundamental problem in computer vision, with applications in robotics and augmented reality. A key challenge is achieving generalization to novel objects that were not seen during training. Most existing approaches address this by scaling up training on synthetic data tailored to the task, a process that demands substantial computational resources. But is task-specific training really necessary for accurate and efficient 6D pose estimation of novel objects? To show that the answer is no, we introduce FreeZeV2, the second generation of FreeZe: a training-free method that achieves strong generalization to unseen objects by leveraging geometric and vision foundation models pre-trained on unrelated data. FreeZeV2 improves both accuracy and efficiency over FreeZe through three key contributions: (i) a sparse feature extraction strategy that reduces inference-time computation without sacrificing accuracy; (ii) a feature-aware scoring mechanism that improves both pose selection during RANSAC-based 3D registration and the final ranking of pose candidates; and (iii) a modular design that supports ensembles of instance segmentation models, increasing robustness to segmentation mask errors. We evaluate FreeZeV2 on the seven core datasets of the BOP Benchmark, where it establishes a new state-of-the-art in 6D pose estimation of unseen objects. When using the same segmentation masks, FreeZeV2 achieves a remarkable 8x speedup over FreeZe while also improving accuracy by 5%. When using ensembles of segmentation models, FreeZeV2 gains an additional 8% in accuracy while still running 2.5x faster than FreeZe. FreeZeV2 was awarded Best Overall Method at the BOP Challenge 2024.

CHIP: A multi-sensor dataset for 6D pose estimation of chairs in industrial settings

Jun 11, 2025

Abstract: Accurate 6D pose estimation of complex objects in 3D environments is essential for effective robotic manipulation. Yet, existing benchmarks fall short in evaluating 6D pose estimation methods under realistic industrial conditions, as most datasets focus on household objects in domestic settings, while the few available industrial datasets are limited to artificial setups with objects placed on tables. To bridge this gap, we introduce CHIP, the first dataset designed for 6D pose estimation of chairs manipulated by a robotic arm in a real-world industrial environment. CHIP includes seven distinct chairs captured using three different RGBD sensing technologies and presents unique challenges, such as distractor objects with fine-grained differences and severe occlusions caused by the robotic arm and human operators. CHIP comprises 77,811 RGBD images annotated with ground-truth 6D poses automatically derived from the robot's kinematics, averaging 11,115 annotations per chair. We benchmark CHIP using three zero-shot 6D pose estimation methods, assessing performance across different sensor types, localization priors, and occlusion levels. Results show substantial room for improvement, highlighting the unique challenges posed by the dataset. CHIP will be publicly released.

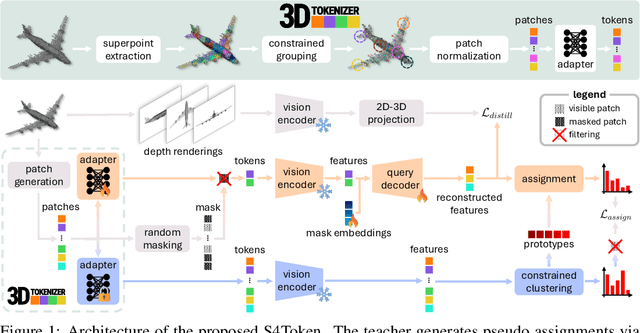

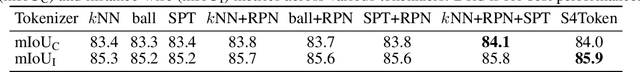

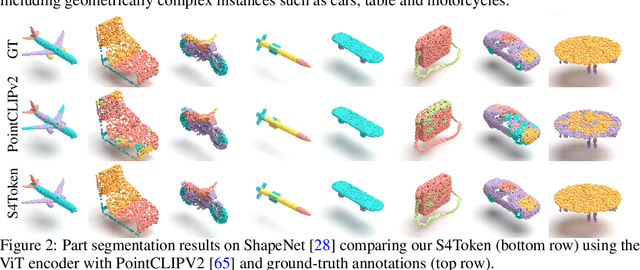

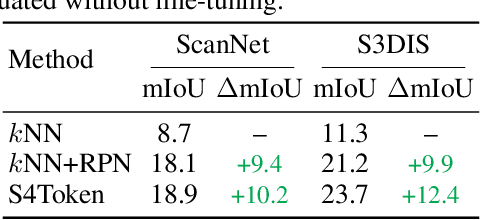

Self-Supervised and Generalizable Tokenization for CLIP-Based 3D Understanding

May 24, 2025

Abstract: Vision-language models like CLIP can offer a promising foundation for 3D scene understanding when extended with 3D tokenizers. However, standard approaches, such as k-nearest neighbor or radius-based tokenization, struggle with cross-domain generalization due to sensitivity to dataset-specific spatial scales. We present a universal 3D tokenizer designed for scale-invariant representation learning with a frozen CLIP backbone. Through extensive experimental analysis, we show that combining superpoint-based grouping with coordinate scale normalization consistently outperforms conventional methods. Specifically, we introduce S4Token, a tokenization pipeline that produces semantically-informed tokens regardless of scene scale. Our tokenizer is trained without annotations using masked point modeling and clustering-based objectives, along with cross-modal distillation to align 3D tokens with 2D multi-view image features. For dense prediction tasks, we propose a superpoint-level feature propagation module to recover point-level detail from sparse tokens.
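As a rough illustration of why coordinate scale normalization helps with cross-domain generalization, the sketch below centers a group of 3D points and rescales it to unit radius, so that the same shape yields the same coordinates whether it was captured in meters or centimeters. The abstract does not give the exact normalization used by S4Token; the function `normalize_scale` and the centroid and max-radius choices are assumptions.

```python
import math

def normalize_scale(points):
    """Center a group of 3D points and rescale it to unit radius.

    Hypothetical helper: the exact normalization used by S4Token is not
    specified in the abstract.
    """
    n = len(points)
    centroid = [sum(p[i] for p in points) / n for i in range(3)]
    centered = [[p[i] - centroid[i] for i in range(3)] for p in points]
    # Largest distance from the centroid defines the group's scale.
    radius = max(math.sqrt(sum(c * c for c in p)) for p in centered)
    if radius == 0.0:
        return centered  # all points coincide; nothing to rescale
    return [[c / radius for c in p] for p in centered]

group = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 2.0, 0.0]]
# The same shape expressed in different units (scaled by 100 here).
group_scaled = [[100.0 * c for c in p] for p in group]

normalized = normalize_scale(group)
normalized_scaled = normalize_scale(group_scaled)
```

After normalization the two groups agree up to floating-point error, which is the property a scale-invariant tokenizer relies on.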

The RaspGrade Dataset: Towards Automatic Raspberry Ripeness Grading with Deep Learning

May 14, 2025

Abstract: This research investigates the application of computer vision for rapid, accurate, and non-invasive food quality assessment, focusing on the novel challenge of real-time raspberry grading into five distinct classes within an industrial environment as the fruits move along a conveyor belt. To address this, a dedicated dataset of raspberries, namely RaspGrade, was acquired and meticulously annotated. Instance segmentation experiments revealed that accurate fruit-level masks can be obtained; however, the classification of certain raspberry grades presents challenges due to color similarities and occlusion, while others are more readily distinguishable based on color. The acquired and annotated RaspGrade dataset is accessible on Hugging Face at: https://huggingface.co/datasets/FBK-TeV/RaspGrade.

Distilling 3D distinctive local descriptors for 6D pose estimation

Mar 20, 2025

Abstract: Three-dimensional local descriptors are crucial for encoding geometric surface properties, making them essential for various point cloud understanding tasks. Among these descriptors, GeDi has demonstrated strong zero-shot 6D pose estimation capabilities but remains computationally impractical for real-world applications due to its expensive inference process. Can we retain GeDi's effectiveness while significantly improving its efficiency? In this paper, we explore this question by introducing a knowledge distillation framework that trains an efficient student model to regress local descriptors from a GeDi teacher. Our key contributions include: an efficient large-scale training procedure that ensures robustness to occlusions and partial observations while operating under compute and storage constraints, and a novel loss formulation that handles weak supervision from non-distinctive teacher descriptors. We validate our approach on five BOP Benchmark datasets and demonstrate a significant reduction in inference time while maintaining competitive performance with existing methods, bringing zero-shot 6D pose estimation closer to real-time feasibility. Project Website: https://tev-fbk.github.io/dGeDi/

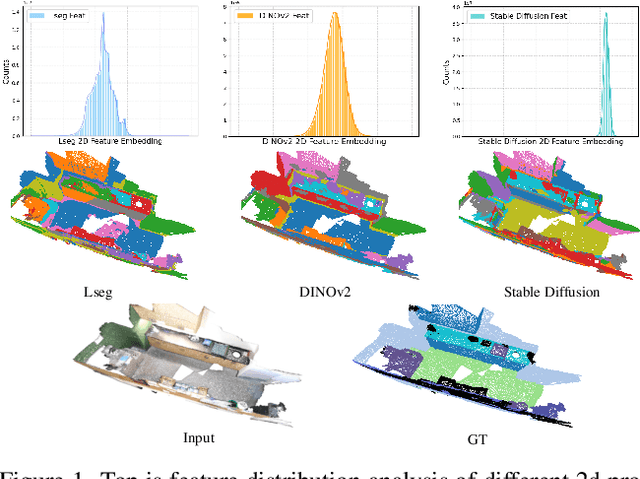

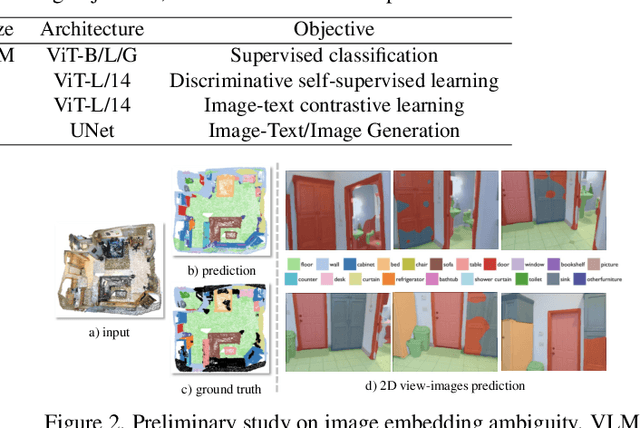

Cross-Modal and Uncertainty-Aware Agglomeration for Open-Vocabulary 3D Scene Understanding

Mar 20, 2025

Abstract: The lack of a large-scale 3D-text corpus has led recent works to distill open-vocabulary knowledge from vision-language models (VLMs). However, these methods typically rely on a single VLM to align the feature spaces of 3D models within a common language space, which limits the potential of 3D models to leverage the diverse spatial and semantic capabilities encapsulated in various foundation models. In this paper, we propose Cross-modal and Uncertainty-aware Agglomeration for Open-vocabulary 3D Scene Understanding, dubbed CUA-O3D, the first model to integrate multiple foundation models, such as CLIP, DINOv2, and Stable Diffusion, into 3D scene understanding. We further introduce a deterministic uncertainty estimation to adaptively distill and harmonize the heterogeneous 2D feature embeddings from these models. Our method addresses two key challenges: (1) incorporating semantic priors from VLMs alongside the geometric knowledge of spatially-aware vision foundation models, and (2) using a novel deterministic uncertainty estimation to capture model-specific uncertainties across diverse semantic and geometric sensitivities, helping to reconcile heterogeneous representations during training. Extensive experiments on ScanNetV2 and Matterport3D demonstrate that our method not only advances open-vocabulary segmentation but also achieves robust cross-domain alignment and competitive spatial perception capabilities. The code will be available at https://github.com/TyroneLi/CUA_O3D.
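One generic way to weight several distillation targets by uncertainty is the learned log-variance formulation from multi-task learning, sketched below. This is not CUA-O3D's formulation, which the abstract does not specify; the function `uncertainty_weighted_loss` and its inputs are hypothetical and only show how a higher predicted uncertainty can down-weight a noisy teacher.

```python
import math

def uncertainty_weighted_loss(errors, log_variances):
    """Sum per-teacher distillation errors, each scaled by exp(-s) plus a penalty s.

    Illustrative only: a standard log-variance weighting, not the method
    described in the abstract.
    """
    total = 0.0
    for err, s in zip(errors, log_variances):
        # exp(-s) shrinks the error term for uncertain teachers;
        # the "+ s" term keeps s from growing without bound.
        total += math.exp(-s) * err + s
    return total

# Two hypothetical teachers: the second has a larger raw error.
equal_trust = uncertainty_weighted_loss([1.0, 4.0], [0.0, 0.0])
less_trust_in_second = uncertainty_weighted_loss([1.0, 4.0], [0.0, 1.0])
```

With all log-variances at zero, the loss reduces to a plain sum of the errors; raising the log-variance of the noisier teacher lowers its contribution.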
