Jinlong Li

University of Science and Technology of China

RADAR: Accelerating Large Language Model Inference With RL-Based Dynamic Draft Trees

Dec 16, 2025

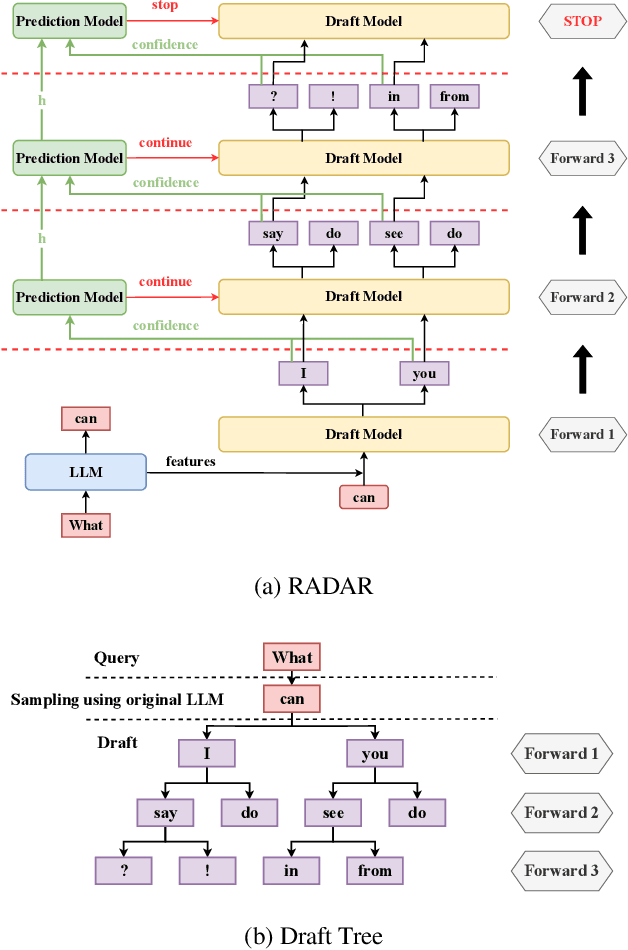

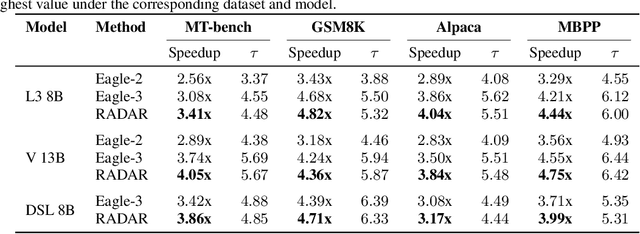

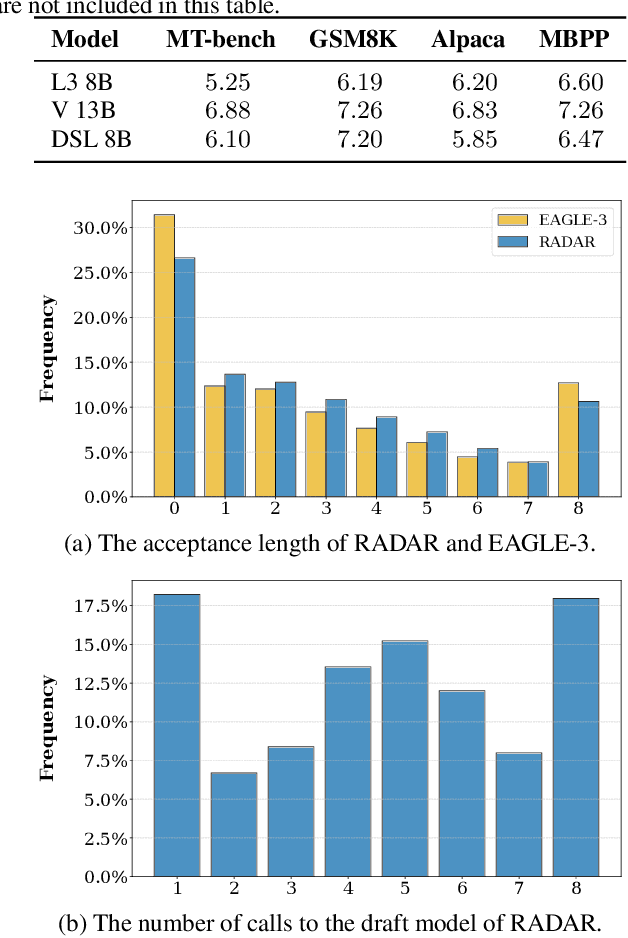

Abstract: Inference with modern Large Language Models (LLMs) is expensive and slow, and speculative sampling has emerged as an effective solution to this problem. However, the number of calls to the draft model for generating candidate tokens in speculative sampling is a preset hyperparameter, which lacks flexibility. To generate and utilize the candidate tokens more effectively, we propose RADAR, a novel speculative sampling method with RL-based dynamic draft trees. RADAR formulates the draft tree generation process as a Markov Decision Process (MDP) and employs offline reinforcement learning to train a prediction model, which enables real-time decisions on calls to the draft model, reducing redundant computation and further accelerating inference. Evaluations across three LLMs and four tasks show that RADAR achieves a speedup of 3.17x-4.82x over the auto-regressive decoding baseline. The code is available at https://github.com/minaduki-sora/RADAR.
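The idea of deciding at run time whether to call the draft model again can be illustrated with a small control-loop sketch. This is not RADAR's implementation: `draft_step`, `should_continue`, and `max_calls` are hypothetical names, and the learned prediction model is stood in for by an arbitrary callable.

```python
from typing import Callable, Tuple, TypeVar

S = TypeVar("S")  # opaque draft state, e.g. a partial draft tree


def draft_with_dynamic_depth(
    draft_step: Callable[[S], S],
    should_continue: Callable[[S, int], bool],
    state: S,
    max_calls: int,
) -> Tuple[S, int]:
    """Call the draft model until the policy says stop or the cap is hit.

    draft_step      -- hypothetical: one draft-model call that extends the state
    should_continue -- hypothetical stand-in for a learned stop/continue policy
    max_calls       -- hard upper bound on draft-model calls
    """
    calls = 0
    while calls < max_calls and should_continue(state, calls):
        state = draft_step(state)
        calls += 1
    return state, calls


if __name__ == "__main__":
    # Toy usage: the "draft model" appends a token id; the "policy" stops at 3 tokens.
    final_state, n_calls = draft_with_dynamic_depth(
        lambda s: s + [len(s)], lambda s, c: len(s) < 3, [], max_calls=10
    )
    print(final_state, n_calls)
```

With a fixed hyperparameter the loop would always run `max_calls` times; the policy callable is what lets it stop earlier.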

CLASH: Collaborative Large-Small Hierarchical Framework for Continuous Vision-and-Language Navigation

Dec 11, 2025

Abstract: Vision-and-Language Navigation (VLN) requires robots to follow natural language instructions and navigate complex environments without prior maps. While recent vision-language large models demonstrate strong reasoning abilities, they often underperform task-specific panoramic small models in VLN tasks. To address this, we propose CLASH (Collaborative Large-Small Hierarchy), a VLN-CE framework that integrates a reactive small-model planner (RSMP) with a reflective large-model reasoner (RLMR). RSMP adopts a causal-learning-based dual-branch architecture to enhance generalization, while RLMR leverages panoramic visual prompting with chain-of-thought reasoning to support interpretable spatial understanding and navigation. We further introduce an uncertainty-aware collaboration mechanism (UCM) that adaptively fuses decisions from both models. For obstacle avoidance in simulation, we replace the rule-based controller with a fully learnable point-goal policy; for real-world deployment, we design a LiDAR-based clustering module that generates navigable waypoints and pair it with an online SLAM-based local controller. CLASH achieves state-of-the-art (SoTA) results (ranking 1st) on the VLN-CE leaderboard, significantly improving SR and SPL on the test-unseen set over the previous SoTA methods. Real-world experiments demonstrate CLASH's strong robustness, validating its effectiveness in both simulation and deployment scenarios.

ILRe: Intermediate Layer Retrieval for Context Compression in Causal Language Models

Aug 25, 2025

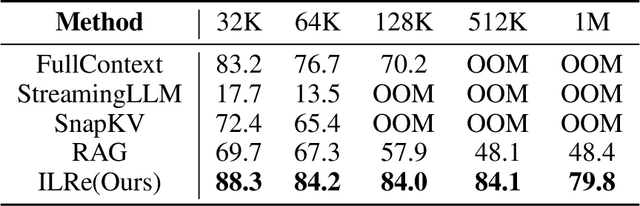

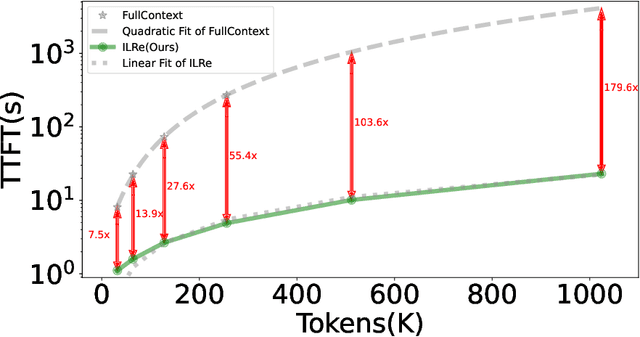

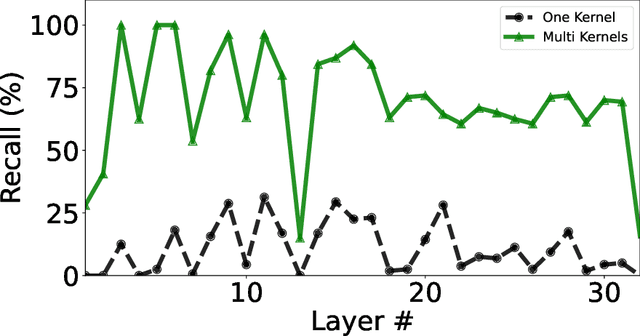

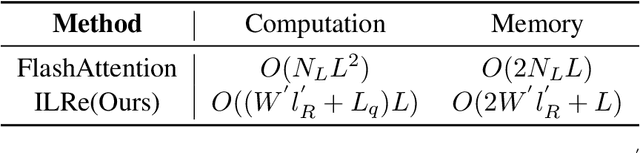

Abstract: Large Language Models (LLMs) have demonstrated success across many benchmarks. However, they still exhibit limitations in long-context scenarios, primarily due to their short effective context length, quadratic computational complexity, and high memory overhead when processing lengthy inputs. To mitigate these issues, we introduce a novel context compression pipeline, called Intermediate Layer Retrieval (ILRe), which determines one intermediate decoder layer offline, encodes context by streaming chunked prefill only up to that layer, and recalls tokens by the attention scores between the input query and the full key cache in that specified layer. In particular, we propose a multi-pooling kernel allocation strategy in the token recalling process to maintain the completeness of semantics. Our approach not only reduces the prefilling complexity from $O(L^2)$ to $O(L)$, but also achieves performance comparable to or better than the full context in long-context scenarios. Without additional post-training or operator development, ILRe can process a single $1M$-token request in less than half a minute (speedup $\approx 180\times$) and scores $\approx 79.8$ on the RULER-$1M$ benchmark with the model Llama-3.1-UltraLong-8B-1M-Instruct on a Huawei Ascend 910B NPU.

Orthogonal Projection Subspace to Aggregate Online Prior-knowledge for Continual Test-time Adaptation

Jun 23, 2025

Abstract: Continual Test Time Adaptation (CTTA) is a task that requires a source pre-trained model to continually adapt to new scenarios with changing target distributions. Existing CTTA methods primarily focus on mitigating the challenges of catastrophic forgetting and error accumulation. Though there have been emerging methods based on forgetting adaptation with parameter-efficient fine-tuning, they still struggle to balance competitive performance and efficient model adaptation, particularly in complex tasks like semantic segmentation. In this paper, to tackle the above issues, we propose a novel pipeline, Orthogonal Projection Subspace to aggregate online Prior-knowledge, dubbed OoPk. Specifically, we first project a tuning subspace orthogonally, which allows the model to adapt to new domains while preserving the knowledge integrity of the pre-trained source model to alleviate catastrophic forgetting. Then, we elaborate an online prior-knowledge aggregation strategy that employs an aggressive yet efficient image masking strategy to mimic potential target dynamism, enhancing the student model's domain adaptability. This in turn gradually improves the teacher model's knowledge, ensuring high-quality pseudo labels and reducing error accumulation. We demonstrate our method with extensive experiments that surpass previous CTTA methods and achieve competitive performance across various continual TTA benchmarks in semantic segmentation tasks.

Lag-Relative Sparse Attention In Long Context Training

Jun 13, 2025

Abstract: Large Language Models (LLMs) have made significant strides in natural language processing and generation, yet their ability to handle long-context input remains constrained by the quadratic complexity of attention computation and the linearly increasing key-value memory footprint. To reduce computational costs and memory, key-value cache compression techniques are commonly applied at inference time, but this often leads to severe performance degradation, as models are not trained to handle compressed context. Although there are more sophisticated compression methods, they are typically unsuitable for post-training because of their incompatibility with gradient-based optimization or their high computation overhead. To fill this gap with no additional parameters and little computation overhead, we propose Lag-Relative Sparse Attention (LRSA), anchored by the LagKV compression method, for long-context post-training. Our method performs chunk-by-chunk prefilling, which selects the top K most relevant key-value pairs in a fixed-size lagging window, allowing the model to focus on salient historical context while maintaining efficiency. Experimental results show that our approach significantly enhances the robustness of the LLM with key-value compression and achieves better fine-tuned results in the question-answer tuning task.
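The top-K selection step mentioned in this abstract can be sketched generically as follows. The abstract does not say how relevance is scored, so the sketch takes per-token `scores` as an input; the function name, shapes, and scoring are assumptions for illustration, not the LRSA or LagKV method itself.

```python
import numpy as np


def select_top_k(keys: np.ndarray, values: np.ndarray, scores: np.ndarray, k: int):
    """Keep the k highest-scoring key-value pairs of one window.

    keys, values -- arrays of shape (window_len, head_dim)
    scores       -- shape (window_len,); relevance per token (how it is
                    computed is NOT specified here, it is an assumed input)
    Returns the kept keys, kept values, and the kept token indices in
    their original order.
    """
    n = scores.shape[0]
    k = max(0, min(k, n))
    if k == 0:
        idx = np.empty(0, dtype=np.int64)
    else:
        # argpartition places the k largest scores in the last k slots;
        # sorting those indices restores the original token order.
        idx = np.sort(np.argpartition(scores, n - k)[n - k:])
    return keys[idx], values[idx], idx


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    k_cache = rng.normal(size=(4, 8))
    v_cache = rng.normal(size=(4, 8))
    kept_k, kept_v, kept_idx = select_top_k(
        k_cache, v_cache, np.array([0.1, 0.9, 0.3, 0.8]), k=2
    )
    print(kept_idx, kept_k.shape)
```

Returning the indices in token order keeps positional structure intact for whatever attention computation follows.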

LagKV: Lag-Relative Information of the KV Cache Tells Which Tokens Are Important

Apr 07, 2025

Abstract: The growing size of the Key-Value (KV) cache during long-context inference with Large Language Models is the main obstacle to balancing deployment cost and task accuracy. To reduce the KV cache size in such scenarios, most previous efforts leverage attention weights to evict non-critical cache tokens. However, these methods involve a trade-off: they usually require major modification of the inference infrastructure and incur significant computation overhead. Based on the fact that Large Language Models are autoregressive, we propose LagKV, a KV allocation strategy that relies only on straightforward comparisons among the KV entries themselves. It is a fully attention-free method that offers easy integration into mainstream inference platforms and performance comparable to other, more complicated KV compression methods. Results on LongBench and PasskeyRetrieval show that our approach achieves nearly zero loss when the compression ratio is $2\times$ and $\approx 90\%$ of the original model performance at $8\times$. In particular, in the 64-digit passkey retrieval task, our method outperforms the attention-weight-based method $H_2O$ by over $60\%$ at the same compression ratios. Our code is available at https://github.com/AI-Lab-China-Merchants-Bank/LagKV.

A Training-free LLM Framework with Interaction between Contextually Related Subtasks in Solving Complex Tasks

Mar 29, 2025

Abstract: Large language models (LLMs) have shown remarkable capabilities in solving complex tasks. Recent work has explored decomposing such tasks into subtasks with independent contexts. However, some contextually related subtasks may encounter information loss during execution, leading to redundant operations or execution failures. To address this issue, we propose a training-free framework with an interaction mechanism, which enables a subtask to query specific information or trigger certain actions in completed subtasks by sending requests. To implement interaction, we introduce a subtask trajectory memory that enables resumption of completed subtasks upon receiving interaction requests. Additionally, we propose a new action during execution, which generates a concise and precise description of the execution process and outcomes of a subtask, to assist subsequent subtasks in determining interaction targets and requests. We evaluate our framework on the interactive decision-making task WebShop and the multi-hop question answering task HotpotQA, with GPT-3.5 and GPT-4, and comparison results show that our framework outperforms the state-of-the-art training-free baselines.

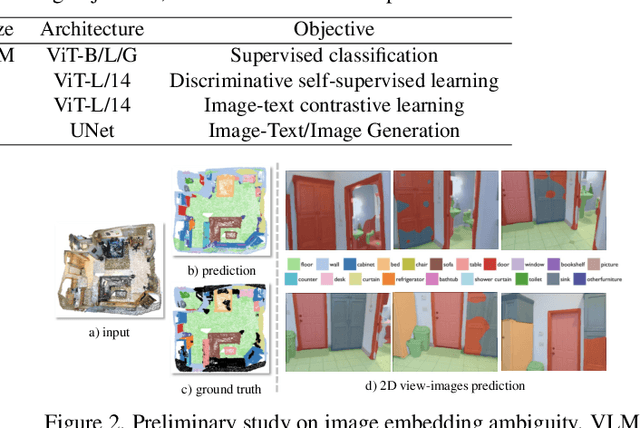

Cross-Modal and Uncertainty-Aware Agglomeration for Open-Vocabulary 3D Scene Understanding

Mar 20, 2025

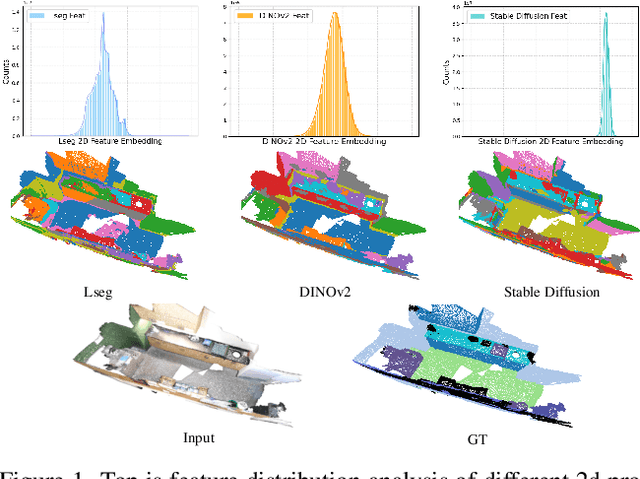

Abstract: The lack of a large-scale 3D-text corpus has led recent works to distill open-vocabulary knowledge from vision-language models (VLMs). However, these methods typically rely on a single VLM to align the feature spaces of 3D models within a common language space, which limits the potential of 3D models to leverage the diverse spatial and semantic capabilities encapsulated in various foundation models. In this paper, we propose Cross-modal and Uncertainty-aware Agglomeration for Open-vocabulary 3D Scene Understanding, dubbed CUA-O3D, the first model to integrate multiple foundation models (such as CLIP, DINOv2, and Stable Diffusion) into 3D scene understanding. We further introduce a deterministic uncertainty estimation to adaptively distill and harmonize the heterogeneous 2D feature embeddings from these models. Our method addresses two key challenges: (1) incorporating semantic priors from VLMs alongside the geometric knowledge of spatially-aware vision foundation models, and (2) using a novel deterministic uncertainty estimation to capture model-specific uncertainties across diverse semantic and geometric sensitivities, helping to reconcile heterogeneous representations during training. Extensive experiments on ScanNetV2 and Matterport3D demonstrate that our method not only advances open-vocabulary segmentation but also achieves robust cross-domain alignment and competitive spatial perception capabilities. The code will be available at https://github.com/TyroneLi/CUA_O3D.

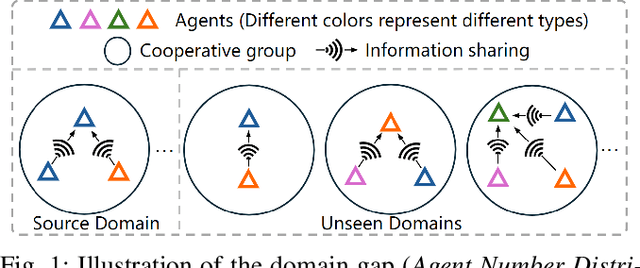

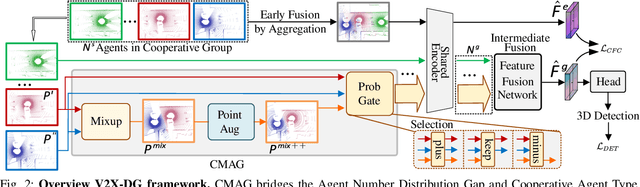

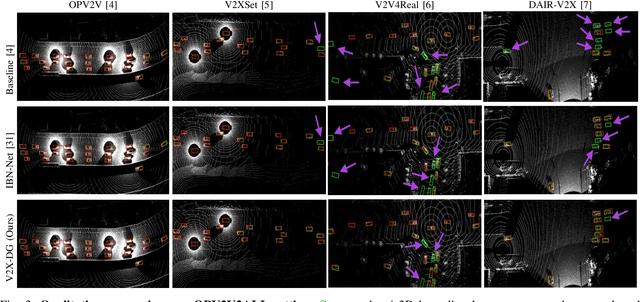

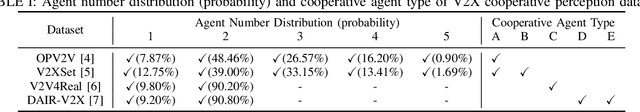

V2X-DG: Domain Generalization for Vehicle-to-Everything Cooperative Perception

Mar 19, 2025

Abstract: LiDAR-based Vehicle-to-Everything (V2X) cooperative perception has demonstrated its impact on the safety and effectiveness of autonomous driving. Since current cooperative perception algorithms are trained and tested on the same dataset, the generalization ability of cooperative perception systems remains underexplored. This paper is the first work to study the Domain Generalization problem of LiDAR-based V2X cooperative perception (V2X-DG) for 3D detection based on four widely used open-source datasets: OPV2V, V2XSet, V2V4Real and DAIR-V2X. Our research seeks to sustain high performance not only within the source domain but also across other unseen domains, achieved solely through training on the source domain. To this end, we propose Cooperative Mixup Augmentation based Generalization (CMAG) to improve the model generalization capability by simulating the unseen cooperation, which is designed compactly for the domain gaps in cooperative perception. Furthermore, we propose a constraint for the regularization of the robust generalized feature representation learning: Cooperation Feature Consistency (CFC), which aligns the intermediately fused features of the generalized cooperation by CMAG and the early fused features of the original cooperation in the source domain. Extensive experiments demonstrate that our approach achieves significant performance gains when generalizing to other unseen datasets while also maintaining strong performance on the source dataset.

Survey on Single-Image Reflection Removal using Deep Learning Techniques

Feb 12, 2025

Abstract: The phenomenon of reflection is quite common in digital images, posing significant challenges for various applications such as computer vision, photography, and image processing. Traditional methods for reflection removal often struggle to achieve clean results while maintaining high fidelity and robustness, particularly in real-world scenarios. Over the past few decades, numerous deep learning-based approaches for reflection removal have emerged, yielding impressive results. In this survey, we conduct a comprehensive review of the current literature by focusing on key venues such as ICCV, ECCV, CVPR, NeurIPS, etc., as these conferences and journals have been central to advances in the field. Our review follows a structured paper selection process, and we critically assess both single-stage and two-stage deep learning methods for reflection removal. The contribution of this survey is three-fold: first, we provide a comprehensive summary of the most recent work on single-image reflection removal; second, we outline task hypotheses, current deep learning techniques, publicly available datasets, and relevant evaluation metrics; and third, we identify key challenges and opportunities in deep learning-based reflection removal, highlighting the potential of this rapidly evolving research area.
