Hsiang-Wei Huang

Modeling LLM Agent Reviewer Dynamics in Elo-Ranked Review System

Jan 13, 2026

Abstract:In this work, we explore Large Language Model (LLM) agent reviewer dynamics in an Elo-ranked review system using real-world conference paper submissions. Multiple LLM agent reviewers with different personas engage in multi-round review interactions moderated by an Area Chair. We compare a baseline setting with conditions that incorporate Elo ratings and reviewer memory. Our simulation results show several interesting findings, including that incorporating Elo improves Area Chair decision accuracy, and that reviewers adopt an adaptive review strategy that exploits our Elo system without improving review effort. Our code is available at https://github.com/hsiangwei0903/EloReview.
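For readers unfamiliar with Elo ratings, the following is a minimal sketch of the textbook pairwise Elo update. It is not taken from the paper or its repository: the function names, the K-factor of 32, and the 400-point scale are the conventional defaults and are assumptions here, and the abstract does not say how reviewer outcomes are scored.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A outperforms B under the standard Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update_elo(rating_a: float, rating_b: float, score_a: float, k: float = 32.0):
    """Return updated (rating_a, rating_b) after one comparison.

    score_a is 1.0 if A "wins", 0.0 if A "loses", and 0.5 for a tie.
    k (the K-factor) is an assumed default, not a value from the paper.
    """
    exp_a = expected_score(rating_a, rating_b)
    exp_b = 1.0 - exp_a
    new_a = rating_a + k * (score_a - exp_a)
    new_b = rating_b + k * ((1.0 - score_a) - exp_b)
    return new_a, new_b


# Hypothetical example: two reviewers start at 1500 and reviewer A is judged better.
new_a, new_b = update_elo(1500.0, 1500.0, score_a=1.0)
```

Because the two adjustments are equal and opposite, the total rating in the pool is conserved, which is one reason a rating-seeking agent can gain rank only at the expense of others.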

Reasoning Matters for 3D Visual Grounding

Jan 13, 2026

Abstract:The recent development of Large Language Models (LLMs) with strong reasoning ability has driven research in various domains such as mathematics, coding, and scientific discovery. Meanwhile, 3D visual grounding, a fundamental task in 3D understanding, remains challenging due to the limited reasoning ability of recent 3D visual grounding models. Most current methods incorporate a text encoder and a visual feature encoder to generate fused cross-modal features and predict the referred object. These models often require supervised training on extensive 3D annotation data. Recent research also focuses on scaling synthetic data to train stronger 3D visual grounding LLMs; however, the performance gain remains limited and is not proportional to the data collection cost. In this work, we propose a 3D visual grounding data pipeline that automatically synthesizes 3D visual grounding data along with the corresponding reasoning process. Additionally, we leverage the generated data for LLM fine-tuning and introduce Reason3DVG-8B, a strong 3D visual grounding LLM that outperforms the previous LLM-based method 3D-GRAND using only 1.6% of its training data, demonstrating the effectiveness of our data and the importance of reasoning in 3D visual grounding.

Adapting SAM 2 for Visual Object Tracking: 1st Place Solution for MMVPR Challenge Multi-Modal Tracking

May 23, 2025

Abstract:We present an effective approach for adapting the Segment Anything Model 2 (SAM2) to the Visual Object Tracking (VOT) task. Our method leverages the powerful pre-trained capabilities of SAM2 and incorporates several key techniques to enhance its performance in VOT applications. By combining SAM2 with our proposed optimizations, we achieved a first place AUC score of 89.4 on the 2024 ICPR Multi-modal Object Tracking challenge, demonstrating the effectiveness of our approach. This paper details our methodology, the specific enhancements made to SAM2, and a comprehensive analysis of our results in the context of VOT solutions along with the multi-modality aspect of the dataset.

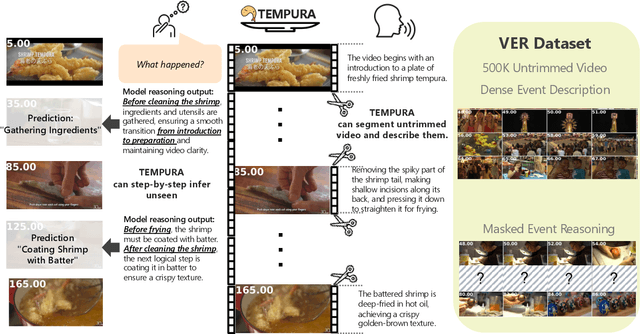

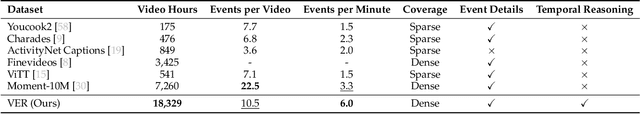

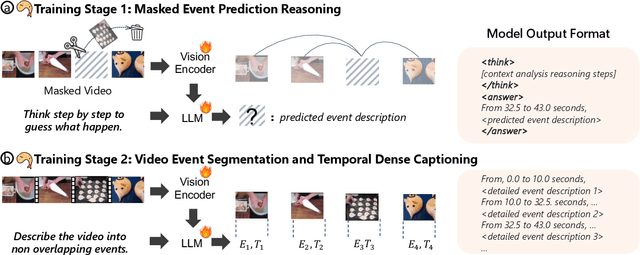

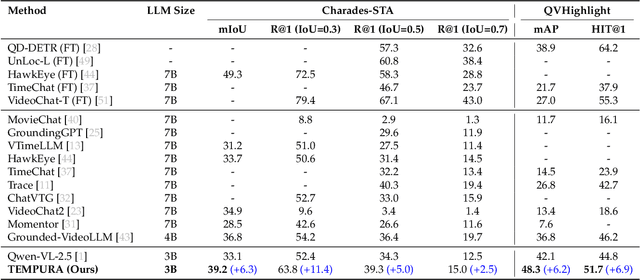

TEMPURA: Temporal Event Masked Prediction and Understanding for Reasoning in Action

May 02, 2025

Abstract:Understanding causal event relationships and achieving fine-grained temporal grounding in videos remain challenging for vision-language models. Existing methods either compress video tokens to reduce temporal resolution, or treat videos as unsegmented streams, which obscures fine-grained event boundaries and limits the modeling of causal dependencies. We propose TEMPURA (Temporal Event Masked Prediction and Understanding for Reasoning in Action), a two-stage training framework that enhances video temporal understanding. TEMPURA first applies masked event prediction reasoning to reconstruct missing events and generate step-by-step causal explanations from dense event annotations, drawing inspiration from effective infilling techniques. TEMPURA then learns to perform video segmentation and dense captioning to decompose videos into non-overlapping events with detailed, timestamp-aligned descriptions. We train TEMPURA on VER, a large-scale dataset curated by us that comprises 1M training instances and 500K videos with temporally aligned event descriptions and structured reasoning steps. Experiments on temporal grounding and highlight detection benchmarks demonstrate that TEMPURA outperforms strong baseline models, confirming that integrating causal reasoning with fine-grained temporal segmentation leads to improved video understanding.

PackDiT: Joint Human Motion and Text Generation via Mutual Prompting

Jan 27, 2025

Abstract:Human motion generation has advanced markedly with the advent of diffusion models. Most recent studies have concentrated on generating motion sequences based on text prompts, commonly referred to as text-to-motion generation. However, the bidirectional generation of motion and text, enabling tasks such as motion-to-text alongside text-to-motion, has been largely unexplored. This capability is essential for aligning diverse modalities and supports unconditional generation. In this paper, we introduce PackDiT, the first diffusion-based generative model capable of performing various tasks simultaneously, including motion generation, motion prediction, text generation, text-to-motion, motion-to-text, and joint motion-text generation. Our core innovation leverages mutual blocks to integrate multiple diffusion transformers (DiTs) across different modalities seamlessly. We train PackDiT on the HumanML3D dataset, achieving state-of-the-art text-to-motion performance with an FID score of 0.106, along with superior results in motion prediction and in-between tasks. Our experiments further demonstrate that diffusion models are effective for motion-to-text generation, achieving performance comparable to that of autoregressive models.

SAMURAI: Adapting Segment Anything Model for Zero-Shot Visual Tracking with Motion-Aware Memory

Nov 18, 2024

Abstract:The Segment Anything Model 2 (SAM 2) has demonstrated strong performance in object segmentation tasks but faces challenges in visual object tracking, particularly when managing crowded scenes with fast-moving or self-occluding objects. Furthermore, the fixed-window memory approach in the original model does not consider the quality of memories selected to condition the image features for the next frame, leading to error propagation in videos. This paper introduces SAMURAI, an enhanced adaptation of SAM 2 specifically designed for visual object tracking. By incorporating temporal motion cues with the proposed motion-aware memory selection mechanism, SAMURAI effectively predicts object motion and refines mask selection, achieving robust, accurate tracking without the need for retraining or fine-tuning. SAMURAI operates in real-time and demonstrates strong zero-shot performance across diverse benchmark datasets, showcasing its ability to generalize without fine-tuning. In evaluations, SAMURAI achieves significant improvements in success rate and precision over existing trackers, with a 7.1% AUC gain on LaSOT$_{\text{ext}}$ and a 3.5% AO gain on GOT-10k. Moreover, it achieves competitive results compared to fully supervised methods on LaSOT, underscoring its robustness in complex tracking scenarios and its potential for real-world applications in dynamic environments. Code and results are available at https://github.com/yangchris11/samurai.

GTA: Global Tracklet Association for Multi-Object Tracking in Sports

Nov 12, 2024

Abstract:Multi-object tracking in sports scenarios has become one of the focal points in computer vision, experiencing significant advancements through the integration of deep learning techniques. Despite these breakthroughs, challenges remain, such as accurately re-identifying players upon re-entry into the scene and minimizing ID switches. In this paper, we propose an appearance-based global tracklet association algorithm designed to enhance tracking performance by splitting tracklets that contain multiple identities and connecting tracklets that appear to belong to the same identity. This method can serve as a plug-and-play refinement tool for any multi-object tracker to further boost its performance. The proposed method achieved new state-of-the-art performance on the SportsMOT dataset with a HOTA score of 81.04%. Similarly, on the SoccerNet dataset, our method enhanced multiple trackers' performance, consistently increasing the HOTA score from 79.41% to 83.11%. These significant and consistent improvements across different trackers and datasets underscore our proposed method's potential impact on the application of sports player tracking. We open-source our project codebase at https://github.com/sjc042/gta-link.git.

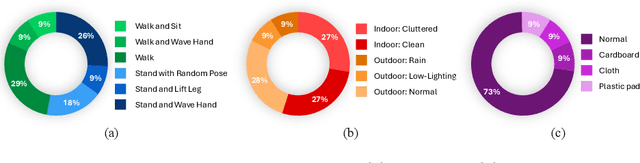

ToddlerAct: A Toddler Action Recognition Dataset for Gross Motor Development Assessment

Aug 31, 2024

Abstract:Assessing gross motor development in toddlers is crucial for understanding their physical development and identifying potential developmental delays or disorders. However, existing datasets for action recognition primarily focus on adults, lacking the diversity and specificity required for accurate assessment in toddlers. In this paper, we present ToddlerAct, a toddler gross motor action recognition dataset, aiming to facilitate research in early childhood development. The dataset consists of video recordings capturing a variety of gross motor activities commonly observed in toddlers under three years old. We describe the data collection process, annotation methodology, and dataset characteristics. Furthermore, we benchmark multiple state-of-the-art methods, including image-based and skeleton-based action recognition methods, on our dataset. Our findings highlight the importance of domain-specific datasets for accurate assessment of gross motor development in toddlers and lay the foundation for future research in this critical area. Our dataset will be available at https://github.com/ipl-uw/ToddlerAct.

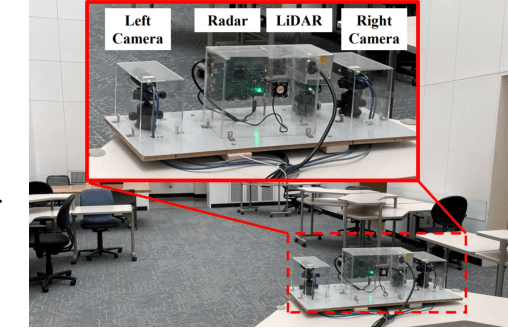

RT-Pose: A 4D Radar Tensor-based 3D Human Pose Estimation and Localization Benchmark

Jul 18, 2024

Abstract:Traditional methods for human localization and pose estimation (HPE), which mainly rely on RGB images as an input modality, confront substantial limitations in real-world applications due to privacy concerns. In contrast, radar-based HPE methods emerge as a promising alternative, offering distinctive attributes such as through-wall recognition and privacy preservation that make them better suited to practical deployment. This paper presents a Radar Tensor-based human pose (RT-Pose) dataset and an open-source benchmarking framework. The RT-Pose dataset comprises 4D radar tensors, LiDAR point clouds, and RGB images, totaling 72k frames across 240 sequences with actions at six different complexity levels. The 4D radar tensor provides raw spatio-temporal information, differentiating it from other radar point cloud-based datasets. We develop an annotation process using RGB images and LiDAR point clouds to accurately label 3D human skeletons. In addition, we propose HRRadarPose, the first single-stage architecture that extracts the high-resolution representation of 4D radar tensors in 3D space to aid human keypoint estimation. HRRadarPose outperforms previous radar-based HPE work on the RT-Pose benchmark. The overall HRRadarPose performance on the RT-Pose dataset, as reflected in a mean per joint position error (MPJPE) of 9.91cm, indicates the persistent challenges in achieving accurate HPE in complex real-world scenarios. RT-Pose is available at https://huggingface.co/datasets/uwipl/RT-Pose.
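As a reference for the MPJPE metric quoted above, here is a minimal sketch of how mean per joint position error is commonly computed for a single skeleton: the average Euclidean distance between predicted and ground-truth 3D joints. The function name and the sample coordinates are illustrative assumptions; the benchmark's own evaluation code may differ, for example in root alignment or in how frames are averaged.

```python
import math
from typing import Sequence, Tuple

Joint = Tuple[float, float, float]


def mpjpe(pred: Sequence[Joint], gt: Sequence[Joint]) -> float:
    """Mean Euclidean distance between matching predicted and ground-truth joints.

    The result is in the same unit as the input coordinates (e.g. cm).
    """
    if len(pred) != len(gt) or len(pred) == 0:
        raise ValueError("pred and gt must be non-empty and the same length")
    total = sum(math.dist(p, g) for p, g in zip(pred, gt))
    return total / len(pred)


# Hypothetical two-joint skeleton with per-joint errors of 3 and 4 units.
error = mpjpe([(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)],
              [(0.0, 0.0, 3.0), (1.0, 4.0, 0.0)])
```

A dataset-level score is typically obtained by averaging this value over all evaluated frames.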

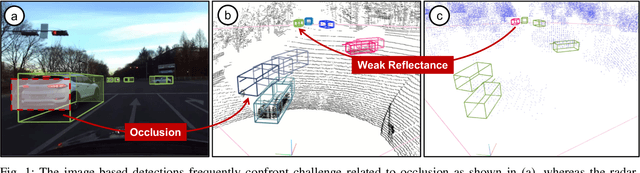

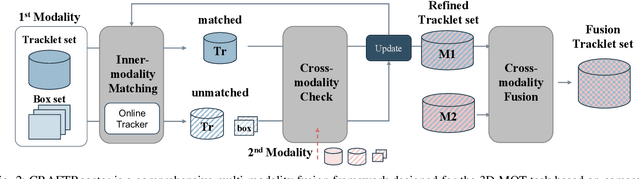

Boosting Online 3D Multi-Object Tracking through Camera-Radar Cross Check

Jul 18, 2024

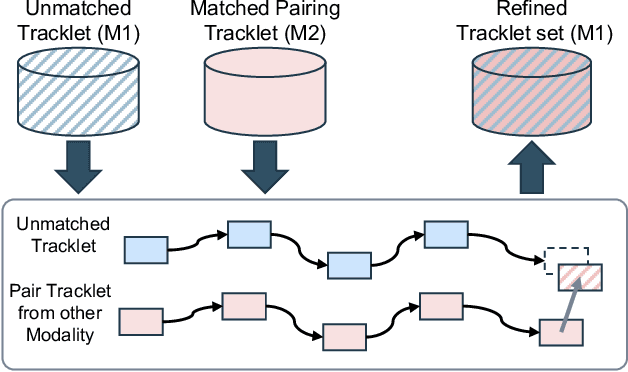

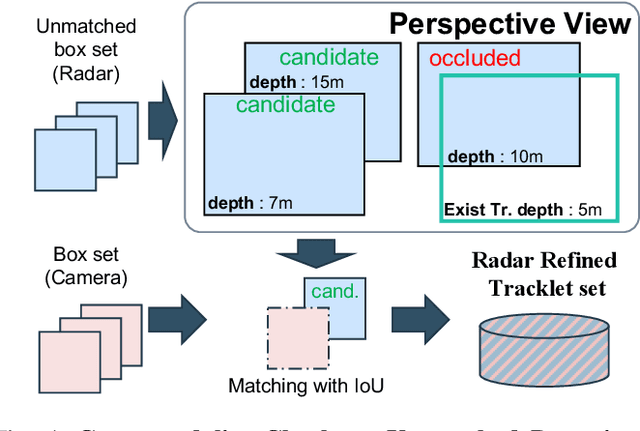

Abstract:In the domain of autonomous driving, the integration of multi-modal perception techniques based on data from diverse sensors has demonstrated substantial progress. Effectively surpassing the capabilities of state-of-the-art single-modality detectors through sensor fusion remains an active challenge. This work leverages the respective advantages of cameras in perspective view and radars in Bird's Eye View (BEV) to greatly enhance overall detection and tracking performance. Our approach, Camera-Radar Associated Fusion Tracking Booster (CRAFTBooster), represents a pioneering effort to enhance radar-camera fusion in the tracking stage, contributing to improved 3D MOT accuracy. The superior experimental results on the K-Radar dataset, which show a 5-6% gain in IDF1 tracking performance, validate the potential of effective sensor fusion in advancing autonomous driving.
